The ProteinCartography pipeline searches sequence and structure databases for matches to input proteins and builds maps of protein space for the purposes of discovery and exploration.

You can find a general overview of the pipeline in the Pub

.

Comparing protein structures across organisms can help us generate interesting biological hypotheses. This pipeline allows users to build interactive maps of structurally similar proteins useful for discovery and exploration.

Our pipeline starts with user-provided protein(s) of interest and searches the available sequence and structure databases for matches. Using the full list of matches, we can build a "map" of all the similar proteins and look for clusters of proteins with similar features. Overlaying a variety of different parameters such as taxonomy, sequence divergence, and other features onto these spaces allows us to explore the features that drive differences between clusters.

- Clone the GitHub repository.

git clone https://github.com/Arcadia-Science/ProteinCartography.git - Install

condaand/ormambaif you don't already have them installed. - Create a conda environment containing the software needed to run the pipeline.

Run this code from within the ProteinCartography repo.conda env create -f envs/cartography_tidy.yml -n cartography_tidy conda activate cartography_tidy - Run the Snakemake pipeline using a demo protein (human ACTB, P60709).

Set

nto be the number of cores you'd like to use for running the pipeline.snakemake --snakefile Snakefile --configfile demo/config_actin.yml --use-conda --cores n - Inspect results.

In the

demo/output/clusteringresults/directory, you should find the following files:actin_aggregated_features.tsv: metadata file containing protein feature hitsactin_aggregated_features_pca_umap.html: interactive UMAP scatter plot of resultsactin_aggregated_features_pca_tsne.html: interactive t-SNE scatter plot of resultsactin_leiden_similarity.html: mean cluster TM-score similarity heatmapactin_semantic_analysis.htmlandactin_semantic_analysis.pdf: simple semantic analysis of clusters

We have been able to successfully run the pipeline on macOS and Amazon Linux 2 machines with at least 8GB RAM and 8 cores.

For the data generated for our pub, we ran the pipeline on an AWS EC2 instance of type t2.2xlarge (32 GiB RAM + 8 vCPU).

The pipeline supports two modes: Search and Cluster.

In this default mode, the pipeline starts with a set of input proteins of interest in PDB and FASTA format and performs broad BLAST and Foldseek searches to identify hits. The pipeline aggregates all hits, downloads PDBs, and builds a map. The pipeline is implemented in Snakefile.

- Query protein files in FASTA and PDB format.

- Each protein should have a unique protein identifier (

protid). - The

protidshould be the prefix of the FASTA and PDB files (e.g.P60709.fasta,P60709.pdb). - Proteins provided as FASTAs <400aa in length will be folde automatically by the pipeline using the ESMFold API.

- For proteins >400aa in length, we recommend using ColabFold.

- Each protein should have a unique protein identifier (

- Custom

config.ymlfile.config.ymlcontains the default parameters of the pipeline, which are used if a custom file is not provided.- We recommend making a copy of this file and customizing the file to fit your search parameters.

- Passing the path of the custom config file through the

--configfileflag to Snakemake will overwrite the defaults inconfig.yml. - Key Parameters:

input: directory containing input PDBs and FASTAs.output: directory where all pipeline outputs are placed.analysis_name: nickname for the analysis, appended to important output files.- See

config.ymlfor additional parameters.

- (Optional)

features_override.tsvfile.- This file can be used to pass protein metadata for custom proteins not found in UniProt.

- These proteins will not automatically have metadata retrieved, so providing this information is useful for visualizing custom input proteins.

- The columns of this file are described in Feature file main columns.

- It can also be used to overwrite metadata values for specific proteins.

- Follow steps 1-3 in the Quickstart section above to set up and activate the conda environment.

- Set up a

config.ymlfile specifying input and output directories and an analysis name. - Add query protein files in FASTA and PDB format to the input folder.

- You can pull down proteins with a UniProt accession number and AlphaFold structure using the following command.

Replace {accession} with your UniProt accession (e.g. P24928).

python ProteinCartography/fetch_accession.py -a {accession} -o input -f fasta pdbThis saves a FASTA file from UniProt and a PDB file from AlphaFold to theinput/folder. - For non-UniProt proteins, users can provide a FASTA file (one entry per file).

- If the protein is <400aa, the pipeline can generate a custom PDB file using the ESMFold API.

- If the protein is >400aa, you should provide a PDB file with a matching prefix, which you can generate using ColabFold or other software.

- You can pull down proteins with a UniProt accession number and AlphaFold structure using the following command.

Replace {accession} with your UniProt accession (e.g. P24928).

- Run this command, replacing the

config.ymlwith your config file and8with the number of cores you want to allocate to Snakemake.

snakemake --snakefile Snakefile --configfile config.yml --use-conda --cores 8

In this mode, the pipeline starts with a folder containing PDBs of interest and performs just the clustering and visualization steps of the pipeline, without performing any searches or downloads. The pipeline is implemented in Snakefile_ff.

- Input protein structures in PDB format.

- Each protein should have a unique protein identifier (

protid) which matches the PDB file prefix, as described for Search mode. - The pipeline does not yet support proteins with multiple chains.

- Each protein should have a unique protein identifier (

config_ff.ymlfile with custom settings.config_ff.ymlis an example file that contains the defaults of the pipeline.- We recommend making a copy of this file and adjusting the settings.

- A custom config file passed through the

--configfileflag to Snakemake will overwrite the defaults. - Key Parameters:

input: directory containing input PDBs and FASTAs.output: directory where all pipeline outputs are placed.analysis_name: nickname for the analysis, appended to important output files.features_file: path to features file (described below).- (Optional)

keyids: keyprotidvalues for proteins to highlight similar to input proteins in the Search mode. - See

config_ff.ymlfor additional parameters.

- Features file with protein metadata.

- Usually, we call this file

uniprot_features.tsvbut you can use any name. - The file should be placed into the

inputdirectory. - This file contains protein metadata for each protein used for visualization purposes.

- The columns of this file are described in Feature file main columns.

- Usually, we call this file

- Follow steps 1-3 in the Quickstart section above to set up and activate the conda environment.

- Set up a

config_ff.ymlfile specifying input and output directories and an analysis name. - Add input protein structures in PDB format to your input folder.

- The pipeline does not yet support PDBs with multiple chains.

- Make a

uniprot_features.tsvfile. You can generate this one of two ways. If you have a list of UniProt accessions, you can provide that as a .txt file and automatically pull down the correct annotations. Alternatively, you can manually generate the file.

3.1 Generating the file using query_UniProt.py- Create a

uniprot_ids.txtfile that contains a list of UniProt accessions, one per line. - Run the following code from the base of the GitHub repository. Modify the input folder path to your input folder.

python ProteinCartography/query_uniprot.py -i uniprot_ids.txt -o input_ff/uniprot_features.tsv

3.2 Manually generating the file. - For each protid in the dataset, you should gather relevant protein metadata.

- The first column of the file should be the unique protein identifer, with

protidas the column name. - You should have additional columns for relevant metadata as described in Feature file main columns.

- Default columns that are missing will be ignored.

- Create a

- Run this command, replacing the

config_ff.ymlwith your config file and8with the number of cores you want to allocate to Snakemake.

snakemake --snakefile Snakefile_ff --configfile config_ff.yml --use-conda --cores 8

- Snakefile: the Snakemake pipeline that orchestrates this repo's functions.

- config.yml: default config file for the pipeline.

- envs/: the conda environments used for this repo.

- demo/: contains

config_actin.ymlas well as a FASTA and PDB for human actin, used for the demo. - ProteinCartography/: scripts that are called by Snakemake, importable in Python.

- pub/: files related to the ProteinCartography pub.

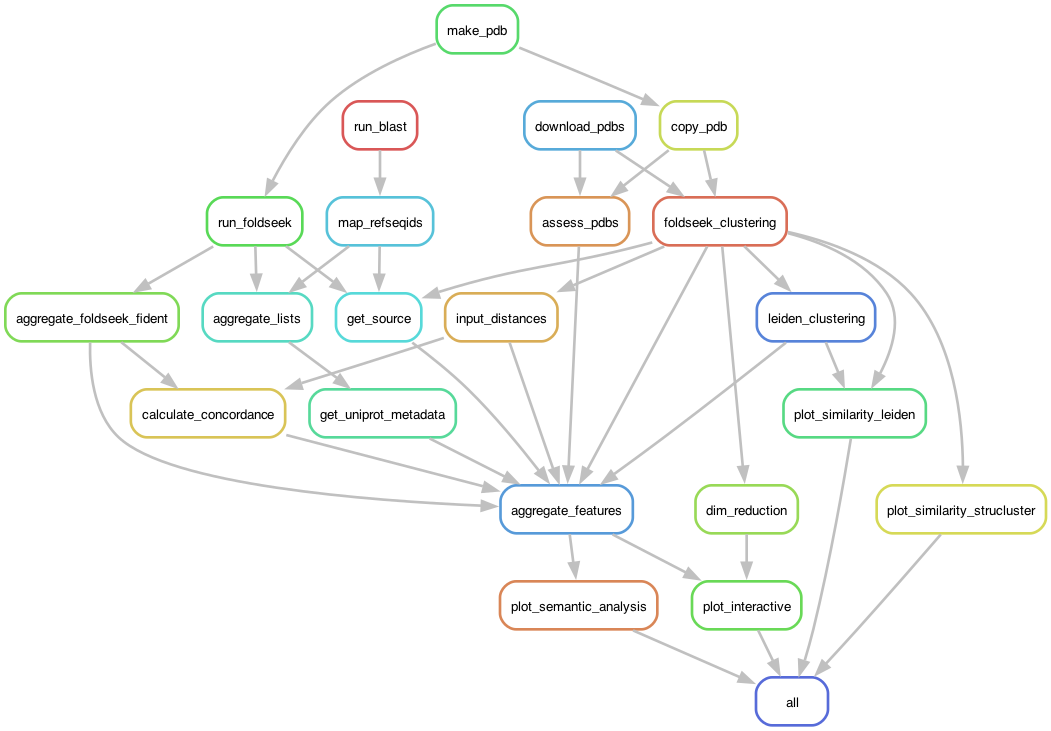

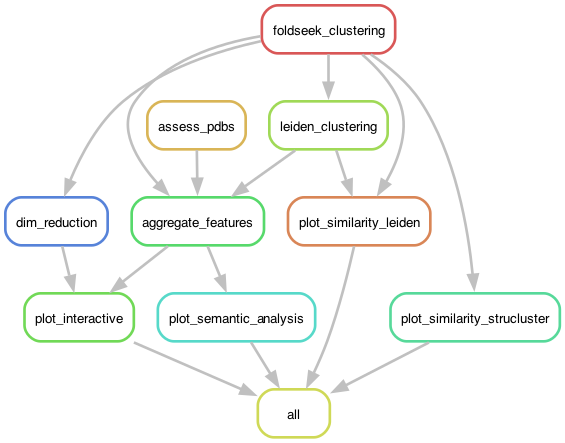

The Search mode of the pipeline performs all of the following steps.

The Cluster mode starts at the Clustering step.

- Fold input FASTA files using ESMFold.

- The pipeline starts with input FASTA and/or PDB files, one entry per file, with a unique protein identifier (

protid). - If a matching PDB file is not provided, the pipeline will use the ESMFold API to generate a PDB file for FASTA sequences shorter than 400aa.

- The pipeline starts with input FASTA and/or PDB files, one entry per file, with a unique protein identifier (

-

Search the AlphaFold databases using queries to the Foldseek webserver API for each provided

.pdbfile.- The pipeline searches

afdb50,afdb-proteomeandafdb-swissprotfor each input protein and aggregate the results. You can customize to add or remove databases using the config file. - Currently, the pipeline is limited to retrieving a maximum of 1000 hits per protein, per database.

- The pipeline searches

-

Search the non-redundant GenBank/RefSeq database using blastp for each provided

.fastafile.- Takes the resulting output hits and maps each GenBank/RefSeq hit to a UniProt ID using

requestsand the UniProt REST API. - TODO: This can fail for large proteins (>700aa) due to remote BLAST CPU limits. To overcome this error, you can manually run BLAST locally or via the webserver and create an accession list file with the name format

{protid}.blasthits.refseq.txtin theoutput/blastresults/directory.

- Takes the resulting output hits and maps each GenBank/RefSeq hit to a UniProt ID using

-

Aggregate the list of Foldseek and BLAST hits from all input files into a single list of UniProt IDs.

-

Download annotation and feature information for each hit protein from UniProt.

-

Filter proteins based on key UniProt metadata. The pipeline removes:

- Proteins marked as fragments by UniProt.

- Deleted or outdated proteins.

- Proteins larger than or smaller than user-specified maximum and minimum size parameters, specified in the

config.ymlfile.

-

Download a

.pdbfile from each remaining protein from AlphaFold.- This part is a bit slow, as it's currently limited to the number of cores provided to Snakemake.

- Sometimes this will fail, possibly due to rate limits. Restarting Snakemake with the

--rerun-incompleteflag usually resolves this.

-

Generate a similarity matrix and cluster all protein .pdb files using Foldseek.

- By default,

foldseek searchmasks results with an e-value > 0.001. We set these masked values to 0. - By default,

foldseek searchreturns at most 1000 hits per protein. For spaces where there are >1000 proteins, this results in missing comparisons. We also set missing values to 0. - When all hit proteins are highly similar, these settings can result in artefactually steep cutoffs in TM-score in the visualization. A TM-score of 0 in the scatter plot does not mean that there is no structural similarity - it instead is likely that the protein's structural similarity was not within the top 1000 hits. In the future, we plan to perform this filtering via e-value, and to re-calculate the TM-score for every input protein versus every hit to more accurately display in the visualization.

- By default,

-

Perform dimensionality reduction and clustering on the similarity matrix.

- By default, we perform 30-component PCA prior to running both TSNE and UMAP for visualization.

- For clustering, we use the defaults of

scanpy's Leiden clustering implementation.

-

Generate a variety of

_features.tsvfiles.- Each file has, as its first column, a list of protein ids (protid) that are shared between files.

- We query UniProt to get all metadata from that service as a

uniprot_features.tsvfile. - Foldseek generates a

struclusters_features.tsvfile. - We perform Leiden clustering to generate a

leiden_features.tsvfile. - We extract from Foldseek's all-v-all TM-score analysis a distance from every protid to our input protids as

<input_protid>_distance_features.tsvfiles. Not available in Cluster mode: - We extract from Foldseek search a fraction sequence identity for every protid in our input protids as

<input_protid>_fident_features.tsvfiles. - We subtract the fraction sequence identity from the TM-score to generate a

<input_protid>_convergence_features.tsvfile. - We determine the source of each file in the analysis (whether it was found from blast or Foldseek) as the

source_features.tsvfile.

-

Aggregate features.

- All of the features.tsv files are combined into one large

aggregated_features.tsvfile.

- All of the features.tsv files are combined into one large

-

Calculate per-cluster structural similarities.

- For every Leiden or Foldseek structural cluster of proteins, get the mean structural similarity within that cluster and between that cluster and every other cluster.

- Plot it as a heatmap with suffix

_leiden_similarity.htmlor_structural_similarity.html.

-

Perform simple semantic analysis on UniProt annotations.

- For the annotations in each cluster, aggregate them and count the frequency of every full annotation string.

- Perform a word count analysis on all of the annotations and generate a word cloud.

- Save as a PDF file with suffix

_semantic_analysis.pdf.

-

Build an explorable HTML visualization using

Plotlybased on the aggregated features.-

An example can be found here

-

Each point has hover-over information.

-

Default parameters include:

- Leiden Cluster: Protein cluster as called by scanpy's implementation of Leiden clustering.

- Annotation Score: UniProt annotation score from 0 to 5 (5 being the best evidence).

- Broad Taxon: the broad taxonomic category for the source organism. There are two modes: 'euk' and 'bac'.

- Assigns the "smallest" taxonomic scope from the rankings below for each point. So, a mouse gene would get

Mammaliabut notVertebrata. - For 'euk', uses the taxonomic groups

Mammalia, Vertebrata, Arthropoda, Ecdysozoa, Lophotrochozoa, Metazoa, Fungi, Viridiplantae, Sar, Excavata, Amoebazoa, Eukaryota, Bacteria, Archaea, Viruses - For 'bac', uses the taxonomic groups

Pseudomonadota, Nitrospirae, Acidobacteria, Bacillota, Spirochaetes, Cyanobacteria, Actinomycetota, Deinococcota, Bacteria, Archaea, Viruses, Metazoa, Fungi, Viridiplantae, Eukaryota

- Assigns the "smallest" taxonomic scope from the rankings below for each point. So, a mouse gene would get

- Length: length of the protein in amino acids.

- Source: how the protein was added to the clustering space (

blast,foldseekorblast+foldseek).

-

Power users can customize the plots using a variety of rules, described below.

-

The plot_interactive() function has two required arguments:

- a path to the aggregated features file with coordinates that you'd like to plot

- a

plotting_rulesdictionary describing how the data should be plotted

The plotting_rules dictionary should have the following format.

Each column is an entry in the dictionary containing a dictionary of rules.

{

'column1.name': {

'type': 'categorical',

'parameter1': value,

'parameter2': value,

...

}

'column2.name': {

'type': 'hovertext',

...

}

}

The possible rules for each column are as follows:

- 'type'(required):

'categorical','continuous','taxonomic', or'hovertext'- Determines the plotting style of the data.

- If 'categorical', expects the data to be in string format.

- If 'continuous', expects the data to be in numerical (int or float) format.

- If 'taxonomic', expects an ordered list of taxa to be used for grouping.

- If 'hovertext', won't plot the data as a dropdown menu option, but will include the data in the hover-over text box.

- 'fillna':

- A value to fill rows that are

np.nan. - For categorical plots, usually empty string

''. - For continuous plots, usually

0.

- A value to fill rows that are

- 'apply':

- A function that will be applied to every element of the feature before plotting.

- This can be used to convert a numerical value into a categorical value with a lambda function.

- For example,

lambda x: str(x)

- 'skip_hover':

- Boolean, whether to include this data in the hovertext.

- 'textlabel':

- A string that replaces the column name on buttons and in hover text.

- Useful if the column name is too ugly.

- 'color_scale':

- A named Plotly colorscale, or:

- A list of lists that can be converted into a Plotly colorscale (don't use tuples).

- 'cmin':

- Minimum value to use for color scale. Defaults to minimum value for that column.

- 'cmax':

- Maximum value to use for color scale. Defaults to maximum value for that column.

- Note: if the value of 'fillna' is lower than the cmin, na values will have their own light grey coloration, distinct from the rest of the color scale. Good for indicating values that are missing, rather than actually calculated.

- 'color_order':

- A list of HEX code colors used for coloring categorical variables.

- If there aren't enough colors for unique values of the data, will generate up to 3x more colors of varying darkness.

- 'color_dict':

- A dictionary of key: value pairs for coloring categorical variables.

- The key is the name of the category and the value is a HEX code color.

- 'taxon_order':

- Exclusively used for 'taxonomic' style plots. A list of ranked-order taxa for categorization.

- Should start with more-specific taxa and expand to less-specific taxa.

The pipeline generates a large number of .txt and .tsv files with specific formatting expectations.

Many of the pipeline's scripts accept these specific format conventions as input or return them as output.

These are the primary formats and their descriptions.

These files end with '.txt' and contain a list of accessions (RefSeq, GenBank, UniProt), one per line.

- Example:

A0A2J8L4A7 K7EV54 A0A2J8WJR8 A0A811ZNA7 ... - Input to:

- Output from:

These files end with '.tsv' and contain distance or similarity matrices, usually all-v-all.

- Example::

protid A0A2J8L4A7 K7EV54 A0A2J8WJR8 A0A811ZNA7 A0A2J8L4A7 1 0.9 0.85 0.7 K7EV54 0.9 1 0.91 0.6 A0A2J8WJR8 0.85 0.91 1 0.71 A0A811ZNA7 0.7 0.6 0.71 1 - Input to:

- Output from:

These files end with '.tsv' and contain a protid column, which is the unique identifier of each protein in the dataset.

The remaining columns are metadata for each protein. These metadata can be of any data type.

- Example:

protid Length LeidenCluster Organism A0A2J8L4A7 707 1 Pan troglodytes (Chimpanzee) K7EV54 784 2 Pongo abelii (Sumatran orangutan) A0A2J8WJR8 707 1 Pongo abelii (Sumatran orangutan) A0A811ZNA7 781 1 Nyctereutes procyonoides (Raccoon dog) - Input to:

- Output from:

A variety of metadata features for each protein are usually pulled from UniProt for visualization purposes. An example features_file.tsv is provided as part of the repo.

If you are providing a set of custom proteins (such as those not fetched from UniProt) when using the Search mode, you may want to include a features_override.tsv file that contains these features for your proteins of interest. This will allow you to visualize your protein correctly in the interactive HTML map. You can specify the path to this file using the override_file parameter in config.yml.

When using Cluster mode, you should provide protein metadata in a uniprot_features.tsv file. You can specify the path to this file using the features_file parameter in config_ff.yml.

Note that the override_file parameter also exists in the Cluster mode. The difference between features_file and override_file is that the former is used as the base metadata file (replacing the uniprot_features.tsv file normally retrieved from UniProt, whereas the latter is loaded after the base metadata file, replacing any information pulled from the features_file. In the Search mode, you can therefore use the override_file parameter to correct errors in metadata provided by UniProt or replace values for specific columns in the uniprot_features.tsv file that is retrieved by the pipeline.

For either custom proteins provided through override_file in either mode, or base metadata provided by features_file in Cluster mode, you should strive to include the default columns in the table below. Features used for color schemes in the default plotting rules are marked with (Plotting) below. Features used only for hover-over description are marked with (Hovertext).

| feature | example | description | source |

|---|---|---|---|

"protid" |

"P42212" |

(Required) the unique identifier of the protein. Usually the UniProt accession, but can be any alphanumeric string | User-provided or UniProt |

"Protein names" |

"Green fluorescent protein" |

(Hovertext) a human-readable description of the protein | UniProt |

"Gene Names (primary)" |

"GFP" |

(Hovertext) a gene symbol for the protein | UniProt |

"Annotation" |

5 |

(Plotting) UniProt Annotation Score (0 to 5) | UniProt |

"Organism" |

"Aequorea victoria (Water jellyfish) (Mesonema victoria)" |

(Hovertext) Scientific name (common name) (synonyms) | UniProt |

"Taxonomic lineage" |

"cellular organisms (no rank), Eukaryota (superkingdom), ... Aequoreidae (family), Aequorea (genus)" |

string of comma-separated Lineage name (rank) for the organism's full taxonomic lineage |

UniProt |

"Lineage" |

["cellular organisms", "Eukaryota", ... "Aequoreidae", "Aequorea"] |

(Plotting) ordered list of lineage identifiers without rank information, generated from "Taxonomic lineage" |

ProteinCartography |

"Length" |

238 |

(Plotting) number of amino acids in protein | UniProt |