A curated list of resources relevant to learning-based legged locomotion in robotics.

I'm new to the field of legged locomotion, and this awesome list is mainly used to organize and share resources I've come across. It will be updated gradually.

- awesome-isaac-gym

- Hybrid Robotics Publications

- Reinforcement-Learning-in-Robotics

- bipedal-robot-learning-collection

- Awesome_Quadrupedal_Robots

- Awesome-Implicit-NeRF-Robotics

- Legged-Robots

- Awesome Robot Descriptions

| Name | Maker | Formats | License | Meshes | Inertias | Collisions |
|---|---|---|---|---|---|---|
| Bolt | ODRI | URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| Cassie (MJCF) | Agility Robotics | MJCF | MIT | ✔️ | ✔️ | ✔️ |
| Cassie (URDF) | Agility Robotics | URDF | MIT | ✔️ | ✔️ | ✔️ |
| Spryped | Benjamin Bokser | URDF | GPL-3.0 | ✔️ | ✔️ | ✔️ |
| Upkie | Tast's Robots | URDF | Apache-2.0 | ✔️ | ✔️ | ✔️ |

| Name | Maker | Formats | License | Meshes | Inertias | Collisions |
|---|---|---|---|---|---|---|
| Atlas DRC (v3) | Boston Dynamics | URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| Atlas v4 | Boston Dynamics | URDF | MIT | ✔️ | ✔️ | ✔️ |
| Digit | Agility Robotics | URDF | ✖️ | ✔️ | ✔️ | ✔️ |
| iCub | IIT | URDF | CC-BY-SA-4.0 | ✔️ | ✔️ | ✔️ |
| JAXON | JSK | COLLADA, URDF, VRML | CC-BY-SA-4.0 | ✔️ | ✔️ | ✔️ |
| JVRC-1 | AIST | MJCF, URDF | BSD-2-Clause | ✔️ | ✔️ | ✔️ |
| NAO | SoftBank Robotics | URDF, Xacro | BSD-3-Clause | ➖ | ✔️ | ✔️ |
| Robonaut 2 | NASA JSC Robotics | URDF | NASA-1.3 | ✔️ | ✔️ | ✔️ |
| Romeo | Aldebaran Robotics | URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| SigmaBan | Rhoban | URDF | MIT | ✔️ | ✔️ | ✔️ |
| TALOS | PAL Robotics | URDF | LGPL-3.0 | ✔️ | ✔️ | ✔️ |
| Valkyrie | NASA JSC Robotics | URDF, Xacro | NASA-1.3 | ✔️ | ✔️ | ✔️ |
| WALK-MAN | IIT | Xacro | BSD-3-Clause | ✔️ | ✔️ | ✔️ |

| Name | Maker | Formats | License | Meshes | Inertias | Collisions |
|---|---|---|---|---|---|---|
| A1 | UNITREE Robotics | MJCF, URDF | MPL-2.0 | ✔️ | ✔️ | ✔️ |
| Aliengo | UNITREE Robotics | MJCF, URDF | MPL-2.0 | ✔️ | ✔️ | ✔️ |
| ANYmal B | ANYbotics | MJCF, URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| ANYmal C | ANYbotics | MJCF, URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| Go1 | UNITREE Robotics | MJCF, URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| HyQ | IIT | URDF | Apache-2.0 | ✔️ | ✔️ | ✔️ |
| Laikago | UNITREE Robotics | MJCF, URDF | MPL-2.0 | ✔️ | ✔️ | ✔️ |
| Mini Cheetah | MIT | URDF | BSD | ✔️ | ✔️ | ✔️ |
| Minitaur | Ghost Robotics | URDF | BSD-2-Clause | ✔️ | ✔️ | ✔️ |
| Solo | ODRI | URDF | BSD-3-Clause | ✔️ | ✔️ | ✔️ |
| Spot | Boston Dynamics | Xacro | ✖️ | ✔️ | ✖️ | ✔️ |
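The Meshes, Inertias and Collisions columns above say whether a description ships those elements. Before training with a downloaded model, a quick sanity check can be done with Python's standard `xml.etree.ElementTree`. This is a minimal sketch, assuming a plain URDF file (Xacro files would need to be expanded to URDF first, and MJCF uses a different schema); the helper `summarize_urdf` and the toy robot below are hypothetical.

```python
import xml.etree.ElementTree as ET


def summarize_urdf(urdf_text: str) -> dict:
    """Count how many URDF <link> elements carry meshes, inertias and collisions.

    Hypothetical helper: it only inspects plain URDF XML, not Xacro macros or MJCF.
    """
    root = ET.fromstring(urdf_text)
    links = root.findall("link")  # direct <link> children of <robot>
    return {
        "links": len(links),
        # A link "has a mesh" if any <geometry> below it (visual or collision) uses <mesh>.
        "with_mesh": sum(1 for link in links if link.find(".//geometry/mesh") is not None),
        # <inertial> holds mass and inertia; links without it are massless to most simulators.
        "with_inertial": sum(1 for link in links if link.find("inertial") is not None),
        "with_collision": sum(1 for link in links if link.find("collision") is not None),
    }


# Hypothetical two-link robot: "base" has inertia and a box collision, "shin" only a visual mesh.
EXAMPLE = """<robot name="toy_leg">
  <link name="base">
    <inertial><mass value="1.0"/></inertial>
    <collision><geometry><box size="0.2 0.1 0.1"/></geometry></collision>
  </link>
  <link name="shin">
    <visual><geometry><mesh filename="shin.stl"/></geometry></visual>
  </link>
</robot>"""

print(summarize_urdf(EXAMPLE))
# {'links': 2, 'with_mesh': 1, 'with_inertial': 1, 'with_collision': 1}
```

A link that is missing `<inertial>` or `<collision>` is usually the first thing to fix before a description is used for RL training in simulation.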

- [legged_gym]: Isaac Gym environments for legged robots.

- [domain-randomizer]: A standalone library to randomize various OpenAI Gym environments.

- [cassie-mujoco-sim]: A simulation library for Agility Robotics' Cassie robot using MuJoCo (provides Cassie's model file).

- [gym-cassie-run]: A Gym RL environment in which a MuJoCo simulation of Agility Robotics' Cassie robot is rewarded for walking/running forward as fast as possible.

- [DRLoco]: A simple-to-use and easy-to-extend implementation of the DeepMimic approach using the MuJoCo physics engine and Stable Baselines 3, mainly for locomotion tasks. [Doc]

- [dm_control]: DeepMind's software stack for physics-based simulation and reinforcement learning environments, using MuJoCo.

- [bipedal-skills]: Bipedal Skills Benchmark for Reinforcement Learning.

- [terrain_benchmark]: A terrain-robustness benchmark for legged locomotion.

- [spot_mini_mini]: Dynamics and Domain Randomized Gait Modulation with Bezier Curves for Sim-to-Real Legged Locomotion (ROS + Gazebo + Gym + PyBullet). [Doc]

- [apex]: A small, modular library containing implementations of several continuous-control reinforcement learning algorithms. Fully compatible with OpenAI Gym.

- [EAGERx]: Tutorial "Tools for Robotic Reinforcement Learning": hands-on RL for robotics with EAGER and Stable-Baselines3.

- [FRobs_RL]: A framework for easily developing robotics reinforcement learning tasks using Gazebo and Stable-Baselines3.

- [Cassie_FROST]: An example of using C-FROST to generate a library of walking gaits for Cassie-series robots. The code depends on FROST and C-FROST.

- [GenLoco]: Official codebase for GenLoco: Generalized Locomotion Controllers for Quadrupedal Robots. It contains code for training on randomized robot morphologies to imitate reference motions, pre-trained policies, and code to deploy them on simulated or real-world robots.

- [rl-mpc-locomotion]: Aims to provide a fast simulation and RL training framework for quadruped locomotion. The control framework is a hierarchical controller composed of a higher-level policy network and a lower-level model predictive controller (MPC).
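Several of the tools above (for example domain-randomizer and spot_mini_mini) build on domain or dynamics randomization: physical parameters are resampled at every episode reset so the policy cannot overfit a single simulator setting. The sketch below is a generic illustration in plain Python, not the API of any listed library; the parameter names, ranges and the `sample_params` helper are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    """Hypothetical per-episode physics parameters for a simulated legged robot."""
    friction: float
    base_mass_scale: float
    motor_strength_scale: float
    action_delay_steps: int


# Hypothetical uniform ranges; sensible values depend on the robot and the simulator.
RANGES = {
    "friction": (0.5, 1.25),
    "base_mass_scale": (0.9, 1.1),
    "motor_strength_scale": (0.8, 1.2),
}
MAX_DELAY_STEPS = 2


def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw one randomized parameter set; intended to be called at every episode reset."""
    return PhysicsParams(
        friction=rng.uniform(*RANGES["friction"]),
        base_mass_scale=rng.uniform(*RANGES["base_mass_scale"]),
        motor_strength_scale=rng.uniform(*RANGES["motor_strength_scale"]),
        action_delay_steps=rng.randint(0, MAX_DELAY_STEPS),  # inclusive bounds
    )


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so that training runs are reproducible
    for episode in range(3):
        params = sample_params(rng)
        # In a real setup these values would be written into the simulator
        # (contact friction, link masses, torque limits, action latency) before the rollout.
        print(episode, params)
```

Keeping the sampler separate from the simulator makes it easy to widen or narrow ranges independently when probing which parameters matter for sim-to-real transfer.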

- A Survey of Sim-to-Real Transfer Techniques Applied to Reinforcement Learning for Bioinspired Robots. (TNNLS, 2021) [sim2real] [paper]

- Robot Learning From Randomized Simulations: A Review. (Frontiers in Robotics and AI, 2022) [sim2real] [paper]

- Sim-to-Real Transfer in Deep Reinforcement Learning for Robotics: a Survey. (IEEE SSCI, 2020) [sim2real] [paper]

- How to train your robot with deep reinforcement learning: lessons we have learned. (IJRR, 2021) [RL Discussion] [paper]

- Transformer in Reinforcement Learning for Decision-Making: A Survey. [paper] [github]

- Recent Approaches for Perceptive Legged Locomotion. [paper]

-

[2023] Torque-based Deep Reinforcement Learning for Task-and-Robot Agnostic Learning on Bipedal Robots Using Sim-to-Real Transfer. [paper]

-

[2023] Learning and Adapting Agile Locomotion Skills by Transferring Experience. [paper]

-

[2023] Agile and Versatile Robot Locomotion via Kernel-based Residual Learning. [paper]

-

[2023] Learning Bipedal Walking for Humanoids with Current Feedback. [paper] [code]

-

[2023] DreamWaQ: Learning Robust Quadrupedal Locomotion With Implicit Terrain Imagination via Deep Reinforcement Learning. [paper]

-

[2023] Learning Humanoid Locomotion with Transformers. [paper]

-

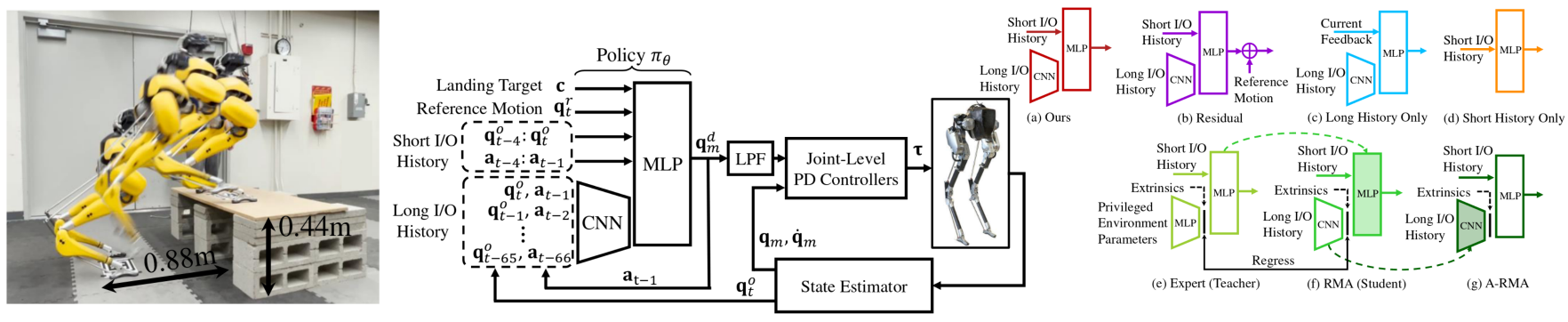

[2023] Robust and Versatile Bipedal Jumping Control through Multi-Task Reinforcement Learning. [paper]

-

[2022] Learning Visual Locomotion with Cross-Modal Supervision. [paper] [code soon]

-

[2022] MoCapAct: A Multi-Task Dataset for Simulated Humanoid Control. [imitation] [paper] [project]

-

[2022] Imitate and Repurpose: Learning Reusable Robot Movement Skills From Human and Animal Behaviors. [imitation] [paper]

-

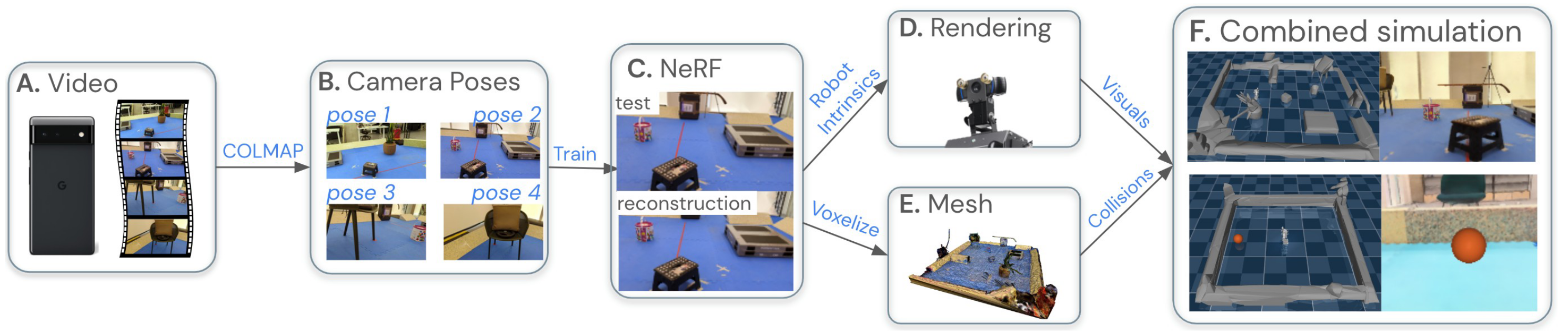

[2022] NeRF2Real: Sim2real Transfer of Vision-guided Bipedal Motion Skills using Neural Radiance Fields. [paper]

- [2022] Walking in Narrow Spaces: Safety-critical Locomotion Control for Quadrupedal Robots with Duality-based Optimization. [paper] [code]

- [2022] Bridging Model-based Safety and Model-free Reinforcement Learning through System Identification of Low Dimensional Linear Models. [paper]

- [2022] Dynamic Bipedal Maneuvers through Sim-to-Real Reinforcement Learning. [paper]

- [2022] Creating a Dynamic Quadrupedal Robotic Goalkeeper with Reinforcement Learning. [paper]

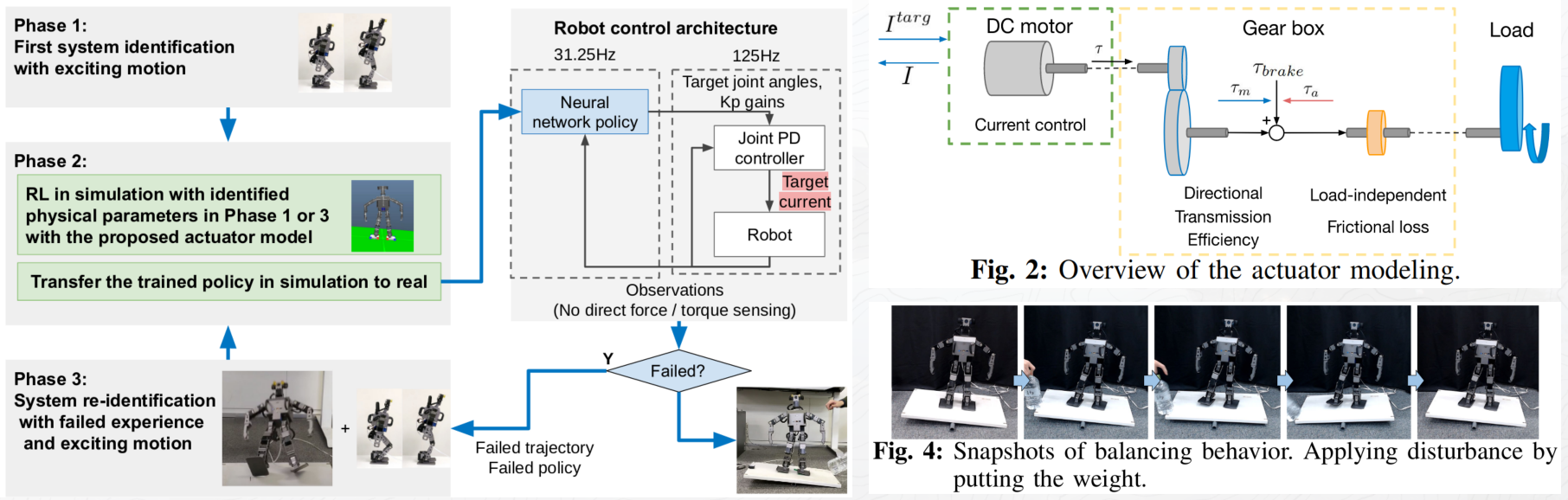

- [2022] Sim-to-Real Learning of Compliant Bipedal Locomotion on Torque Sensor-Less Gear-Driven Humanoid. [paper]

- [2021] RMA: Rapid Motor Adaptation for Legged Robots. [paper]

- [ICRA] ViNL: Visual Navigation and Locomotion Over Obstacles. [paper] [code] [project page]

- [ICRA] Generating a Terrain-Robustness Benchmark for Legged Locomotion: A Prototype via Terrain Authoring and Active Learning. [paper] [code]

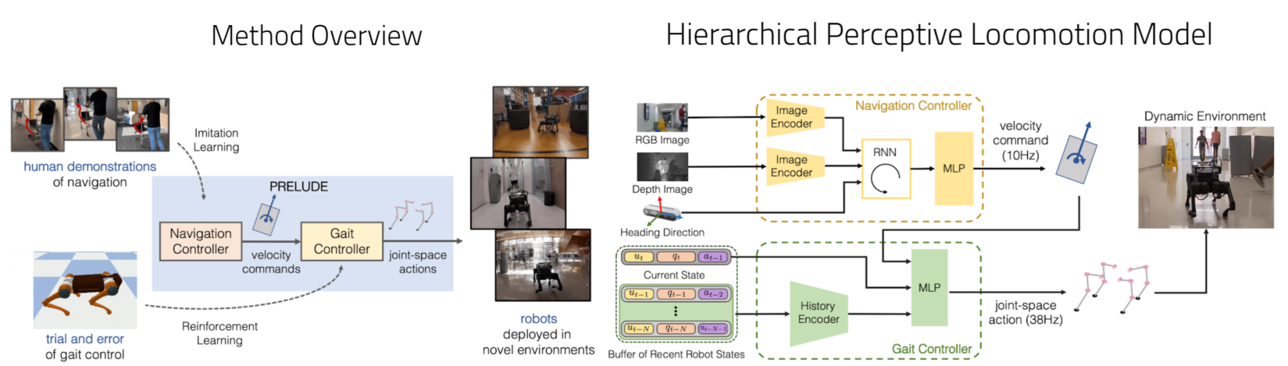

- [ICRA] Learning to Walk by Steering: Perceptive Quadrupedal Locomotion in Dynamic Environments. [perceptive locomotion] [paper] [code]

- [ICRA] Legs as Manipulator: Pushing Quadrupedal Agility Beyond Locomotion. [paper]

- [ICRA] DribbleBot: Dynamic Legged Manipulation in the Wild. [paper] [code soon]

- [L4DC] Continuous Versatile Jumping Using Learned Action Residuals. [paper]

- [Frontiers in Robotics and AI] Learning hybrid locomotion skills—Learn to exploit residual actions and modulate model-based gait control. [paper]

- [PMLR] Towards Real Robot Learning in the Wild: A Case Study in Bipedal Locomotion. [paper]

- [PMLR] Learning to Walk in Minutes Using Massively Parallel Deep Reinforcement Learning. [platform] [paper] [code]

- [CoRL] Walk These Ways: Tuning Robot Control for Generalization with Multiplicity of Behavior. (Oral) [control] [paper] [code]

- [CoRL] Deep Whole-Body Control: Learning a Unified Policy for Manipulation and Locomotion. (Oral) [paper]

- [CoRL] Legged Locomotion in Challenging Terrains using Egocentric Vision. [paper]

- [CoRL] DayDreamer: World Models for Physical Robot Learning. [paper]

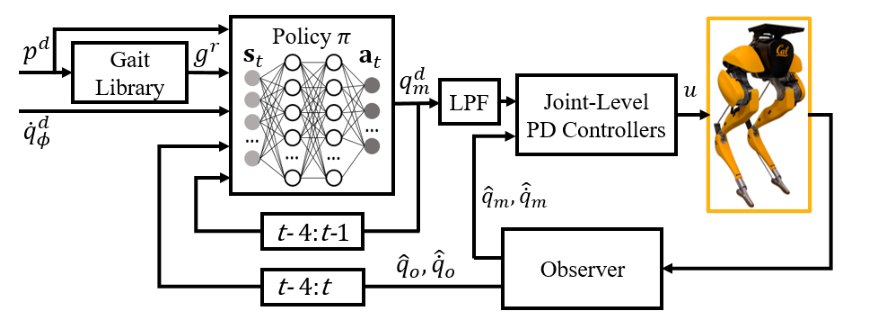

- [RSS] Rapid Locomotion via Reinforcement Learning. [paper] [code]

- [ICRA] An Adaptable Approach to Learn Realistic Legged Locomotion without Examples. [paper]

- [ICRA] Accessibility-Based Clustering for Efficient Learning of Locomotion Skills. [paper]

- [ICRA] Sim-to-Real Learning for Bipedal Locomotion Under Unsensed Dynamic Loads. [paper]

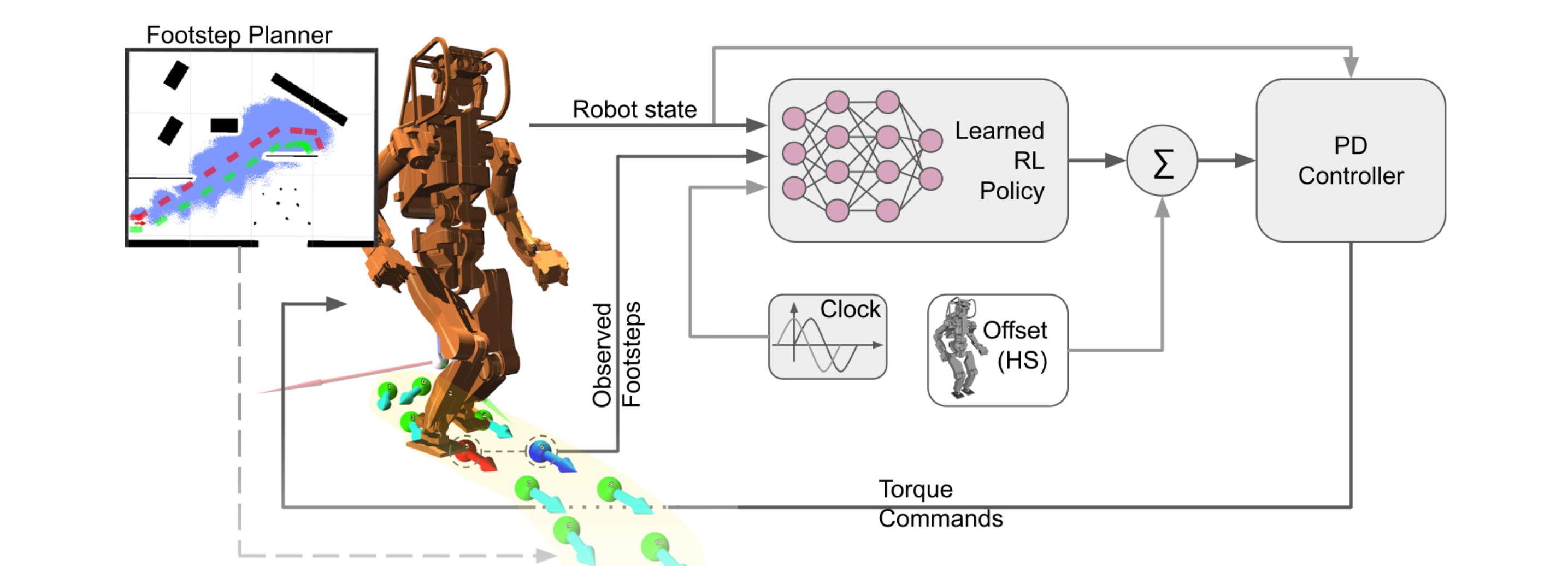

- [ICRA] Sim-to-Real Learning of Footstep-Constrained Bipedal Dynamic Walking. [paper]

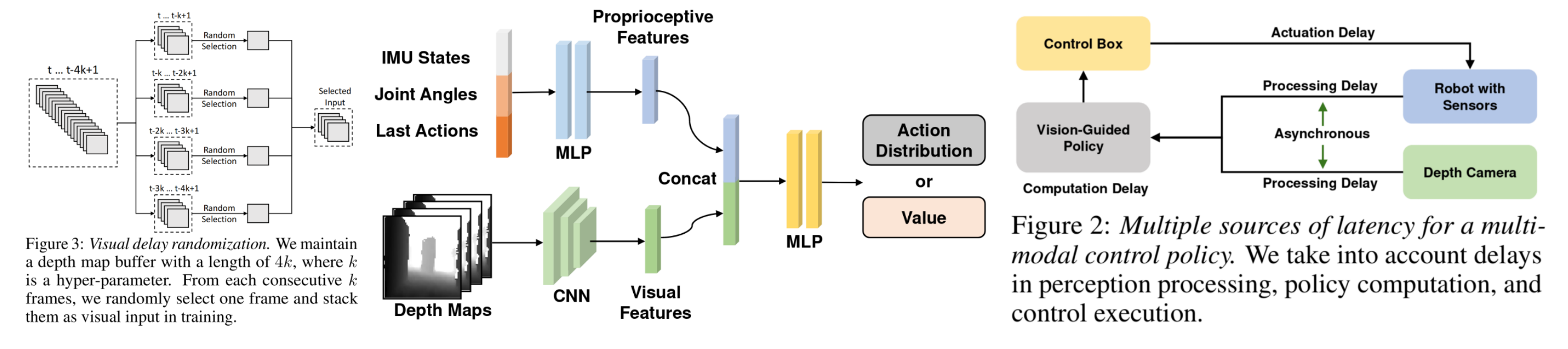

- [IROS] Vision-Guided Quadrupedal Locomotion in the Wild with Multi-Modal Delay Randomization. [vision-guide] [paper] [code]

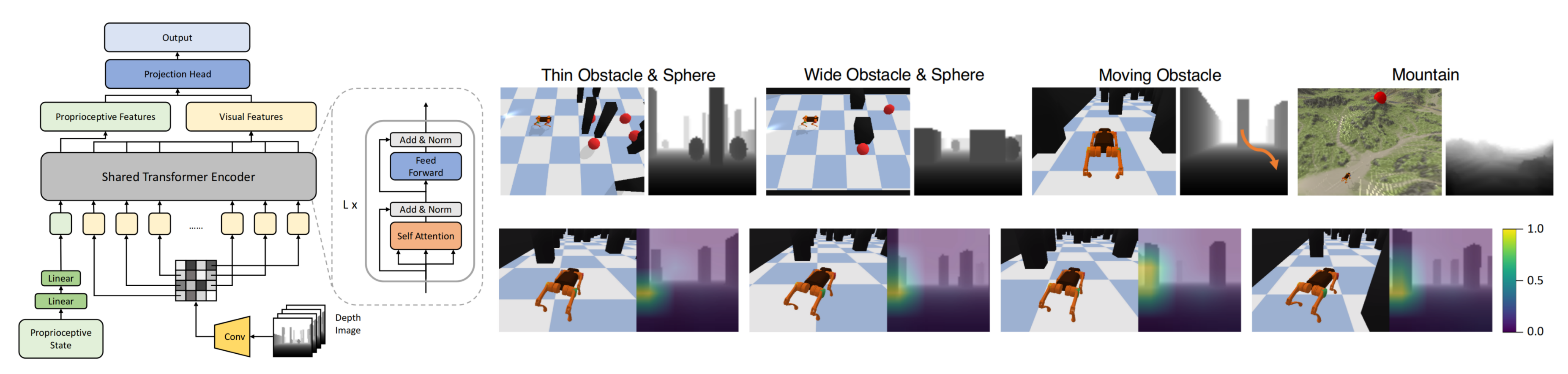

- [ICLR] Learning Vision-Guided Quadrupedal Locomotion End-to-End with Cross-Modal Transformers. [vision-guide] [paper] [code]

- [CVPR] Coupling Vision and Proprioception for Navigation of Legged Robots. [vision-guide] [paper] [code]

- [ACM SIGGRAPH] ASE: Large-Scale Reusable Adversarial Skill Embeddings for Physically Simulated Characters. [paper]

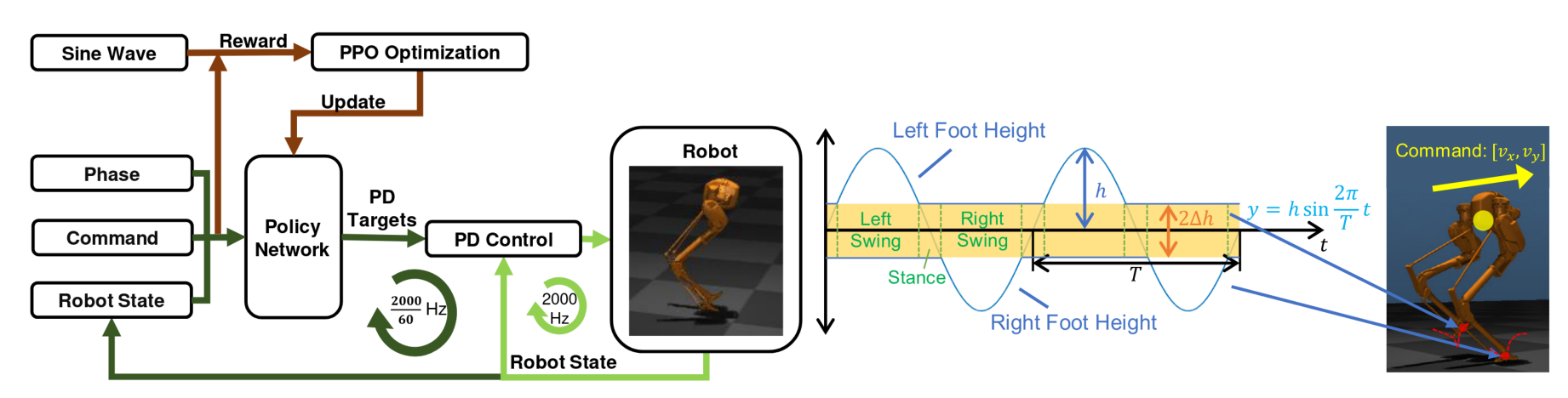

- [ICMA] Custom Sine Waves Are Enough for Imitation Learning of Bipedal Gaits with Different Styles. [paper]

- [Machines] Deep Reinforcement Learning for Model Predictive Controller Based on Disturbed Single Rigid Body Model of Biped Robots. [paper]

- [IEEE-RAS] Improving Sample Efficiency of Deep Reinforcement Learning for Bipedal Walking. [paper] [code]

- [IEEE-RAS] Dynamic Bipedal Turning through Sim-to-Real Reinforcement Learning. [paper]

- [IEEE-RAS] Learning Bipedal Walking On Planned Footsteps For Humanoid Robots. [paper] [code]

- [TCAS-II] Parallel Deep Reinforcement Learning Method for Gait Control of Biped Robot. [paper]

- [Science Robotics] Learning robust perceptive locomotion for quadrupedal robots in the wild. [paper]

- [IEEE-TRO] RLOC: Terrain-Aware Legged Locomotion Using Reinforcement Learning and Optimal Control. [paper] [code]

- [IEEE-RAL] Linear Policies are Sufficient to Realize Robust Bipedal Walking on Challenging Terrains. [paper]

- [ICRA] Reinforcement Learning for Robust Parameterized Locomotion Control of Bipedal Robots. [sim2real] [paper]

- [NeurIPS] Hierarchical Skills for Efficient Exploration. [control] [paper]

- [ICRA] Sim-to-Real Learning of All Common Bipedal Gaits via Periodic Reward Composition. [paper]

- [ICRA] DeepWalk: Omnidirectional Bipedal Gait by Deep Reinforcement Learning. [paper]

- [IROS] Robust Feedback Motion Policy Design Using Reinforcement Learning on a 3D Digit Bipedal Robot. [paper]

- [RSS] Blind Bipedal Stair Traversal via Sim-to-Real Reinforcement Learning. [paper]

- [PMLR] From Pixels to Legs: Hierarchical Learning of Quadruped Locomotion. [paper]

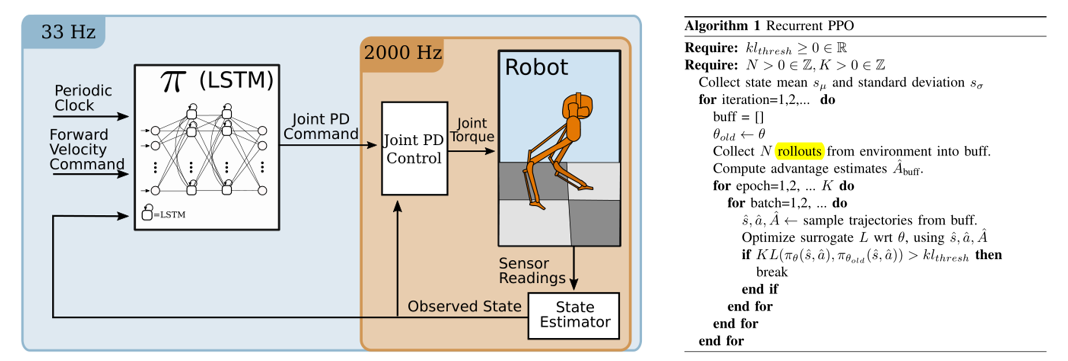

- [RSS] Learning Memory-Based Control for Human-Scale Bipedal Locomotion. [sim2real] [paper] [unofficial_code]

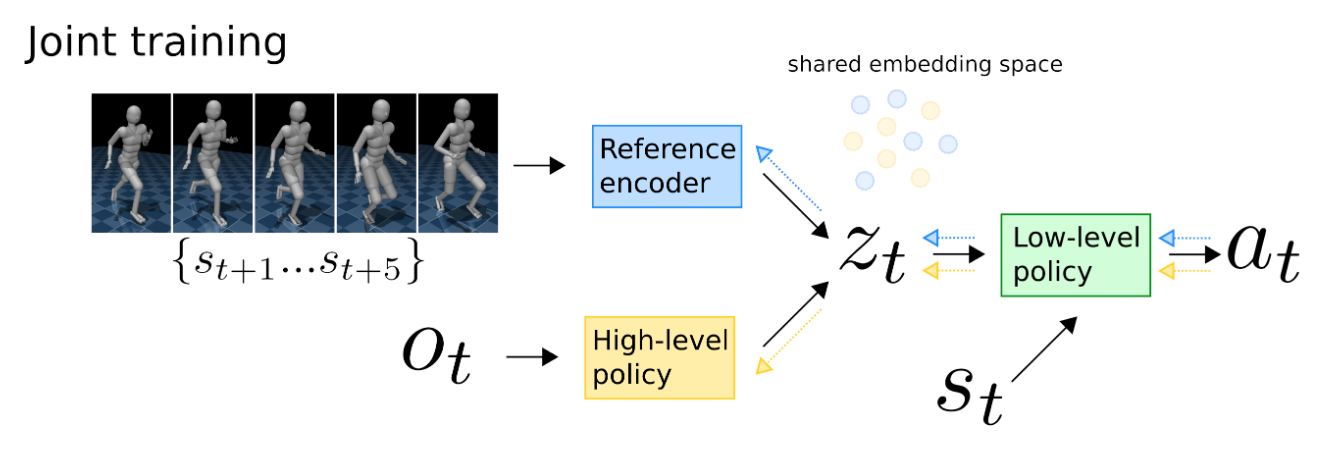

- [RSS] Learning Agile Robotic Locomotion Skills by Imitating Animals. [paper] [code] [code-pytorch]

- [IROS] Crossing the Gap: A Deep Dive into Zero-Shot Sim-to-Real Transfer for Dynamics. [sim2real] [paper] [code]

- [PMLR] CoMic: Complementary Task Learning & Mimicry for Reusable Skills. [imitation] [paper] [code]

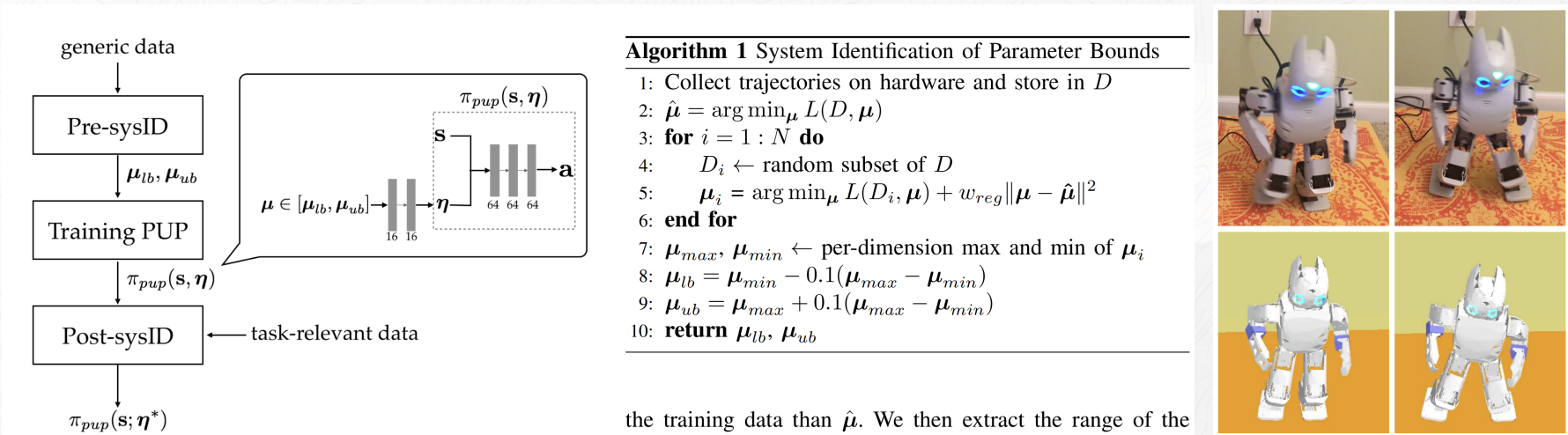

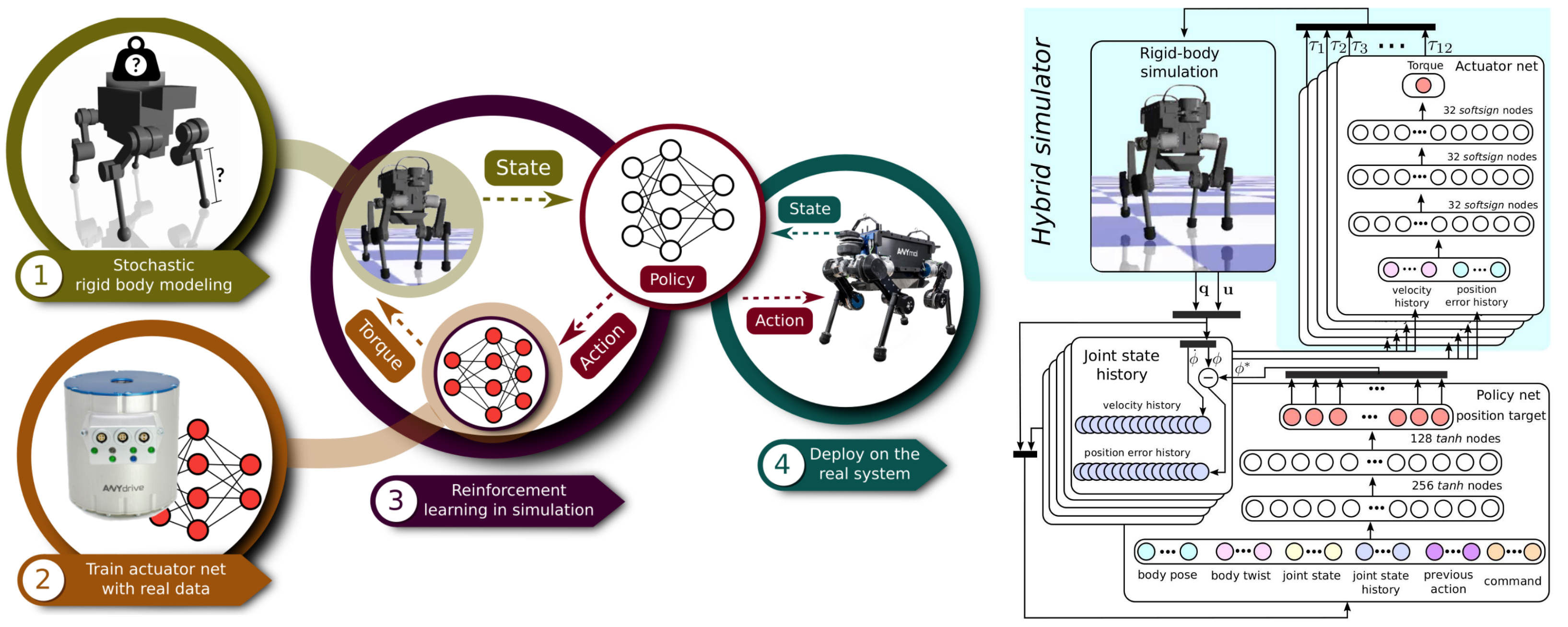

- [PMLR] Learning Locomotion Skills for Cassie: Iterative Design and Sim-to-Real. [paper]

- [IEEE-RAL] Learning Natural Locomotion Behaviors for Humanoid Robots Using Human Bias. [paper]

- [IROS] Sim-to-Real Transfer for Biped Locomotion. [paper]

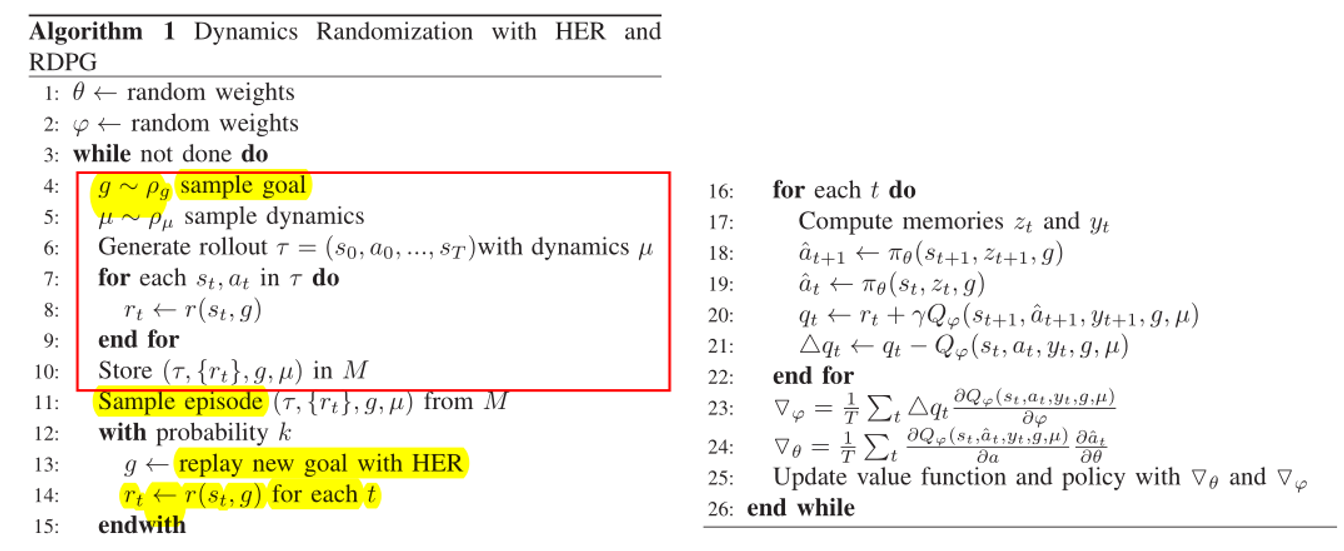

- [ICRA] Sim-to-Real Transfer of Robotic Control with Dynamics Randomization. [sim2real] [paper]

- [RSS] Sim-to-Real: Learning Agile Locomotion For Quadruped Robots. [sim2real] [paper]

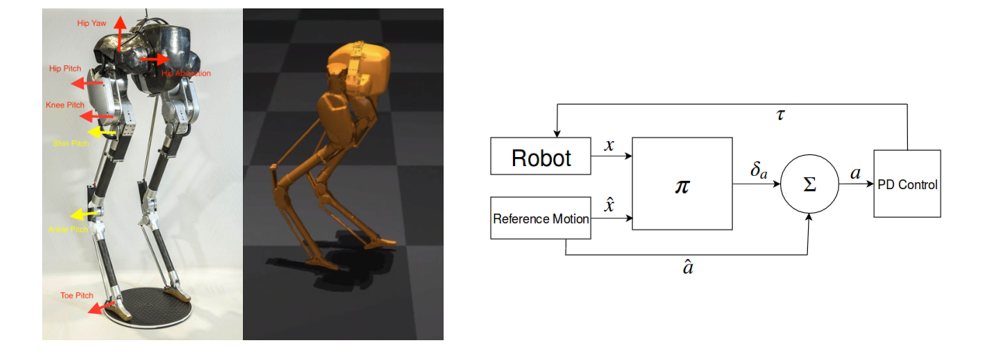

- [ACM SIGGRAPH] DeepMimic: Example-Guided Deep Reinforcement Learning of Physics-Based Character Skills. [paper] [code]

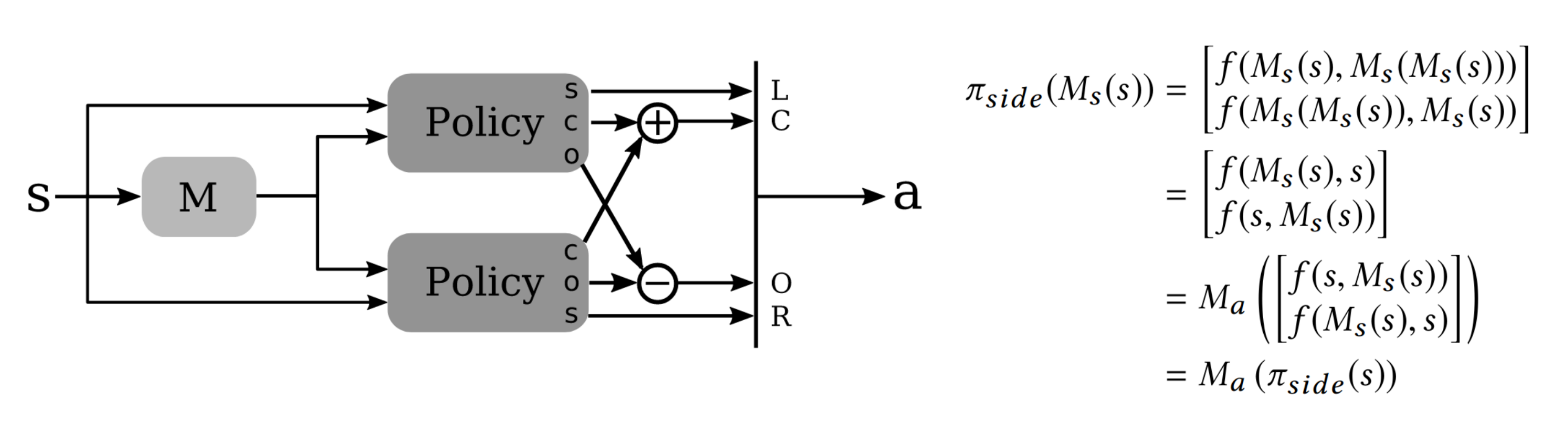

- [ACM TOG] Learning Symmetric and Low-Energy Locomotion. [paper]

- [IEEE-RAS] Learning Whole-body Motor Skills for Humanoids. [paper]

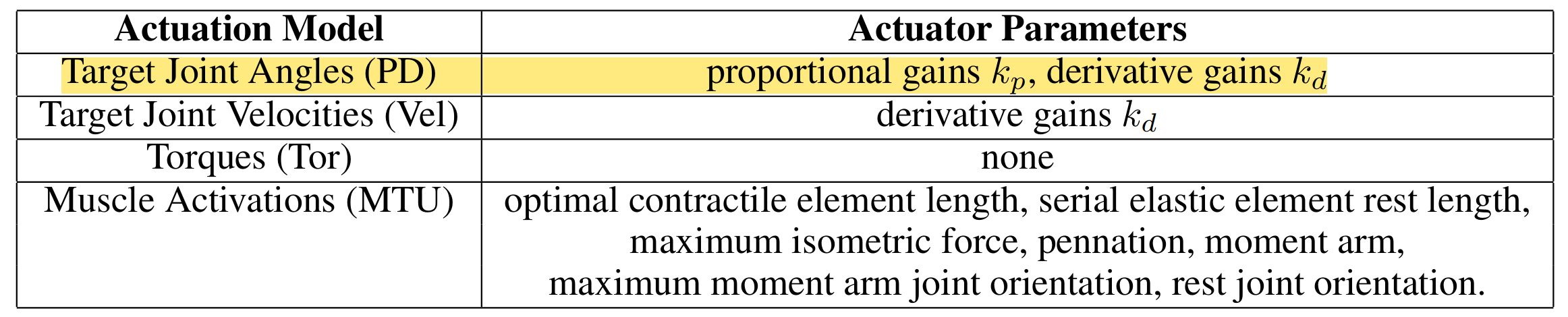

- [ACM SIGGRAPH] Learning locomotion skills using DeepRL: Does the choice of action space matter? [control] [paper]