As part of the implementation series of Joseph Lim's group at USC, our motivation is to accelerate (or sometimes delay) research in the AI community by promoting open-source projects. To this end, we implement state-of-the-art research papers and publicly share them with concise reports. Please visit our group GitHub site for other projects.

This project is implemented by Te-Lin Wu, and the code has been reviewed by Shao-Hua Sun before being published.

This project is a PyTorch implementation of Conditional Image Synthesis With Auxiliary Classifier GANs, which was published as a conference proceeding at ICML 2017. This paper proposes a simple extension of GANs that employs label conditioning to produce high-resolution, high-quality generated images.

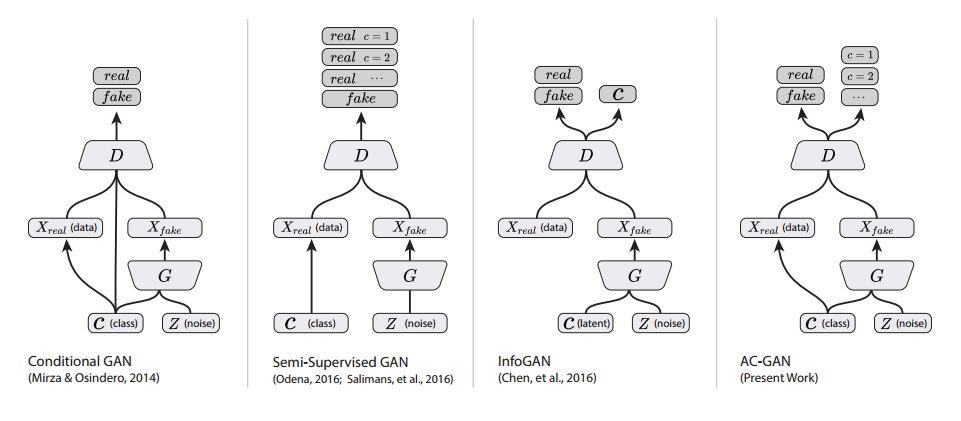

By adding an auxiliary classifier to the discriminator of a GAN, the discriminator produces not only a probability distribution over sources (real or fake) but also a probability distribution over the class labels. This simple modification to the standard DCGAN model is not a drastic change, yet it produces better results and helps stabilize adversarial training.
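The paper formalizes this with two log-likelihood objectives: $L_S$ for the correct source and $L_C$ for the correct class, where $X_{\text{fake}} = G(c, z)$ is generated from a class label $c$ and a noise vector $z$:

$$
\begin{aligned}
L_S &= \mathbb{E}\left[\log P(S = \text{real} \mid X_{\text{real}})\right] + \mathbb{E}\left[\log P(S = \text{fake} \mid X_{\text{fake}})\right] \\
L_C &= \mathbb{E}\left[\log P(C = c \mid X_{\text{real}})\right] + \mathbb{E}\left[\log P(C = c \mid X_{\text{fake}})\right]
\end{aligned}
$$

As stated in the paper, the discriminator is trained to maximize $L_S + L_C$ and the generator to maximize $L_C - L_S$. The losses in this repository may be a practical variant of these objectives.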

The figure below compares the AC-GAN architecture with those of several other GANs.

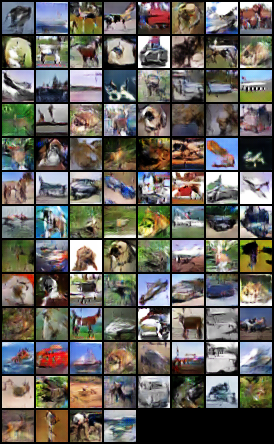
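The AC-GAN discriminator in this comparison can be sketched in PyTorch as a shared convolutional trunk feeding two heads, one scoring the source (real or fake) and one classifying the label. This is a minimal sketch assuming 32x32 RGB inputs such as CIFAR-10; the names `ACGANDiscriminator`, `discriminator_loss`, and `generator_loss` and all layer sizes are hypothetical and not taken from this repository's `main.py`. The generator loss uses the common non-saturating form (make D label fakes as real while classifying them correctly), a swapped-in choice that may differ from both the paper and this implementation.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class ACGANDiscriminator(nn.Module):
    """Hypothetical DCGAN-style discriminator for 3x32x32 images with an
    auxiliary classifier head (layer sizes are illustrative only)."""

    def __init__(self, num_classes: int = 10, ndf: int = 64):
        super().__init__()
        # Shared convolutional trunk: 3x32x32 -> (ndf*4)x4x4, then flattened
        self.features = nn.Sequential(
            nn.Conv2d(3, ndf, 4, 2, 1),            # -> ndf x 16 x 16
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1),      # -> (ndf*2) x 8 x 8
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1),  # -> (ndf*4) x 4 x 4
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            nn.Flatten(),
        )
        flat_dim = ndf * 4 * 4 * 4
        # Source head: a single logit for "this image is real"
        self.source_head = nn.Linear(flat_dim, 1)
        # Auxiliary classifier head: one logit per class label
        self.class_head = nn.Linear(flat_dim, num_classes)

    def forward(self, x):
        h = self.features(x)
        return self.source_head(h).squeeze(1), self.class_head(h)


def discriminator_loss(d, real, real_labels, fake, fake_labels):
    """Minimizing this maximizes the log-likelihood of the correct source
    and of the correct class, on both real and generated images."""
    src_real, cls_real = d(real)
    src_fake, cls_fake = d(fake.detach())  # detach: do not update G here
    loss_source = (
        F.binary_cross_entropy_with_logits(src_real, torch.ones_like(src_real))
        + F.binary_cross_entropy_with_logits(src_fake, torch.zeros_like(src_fake))
    )
    loss_class = F.cross_entropy(cls_real, real_labels) + F.cross_entropy(cls_fake, fake_labels)
    return loss_source + loss_class


def generator_loss(d, fake, fake_labels):
    """Non-saturating generator loss: fool the source head and make the
    auxiliary classifier recognize the conditioning label."""
    src_fake, cls_fake = d(fake)
    loss_source = F.binary_cross_entropy_with_logits(src_fake, torch.ones_like(src_fake))
    loss_class = F.cross_entropy(cls_fake, fake_labels)
    return loss_source + loss_class
```

Note that both losses include a classification term, so the class head is trained on real and generated images alike; this is the part that distinguishes AC-GAN from a plain DCGAN discriminator.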

Sample images generated by the model trained on the ImageNet dataset.

Sample images generated by the model trained on the CIFAR-10 dataset.

The implemented model can be trained on both CIFAR-10 and ImageNet datasets.

Note that this implementation may differ from the original paper in details such as model architecture, hyperparameters, and optimizer, while preserving the main proposed idea.

*This code is still under development and is subject to change.

Run the following command for details of each argument.

```
$ python main.py -h
```

You should specify the path to your dataset with the `--dataroot` argument. For CIFAR-10, the code automatically checks whether the dataset has already been downloaded and, if not, downloads it for you. For ImageNet, you need to download the whole dataset from the official website (this repository used the 2012 version) and point `--dataroot` to the `train` (or `val`) directory, which serves as the root directory for ImageNet training.

At line 80 of `main.py`, you can change the `classes_idx` argument to use other user-specified ImageNet classes; adjust `num_classes` accordingly if the number of classes is not 10.

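```python
if opt.dataset == 'imagenet':
    # folder dataset
    # ImageFolder here is the repository's own dataset class:
    # torchvision's ImageFolder has no classes_idx argument
    dataset = ImageFolder(root=opt.dataroot,
                          transform=transforms.Compose([
                              # transforms.Scale was renamed transforms.Resize in later torchvision releases
                              transforms.Scale(opt.imageSize),
                              transforms.CenterCrop(opt.imageSize),
                              transforms.ToTensor(),
                              transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5)),
                          ]),
                          classes_idx=(10, 20))
```

Example training command. During training, the code automatically generates test images and saves them to the `--outf` directory.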

```
$ python main.py --outf=/your/output/file/name --niter=500 --batchSize=100 --cuda --dataset=cifar10 --imageSize=32 --dataroot=/data/path/to/cifar10 --gpu=0
```

Te-Lin Wu / @telin0411 @ Joseph Lim's research lab @ USC