Status and roadmap

martindurant opened this issue · 9 comments

Features to be implemented.

An asterisk shows the next item(s) on the list.

A question mark shows something that might (almost) work, but isn't tested.

- python2 compatibility

Reading

- Types of encoding ( https://github.com/Parquet/parquet-format/blob/master/Encodings.md )

- plain

- bitpacked/RLE hybrid

- dictionary

- decode to values

- make into categoricals

- delta (needs test data)

- delta-length byte-array (needs test data)

- compression algorithms (gzip, lzo, snappy, brotli)

- nulls

- repeated/list values (*)

- map, key-value types

- multi-file (hive-like)

- understand partition tree structure

- filtering by partitions

- parallelized for dask

- understand partition tree structure

- filtering by statistics

- converted/logical types

- alternative file-systems

- index handling
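As a rough illustration of the multi-file items above, a hive-like layout stores partition values in directory names of the form `key=value`. The sketch below parses those names and filters a file list by them. The function names and the example paths are hypothetical and are not fastparquet's API; values are kept as strings, which is an assumption made for simplicity.

```python
from pathlib import PurePosixPath


def parse_partitions(path):
    """Return {key: value} for every 'key=value' directory in a path."""
    parts = {}
    for segment in PurePosixPath(path).parent.parts:
        if "=" in segment:
            key, _, value = segment.partition("=")
            parts[key] = value
    return parts


def filter_paths(paths, **wanted):
    """Keep only the paths whose partition values match all of `wanted`."""
    selected = []
    for path in paths:
        parts = parse_partitions(path)
        # Compare as strings, since directory names carry no type information.
        if all(parts.get(k) == str(v) for k, v in wanted.items()):
            selected.append(path)
    return selected


# Hypothetical file listing for a data-set partitioned by year and month.
paths = [
    "data/year=2016/month=11/part.0.parquet",
    "data/year=2016/month=12/part.0.parquet",
    "data/year=2017/month=1/part.0.parquet",
]
print(parse_partitions(paths[0]))
print(filter_paths(paths, year=2016))
```

Filtering on the path alone means that files outside the requested partitions never need to be opened.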

Writing

- primitive types

- converted/logical types

- encodings (selected by user)

- plain (default)

- dictionary encoding (default for categoricals)

- delta-length byte array (should be much faster for variable strings)

- delta encoding (depends on reading delta encoding)

- nulls encoding (for dtypes that don't accept NaN)

- choice of compression

- per column

- multi-file

- partitions on categoricals

- parallelize for dask

- partitions and division for dask

- append

- single-file

- multi-file

- consolidate files into logical data-set

- alternative file-systems
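To make the dictionary-encoding item in the list above concrete, here is a minimal pure-Python sketch of the idea: each distinct value is stored once, and the column becomes a list of small integer indices. The helper names are hypothetical. In an actual Parquet file the indices would additionally be written with the RLE/bit-packed hybrid encoding, which this sketch leaves out.

```python
def dictionary_encode(values):
    """Split values into (dictionary, indices), keeping first-seen order."""
    positions = {}
    indices = []
    for value in values:
        if value not in positions:
            positions[value] = len(positions)
        indices.append(positions[value])
    # dicts preserve insertion order, so the keys form the dictionary page.
    return list(positions), indices


def dictionary_decode(dictionary, indices):
    """Rebuild the original values from the dictionary and the indices."""
    return [dictionary[i] for i in indices]


column = ["red", "green", "red", "red", "blue", "green"]
dictionary, indices = dictionary_encode(column)
print(dictionary)
print(indices)
```

This maps naturally onto categoricals, where the categories play the role of the dictionary and the codes play the role of the indices.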

Admin

- packaging

- pypi, conda

- README

- documentation

- RTD

- API documentation and doc-strings

- Developer documentation (everything you need to run tests)

- List of parquet features not yet supported to establish expectations

- Announcement blogpost with example

Features not to be attempted

- nested schemas (maybe can find a way to flatten or encode as dicts)

- choice of encoding on write? (keep it simple)

- schema evolution

Can I request an additional section for administrative topics like packaging, documentation, etc.?

What do we need for Dask.dataframe integration? Presumably we're depending on dask.bytes.open_files?

Yes, passing a file-like object that can be resolved in each worker would do: core.read_col currently takes an open file-object or a string that can be opened within the function. It probably should take a function to create a file object given a path (a parquet metadata file will reference other files with relative paths).
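A minimal sketch of that suggestion, assuming a hypothetical `read_chunk` helper and an `open_with` parameter (neither is the existing `core.read_col` signature): the reader receives a callable that opens a path, and resolves the relative path against the location of the metadata file. The `_metadata` file name and the byte offsets are placeholders.

```python
import os
import tempfile


def default_open(path, mode="rb"):
    """Opener used when the caller does not supply one (local files)."""
    return open(path, mode)


def read_chunk(metadata_path, relative_path, offset, length,
               open_with=default_open):
    """Read `length` bytes at `offset` from a file named in the metadata.

    Hypothetical helper: `relative_path` is resolved against the directory
    holding the metadata file, and the file is opened inside this function,
    so only strings and a callable need to reach a worker.
    """
    base = os.path.dirname(metadata_path)
    full_path = os.path.join(base, relative_path)
    with open_with(full_path, "rb") as f:
        f.seek(offset)
        return f.read(length)


# Build a tiny stand-in data file next to a (non-existent) metadata file.
with tempfile.TemporaryDirectory() as root:
    with open(os.path.join(root, "part.0.parquet"), "wb") as f:
        f.write(b"PAR1" + b"column-bytes" + b"PAR1")
    metadata_path = os.path.join(root, "_metadata")
    chunk = read_chunk(metadata_path, "part.0.parquet", offset=4, length=12)

print(chunk)
```

Swapping `default_open` for an opener bound to a remote file-system would leave `read_chunk` itself unchanged, which is the point of passing a function instead of an open file.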

The only places where reading actually happens are core.read_thrift (where the size of the thrift structure is not known) and core._read_page (where the size in bytes is known). The former is small and would fit within a read-ahead buffer; the latter can be expressed in terms of dask's read_bytes.
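The two access patterns can be sketched as follows. This is only an illustration under stated assumptions: an unsigned LEB128 varint stands in for the thrift structure (real thrift decoding is out of scope here), `READ_AHEAD` is an arbitrary buffer size, and both function names are hypothetical.

```python
import io

READ_AHEAD = 64  # assumed buffer size; must exceed the largest header expected


def read_varint_header(f):
    """Read a header whose length is not known in advance.

    Over-read into a buffer, decode from it, then seek back to just after
    the bytes that were actually consumed.
    """
    start = f.tell()
    buf = f.read(READ_AHEAD)
    value = 0
    shift = 0
    for consumed, byte in enumerate(buf, start=1):
        value |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: last byte of the varint
            f.seek(start + consumed)
            return value
        shift += 7
    raise ValueError("header did not terminate within the read-ahead buffer")


def read_page(f, size):
    """Read a page body whose size in bytes is already known."""
    data = f.read(size)
    if len(data) != size:
        raise EOFError("file ended before the page was complete")
    return data


# A tiny fake file: varint-encoded length (300 -> 0xAC 0x02), then the body.
body = bytes(range(256)) + b"x" * 44
f = io.BytesIO(b"\xac\x02" + body + b"trailing")
size = read_varint_header(f)
page = read_page(f, size)
```

The second function is a plain byte-range read, which is why it is the easier of the two to hand off to a remote or parallel byte reader.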

Hi there,

Are you guys aware of ongoing PyArrow development? It is also already on conda-forge and also has pandas <-> parquet read/write (through Arrow), although I don't think it supports multi-file yet.

@lomereiter Yes, we're very aware. We've been waiting for comprehensive Parquet read-write functionality from Arrow for a long while. Hopefully fastparquet is just a stopgap measure until PyArrow matures as a comprehensive solution.

Hi, amazing work. Two things I noticed:

- pytest is required at runtime (imported in utils.py), which is a bit unusual

- if column names are not string types then saving fails (e.g. AttributeError: 'int' object has no attribute 'encode')
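One way the second point could be handled is a check on column names before writing. The sketch below is hypothetical and not part of fastparquet: it either raises a clear error or coerces names to strings, and it reproduces the reported `AttributeError` by calling `.encode` on an integer.

```python
def check_column_names(names, coerce=False):
    """Return column names as strings, or raise a clear error.

    Hypothetical pre-write check, not part of fastparquet's API.
    """
    checked = []
    for name in names:
        if isinstance(name, str):
            checked.append(name)
        elif coerce:
            checked.append(str(name))
        else:
            raise TypeError(
                f"column name {name!r} is {type(name).__name__}, expected str"
            )
    return checked


# The reported failure comes from calling .encode on a non-string name.
try:
    (0).encode("utf-8")
except AttributeError as exc:
    message = str(exc)

# With coercion, every name can be encoded safely.
names = check_column_names(["a", 0, 1.5], coerce=True)
encoded = [n.encode("utf-8") for n in names]
print(message)
print(encoded)
```

Whether to coerce silently or to raise is a design choice; raising keeps the written column names identical to what the user passed in.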

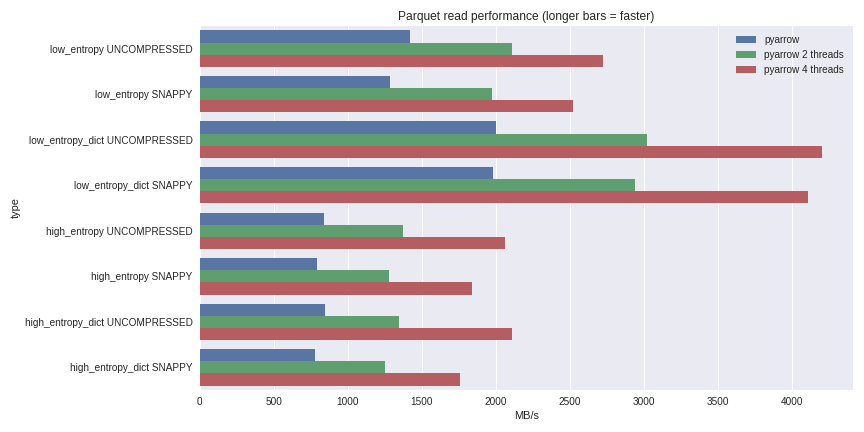

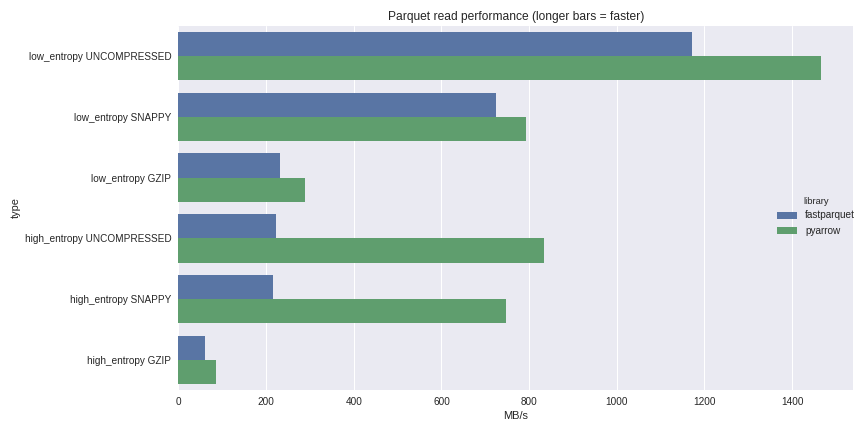

Since @lomereiter mentioned PyArrow, I will just leave this link here: Extreme IO performance with parallel Apache Parquet in Python

Thanks @frol. That there are multiple projects pushing on parquet for python is a good thing. You should also have linked to the previous posting, python-parquet-update (Wes's work, not mine), which shows that fastparquet and arrow have very similar performance in many cases.

Note also that fastparquet is designed to run in parallel using dask, allowing distributed data access, and reading from remote stores such as s3.

@martindurant Thank you! I was actually looking for some sort of benchmarks for fastparquet, as I am going to use it with Dask. It would be very helpful to have some benchmark information in the documentation, since the "fast" prefix in the project name implies a focus on speed, but I failed to find any until you pointed me to this article.

There are some raw benchmarks in https://github.com/dask/fastparquet/blob/master/fastparquet/benchmarks/columns.py

My colleagues at datashader did some benchmarking on census data at the time when we were focusing on performance. Their numbers include both loading and performing aggregations on the data.