This repository contains our reproduction of Counterfactual Generative Networks (CGN), carried out as part of the ML Reproducibility Challenge 2021.

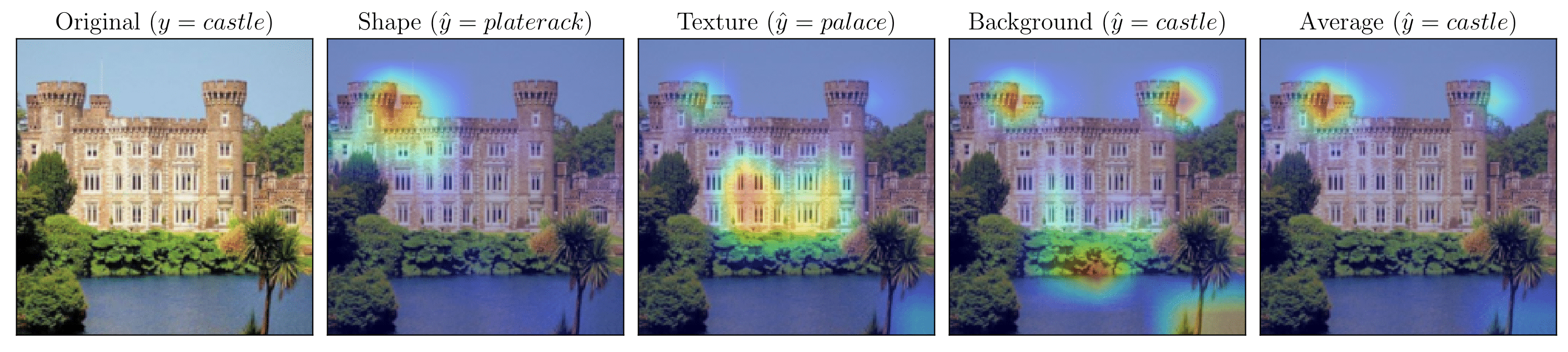

GradCAM-based heatmap visualized for shape, texture and background heads of CGN ensemble classifier for a sample image from ImageNet-mini. $y$ denotes the original label while $\hat{y}$ denotes the label predicted by each of the three heads.
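For readers unfamiliar with GradCAM: the heatmap for a target class weights each feature map $A^k$ of a chosen convolutional layer by the spatial mean of the class score's gradient with respect to that map, sums the weighted maps, and applies a ReLU. The plain-Python sketch below shows only this combination step. It assumes the activations and gradients have already been extracted (for example with framework hooks), and the helper name `gradcam_heatmap` is hypothetical, not part of this repository.

```python
from typing import List

Matrix = List[List[float]]  # one H x W feature map


def gradcam_heatmap(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Hypothetical helper: combine K feature maps A^k with their gradients dy/dA^k.

    alpha_k is the spatial mean of the gradient for map k, and the heatmap is
    ReLU(sum_k alpha_k * A^k).
    """
    height, width = len(activations[0]), len(activations[0][0])
    heatmap = [[0.0] * width for _ in range(height)]
    for fmap, grad in zip(activations, gradients):
        # Channel weight: global-average-pooled gradient of the class score.
        alpha = sum(sum(row) for row in grad) / (height * width)
        for i in range(height):
            for j in range(width):
                heatmap[i][j] += alpha * fmap[i][j]
    # ReLU keeps only regions that increase the class score.
    return [[max(0.0, v) for v in row] for row in heatmap]


# Toy example with two 2x2 feature maps (made-up values).
acts = [[[1.0, 0.0], [0.0, 1.0]], [[0.0, 2.0], [2.0, 0.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
heatmap = gradcam_heatmap(acts, grads)
```

To obtain one heatmap per head, as in the figure, this step would presumably be repeated with each head's own class score; the upsampling to image resolution and normalization usually applied for display are omitted here.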


Clone the repository:

```bash
git clone git@github.com:danilodegoede/Re-CGN.git
cd Re-CGN
```

Depending on whether you have a CPU or GPU machine, install the corresponding conda environment (the commands below are for a GPU machine):

```bash
conda env create --file cgn_framework/environment-gpu.yml
conda activate cgn-gpu
```

This repository includes a demo notebook that regenerates all the key reproducibility results presented in our paper. It also contains the code to download the datasets and model weights.

Start a JupyterLab session with `jupyter lab` and run the notebook `experiments/final-demo.ipynb`.

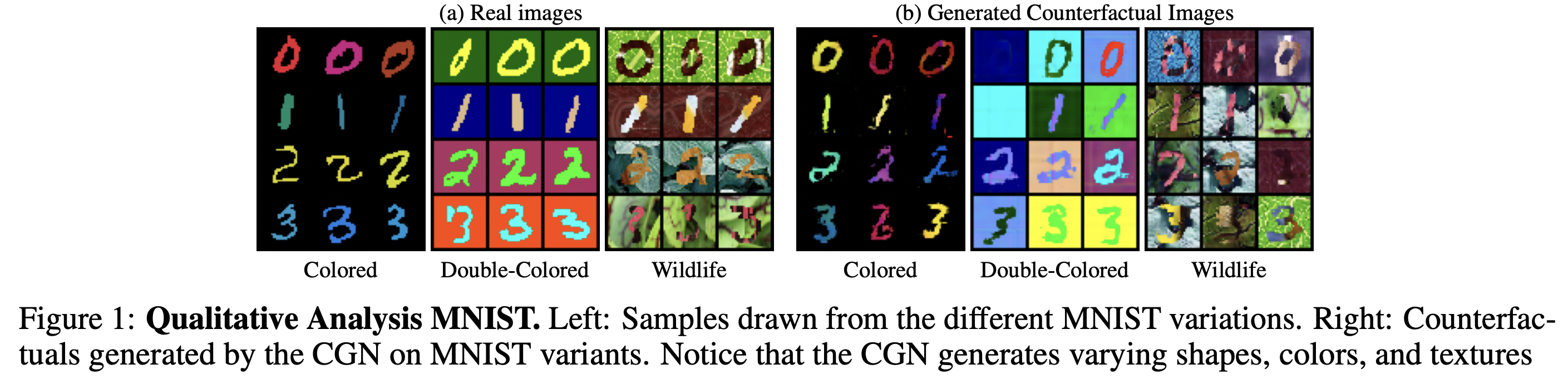

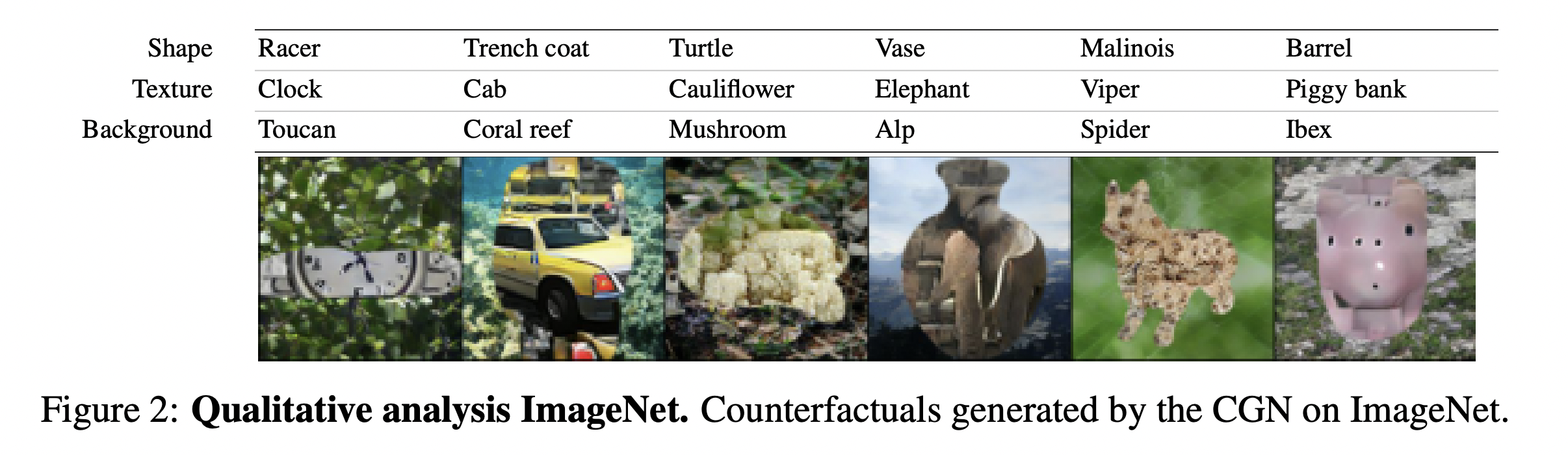

Claim 1: Generation of high-quality counterfactuals

We qualitatively evaluate the counterfactual samples generated by a CGN on variants of the MNIST and ImageNet datasets.

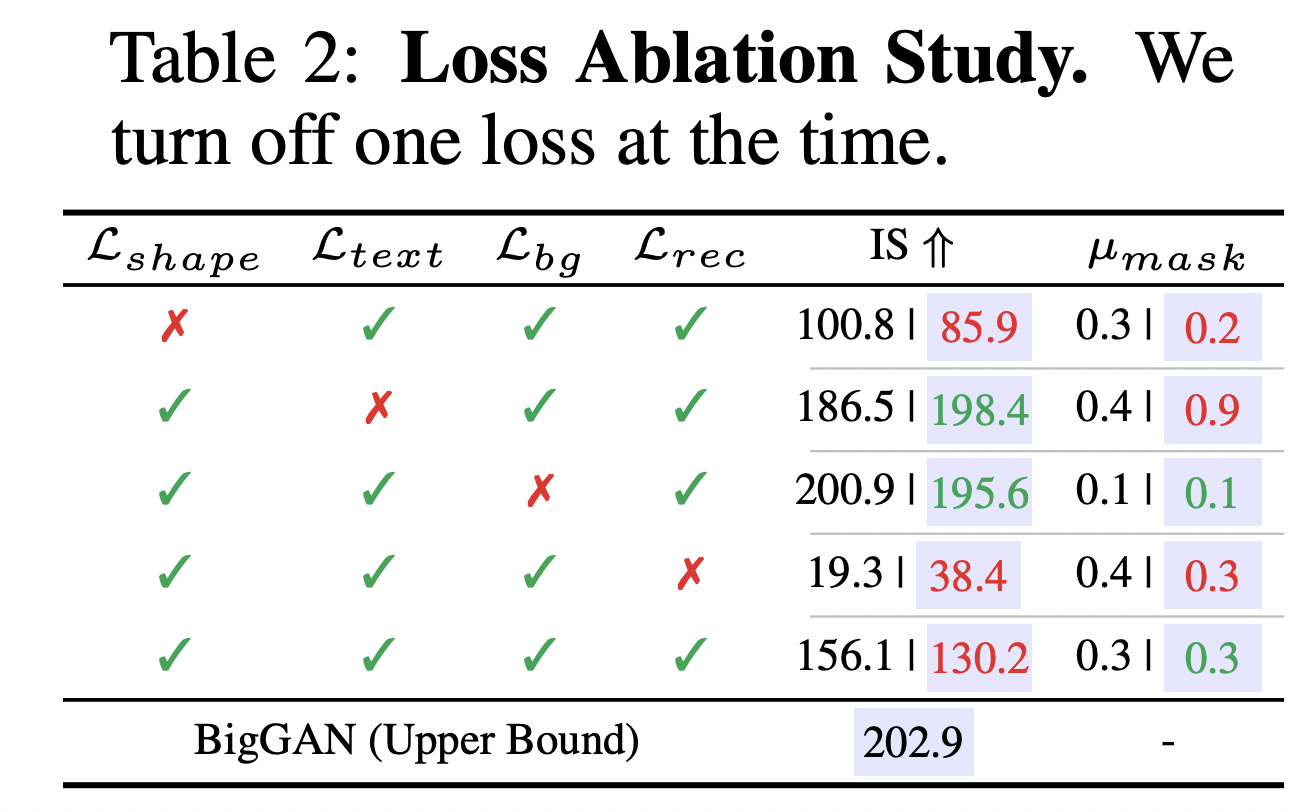
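In the original CGN paper, an image is produced by three independent mechanisms (a shape mask $m$, a foreground texture $f$ and a background $b$) that a composer combines as $x = m \odot f + (1 - m) \odot b$; a counterfactual is obtained by conditioning each mechanism on a different class. The plain-Python sketch below illustrates only this composition step on tiny single-channel images. The mechanism outputs are hand-made placeholders rather than outputs of trained networks, and the function name `compose` is hypothetical.

```python
from typing import List

Image = List[List[float]]  # single-channel H x W image with values in [0, 1]


def compose(mask: Image, foreground: Image, background: Image) -> Image:
    """Pixel-wise analytical composition x = m * f + (1 - m) * b."""
    return [
        [m * f + (1.0 - m) * b for m, f, b in zip(m_row, f_row, b_row)]
        for m_row, f_row, b_row in zip(mask, foreground, background)
    ]


# Placeholder mechanism outputs. In a CGN each would come from a
# class-conditional mechanism, e.g. shape of class A, texture of class B and
# background of class C, which together form a counterfactual image.
mask = [[0.0, 1.0], [1.0, 0.0]]
texture = [[0.9, 0.9], [0.9, 0.9]]
background = [[0.1, 0.2], [0.3, 0.4]]

counterfactual = compose(mask, texture, background)
print(counterfactual)
```

Because the mask is soft in $[0, 1]$, intermediate values blend foreground and background rather than switching hard between them.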

Claim 2: Inductive bias requirements

We replicate the loss-ablation experiment to verify whether all inductive biases (here, the individual loss terms) are indeed necessary for generating high-quality counterfactuals. Numbers in shaded cells (beside our reported numbers) are taken from the original paper.

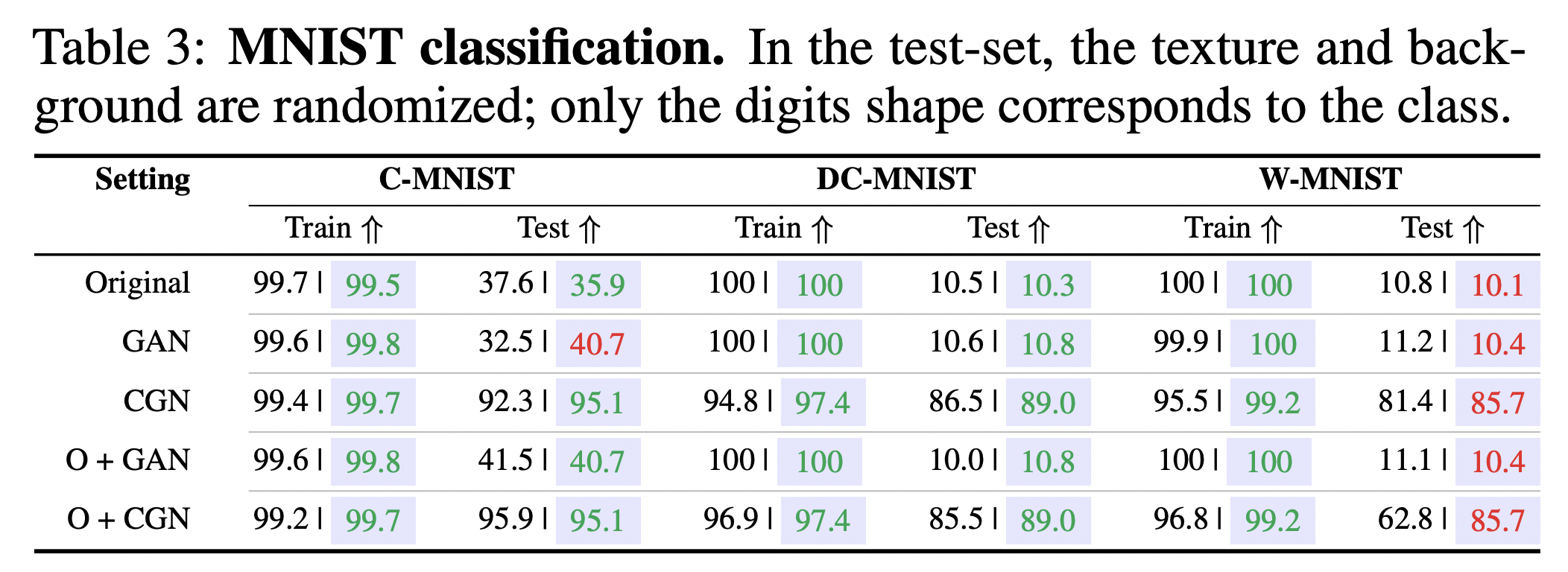
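A loss ablation of this kind can be organised by treating the objective as a weighted sum of loss terms and switching off one term per run. The sketch below is a minimal illustration under that assumption; the term names and unit weights in `BASE_WEIGHTS` are hypothetical placeholders, not the values used in the original paper or in this repository.

```python
from typing import Dict, List

# Hypothetical loss terms and weights, for illustration only.
BASE_WEIGHTS: Dict[str, float] = {"rec": 1.0, "shape": 1.0, "text": 1.0, "bg": 1.0}


def ablation_configs(base: Dict[str, float]) -> List[Dict[str, float]]:
    """Return the full objective plus one config per term with that term disabled."""
    configs = [dict(base)]
    for name in base:
        cfg = dict(base)
        cfg[name] = 0.0  # drop this inductive bias
        configs.append(cfg)
    return configs


def total_loss(terms: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of per-term loss values for one training step."""
    return sum(weights[name] * value for name, value in terms.items())


for cfg in ablation_configs(BASE_WEIGHTS):
    disabled = [name for name, w in cfg.items() if w == 0.0] or ["none"]
    print("disabled:", ", ".join(disabled))
```

Each configuration would then be used to train a separate generator, and the resulting counterfactuals compared against the full model.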

Claim 3: Out-of-distribution robustness

We evaluate how well classifiers trained with counterfactual samples generalize to out-of-distribution datasets.

For the MNIST variants, we evaluate on colored MNIST, double-colored MNIST and Wildlife MNIST.

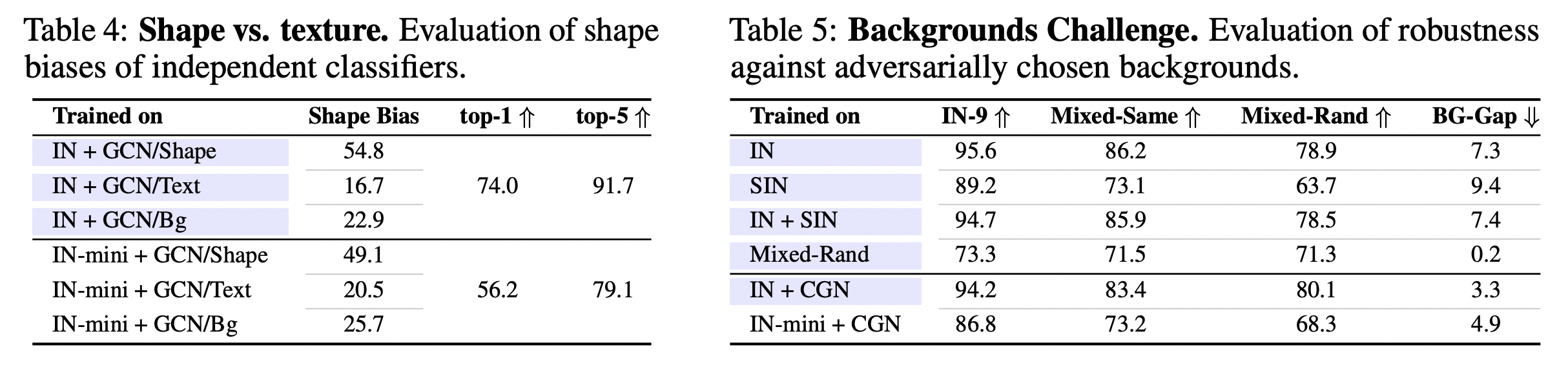

For ImageNet, due to resource constraints, we evaluate on ImageNet-mini. Following the original paper, we run two experiments: (i) evaluating the shape and texture bias of a counterfactually trained classifier (Table 4), and (ii) testing whether such a classifier is invariant to the background (Table 5).

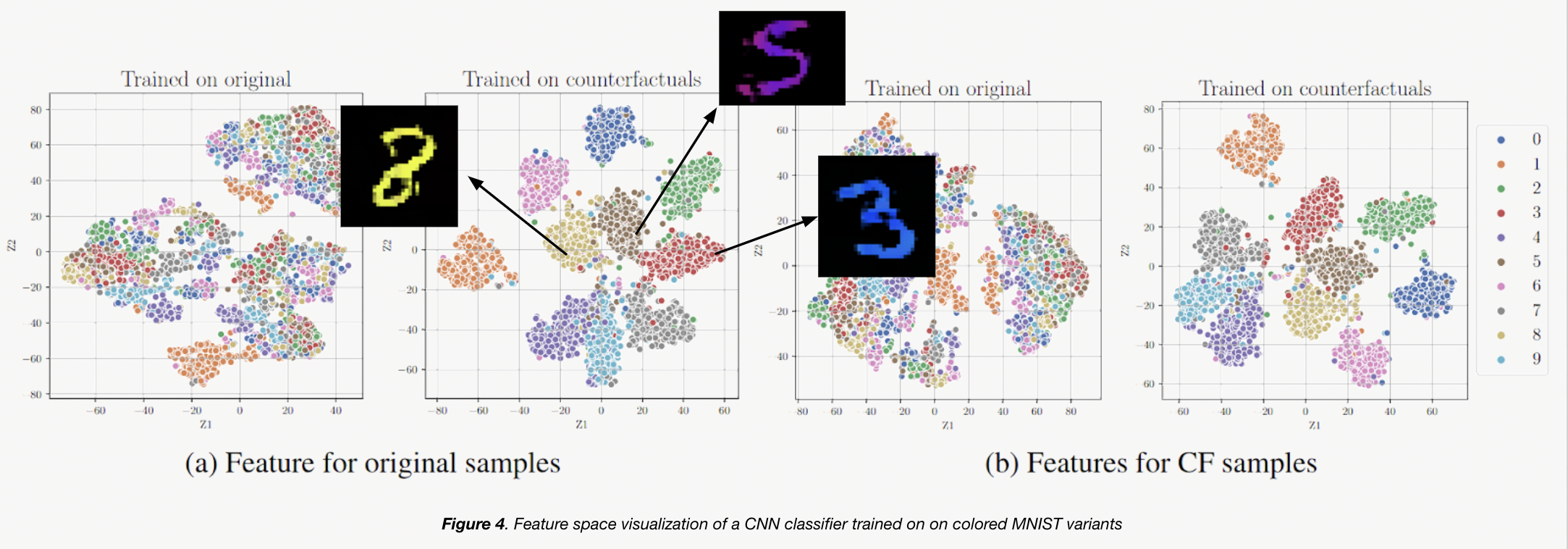
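Shape bias is commonly measured on cue-conflict images (Geirhos et al., 2019), in which shape and texture come from different classes: among the predictions that match either the shape class or the texture class, it is the fraction that match the shape class. The sketch below assumes Table 4 follows this standard definition; the function `shape_bias` and the toy labels are hypothetical.

```python
from typing import Sequence


def shape_bias(predictions: Sequence[str], shape_labels: Sequence[str],
               texture_labels: Sequence[str]) -> float:
    """Fraction of shape decisions among all shape-or-texture decisions.

    Each cue-conflict image has a shape class and a different texture class;
    predictions matching neither are ignored.
    """
    shape_hits = texture_hits = 0
    for pred, shape, texture in zip(predictions, shape_labels, texture_labels):
        if pred == shape:
            shape_hits += 1
        elif pred == texture:
            texture_hits += 1
    decided = shape_hits + texture_hits
    if decided == 0:
        raise ValueError("no prediction matched the shape or texture class")
    return shape_hits / decided


# Toy example: two shape decisions, one texture decision, one ignored.
preds = ["cat", "elephant", "cat", "car"]
shapes = ["cat", "cat", "cat", "dog"]
textures = ["elephant", "elephant", "clock", "bottle"]
bias = shape_bias(preds, shapes, textures)
```

Texture bias is then simply one minus the shape bias.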

We conduct additional experiments and analyses to further investigate the main claims of the paper. A sample feature visualization for a classifier trained on original data versus one trained on counterfactual data is shown below. Please refer to the paper for more details and further analyses of this kind.

Interestingly, digits 3, 5 and 8 are similar in shape, and their clusters also lie close together. This supports the intuition that the classifier ignores spurious correlations (digit color) and focuses on causal ones (digit shape).
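Beyond inspecting the plot, one simple way to quantify which digit clusters lie close together is to compare distances between per-class centroids of the features. The plain-Python sketch below does this on made-up 2-D points; it is an illustrative assumption, not the analysis used in the paper, and the helper names are hypothetical. If the plot is a nonlinear 2-D embedding, computing centroids on the original feature vectors is usually more reliable, since such embeddings need not preserve distances between clusters.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


def class_centroids(features: Sequence[Sequence[float]],
                    labels: Sequence[int]) -> Dict[int, List[float]]:
    """Mean feature vector per class label."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = defaultdict(int)
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        for i, v in enumerate(x):
            sums[y][i] += v
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def nearest_classes(centroids: Dict[int, List[float]],
                    label: int) -> List[Tuple[int, float]]:
    """Other classes sorted by Euclidean distance to the given class centroid."""
    c = centroids[label]
    dists = [(other, math.dist(c, oc)) for other, oc in centroids.items() if other != label]
    return sorted(dists, key=lambda t: t[1])


# Made-up 2-D features for digits 3, 5, 8 and 1.
feats = [(0, 0), (0, 2), (1, 1), (1, 1), (2, 0), (2, 2), (10, 10), (10, 12)]
labels = [3, 3, 5, 5, 8, 8, 1, 1]
cents = class_centroids(feats, labels)
print(nearest_classes(cents, 3))
```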


- Template source: https://github.com/paperswithcode/releasing-research-code

- The authors of the original CGN paper: Axel Sauer and Andreas Geiger

If you use this code or find it helpful, please consider citing our work:

```bibtex
@inproceedings{bagad2022reproducibility,
  title={Reproducibility Study of “Counterfactual Generative Networks”},
  author={Bagad, Piyush and Maas, Jesse and Hilders, Paul and de Goede, Danilo},
  booktitle={ML Reproducibility Challenge 2021 (Fall Edition)},
  year={2022}
}
```