A collaboration of Cesar Fuentes, Benjamin Durupt and Markus Strasser.

A library for interpreting neural networks in PyTorch, featuring all your favorites.


For the pros and cons of these techniques, see Feature Visualization.

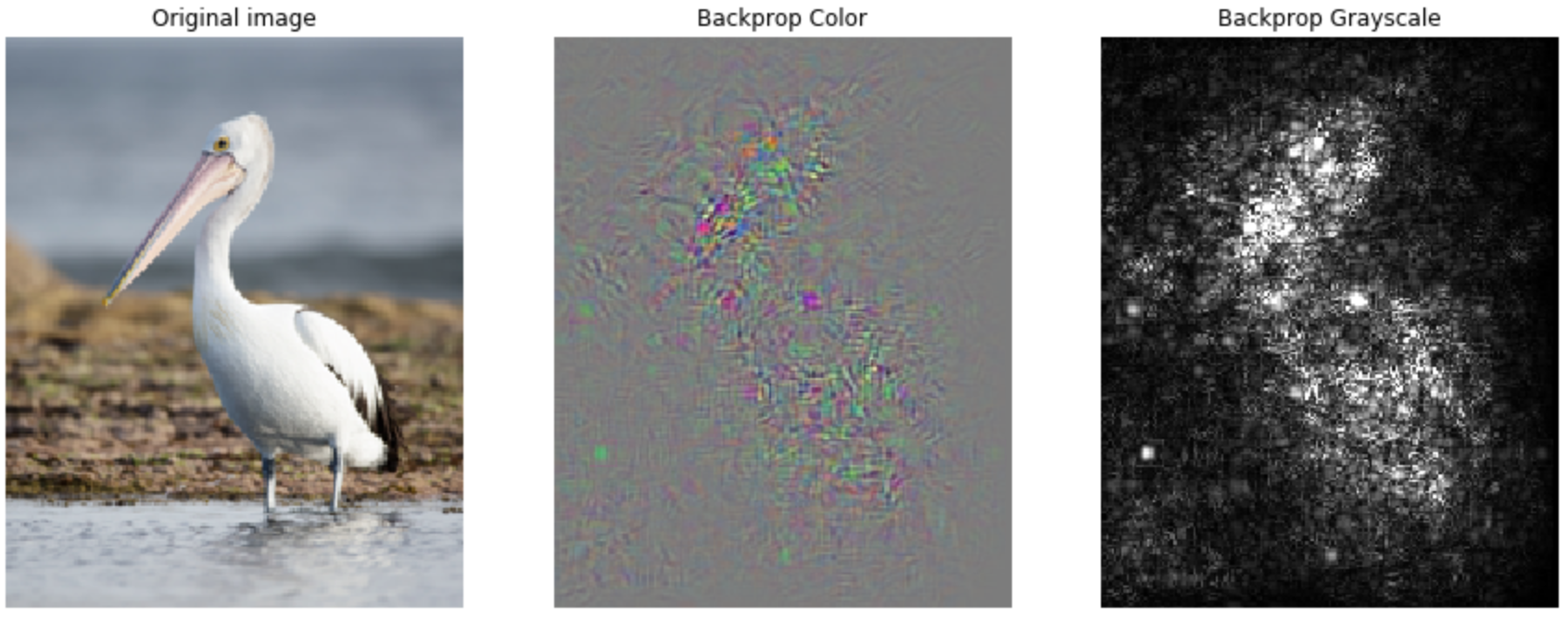

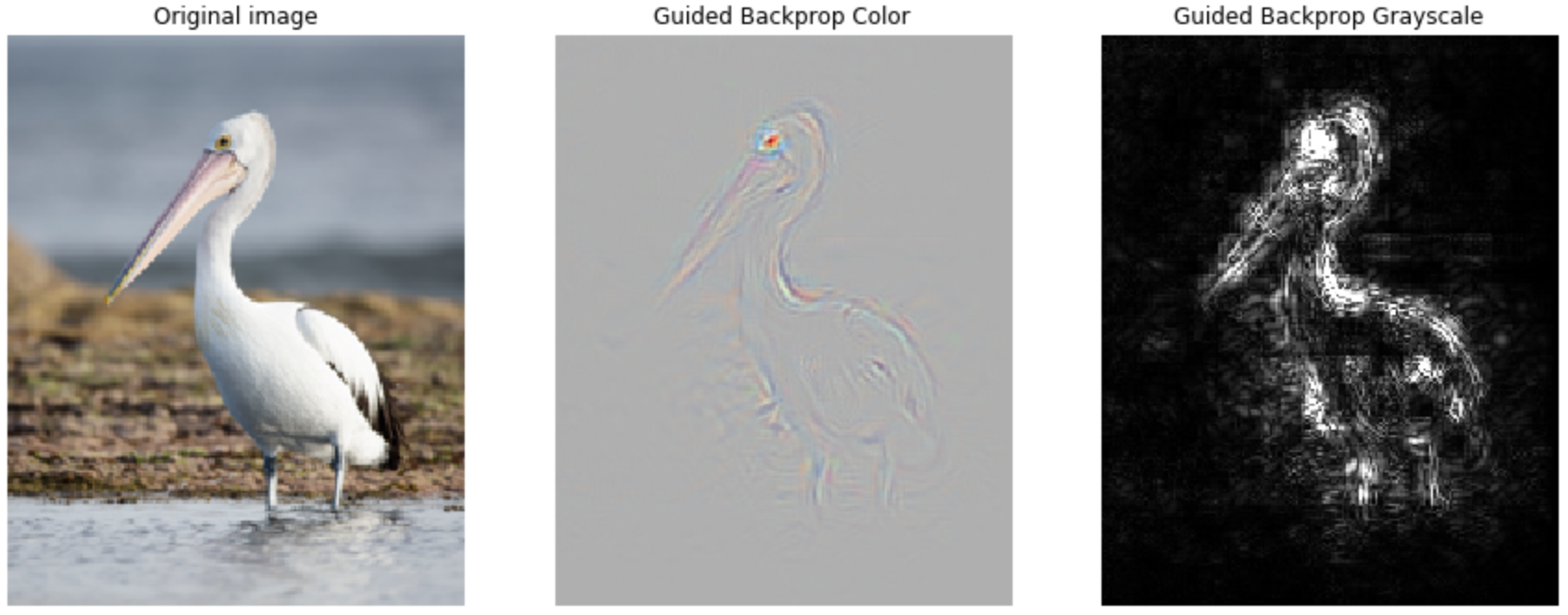

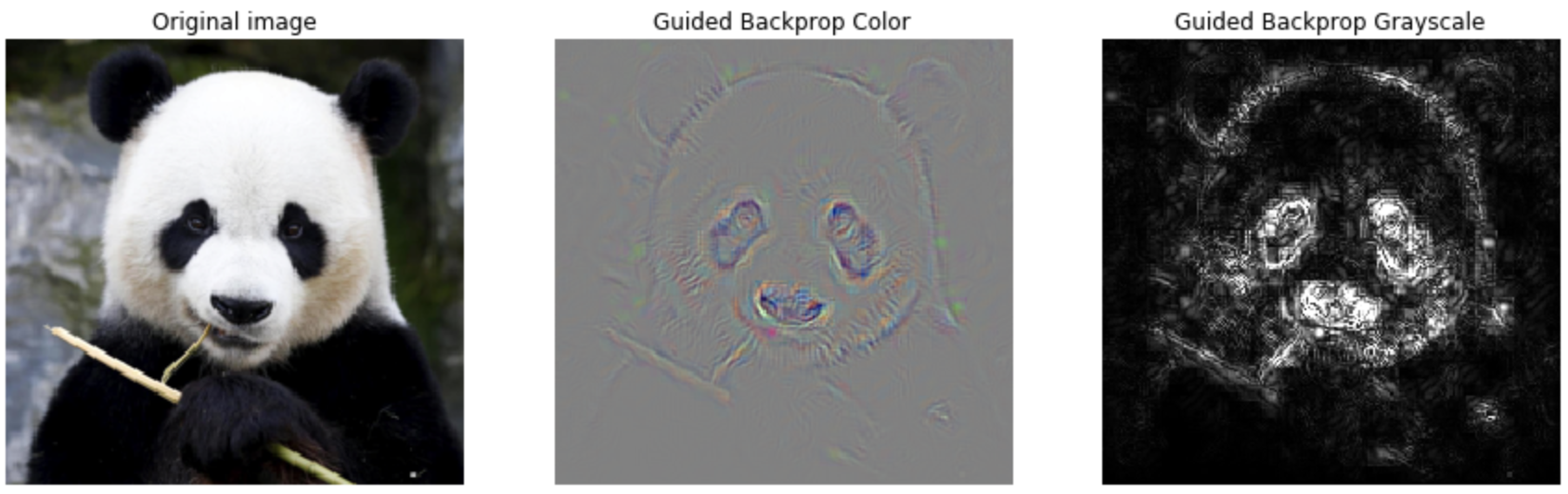

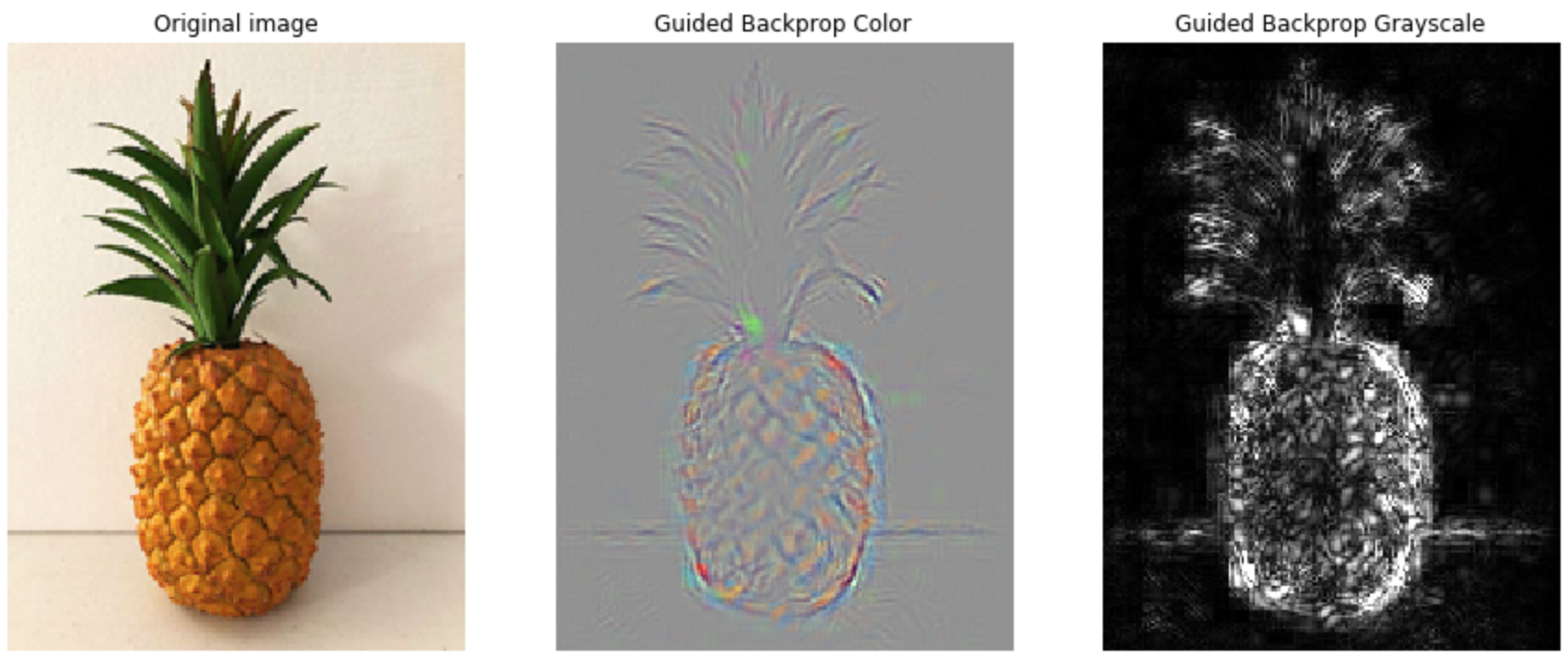

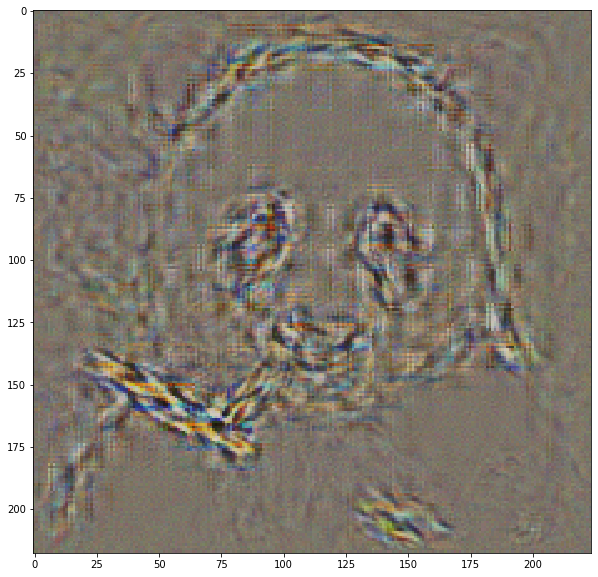

Idea: We get the relative importance of input features through calculating the gradient of the output prediction with respect to those input features

a) Given an input image, we perform the forward pass to the layer we are interested in, then set to zero all activations except one and propagate back to the image to get a reconstruction.

b) Different methods of propagating back through a ReLU nonlinearity.

c) Formal definition of different methods for propagating an output activation $f^{out}$ back through a ReLU unit in layer $l$.
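For reference, these are the standard ReLU backward rules as usually written for such figures (following Springenberg et al., *Striving for Simplicity: The All Convolutional Net*). This is not a transcription of the missing figure. Here $f^l_i$ is the input to the ReLU, $R^{l+1}_i = \partial f^{out} / \partial f^{l+1}_i$ is the signal arriving from above, and $\mathbb{1}[\cdot]$ is the indicator function.

$$
\begin{aligned}
\text{forward:}\quad & f^{l+1}_i = \mathrm{relu}(f^l_i) = \max(f^l_i, 0) \\
\text{backpropagation:}\quad & R^l_i = \mathbb{1}[f^l_i > 0]\, R^{l+1}_i \\
\text{deconvnet:}\quad & R^l_i = \mathbb{1}[R^{l+1}_i > 0]\, R^{l+1}_i \\
\text{guided backpropagation:}\quad & R^l_i = \mathbb{1}[f^l_i > 0]\,\mathbb{1}[R^{l+1}_i > 0]\, R^{l+1}_i
\end{aligned}
$$

Guided backpropagation therefore only passes gradient that is positive *and* flows through units that were active in the forward pass.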

Below are a few implementations you can try out:

Vanilla
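A minimal sketch of the vanilla technique in plain PyTorch, assuming a classifier that maps a batch of (C, H, W) images to class scores. `vanilla_saliency` and the tiny stand-in model are hypothetical illustrations, not the netlens API.

```python
import torch
import torch.nn as nn


def vanilla_saliency(model: nn.Module, image: torch.Tensor, target: int) -> torch.Tensor:
    """Gradient of the target class score with respect to the input pixels.

    `image` has shape (C, H, W); the returned gradient has the same shape.
    """
    model.eval()
    x = image.detach().unsqueeze(0).clone().requires_grad_(True)  # batch of one, track grads
    score = model(x)[0, target]  # scalar score of the class of interest
    model.zero_grad()
    score.backward()  # fills x.grad with d(score) / d(pixels)
    return x.grad[0].detach()


# Usage with a tiny stand-in classifier (hypothetical, for illustration only)
torch.manual_seed(0)
model = nn.Sequential(nn.Conv2d(3, 4, 3, padding=1), nn.ReLU(), nn.Flatten(), nn.Linear(4 * 8 * 8, 10))
image = torch.rand(3, 8, 8)
grad = vanilla_saliency(model, image, target=3)
saliency_map = grad.abs().max(dim=0).values  # (H, W): strongest absolute gradient across channels
```

Taking the absolute value and the maximum over color channels is one common way to turn the gradient into a single grayscale map.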

Guided

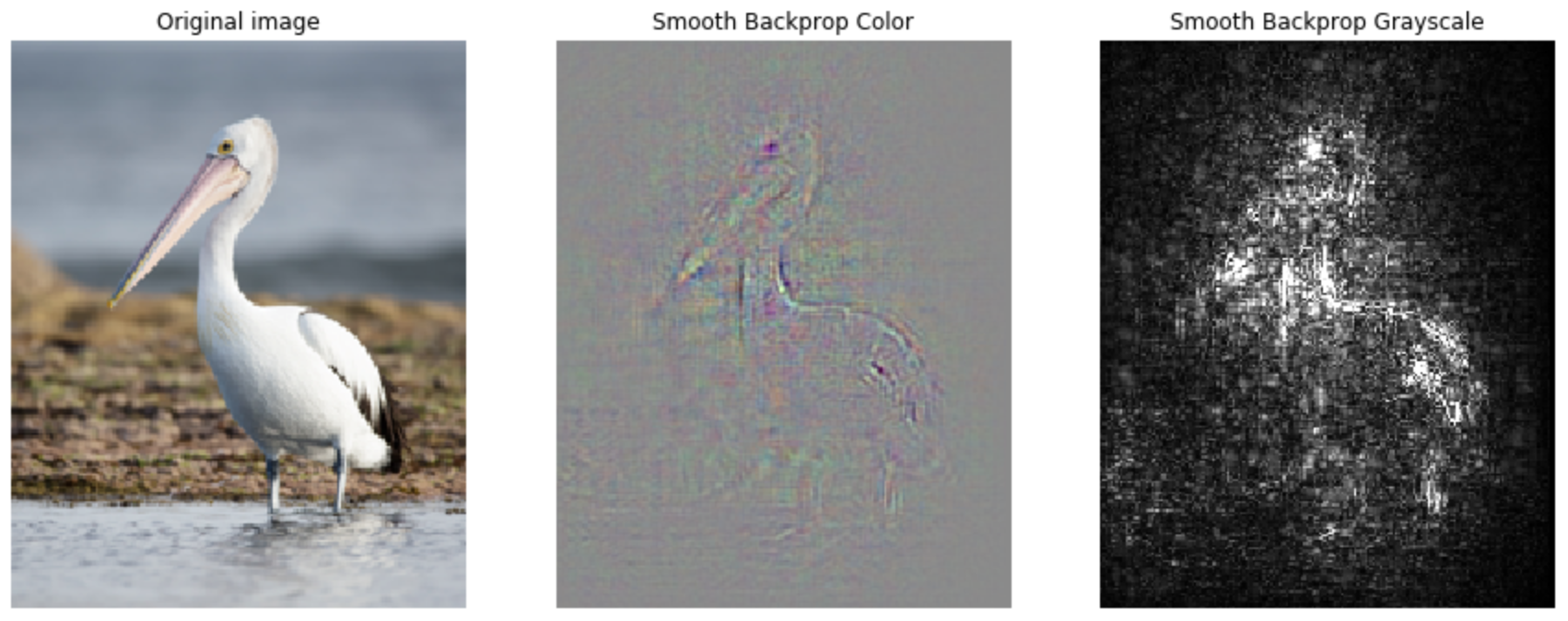
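Guided backpropagation can be sketched with PyTorch backward hooks on `nn.ReLU` modules: the normal ReLU gradient is clamped at zero, which yields the guided rule of passing only positive gradient through active units. This assumes the ReLUs are separate, non-in-place `nn.ReLU` modules (not `F.relu` calls); `guided_backprop` is a hypothetical helper, not the netlens implementation.

```python
import torch
import torch.nn as nn


def guided_backprop(model: nn.Module, image: torch.Tensor, target: int) -> torch.Tensor:
    """Guided backpropagation for one (C, H, W) image via full backward hooks on nn.ReLU modules."""

    def clamp_negative_grads(module, grad_input, grad_output):
        # grad_input[0] is already 1[f > 0] * grad_output (the normal ReLU backward);
        # clamping at zero additionally drops negative incoming gradient -> guided rule
        return (grad_input[0].clamp(min=0),)

    handles = [m.register_full_backward_hook(clamp_negative_grads)
               for m in model.modules() if isinstance(m, nn.ReLU)]
    try:
        model.eval()
        x = image.detach().unsqueeze(0).clone().requires_grad_(True)
        model.zero_grad()
        model(x)[0, target].backward()
        return x.grad[0].detach()
    finally:
        for handle in handles:  # always restore normal backpropagation
            handle.remove()


# Usage with a tiny stand-in classifier (hypothetical)
torch.manual_seed(0)
model = nn.Sequential(nn.Conv2d(3, 4, 3, padding=1), nn.ReLU(), nn.Conv2d(4, 4, 3, padding=1), nn.ReLU(),
                      nn.Flatten(), nn.Linear(4 * 8 * 8, 10))
guided = guided_backprop(model, torch.rand(3, 8, 8), target=1)
```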

Smooth BackProp Guided

Smooth BackProp Unguided

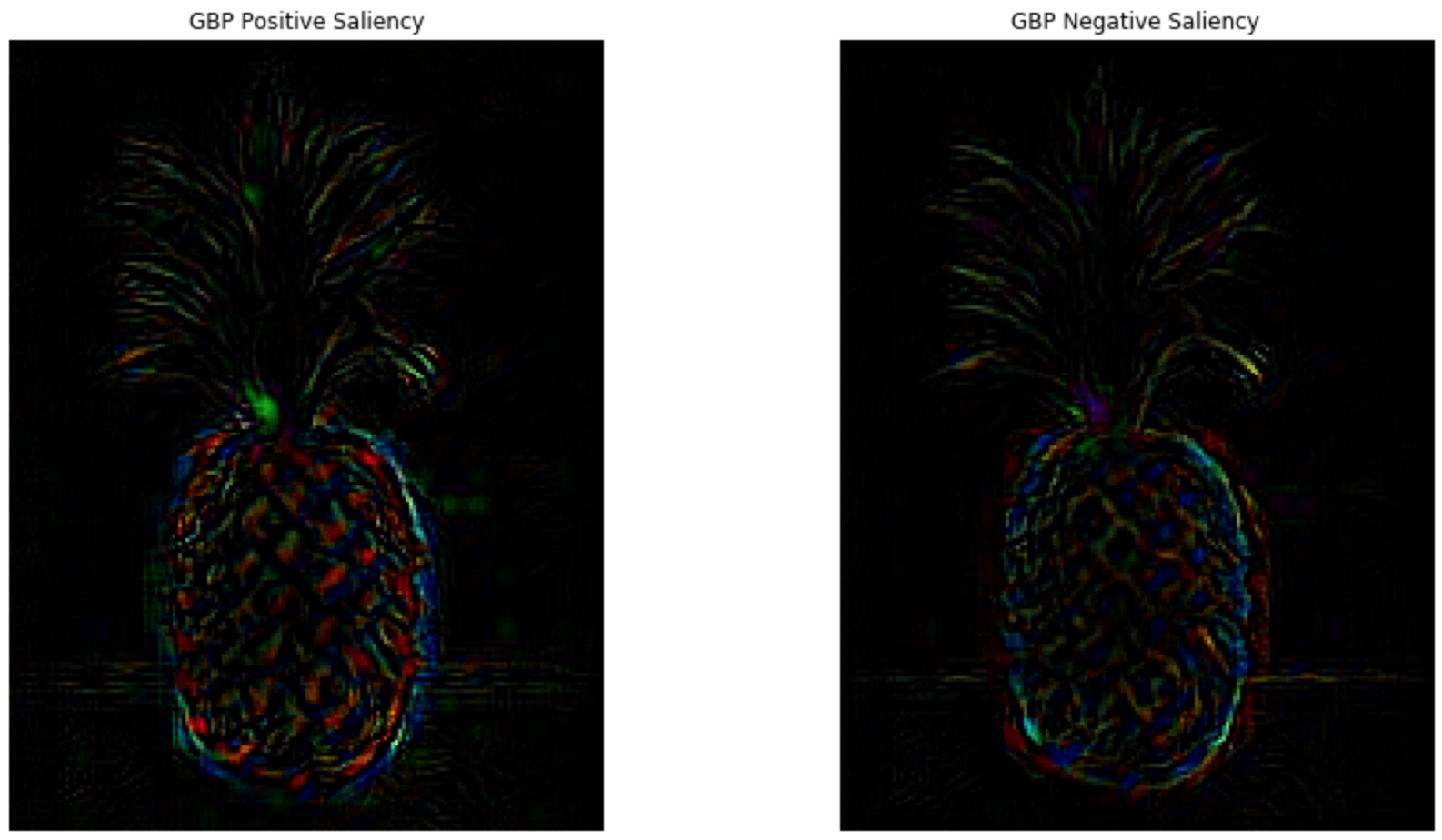

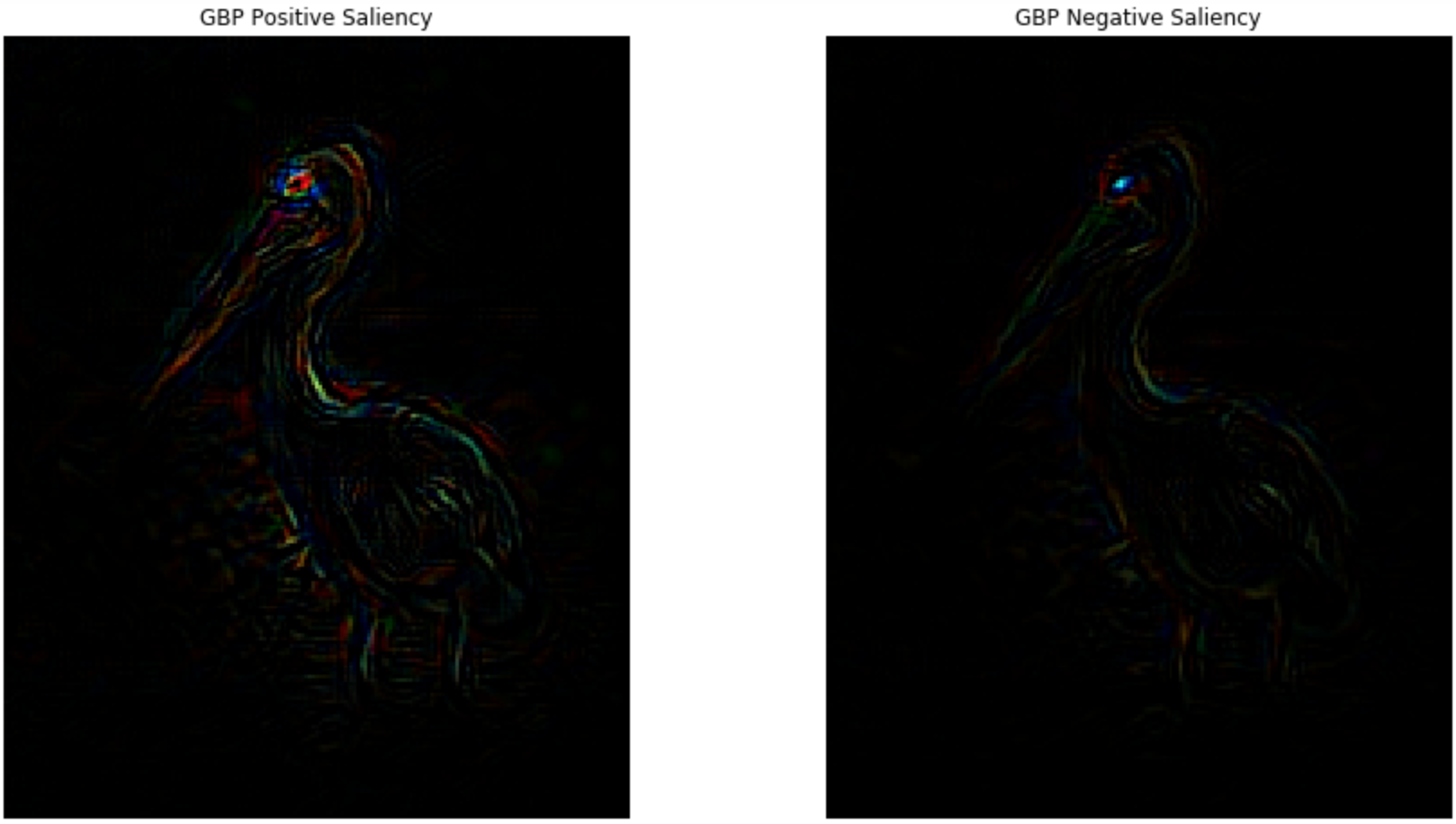
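The smooth variants average gradients over several noisy copies of the input (the SmoothGrad idea). A minimal sketch; `smooth_grad`, `plain_gradient`, `n_samples` and `noise_level` are hypothetical names and defaults. Passing a guided-backprop function as `grad_fn` gives the guided variant.

```python
import torch
import torch.nn as nn


def plain_gradient(model: nn.Module, image: torch.Tensor, target: int) -> torch.Tensor:
    """Input gradient of one class score for a single (C, H, W) image."""
    x = image.detach().unsqueeze(0).clone().requires_grad_(True)
    model.zero_grad()
    model(x)[0, target].backward()
    return x.grad[0].detach()


def smooth_grad(model: nn.Module, image: torch.Tensor, target: int,
                n_samples: int = 25, noise_level: float = 0.15, grad_fn=plain_gradient) -> torch.Tensor:
    """Average grad_fn(model, noisy_image, target) over Gaussian-perturbed copies of the image.

    noise_level is the noise standard deviation as a fraction of the image's value range.
    """
    model.eval()
    sigma = noise_level * (image.max() - image.min()).item()
    total = torch.zeros_like(image)
    for _ in range(n_samples):
        noisy = image + sigma * torch.randn_like(image)  # one noisy copy of the input
        total += grad_fn(model, noisy, target)
    return total / n_samples
```

For a purely linear model every noisy copy has the same gradient, so `smooth_grad` returns the plain gradient; the averaging only changes anything for nonlinear networks, where it suppresses noisy, high-frequency gradient patterns.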

Positive and Negative Saliency

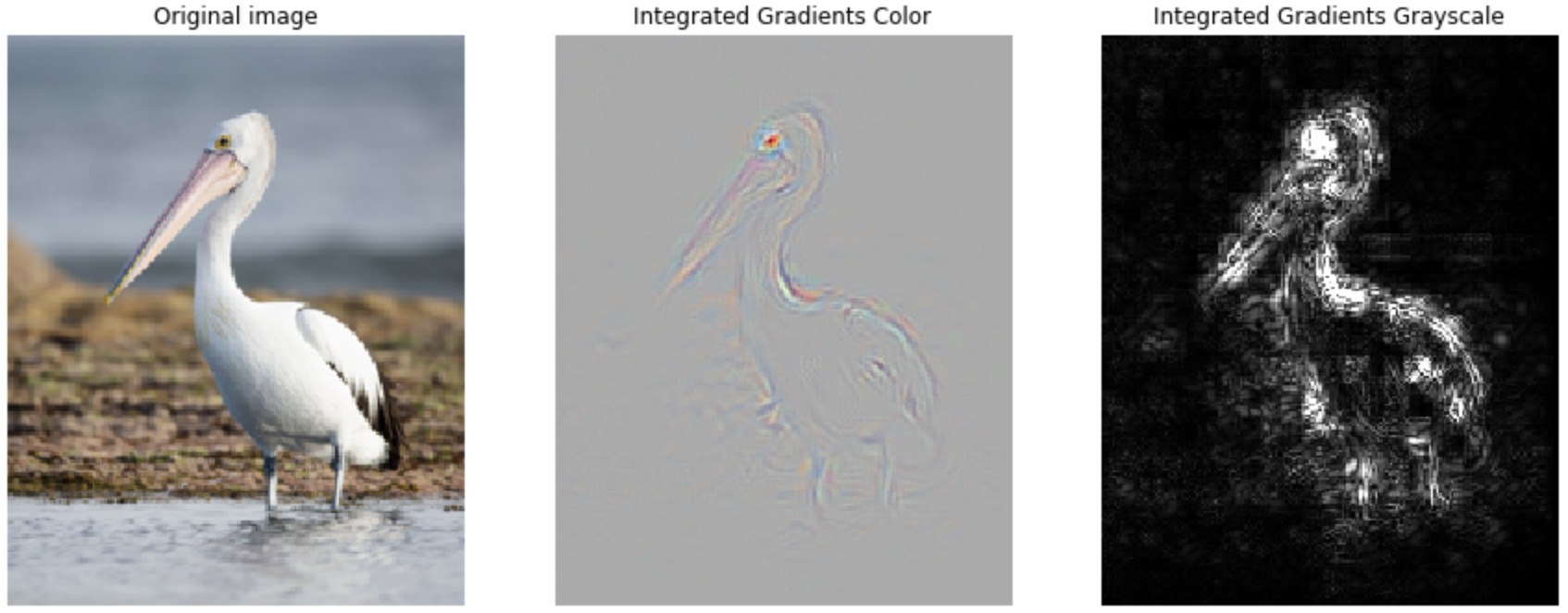
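Positive and negative saliency split one input gradient by sign. A sketch, assuming each map is simply rescaled by its own maximum (a hypothetical choice of normalization); `positive_negative_saliency` is not a netlens function.

```python
import torch


def positive_negative_saliency(grad: torch.Tensor):
    """Split an input gradient into positive and negative saliency maps, each scaled to [0, 1]."""
    pos = grad.clamp(min=0)     # pixels whose increase raises the class score
    neg = (-grad).clamp(min=0)  # pixels whose increase lowers the class score
    pos = pos / pos.max() if pos.max() > 0 else pos
    neg = neg / neg.max() if neg.max() > 0 else neg
    return pos, neg


pos, neg = positive_negative_saliency(torch.tensor([[0.5, -2.0], [1.0, 0.0]]))
# pos holds [[0.5, 0.0], [1.0, 0.0]], neg holds [[0.0, 1.0], [0.0, 0.0]]
```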

Integrated Gradients

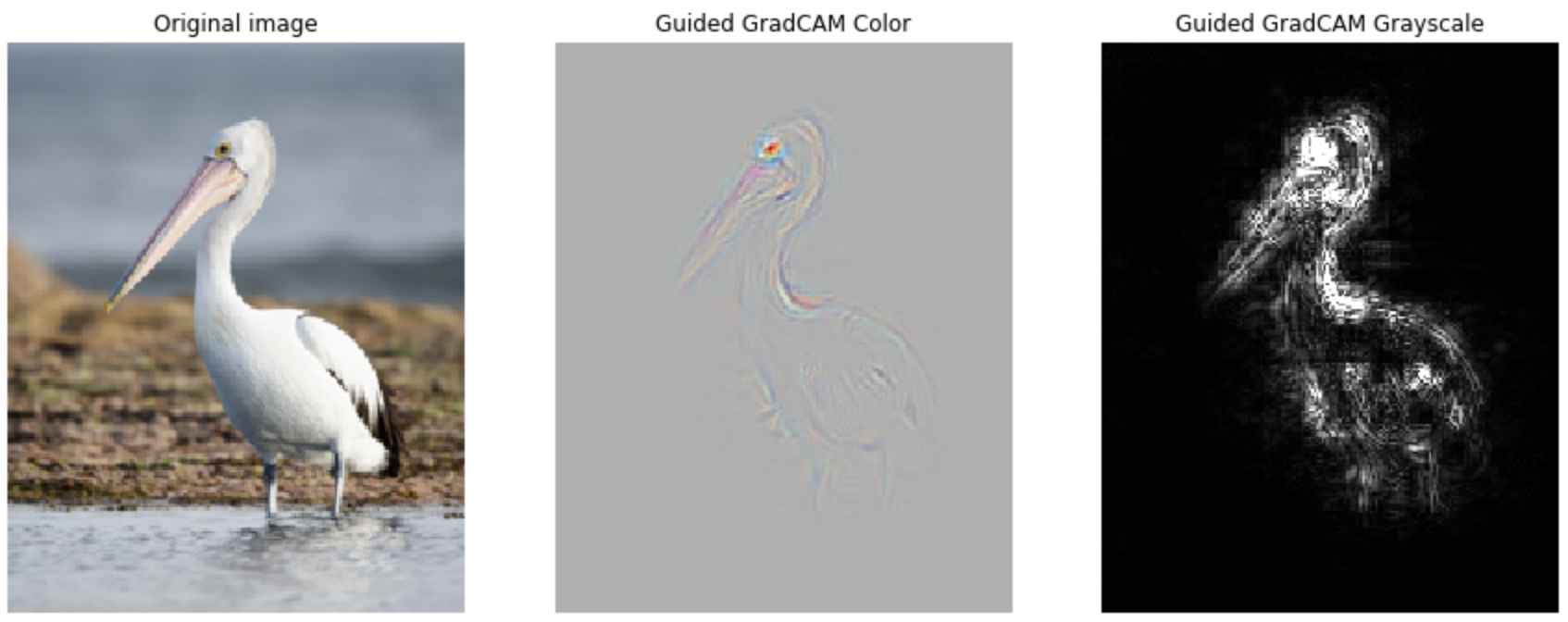
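Integrated Gradients averages the input gradient along a straight path from a baseline image to the actual image and multiplies the result by the input difference. A minimal sketch, assuming an all-zero baseline and a midpoint Riemann sum with a hypothetical default of 50 steps; all interpolated images go through the model as one batch. `integrated_gradients` is a hypothetical helper.

```python
from typing import Optional

import torch
import torch.nn as nn


def integrated_gradients(model: nn.Module, image: torch.Tensor, target: int,
                         baseline: Optional[torch.Tensor] = None, steps: int = 50) -> torch.Tensor:
    """Integrated Gradients for one (C, H, W) image, approximated with a midpoint Riemann sum.

    attribution = (image - baseline) * average gradient along the path baseline -> image.
    """
    model.eval()
    image = image.detach()
    if baseline is None:
        baseline = torch.zeros_like(image)  # assumed default: an all-black image
    alphas = (torch.arange(steps, dtype=image.dtype) + 0.5) / steps  # midpoints in (0, 1)
    # one batch holding all interpolated images: baseline + alpha * (image - baseline)
    path = baseline.unsqueeze(0) + alphas.view(-1, 1, 1, 1) * (image - baseline).unsqueeze(0)
    path.requires_grad_(True)
    model.zero_grad()
    # summing the per-image scores leaves each image's own gradient in path.grad
    model(path)[:, target].sum().backward()
    return (image - baseline) * path.grad.mean(dim=0)
```

For a linear model the attributions sum exactly to score(image) minus score(baseline) (the completeness property); for deep networks the sum matches only approximately, improving as `steps` grows.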

Guided GRADCAM

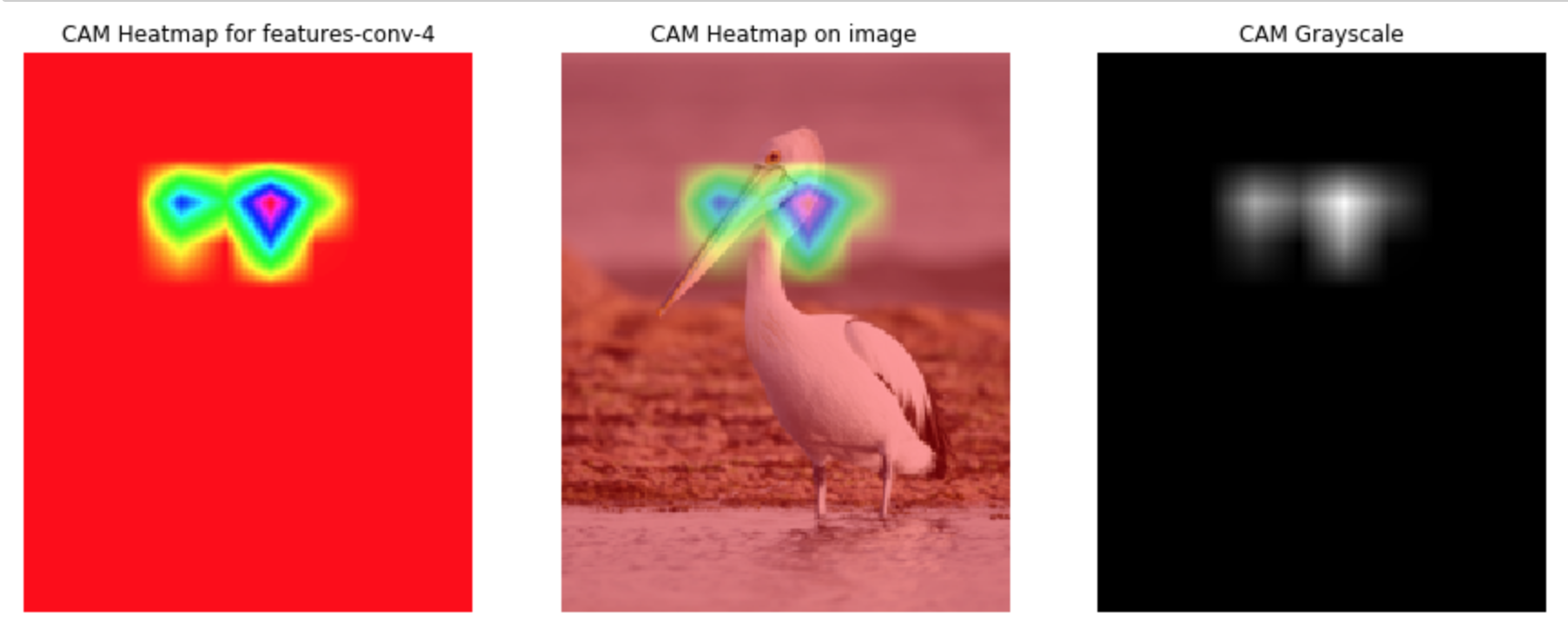

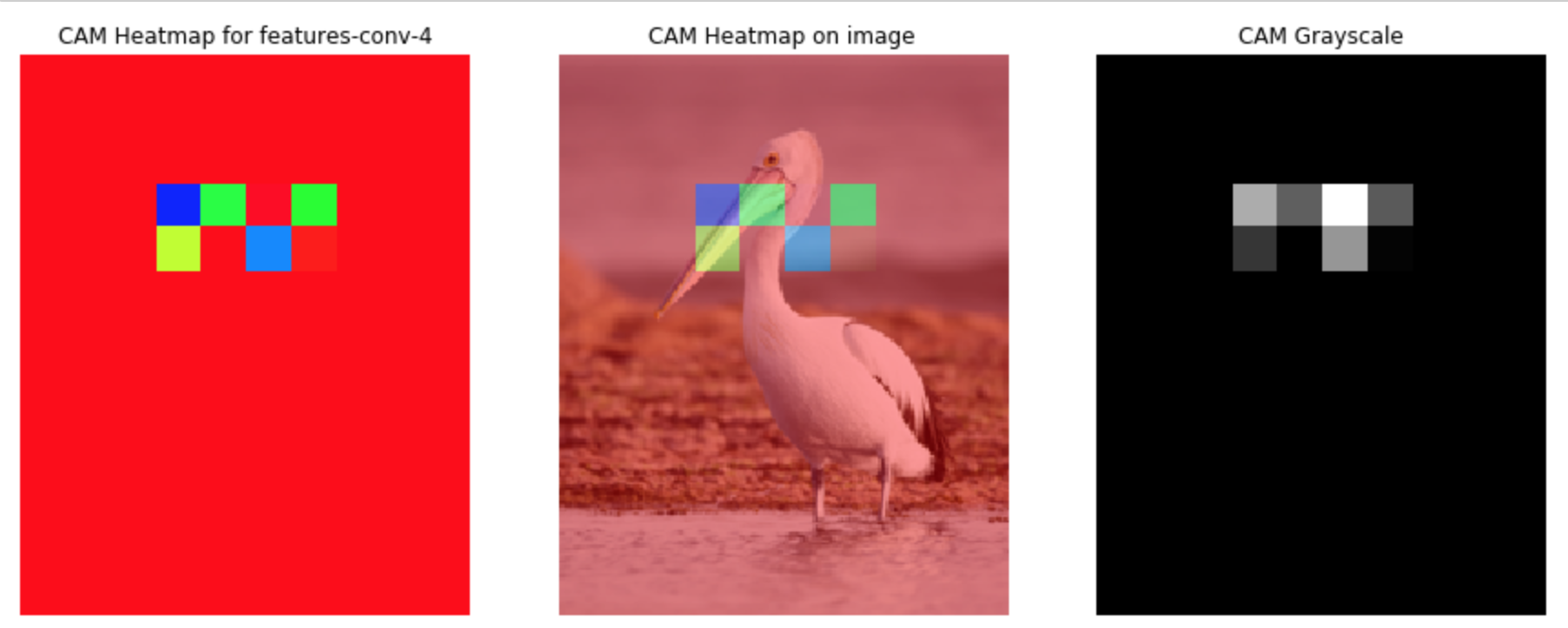

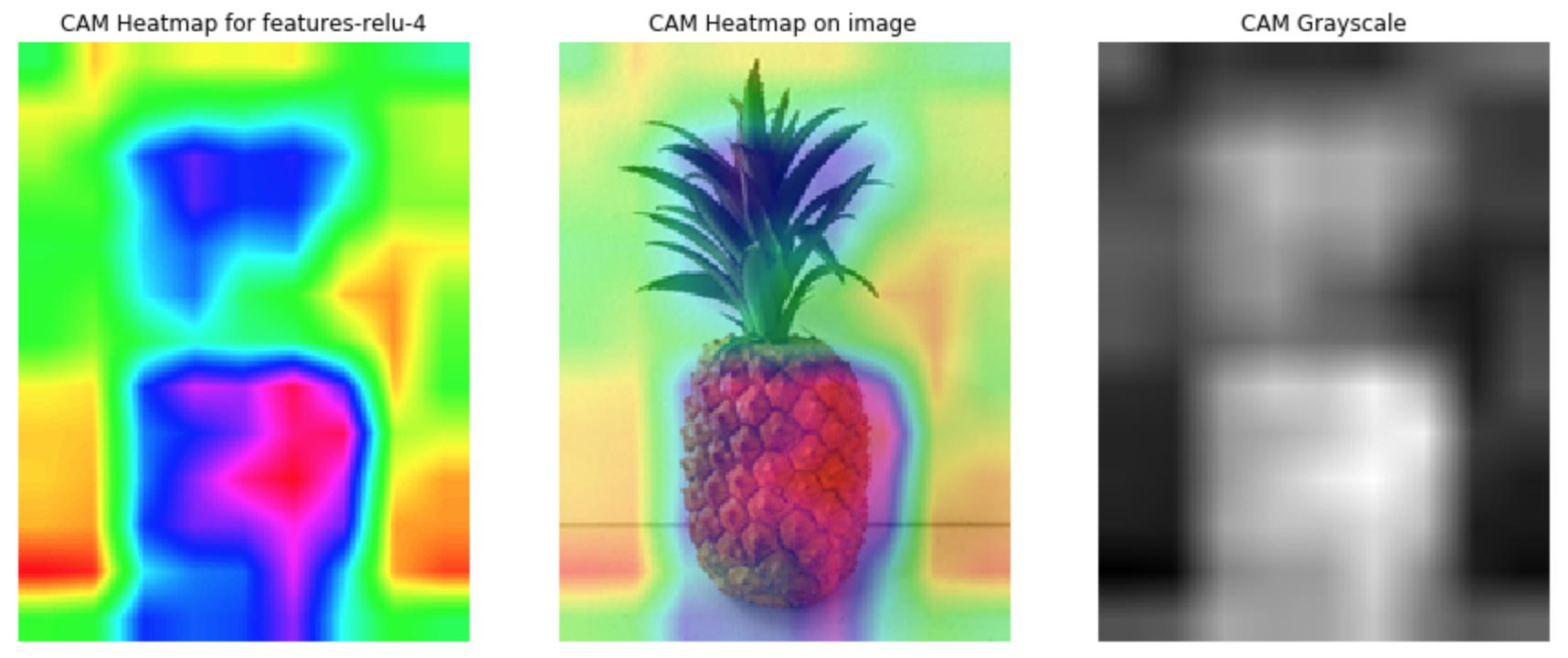

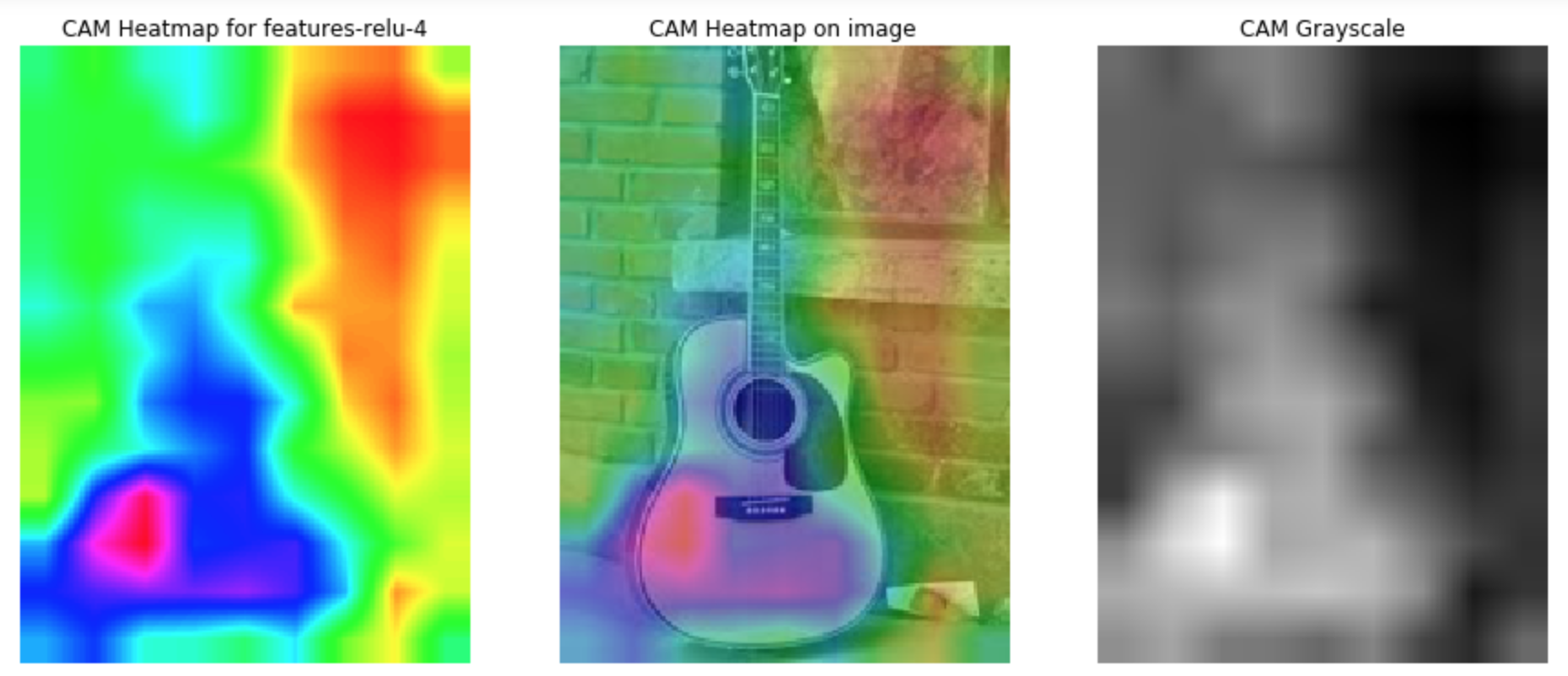
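Grad-CAM weights each channel of a chosen layer's activation by the spatial average of the class gradient, sums the weighted channels, keeps the positive part and upsamples the result to image size. A sketch using a forward hook plus a tensor hook; `grad_cam` is hypothetical and assumes `layer` outputs a (1, K, h, w) feature map.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def grad_cam(model: nn.Module, layer: nn.Module, image: torch.Tensor, target: int) -> torch.Tensor:
    """Grad-CAM heatmap (H, W) in [0, 1] for one (C, H, W) image, computed at `layer`'s output."""
    store = {}

    def save_activation(module, inputs, output):
        store["act"] = output
        # tensor hook: receives d(score) / d(output) during the backward pass
        output.register_hook(lambda grad: store.__setitem__("grad", grad))

    handle = layer.register_forward_hook(save_activation)
    try:
        model.eval()
        model.zero_grad()
        model(image.detach().unsqueeze(0))[0, target].backward()
    finally:
        handle.remove()
    act, grad = store["act"][0].detach(), store["grad"][0]  # both (K, h, w)
    weights = grad.mean(dim=(1, 2))                         # one weight per channel
    cam = F.relu((weights.view(-1, 1, 1) * act).sum(dim=0))  # keep positive evidence only
    cam = F.interpolate(cam[None, None], size=tuple(image.shape[1:]),
                        mode="bilinear", align_corners=False)[0, 0]
    return cam / cam.max() if cam.max() > 0 else cam
```

Guided Grad-CAM multiplies this heatmap pixel-wise with a guided backpropagation map; passing a different `layer`, such as an intermediate ReLU, gives the per-layer variant.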

**GRADCAM for specific features of a layer** (ReLU-4)

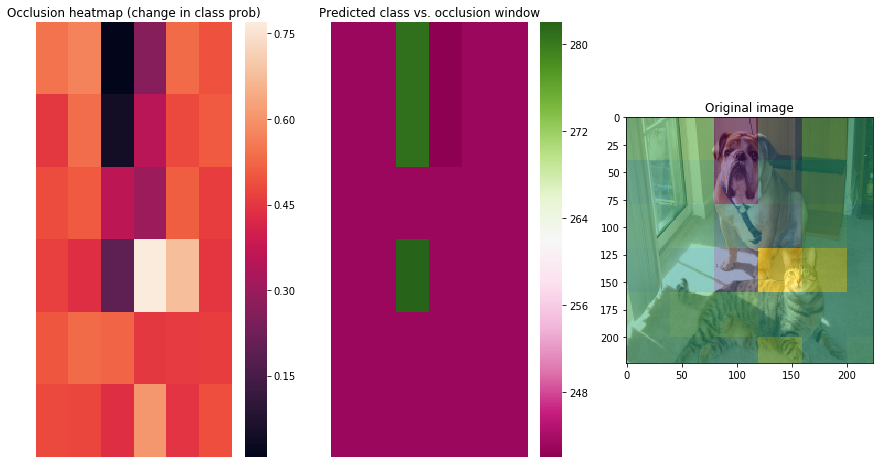

You only know how much you love (need) something once it's gone (occluded).

Imagine something occluded.
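Occlusion sensitivity turns that idea into a heatmap: slide a patch over the image and record how much the class score drops when each region is hidden. A minimal sketch; `occlusion_map`, the gray fill value and the patch/stride defaults are hypothetical choices, not netlens settings.

```python
import torch
import torch.nn as nn


@torch.no_grad()
def occlusion_map(model: nn.Module, image: torch.Tensor, target: int,
                  patch: int = 4, stride: int = 4, fill: float = 0.5) -> torch.Tensor:
    """Score drop for each patch position of a (C, H, W) image; large values mark important regions."""
    model.eval()
    _, H, W = image.shape
    base_score = model(image.unsqueeze(0))[0, target]
    rows = (H - patch) // stride + 1
    cols = (W - patch) // stride + 1
    heat = torch.zeros(rows, cols)
    for i in range(rows):
        for j in range(cols):
            occluded = image.clone()
            top, left = i * stride, j * stride
            occluded[:, top:top + patch, left:left + patch] = fill  # hide this region
            heat[i, j] = base_score - model(occluded.unsqueeze(0))[0, target]
    return heat
```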

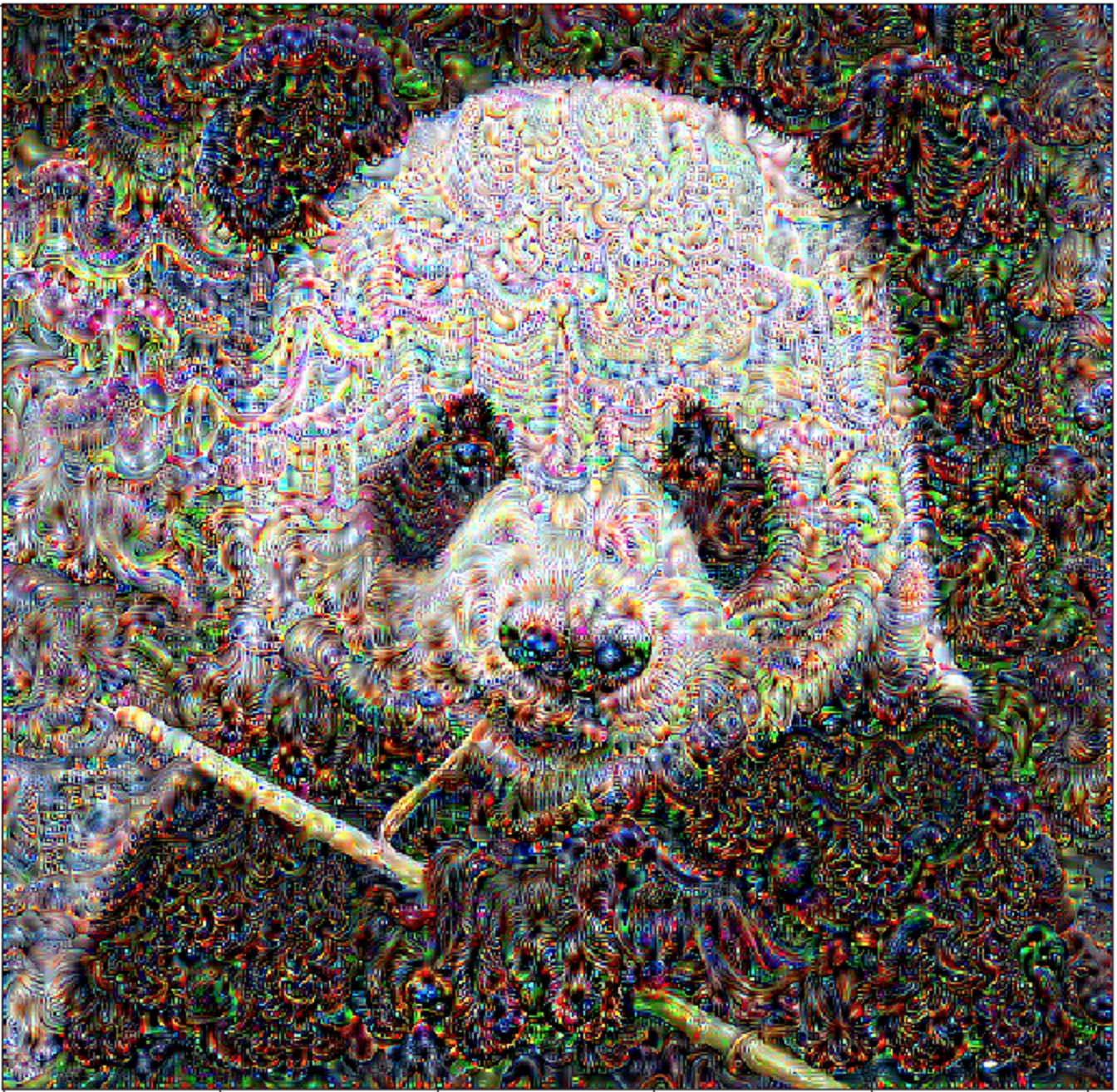

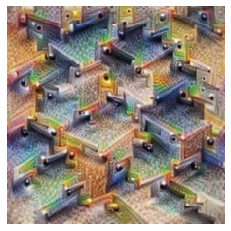

(NetDreamer) Generates an image that maximizes a layer's activations.

Optimization can give us an example input that causes the desired behavior. If we fix the "otter" class, we get an image optimized to activate that class as strongly as possible.
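A bare-bones version of this optimization, assuming raw pixels as the image parameterization and Adam with a small L2 penalty; `maximize_class` and its defaults are hypothetical. Raw-pixel optimization tends to produce noisy, high-frequency images, and better-looking results typically need a smarter image parameterization and regularization.

```python
import torch
import torch.nn as nn


def maximize_class(model: nn.Module, target: int, shape=(3, 64, 64),
                   steps: int = 200, lr: float = 0.05, l2: float = 1e-3) -> torch.Tensor:
    """Gradient ascent on raw pixels to maximize one class score; returns a (C, H, W) image."""
    model.eval()
    img = (0.1 * torch.randn(1, *shape)).requires_grad_(True)  # small random start
    opt = torch.optim.Adam([img], lr=lr)  # only the image is optimized, the weights are never stepped
    for _ in range(steps):
        opt.zero_grad()
        score = model(img)[0, target]
        loss = -score + l2 * img.pow(2).sum()  # maximize the score, penalize huge pixel values
        loss.backward()
        opt.step()
    return img.detach()[0]
```

With a pretrained ImageNet classifier, `target` would be the index of the "otter" class.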

Visualize what a detector (channel) is looking for.

| Some Channel | Some other Channel | Another Channel Still |
|---|---|---|
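For a channel instead of a class, the objective becomes the mean activation of one channel of a layer, captured with a forward hook. A sketch under the same raw-pixel assumption; `maximize_channel` is hypothetical.

```python
import torch
import torch.nn as nn


def maximize_channel(model: nn.Module, layer: nn.Module, channel: int, shape=(3, 64, 64),
                     steps: int = 200, lr: float = 0.05) -> torch.Tensor:
    """Optimize an input image so that one channel of `layer` fires as strongly as possible on average."""
    captured = {}
    # the forward hook stores the layer's output on every forward pass
    handle = layer.register_forward_hook(lambda m, inp, out: captured.__setitem__("out", out))
    img = (0.1 * torch.randn(1, *shape)).requires_grad_(True)
    opt = torch.optim.Adam([img], lr=lr)
    try:
        model.eval()
        for _ in range(steps):
            opt.zero_grad()
            model(img)
            loss = -captured["out"][0, channel].mean()  # maximize the channel's mean activation
            loss.backward()
            opt.step()
    finally:
        handle.remove()
    return img.detach()[0]
```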

Neural style transfer is an optimization technique that takes two images—a content image and a style reference image (often the artwork)—and blends them together so the output image looks like the content image, but “painted” in the style of the style reference image.

As an artist, your best motif is often your muse.

| Muse | Content | Style | Output |
|---|---|---|---|
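The losses behind style transfer can be sketched in the usual Gatys-style formulation: content is matched on raw feature maps, style on Gram matrices of channel correlations, both taken from chosen layers of a pretrained CNN. The function names, the Gram normalization and the unit default weights are assumptions for illustration, not netlens's `StyleTransferObjective`.

```python
from typing import List

import torch
import torch.nn.functional as F


def gram_matrix(features: torch.Tensor) -> torch.Tensor:
    """Channel-by-channel correlations of a (C, H, W) feature map, divided by its number of elements."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.t() / (c * h * w)


def style_transfer_loss(gen_style: List[torch.Tensor], style: List[torch.Tensor],
                        gen_content: List[torch.Tensor], content: List[torch.Tensor],
                        style_weight: float = 1.0, content_weight: float = 1.0) -> torch.Tensor:
    """Weighted sum of a style loss (Gram matrices) and a content loss (raw feature maps).

    Each list holds feature maps from the chosen style or content layers, computed for the
    generated image (gen_*) and for the style / content reference images.
    """
    style_loss = sum(F.mse_loss(gram_matrix(g), gram_matrix(s)) for g, s in zip(gen_style, style))
    content_loss = sum(F.mse_loss(g, c) for g, c in zip(gen_content, content))
    return style_weight * style_loss + content_weight * content_loss
```

The Gram matrix discards *where* features occur and keeps only *which* features co-occur, which is why it captures texture and brush strokes rather than layout. Optimizing the generated image's pixels to minimize this loss produces the blended output.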

BUG :( since the last architecture update:

- Unfortunately, to make class and channel visualization work, we overhauled how images get parameterized and optimized. This worked for the interpretability stuff, but messed up some part of the style transfer (possibly normalization, Fourier ladida, decorrelation...), which is very sad and hard to debug. You get the best results if you run the style transfer notebook from earlier Netlens versions.

```shell
pip install netlens
```

The standard image utils (convert, transform, reshape) were factored out into pyimgy.

FlatModel


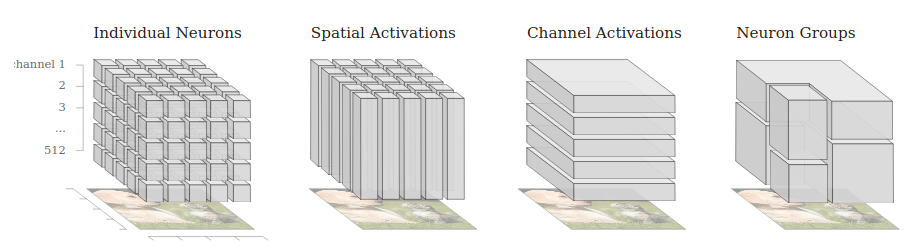

A neural network layer can be sliced up in many ways, for example into individual neurons, channels or spatial positions. You can view those slices as the semantic units of the layer / network.


PyTorch does not have a nice API for accessing layers and channels or for storing their gradients (input, weights, bias).

`FlatModel` gives a nicer wrapper that stores the forward activations and backward gradients in a consistent way.
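A rough sketch of the idea behind such a wrapper, assuming forward hooks for activations and full backward hooks for gradients on every leaf module. `ActivationRecorder` is a hypothetical class, not the `FlatModel` API.

```python
import torch
import torch.nn as nn


class ActivationRecorder:
    """Record each leaf module's forward output and the gradient arriving at that output."""

    def __init__(self, model: nn.Module):
        self.activations = {}
        self.gradients = {}
        self.handles = []
        for name, module in model.named_modules():
            if next(module.children(), None) is None:  # leaf layers only: Conv2d, ReLU, ...
                self.handles.append(module.register_forward_hook(self._forward_hook(name)))
                self.handles.append(module.register_full_backward_hook(self._backward_hook(name)))

    def _forward_hook(self, name):
        def hook(module, inputs, output):
            self.activations[name] = output.detach()
        return hook

    def _backward_hook(self, name):
        def hook(module, grad_input, grad_output):
            self.gradients[name] = grad_output[0].detach()  # d(loss) / d(module output)
        return hook

    def remove(self):
        """Detach all hooks so the model behaves normally again."""
        for handle in self.handles:
            handle.remove()
        self.handles.clear()
```

Keying everything by the module name from `named_modules()` gives one consistent lookup for activations and gradients, whatever the architecture.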

Netlens

- accesses the preprocessed `FlatModel` params to compute and display interpretations

Hooks

- abstraction and convenience for PyTorch's hook API (aka forward / backward pass callbacks)

NetDreamer

- filter visualizations: generating images from fixed architecture snapshots. Tripping out.

Optim, Param and Renderers

- general pipeline for optimizing images based on an objective with a given parameterization
- used as a specific case in StyleTransfer:

```python
from torch import optim

# StyleTransferModule, StyleTransferObjective, RawParam, OptVis and STCallback are netlens classes


def generate_style_transfer(module: StyleTransferModule, input_img, num_steps=300,
                            style_weight=1, content_weight=1, tv_weight=0, **kwargs):
    # create objective from the module and the weights
    objective = StyleTransferObjective(module, style_weight, content_weight, tv_weight)
    # the "parameterized" image is the image itself
    param_img = RawParam(input_img, cloned=True)
    render = OptVis(module, objective, optim=optim.LBFGS)
    # a single int becomes a one-element tuple of thresholds
    thresh = (num_steps,) if isinstance(num_steps, int) else num_steps
    return render.vis(param_img, thresh, in_closure=True, callback=STCallback(), **kwargs)
```

StyleTransfer, an Artist's Playground

- streamlined way to run StyleTransfer experiments, which is a special case of image optimization

- many variables to configure (loss functions, weighting, style and content layers that compute loss, etc.)

Adapter, because there aren't pure functional standards for Deep Learning yet


We tried to make Netlens work with multiple architectures (not just VGG or AlexNet).

Still, depending on how the architectures are implemented in the libraries, some techniques work only partially. For example, the hacky, non-functional, imperative implementations of ResNet or DenseNet in TensorFlow and PyTorch make it hard to do attribution or guided backprop (ReLU layers get mutated, nested blocks aren't pure functions, arbitrary flattening happens inside the forward pass, etc.).

`adapter.py` has nursery bindings to turn these special-needs cases into something the `FlatModel` class can work well with.
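One of those special needs, in-place ReLUs, can be handled by swapping modules before attaching hooks. A sketch; `replace_inplace_relus` is a hypothetical helper, not the contents of `adapter.py`. It does not help when a single ReLU module is reused several times inside `forward()`, as torchvision's ResNet blocks do; that case needs the forward pass itself rewritten.

```python
import torch.nn as nn


def replace_inplace_relus(module: nn.Module) -> nn.Module:
    """Recursively swap every nn.ReLU in `module` for a new nn.ReLU(inplace=False); returns `module`."""
    for name, child in list(module.named_children()):
        if isinstance(child, nn.ReLU):
            # in-place ops on hooked tensors break PyTorch's full backward hooks
            setattr(module, name, nn.ReLU(inplace=False))
        else:
            replace_inplace_relus(child)
    return module
```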

Composable, elegant code is still seen as un-pythonic. Python is easy and productive for small, solo, interactive things, and therefore the environment doesn't force users to become better programmers.

Since one-off, unmaintainable, imperative, throw-away code is hard to understand, and unfortunately the norm (even in major deep learning frameworks), we put extra effort into making the code clean, concise and well commented. That applies to /netlens, the main library; the example notebooks might be different.

Don't like it? PRs welcome.

Be the change you want to see in the world - Mahatma Gandhi

You like everything breaking when you refactor or try out new things? Exactly. That's why we added some tests as sanity checks. This makes it easier to tinker with the code base.

Run `pytest` or `pytest --disable-pytest-warnings` in the console.

All tests should be in the /tests folder. Imports there are written as if from the base directory. Test file names start with `test_`.
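A sanity check in that layout might look like the following. The file name and test are hypothetical and use only PyTorch, whereas real tests would import from the package as if from the base directory. pytest collects functions whose names start with `test_` from files named `test_*.py`.

```python
# tests/test_sanity.py (hypothetical example file)
import torch
import torch.nn as nn


def test_input_gradient_matches_input_shape():
    """Backprop from one class score must give a gradient for every input pixel."""
    torch.manual_seed(0)
    model = nn.Sequential(nn.Conv2d(3, 4, 3, padding=1), nn.ReLU(), nn.Flatten(), nn.Linear(4 * 8 * 8, 10))
    x = torch.rand(1, 3, 8, 8, requires_grad=True)
    model(x)[0, 3].backward()
    assert x.grad is not None
    assert x.grad.shape == x.shape
```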

If you use PyCharm, you can change your default test runner to pytest.

We came across cnn-visualizations and flashtorch before deciding to build this.

The codebase is largely written from scratch, since we wanted all the techniques to work with many models (most other projects could only work with VGG and/or AlexNet).

The optim, param, renderer abstraction is inspired by lucid.

Research: mostly Distill