Author: Kaicheng Yang, Jiankang Deng, Xiang An, Jiawei Li, Ziyong Feng, Jia Guo, Jing Yang, Tongliang Liu

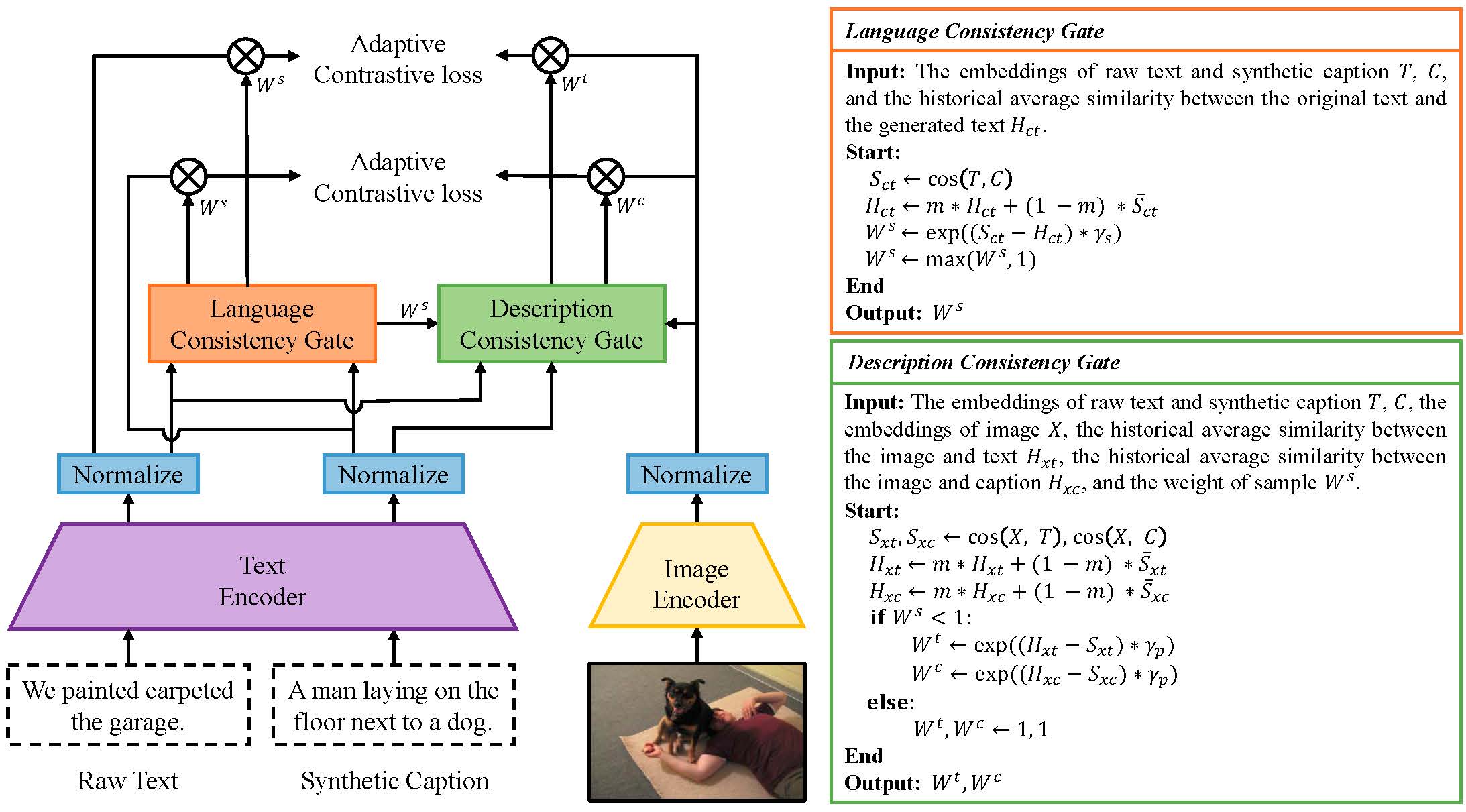

Adaptive Language-Image Pre-training (ALIP) is a bi-path model that integrates supervision from both raw text and synthetic captions. As the core components of ALIP, the Language Consistency Gate (LCG) and Description Consistency Gate (DCG) dynamically adjust the weights of samples and image-text/caption pairs during training. Meanwhile, the adaptive contrastive loss reduces the impact of noisy data and improves the efficiency of the pre-training data.
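To make the idea of per-sample weighting concrete, here is a minimal sketch of a weighted symmetric contrastive (InfoNCE-style) loss in plain Python. It is not the ALIP implementation: how LCG and DCG compute the weights is not reproduced, so `weights` is simply an input. The function name `weighted_contrastive_loss`, the temperature of 0.07, and the normalization by batch size are all assumptions made for illustration.

```python
import math


def _row_losses(logits):
    """Cross-entropy of each row against its diagonal (matching) entry."""
    losses = []
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max for numerical stability
        log_denom = m + math.log(sum(math.exp(x - m) for x in row))
        losses.append(log_denom - row[i])
    return losses


def weighted_contrastive_loss(sim, weights, temperature=0.07):
    """Weighted symmetric contrastive loss over one batch (illustrative only).

    sim[i][j] is the similarity between image i and text j; matching pairs
    sit on the diagonal. weights[i] scales the loss of pair i, so a pair
    judged noisy can be given a smaller weight.
    """
    n = len(sim)
    logits = [[s / temperature for s in row] for row in sim]
    logits_t = [list(col) for col in zip(*logits)]  # text-to-image direction
    i2t = _row_losses(logits)
    t2i = _row_losses(logits_t)
    per_pair = [0.5 * (a + b) for a, b in zip(i2t, t2i)]
    return sum(w * l for w, l in zip(weights, per_pair)) / n


# Toy batch: the second pair is poorly aligned, so it is down-weighted.
sim = [[0.9, 0.1], [0.2, 0.3]]
print(weighted_contrastive_loss(sim, [1.0, 1.0]))
print(weighted_contrastive_loss(sim, [1.0, 0.2]))
```

With a smaller weight on the poorly aligned pair, that pair contributes less to the batch loss, which is the general mechanism by which weighting can limit the influence of noisy samples.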

- (2023.8.15): ✨Code has been released❗️

- (2023.7.14): ✨Our paper has been accepted to ICCV 2023❗️


pip install -r requirments.txt

1. Download YFCC15M

The YFCC15M dataset we use is YFCC15M-DeCLIP, which we downloaded from the repo. In total, we successfully downloaded 15,061,515 image-text pairs.

2. Generate synthetic captions

In our paper, we use the OFA model to generate synthetic captions. You can download the model weights and scripts from the OFA project.

3. Generate rec files

To improve training efficiency, we use MXNet to save the YFCC15M dataset to rec files and NVIDIA DALI to accelerate data loading and pre-processing. Sample code for generating rec files is in data2rec.py.


You can download the pretrained model weights from Google Drive or BaiduYun, and you can find the training log in Google Drive or BaiduYun.


Start training by running:

bash scripts/train_yfcc15m_B32_ALIP.sh

Evaluate zero-shot cross-modal retrieval:

bash run_retrieval.sh

Evaluate zero-shot classification:

bash run_zeroshot.sh
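For readers unfamiliar with zero-shot classification, the following is a minimal sketch of the generic CLIP-style procedure: each class name is embedded as text, and an image is assigned to the class whose text embedding is most similar to the image embedding. It is not taken from run_zeroshot.sh; the function names and the toy 2-D embeddings are hypothetical, and a real run would use embeddings produced by the trained image and text encoders.

```python
import math


def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def zero_shot_predict(image_emb, class_embs):
    """Return the index of the class whose text embedding is most similar."""
    scores = [cosine(image_emb, c) for c in class_embs]
    return max(range(len(scores)), key=scores.__getitem__)


def top1_accuracy(image_embs, labels, class_embs):
    """Fraction of images whose predicted class matches the label."""
    correct = sum(
        zero_shot_predict(e, class_embs) == y
        for e, y in zip(image_embs, labels)
    )
    return correct / len(labels)


# Toy example: two classes and two images with hand-made embeddings.
class_embs = [[1.0, 0.0], [0.0, 1.0]]
image_embs = [[0.9, 0.1], [0.2, 0.8]]
print(top1_accuracy(image_embs, [0, 1], class_embs))
```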

| Method | Model | MSCOCO R@1 | MSCOCO R@5 | MSCOCO R@10 | Flickr30k R@1 | Flickr30k R@5 | Flickr30k R@10 |
|---|---|---|---|---|---|---|---|
| CLIP | B/32 | 20.8/13.0 | 43.9/31.7 | 55.7/42.7 | 34.9/23.4 | 63.9/47.2 | 75.9/58.9 |
| SLIP | B/32 | 27.7/18.2 | 52.6/39.2 | 63.9/51.0 | 47.8/32.3 | 76.5/58.7 | 85.9/68.8 |
| DeCLIP | B/32 | 28.3/18.4 | 53.2/39.6 | 64.5/51.4 | 51.4/34.3 | 80.2/60.3 | 88.9/70.7 |
| UniCLIP | B/32 | 32.0/20.2 | 57.7/43.2 | 69.2/54.4 | 52.3/34.8 | 81.6/62.0 | 89.0/72.0 |
| HiCLIP | B/32 | 34.2/20.6 | 60.3/43.8 | 70.9/55.3 | - | - | - |
| HiDeCLIP | B/32 | 38.7/23.9 | 64.4/48.2 | 74.8/60.1 | - | - | - |
| ALIP | B/32 | 46.8/29.3 | 72.4/54.4 | 81.8/65.4 | 70.5/48.9 | 91.9/75.1 | 95.7/82.9 |

| Method | Model | CIFAR10 | CIFAR100 | Food101 | Pets | Flowers | SUN397 | Cars | DTD | Caltech101 | Aircraft | Imagenet | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CLIP | B/32 | 63.7 | 33.2 | 34.6 | 20.1 | 50.1 | 35.7 | 2.6 | 15.5 | 59.9 | 1.2 | 32.8 | 31.8 |
| SLIP | B/32 | 50.7 | 25.5 | 33.3 | 23.5 | 49.0 | 34.7 | 2.8 | 14.4 | 59.9 | 1.7 | 34.3 | 30.0 |
| FILIP | B/32 | 65.5 | 33.5 | 43.1 | 24.1 | 52.7 | 50.7 | 3.3 | 24.3 | 68.8 | 3.2 | 39.5 | 37.2 |
| DeCLIP | B/32 | 66.7 | 38.7 | 52.5 | 33.8 | 60.8 | 50.3 | 3.8 | 27.7 | 74.7 | 2.1 | 43.2 | 41.3 |
| DeFILIP | B/32 | 70.1 | 46.8 | 54.5 | 40.3 | 63.7 | 52.4 | 4.6 | 30.2 | 75.0 | 3.3 | 45.0 | 44.2 |
| HiCLIP | B/32 | 74.1 | 46.0 | 51.2 | 37.8 | 60.9 | 50.6 | 4.5 | 23.1 | 67.4 | 3.6 | 40.5 | 41.8 |
| HiDeCLIP | B/32 | 65.1 | 39.4 | 56.3 | 43.6 | 64.1 | 55.4 | 5.4 | 34.0 | 77.0 | 4.6 | 45.9 | 44.6 |
| ALIP | B/32 | 83.8 | 51.9 | 45.4 | 30.7 | 54.8 | 47.8 | 3.4 | 23.2 | 74.1 | 2.7 | 40.3 | 41.7 |
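The R@1, R@5, and R@10 columns in the retrieval results are Recall@K scores. As a rough illustration of how such a metric is usually computed, the sketch below counts a query as a hit when at least one of its ground-truth matches appears among the K most similar candidates. The function name `recall_at_k` and the toy similarity matrix are hypothetical and not taken from run_retrieval.sh. The source does not label the two numbers in each table cell; they presumably correspond to the two retrieval directions, but that is an assumption.

```python
def recall_at_k(sim, gt, k):
    """Fraction of queries with a ground-truth match in the top-k results.

    sim[q][c] is the similarity between query q and candidate c.
    gt[q] is the set of candidate indices that are correct for query q.
    """
    hits = 0
    for q, row in enumerate(sim):
        # Rank candidate indices from most to least similar.
        ranked = sorted(range(len(row)), key=lambda c: row[c], reverse=True)
        if any(c in gt[q] for c in ranked[:k]):
            hits += 1
    return hits / len(sim)


# Toy example: three queries, three candidates, one correct match each.
sim = [
    [0.9, 0.1, 0.3],
    [0.2, 0.4, 0.8],
    [0.1, 0.7, 0.2],
]
gt = [{0}, {1}, {2}]
for k in (1, 2):
    print(k, recall_at_k(sim, gt, k))
```

Running the same function on the transposed similarity matrix, with ground truth mapped accordingly, gives the score for the opposite retrieval direction.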

This project is based on open_clip and OFA; thanks to the authors for their work.

This project is released under the MIT license. Please see the LICENSE file for more information.

If you find this repository useful, please use the following BibTeX entry for citation.

@misc{yang2023alip,
  title={ALIP: Adaptive Language-Image Pre-training with Synthetic Caption},
  author={Kaicheng Yang and Jiankang Deng and Xiang An and Jiawei Li and Ziyong Feng and Jia Guo and Jing Yang and Tongliang Liu},
  year={2023},
  eprint={2308.08428},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}