Supplementary materials for the paper "Wino-X: Multilingual Winograd Schemas for Commonsense Reasoning and Coreference Resolution" (Emelin and Sennrich, 2021)

The dataset is now also available on Hugging Face: https://huggingface.co/datasets/demelin/wino_x.

The full paper is available at https://aclanthology.org/2021.emnlp-main.670.pdf.

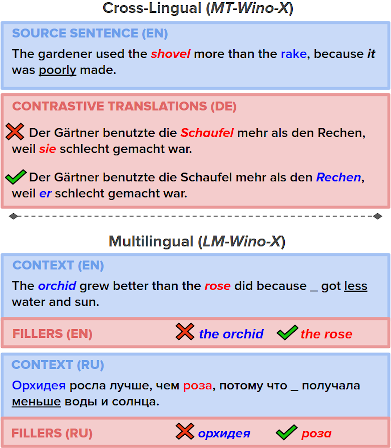

Abstract: Winograd schemas are a well-established tool for evaluating coreference resolution (CoR) and commonsense reasoning (CSR) capabilities of computational models. So far, schemas remained largely confined to English, limiting their utility in multilingual settings. This work presents Wino-X, a parallel dataset of German, French, and Russian schemas, aligned with their English counterparts. We use this resource to investigate whether neural machine translation (NMT) models can perform CoR that requires commonsense knowledge and whether multilingual language models (MLLMs) are capable of CSR across multiple languages. Our findings show Wino-X to be exceptionally challenging for NMT systems that are prone to undesirable biases and unable to detect disambiguating information. We quantify biases using established statistical methods and define ways to address both of these issues. We furthermore present evidence of active cross-lingual knowledge transfer in MLLMs, whereby fine-tuning models on English schemas yields CSR improvements in other languages.

To evaluate NMT models on MT-Wino-X:

- Train a fairseq NMT model using `model_training/train_transformer.sh`, or use a publicly available model checkpoint.
- Score the contrastive translations in the MT-Wino-X benchmark by running `model_evaluation/compute_nmt_perplexity.py` with the appropriate arguments.
- Compute model accuracy and supplementary metrics by running `model_evaluation/evaluate_accuracy_nmt.py` with the appropriate arguments.
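
The evaluation above is contrastive: a sample counts as solved when the model assigns a lower perplexity to the correct translation than to its contrastive counterpart. A minimal sketch of that comparison, assuming per-token natural-log probabilities for both candidate translations have already been obtained from the NMT model; `perplexity` and `contrastive_accuracy` are illustrative helpers, not functions from this repository:

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities."""
    if not token_logprobs:
        raise ValueError("need at least one token")
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def contrastive_accuracy(
    correct: Sequence[Sequence[float]],
    contrastive: Sequence[Sequence[float]],
) -> float:
    """Fraction of samples whose correct translation has strictly lower perplexity."""
    if len(correct) != len(contrastive):
        raise ValueError("score lists must be aligned sample by sample")
    wins = sum(
        perplexity(good) < perplexity(bad)
        for good, bad in zip(correct, contrastive)
    )
    return wins / len(correct)


# Toy log-probabilities for two samples (illustrative values only).
correct_scores = [[-0.1, -0.2, -0.3], [-1.0, -1.2]]
contrastive_scores = [[-0.1, -0.9, -0.3], [-0.5, -0.6]]
print(contrastive_accuracy(correct_scores, contrastive_scores))  # 0.5
```

Ties are counted as errors here; how the repository's scripts handle ties is not stated in this README.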

To evaluate multilingual language models on LM-Wino-X:

- Optionally fine-tune an MLM on a subset of LM-Wino-X using the `model_training/finetune_lm.sh` script.
- Score the contrastive translations in the LM-Wino-X benchmark and compute model accuracy by running `model_evaluation/compute_mlm_perplexity.py` with the appropriate arguments.
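
Scoring on LM-Wino-X can be pictured as filling the blank with each of the two candidate coreferents and preferring the completion the model finds less surprising. The sketch below assumes WinoGrande-style fields (`sentence` containing a `_` placeholder, `option1`, `option2`, and `answer` set to `"1"` or `"2"`), which may not match the actual file layout, and takes the scoring function as a parameter; `toy_score` is a hypothetical stand-in for an MLM-based perplexity score, not the repository's implementation.

```python
from typing import Callable, Dict, List

Sample = Dict[str, str]


def fill_blank(sentence: str, option: str) -> str:
    # Replace the first "_" placeholder with a candidate coreferent.
    return sentence.replace("_", option, 1)


def predict(sample: Sample, score: Callable[[str], float]) -> str:
    """Return "1" or "2": the option whose filled-in sentence scores lower."""
    s1 = score(fill_blank(sample["sentence"], sample["option1"]))
    s2 = score(fill_blank(sample["sentence"], sample["option2"]))
    return "1" if s1 <= s2 else "2"


def accuracy(samples: List[Sample], score: Callable[[str], float]) -> float:
    hits = sum(predict(s, score) == s["answer"] for s in samples)
    return hits / len(samples)


def toy_score(text: str) -> float:
    # Hypothetical stand-in for a perplexity-style score (lower is better).
    return 10.0 if "Pokal zu klein" in text else 1.0


sample = {
    "sentence": "Der Pokal passt nicht in den Koffer, weil _ zu klein ist.",
    "option1": "der Pokal",
    "option2": "der Koffer",
    "answer": "2",
}
print(predict(sample, toy_score))  # 2
print(accuracy([sample], toy_score))  # 1.0
```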

The Wino-X dataset is available at https://tinyurl.com/winox-data. It contains two directories, `mt_wino_x` and `lm_wino_x`, designed for the evaluation of NMT models and MLLMs, respectively. For a detailed breakdown of dataset statistics, refer to 📘 Section 2 and 📘 Appendix A.3 of the paper.

The required Python packages are listed in `requirements.txt`.

(:blue_book: See Section 2 of the paper.)

- `enforce_language_id.py`: Filters out lines from the parallel dataset that do not belong to the expected languages.
- `unpack_tilde_corpus.py`: Converts the RAPID corpus into a plain text format.
- `unpack_tsv.py`: Converts .tsv files into a plain text format.
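
The language filtering step can be sketched as keeping only those sentence pairs whose source and target sides are both identified as the expected languages. This README does not say which language identification tool `enforce_language_id.py` relies on, so the detector is passed in as a callable; `toy_detect` is a hypothetical placeholder for a real language identifier.

```python
from typing import Callable, Iterable, List, Tuple

Pair = Tuple[str, str]


def filter_by_language(
    pairs: Iterable[Pair],
    detect: Callable[[str], str],
    src_lang: str,
    tgt_lang: str,
) -> List[Pair]:
    """Keep (source, target) pairs whose sides match the expected languages."""
    return [
        (src, tgt)
        for src, tgt in pairs
        if detect(src) == src_lang and detect(tgt) == tgt_lang
    ]


def toy_detect(text: str) -> str:
    # Hypothetical detector: labels a line German if it contains "der"/"die"/"das".
    tokens = set(text.lower().replace(".", " ").split())
    return "de" if tokens & {"der", "die", "das"} else "en"


pairs = [
    ("The cat is old.", "Die Katze ist alt."),
    ("The dog barks.", "The dog barks."),  # untranslated target line
]
print(filter_by_language(pairs, toy_detect, "en", "de"))
```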

(:blue_book: See Section 2.2 of the paper.)

- `postprocess_translations.py`: Fixes apostrophes and tokenizes translations obtained from Google Translate.
- `translate_text.py`: Translates the input document into the specified target language via the Google Translate API.
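
One plausible source of broken apostrophes is that the Google Translate API returns HTML-escaped text by default, so an apostrophe can arrive as `&#39;`. A minimal sketch of the apostrophe fix, assuming the goal is to decode HTML entities and normalize typographic apostrophes to the ASCII `'`; the target character and the function name are assumptions, and tokenization is left out:

```python
import html


def fix_apostrophes(text: str) -> str:
    """Decode HTML entities and map typographic apostrophes to ASCII ones."""
    text = html.unescape(text)  # e.g. "&#39;" -> "'"
    # Right single quotation mark and modifier letter apostrophe.
    for quote in ("\u2019", "\u02bc"):
        text = text.replace(quote, "'")
    return text


print(fix_apostrophes("C&#39;est l\u2019hiver."))  # C'est l'hiver.
```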

(:blue_book: See Section 2 of the paper.)

- `annotate_winogrande.py`: Annotates WinoGrande samples with linguistic information (e.g. coreferent animacy, compounds containing coreferents) that can be used for subsequent filtering.
- `build_parallel_dataset.py`: Constructs the MT-Wino-X and LM-Wino-X datasets.
- `check_if_animate.py`: Automatically checks for WinoGrande samples that mention animate coreferents.
- `evaluate_animacy_results.py`: Displays WinoGrande samples that have been identified as mentioning animate coreferents, for manual evaluation.
- `filter_full_winogrande.py`: Removes test and development split samples from the full WinoGrande corpus.
- `preprocess_data_for_aligning.py`: Pre-processes parallel text files by converting them into the format expected by fast_align / awesome-align.
- `select_source_sentences.py`: Writes relevant WinoGrande samples to a file used to obtain silver target translations from Google Translate.
- `spacy_tag_map.py`: Contains spaCy dependency parsing tags.
- `split_challenge_sets.py`: Splits the challenge set into training, development, and test segments.
- `test_animacy.py`: Estimates whether the input item (a word or a phrase) is animate, based on language model perplexity of filled-out, pre-defined sentence templates.
- `util.py`: Various dataset construction helper functions.
- `learn_alignments.sh`: Bash script for learning alignments with awesome-align.
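
Both fast_align and awesome-align read one tokenized sentence pair per line in the form `source ||| target` and write alignments as space-separated `i-j` pairs (source index, target index). A minimal sketch of producing that input and reading the output back, for instance to see which target tokens a source pronoun is linked to; this lookup is an illustrative use, not necessarily the exact procedure used to build Wino-X, and the helper names are hypothetical:

```python
from typing import List, Set, Tuple


def to_align_format(src_tokens: List[str], tgt_tokens: List[str]) -> str:
    """One input line for fast_align / awesome-align: 'src ||| tgt'."""
    return f"{' '.join(src_tokens)} ||| {' '.join(tgt_tokens)}"


def parse_alignment(line: str) -> List[Tuple[int, int]]:
    """Parse an alignment line such as '0-0 1-2' into (src, tgt) index pairs."""
    pairs = []
    for item in line.split():
        i, j = item.split("-")
        pairs.append((int(i), int(j)))
    return pairs


def aligned_targets(alignment: List[Tuple[int, int]], src_index: int) -> Set[int]:
    # Target positions linked to one source position (e.g. the pronoun "it").
    return {j for i, j in alignment if i == src_index}


src = "it was too big".split()
tgt = "er war zu gross".split()
print(to_align_format(src, tgt))  # it was too big ||| er war zu gross
links = parse_alignment("0-0 1-1 2-2 3-3")
print([tgt[j] for j in sorted(aligned_targets(links, 0))])  # ['er']
```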

(:blue_book: See Sections 3 and 4 of the paper.)

- `average_checkpoints.py`: Merges multiple fairseq model checkpoints into a single one via parameter averaging.
- `fairseq_output_cleaner.py`: Cleans up the translations obtained from fairseq models (called by the relevant bash scripts).
- `finetune_lm.py`: Training script for fine-tuning MLMs (e.g. XLM-R) on LM-Wino-X and the WinoGrande dataset.
- `lm_utils.py`: Helper functions for fine-tuning LMs on the translation and coreference resolution tasks.
- `train_transformer.sh`: Trains / fine-tunes a Transformer NMT model (optionally with the pronoun penalty objective).
- `translate_transformer_base.sh`: Obtains translations from the specified NMT model.
- `translate_transformer_fair.sh`: Obtains translations from publicly available, pretrained FAIR NMT model checkpoints.
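
Checkpoint averaging, as performed by `average_checkpoints.py`, amounts to an element-wise mean over parameters that share a name across checkpoints. The sketch below uses plain Python lists instead of PyTorch tensors so that it runs without dependencies; it illustrates the technique and is not the repository's script.

```python
from typing import Dict, List

Params = Dict[str, List[float]]


def average_params(checkpoints: List[Params]) -> Params:
    """Element-wise mean of identically shaped parameter dictionaries."""
    if not checkpoints:
        raise ValueError("need at least one checkpoint")
    keys = checkpoints[0].keys()
    for ckpt in checkpoints[1:]:
        if ckpt.keys() != keys:
            raise ValueError("checkpoints have different parameter names")
    n = len(checkpoints)
    averaged: Params = {}
    for name in keys:
        if len({len(ckpt[name]) for ckpt in checkpoints}) != 1:
            raise ValueError(f"shape mismatch for parameter {name!r}")
        columns = zip(*(ckpt[name] for ckpt in checkpoints))
        averaged[name] = [sum(values) / n for values in columns]
    return averaged


ckpts = [
    {"encoder.w": [1.0, 2.0], "decoder.b": [0.0]},
    {"encoder.w": [3.0, 4.0], "decoder.b": [1.0]},
]
print(average_params(ckpts))  # {'encoder.w': [2.0, 3.0], 'decoder.b': [0.5]}
```

Averaging the last few checkpoints of a run is a common way to smooth out noise from the final optimization steps; which checkpoints the repository averages is not specified here.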

(:blue_book: See Sections 3 and 4 of the paper.)

- `check_pronoun_coverage.py`: Checks whether fine-tuning the NMT model on a subset of MT-Wino-X improves pronoun translation accuracy.
- `compute_nmt_perplexity.py`: Computes the perplexity the evaluated NMT model assigns to the contrastive MT-Wino-X translations.
- `estimate_pronoun_priors`: Counts occurrences of pronoun base forms per grammatical gender in the (Moses-tokenized) training data.
- `estimate_saliency_nmt.py`: Identifies source tokens that are salient to the corresponding target pronoun translation generated by NMT models.
- `eval_util.py`: Various evaluation helper functions.
- `evaluate_accuracy_mbart.py`: Computes mBART(50) accuracy on MT-Wino-X and estimates model preference w.r.t. coreferent gender and location.
- `evaluate_accuracy_mlm.py`: Computes MLM accuracy on LM-Wino-X and estimates model preference w.r.t. coreferent gender and location.
- `evaluate_accuracy_nmt.py`: Computes NMT model accuracy on MT-Wino-X given a list of model prediction scores, and estimates model preference w.r.t. coreferent gender and location.
- `translate_and_score_with_mbart50.py`: Translates a test set with mBART50 and computes the (Sacre)BLEU score of the translation against a reference.
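
Pronoun priors of the kind `estimate_pronoun_priors` computes can be sketched as counting gendered pronoun tokens in Moses-tokenized text (so whitespace splitting yields tokens) and normalizing the counts into relative frequencies. The French pronoun-to-gender table `FR_PRONOUN_GENDER` is a hypothetical example; the script's actual pronoun inventory and output format may differ.

```python
from collections import Counter
from typing import Dict, Iterable

# Hypothetical mapping from French subject pronouns to grammatical gender.
FR_PRONOUN_GENDER: Dict[str, str] = {
    "il": "masculine",
    "ils": "masculine",
    "elle": "feminine",
    "elles": "feminine",
}


def pronoun_priors(lines: Iterable[str], gender_of: Dict[str, str]) -> Dict[str, float]:
    """Relative frequency of each gender among pronoun tokens."""
    counts: Counter = Counter()
    for line in lines:
        for token in line.lower().split():
            gender = gender_of.get(token)
            if gender is not None:
                counts[gender] += 1
    total = sum(counts.values())
    return {g: c / total for g, c in counts.items()} if total else {}


corpus = [
    "il pleut .",
    "elle est partie , mais il reste .",
    "ils arrivent demain .",
]
print(pronoun_priors(corpus, FR_PRONOUN_GENDER))  # {'masculine': 0.75, 'feminine': 0.25}
```

A strongly skewed prior of this kind is one way to anticipate which pronoun an NMT model will default to when it fails to resolve the coreference.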

@inproceedings{emelin-sennrich-2021-wino,
  title = "Wino-{X}: Multilingual {W}inograd Schemas for Commonsense Reasoning and Coreference Resolution",
  author = "Emelin, Denis and
    Sennrich, Rico",
  booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",
  month = nov,
  year = "2021",
  address = "Online and Punta Cana, Dominican Republic",
  publisher = "Association for Computational Linguistics",
  url = "https://aclanthology.org/2021.emnlp-main.670",
  doi = "10.18653/v1/2021.emnlp-main.670",
  pages = "8517--8532",
  abstract = "Winograd schemas are a well-established tool for evaluating coreference resolution (CoR) and commonsense reasoning (CSR) capabilities of computational models. So far, schemas remained largely confined to English, limiting their utility in multilingual settings. This work presents Wino-X, a parallel dataset of German, French, and Russian schemas, aligned with their English counterparts. We use this resource to investigate whether neural machine translation (NMT) models can perform CoR that requires commonsense knowledge and whether multilingual language models (MLLMs) are capable of CSR across multiple languages. Our findings show Wino-X to be exceptionally challenging for NMT systems that are prone to undesirable biases and unable to detect disambiguating information. We quantify biases using established statistical methods and define ways to address both of these issues. We furthermore present evidence of active cross-lingual knowledge transfer in MLLMs, whereby fine-tuning models on English schemas yields CSR improvements in other languages.",
}