Benchmarks you can feel

We all love benchmarks, but there's nothing like a hands-on vibe check. What if we could meet somewhere in the middle?

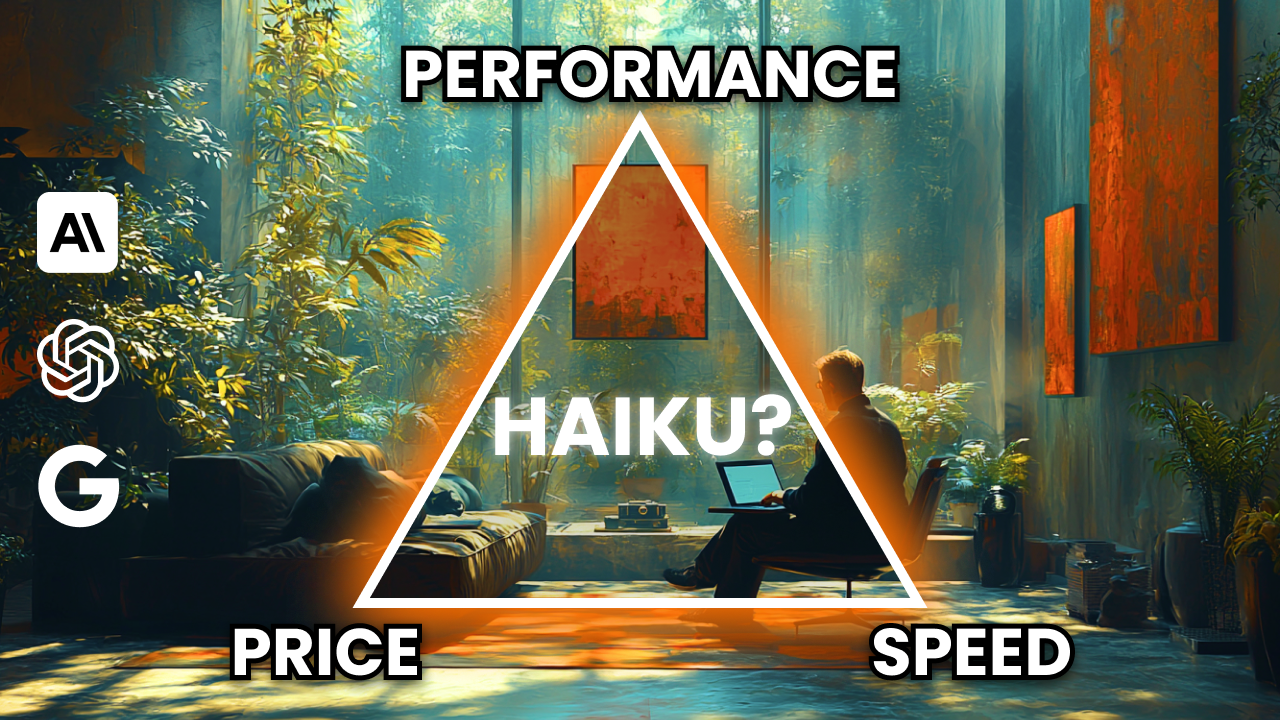

Enter BENCHY: a chill, live benchmark tool that lets you compare the performance, price, and speed of LLMs side by side for SPECIFIC use cases.
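To make those three axes concrete, here is a minimal sketch of how one comparison row could be derived from a handful of model responses. It is not BENCHY's actual implementation: the `RunResult` fields, the `summarize` helper, and the per-million-token prices are hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class RunResult:
    """One model response for one prompt (hypothetical shape, not BENCHY's schema)."""
    model: str
    correct: bool        # did the output match the expected answer?
    input_tokens: int
    output_tokens: int
    seconds: float       # wall-clock latency of the request


def cost_usd(run: RunResult, in_price_per_m: float, out_price_per_m: float) -> float:
    """Cost of one run, given prices in USD per million tokens."""
    total = run.input_tokens * in_price_per_m + run.output_tokens * out_price_per_m
    return total / 1_000_000


def summarize(runs, in_price_per_m, out_price_per_m):
    """Aggregate accuracy, total cost, and mean latency across runs of one model."""
    n = len(runs)
    return {
        "accuracy": sum(r.correct for r in runs) / n,
        "total_cost_usd": sum(cost_usd(r, in_price_per_m, out_price_per_m) for r in runs),
        "mean_seconds": sum(r.seconds for r in runs) / n,
    }


# Placeholder numbers only; real prices come from each provider's pricing page.
runs = [
    RunResult("model-a", True, 1_000, 200, 0.5),
    RunResult("model-a", False, 1_000, 300, 1.5),
]
summary = summarize(runs, in_price_per_m=1.0, out_price_per_m=2.0)
print(summary)
```

Running the same prompts through several models and lining up these summaries is one way to get a side-by-side view of performance, price, and speed.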

Watch the walkthrough video here

- Long Tool Calling

  - Goal: Understand the best LLMs and techniques for LONG chains of tool calls / function calls (15+).

  - Watch the walkthrough video here

- Multi Autocomplete

  - Goal: Understand how Claude 3.5 Haiku and GPT-4o predicted outputs compare to existing models.

  - Watch the walkthrough video here
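One way to score a long tool-calling run is to compare the sequence of tool names a model actually called against the expected sequence. The sketch below is an assumption about how such a check could work, not the repository's scoring code; `score_tool_chain` and the `tool_N` names are hypothetical.

```python
def score_tool_chain(expected: list[str], actual: list[str]) -> dict:
    """Compare an expected chain of tool names with the chain a model produced.

    Reports how many leading calls match in order, whether the whole chain
    matched exactly, and what fraction of the expected chain that prefix covers.
    """
    matched = 0
    for want, got in zip(expected, actual):
        if want != got:
            break
        matched += 1
    return {
        "matched_prefix": matched,
        "exact": expected == actual,
        "prefix_ratio": matched / len(expected) if expected else 1.0,
    }


# Hypothetical 15-step chain; the model goes off track at the 13th call.
expected = [f"tool_{i}" for i in range(15)]
actual = expected[:12] + ["tool_99", "tool_13", "tool_14"]
result = score_tool_chain(expected, actual)
print(result)
```

Counting only the matching prefix is a deliberately strict choice: in a long chain, one wrong call usually invalidates everything after it, so later "correct" calls are not credited.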

- `.env` - Environment variables for API keys

- `server/.env` - Environment variables for API keys

- `package.json` - Front end dependencies

- `server/pyproject.toml` - Server dependencies

- `src/store/*` - Stores all front end state and prompt

- `src/api/*` - API layer for all requests

- `server/server.py` - Server routes

- `server/modules/llm_models.py` - All LLM models

- `server/modules/openai_llm.py` - OpenAI LLM

- `server/modules/anthropic_llm.py` - Anthropic LLM

- `server/modules/gemini_llm.py` - Gemini LLM

# Install dependencies using bun (recommended)

bun install

# Or using npm

npm install

# Or using yarn

yarn install

# Start development server

bun dev # or npm run dev / yarn dev

# Move into server directory

cd server

# Create and activate virtual environment using uv

uv sync

# Set up environment variables

cp .env.sample .env

# Set EVERY .env key with your API keys and settings

ANTHROPIC_API_KEY=

OPENAI_API_KEY=

GEMINI_API_KEY=

# Start server

uv run python server.py

# Run tests

uv run pytest # beware: this will hit the live APIs and cost money

- See `src/components/DevNotes.vue` for limitations
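Since the server setup asks for every `.env` key to be set, a quick pre-flight check can catch an empty key before any API call is made. This is a minimal sketch, not part of the repository: `missing_keys` is a hypothetical helper, and it assumes the values from `server/.env` have already been loaded into the process environment.

```python
import os

# The three keys listed in the server setup steps.
REQUIRED_KEYS = ("ANTHROPIC_API_KEY", "OPENAI_API_KEY", "GEMINI_API_KEY")


def missing_keys(env=None) -> list[str]:
    """Return the required keys that are unset or blank in the given mapping."""
    env = os.environ if env is None else env
    return [key for key in REQUIRED_KEYS if not env.get(key, "").strip()]


if __name__ == "__main__":
    missing = missing_keys()
    if missing:
        print("Missing API keys:", ", ".join(missing))
    else:
        print("All API keys are set.")
```

Passing a plain dict instead of relying on `os.environ` keeps the check easy to test without touching real credentials.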

- https://github.com/simonw/llm?tab=readme-ov-file

- https://github.com/openai/openai-python

- https://platform.openai.com/docs/guides/predicted-outputs

- https://community.openai.com/t/introducing-predicted-outputs/1004502

- https://unocss.dev/integrations/vite

- https://www.npmjs.com/package/vue-codemirror6

- https://vuejs.org/guide/scaling-up/state-management

- https://www.ag-grid.com/vue-data-grid/getting-started/

- https://www.ag-grid.com/vue-data-grid/value-formatters/

- https://llm.datasette.io/en/stable/index.html

- https://cloud.google.com/vertex-ai/generative-ai/docs/multimodal/get-token-count

- https://ai.google.dev/gemini-api/docs/tokens?lang=python

- https://ai.google.dev/pricing#1_5flash

- https://ai.google.dev/gemini-api/docs/structured-output?lang=python

- https://platform.openai.com/docs/guides/structured-outputs

- https://docs.anthropic.com/en/docs/build-with-claude/tool-use

- https://ai.google.dev/gemini-api/docs/models/experimental-models