This is the webpage for the working group led by Edgar Dobriban at the IDEAL special semester on the foundations of deep learning.

The group focuses mainly on the theory of adversarial robustness.

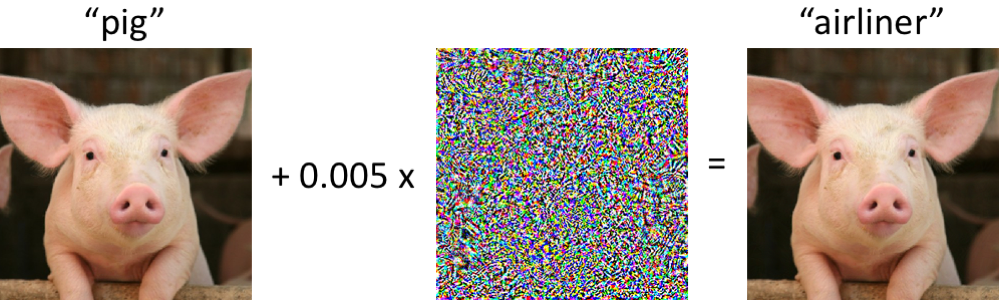

Illustration of adversarial examples, from https://gradientscience.org/intro_adversarial/, by Aleksander Mądry & Ludwig Schmidt.

What causes adversarial examples? An overview of theoretical explanations, by Edgar Dobriban (work in progress)

Certified Robustness to Adversarial Examples with Differential Privacy

Overfitting or Perfect Fitting? Risk Bounds for Classification and Regression Rules that Interpolate

Robustness May Be at Odds with Accuracy

Adversarial Risk Bounds via Function Transformation

Theoretically Principled Trade-off between Robustness and Accuracy

Certified Adversarial Robustness via Randomized Smoothing

VC Classes are Adversarially Robustly Learnable, but Only Improperly

Adversarial Examples Are Not Bugs, They Are Features

Adversarial Training Can Hurt Generalization

Precise Tradeoffs in Adversarial Training for Linear Regression

A Closer Look at Accuracy vs. Robustness

Provable tradeoffs in adversarially robust classification

Obfuscated Gradients Give a False Sense of Security: Circumventing Defenses to Adversarial Examples

Motivating the Rules of the Game for Adversarial Example Research

On Evaluating Adversarial Robustness

See section 6.1 of my lecture notes for a collection of materials.

A good tutorial: J. Z. Kolter and A. Madry, Adversarial Robustness - Theory and Practice (NeurIPS 2018 Tutorial), with an accompanying website.