We present an automated traffic analysis library. Our library provides functionality to:

- Collect real-time traffic videos from the Transport for London API

- Analyse collected videos using computer vision techniques to produce traffic statistics (vehicle counts, vehicle starts, vehicle stops)

- Deploy a live web app to display collected statistics

- Evaluate videos (given annotations)

Better insight extraction from traffic data can aid the effort to understand/reduce air pollution, and allow dynamic traffic flow optimization. Currently, traffic statistics (counts of vehicle types, stops, and starts) are obtained through high-cost manual labor (i.e. individuals counting vehicles by the road) and are extrapolated to annual averages. Furthermore, they are not detailed enough to evaluate traffic/air pollution initiatives.

The purpose of the project is to create an open-source library to automate the collection of live traffic video data, and extract descriptive statistics (counts, stops, and starts by vehicle type) using computer vision techniques. With this library, project partners will be able to input traffic camera videos, and obtain real-time traffic statistics which are localized to the level of individual streets.

More details about the project goals, our technical approach, and the evaluation of our models can be found in the Technical Report.

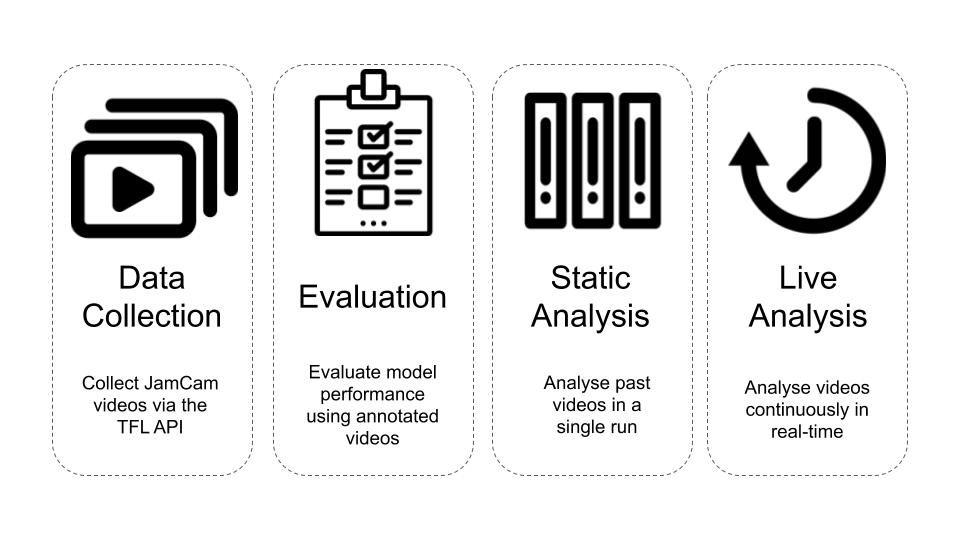

Our project is structured into four main pipelines, each of which serves a distinct function. These pipelines are broadly described as follows:

All pipelines were run on an AWS EC2 instance, and a combination of an AWS S3 bucket and a PostgreSQL database was used to store data. The details of the EC2 instance can be found below:

AWS EC2 Instance Information

+ AMI: ami-07dc734dc14746eab, Ubuntu image

+ EC2 instance: c4.4xlarge

+ vCPU: 16

+ RAM: 30 GB

+ OS: ubuntu 18.04 LTS

+ Volumes: 1

+ Type: gp2

+ Size: 100 GB

+ RDS: No

#TODO Check these details with Lily

For installation and setup we assume that you are on an Ubuntu system. To start, update the apt-get package index and upgrade the installed packages.

sudo apt-get update

sudo apt-get upgrade -y

Now you can install Python 3.6 and the corresponding pip version. To do this run the following commands in order:

sudo apt-get install python3.6

sudo apt-get install python3-pip

sudo apt-get install python3.6-dev

The next step is to set up a virtual environment for managing all the packages needed for this project. Run the following command to install virtualenv:

python3.6 -m pip install virtualenv

With virtualenv installed we can now create a new environment for our packages. To do this, run the following command (where 'my_env' is your chosen name for the environment):

python3.6 -m virtualenv my_env

Whenever you want to run our pipelines you need to remember to activate this environment so that all the necessary packages are present. To do this you can run the command below:

source my_env/bin/activate

Run this command now because in the next step we will clone the repo and download all the packages we need into the 'my_env' environment.

To clone our repo and download all of our code run the following commands:

cd ~

git clone https://github.com/dssg/air_pollution_estimation.git

All of the required packages for this project are in the 'requirements.txt' file. To install the packages run the following commands:

cd air_pollution_estimation/

sudo apt-get install -y libsm6 libxext6 libxrender-dev libpq-dev

pip install -r requirements.txt

pip install psycopg2

With the repo cloned and the packages installed, the next step is to create your private credentials file that will allow you to log into the different AWS services. This file lives in conf/local/ and is not tracked by git because it contains personal information. General parameter and path settings live in conf/base/ and are tracked by git.

To create the credentials file run the following command:

touch conf/local/credentials.yml

With the file created, you need to use your preferred text editor (e.g. nano conf/local/credentials.yml) to copy the following template into the file:

dev_s3:

aws_access_key_id: YOUR_ACCESS_KEY_ID_HERE

aws_secret_access_key: YOUR_SECRET_ACCESS_KEY_HERE

email:

address: OUTWARD_EMAIL_ADDRESS

password: EMAIL_PASSWORD

recipients: ['RECIPIENT_1_EMAIL_ADDRESS', 'RECIPIENT_2_EMAIL_ADDRESS', ...]

postgres:

host: YOUR_HOST_ADDRESS

name: YOUR_DB_NAME

user: YOUR_DB_USER

passphrase: YOUR_DB_PASSWORD

With the template copied, you need to replace the placeholder values with your actual credentials. The keys in credentials.yml are described below:

`dev_s3`: This section contains the Amazon S3 credentials. Learn more about setting up Amazon S3 here.

- `aws_access_key_id`: your AWS access key ID

- `aws_secret_access_key`: your AWS secret access key

`email`: This section is used for the email notification service, which sends warnings or messages to recipients when data collection fails.

- `address`: the email address used to send mail to the recipients

- `password`: the password of the email address

- `recipients`: the list of recipients (email addresses) to be notified

`postgres`: This section contains the credentials for the PostgreSQL database.

- `user`: the name of the user in the PostgreSQL database

- `passphrase`: the passphrase of the above user for the PostgreSQL database
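Before running the pipelines it can help to check that every placeholder in the template has been filled in. The following is a minimal sketch of such a check, not part of the library: the helper name `find_missing_credentials` is hypothetical, and it assumes the file has already been parsed into a Python dictionary (for example with a YAML parser).

```python
# Required keys per section, taken from the credentials.yml template above.
REQUIRED_KEYS = {
    "dev_s3": ["aws_access_key_id", "aws_secret_access_key"],
    "email": ["address", "password", "recipients"],
    "postgres": ["host", "name", "user", "passphrase"],
}


def find_missing_credentials(creds):
    """Return 'section.key' strings that are absent or empty in creds."""
    missing = []
    for section, keys in REQUIRED_KEYS.items():
        section_values = creds.get(section) or {}
        for key in keys:
            if not section_values.get(key):
                missing.append(f"{section}.{key}")
    return missing


# Hypothetical, partially filled credentials (as parsed from the YAML file).
example = {
    "dev_s3": {"aws_access_key_id": "EXAMPLE_KEY_ID", "aws_secret_access_key": ""},
    "email": {
        "address": "sender@example.com",
        "password": "example-password",
        "recipients": ["ops@example.com"],
    },
}
print(find_missing_credentials(example))
```

An empty list means every key from the template has a value.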

In addition to the credentials file you also need to enter your AWS credentials using the AWS command line tool. To do this run the following command:

aws configure --profile dssg

When prompted enter your AWS access key ID and your secret access key (these will be the same as the ones you entered in the credentials.yml file). For the default region name and output format just press enter to leave them blank.

For the final setup step execute the following command to complete the required infrastructure:

python src/setup.py

In order to run the static pipeline you need to collect raw video data from the TFL API. You therefore need to run the data collection pipeline before the static pipeline will work. The data collection pipeline continuously grabs videos from the TFL API and puts them in the S3 bucket for future analysis. The longer you leave the data collection pipeline to run the more data you will have to analyse!

Run the following command to run the pipeline:

python src/data_collection_pipeline.py

As long as this process is running you will be downloading the latest JamCam videos and uploading them to the S3 bucket. The videos will be collected from all the cameras and stored in folders based on their date of collection.

The optional parameters for the data collection pipeline include:

`iterations`: The data collection pipeline script downloads videos from TFL continuously when `iterations` is set to `0`. To stop the data collection pipeline after `N` iterations, change the `iterations` parameter in `parameters.yml` to `N`, where `N` is a number, e.g.

iterations: 4

`delay`: The `delay` parameter is the amount of time in minutes for the data collection pipeline to wait between iterations. The default `delay` time is set to `3 mins` because TFL uploads new videos after `~4 mins`, e.g.

delay: 3
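The interaction between `iterations` and `delay` can be sketched as a simple loop. This is an illustration under stated assumptions rather than the pipeline's actual code: `run_collection` and `collect_once` are hypothetical names, and the sketch assumes the wait happens between iterations only.

```python
import time


def run_collection(collect_once, iterations=0, delay=3, sleep=time.sleep):
    """Call collect_once repeatedly, waiting `delay` minutes between calls.

    iterations=0 means run until interrupted; a positive N stops after N calls.
    Returns the number of completed iterations.
    """
    completed = 0
    while iterations == 0 or completed < iterations:
        collect_once()
        completed += 1
        # Only wait if another iteration will follow.
        if iterations == 0 or completed < iterations:
            sleep(delay * 60)  # delay is given in minutes
    return completed


# Usage with a stand-in for the real download step and no actual waiting.
downloads = []
waits = []
run_collection(lambda: downloads.append("batch"), iterations=4, delay=3,
               sleep=waits.append)
print(len(downloads), waits)  # 4 downloads, three 180-second waits
```

Passing the `sleep` function as an argument is a design choice for this sketch only; it makes the loop easy to exercise without waiting.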

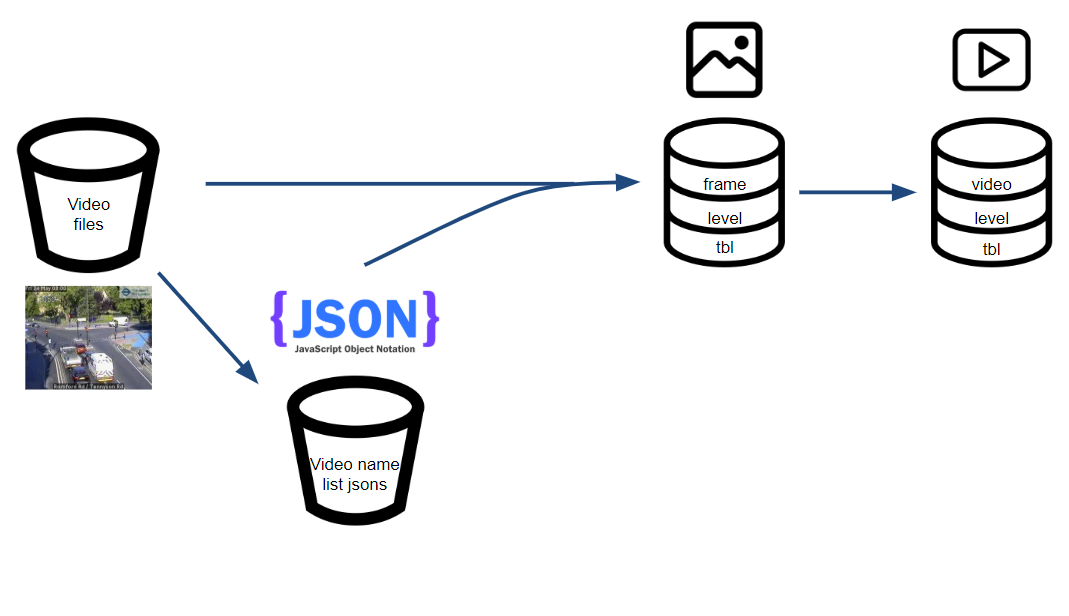

The static pipeline is used to analyse a selection of JamCam videos and put the results into the PostgreSQL database. A general outline of the static pipeline can be seen in the following diagram:

In short, the pipeline first constructs a .json file containing a list of video file paths to be used for analysis. The video paths saved in the .json file are based on particular search criteria (see below). The .json file is uploaded to S3 so that we can avoid searching the videos every time we want to run the pipeline. The next step of the pipeline is to use the .json file to load the corresponding videos into memory and analyse them, producing frame and video level statistics in the PostgreSQL database.
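The first step, selecting video paths and saving them as JSON, might look roughly like the sketch below. Everything here is an assumption made for illustration: the function name `select_video_paths`, the record layout (`path`, `camera_id`, `recorded_at`), and the interpretation of the time parameters as a time-of-day window applied to every day in the date range. The date and time string formats follow the default values documented for the search parameters.

```python
import json
from datetime import datetime


def select_video_paths(videos, camera_list, from_date, to_date, from_time, to_time):
    """Return paths of videos matching the camera, date and time-of-day filters."""
    start_day = datetime.strptime(from_date, "%Y-%m-%d").date()
    end_day = datetime.strptime(to_date, "%Y-%m-%d").date()
    start_time = datetime.strptime(from_time, "%H-%M-%S").time()
    end_time = datetime.strptime(to_time, "%H-%M-%S").time()
    selected = []
    for video in videos:
        recorded = video["recorded_at"]
        if (video["camera_id"] in camera_list
                and start_day <= recorded.date() <= end_day
                and start_time <= recorded.time() <= end_time):
            selected.append(video["path"])
    return selected


# Hypothetical video records; real paths and camera IDs will differ.
videos = [
    {"path": "a.mp4", "camera_id": "cam_1", "recorded_at": datetime(2019, 6, 20, 8, 30)},
    {"path": "b.mp4", "camera_id": "cam_1", "recorded_at": datetime(2019, 6, 20, 22, 0)},
    {"path": "c.mp4", "camera_id": "cam_2", "recorded_at": datetime(2019, 6, 21, 9, 0)},
]
paths = select_video_paths(videos, ["cam_1"], "2019-06-01", "2019-06-30",
                           "07-00-00", "10-00-00")
print(json.dumps(paths))  # the list that would be written to the .json file
```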

To run the pipeline execute the following command in the terminal:

python src/run_pipeline.py

Under the 'static_pipeline' heading in the parameters.yml file is a collection of parameters that control which videos are saved to the .json file. These parameters are as follows:

- `load_ref_file` - Boolean for flagging whether to create a new .json file or load an existing one

- `ref_file_name` - The name of the ref file that will be saved and/or loaded

- `camera_list` - A list of camera IDs specifying the cameras to analyse

- `from_date` - The date to start analysing videos from

- `to_date` - The date to stop analysing videos at

- `from_time` - The time of day to start analysing videos from

- `to_time` - The time of day to stop analysing videos at

To edit these parameters you can use your favourite text editor (e.g. nano conf/base/parameters.yml). Remember that this pipeline assumes you have already collected videos that satisfy the requirements specified by your parameter settings.

If the search parameters are None then they default to the following:

- `camera_list` - Defaults to all of the cameras if `None`

- `from_date` - Defaults to `"2019-06-01"` if `None`

- `to_date` - Defaults to the current date if `None`

- `from_time` - Defaults to `"00-00-00"` if `None`

- `to_time` - Defaults to `"23-59-59"` if `None`
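The default rules above can be written down as a small helper. This is a sketch only: `apply_search_defaults` is a hypothetical name, and it assumes the current date is formatted the same way as the documented `from_date` default.

```python
from datetime import date


def apply_search_defaults(params, all_cameras, today=None):
    """Return a copy of params with None values replaced by the documented defaults."""
    today = today or date.today()
    defaults = {
        "camera_list": list(all_cameras),
        "from_date": "2019-06-01",
        "to_date": today.strftime("%Y-%m-%d"),  # assumed format
        "from_time": "00-00-00",
        "to_time": "23-59-59",
    }
    resolved = dict(params)
    for key, default in defaults.items():
        if resolved.get(key) is None:
            resolved[key] = default
    return resolved


# Only from_date and to_time are set explicitly; the rest fall back to defaults.
params = {"camera_list": None, "from_date": "2019-07-01", "to_date": None,
          "from_time": None, "to_time": "12-00-00"}
print(apply_search_defaults(params, ["cam_a", "cam_b"], today=date(2019, 8, 1)))
```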

Aside from the parameters that define the search criteria for the videos to be analysed, there are a host of other parameters in parameters.yml that affect the static pipeline. These parameters can be found under the 'modelling' heading (see the 'Key Files' section for more information about these parameters).

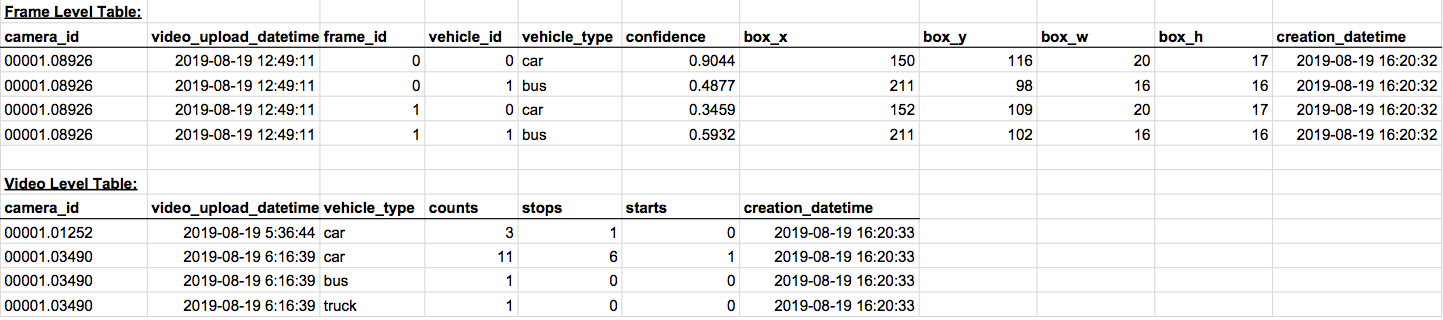

The output of the static pipeline is appended to the frame and video level tables in the PostgreSQL database. Example data from these two tables can be seen below:

Each field is described below:

Frame Level Table:

- `camera_id`: ID of the JamCam camera. Before the decimal is the borough ID and after the decimal is the actual camera ID.

- `video_upload_datetime`: the date and time the video was recorded.

- `frame_id`: the frame number of that specific video.

- `vehicle_id`: the ID number that describes that unique vehicle.

- `vehicle_type`: the type of vehicle.

- `confidence`: how confident YOLO is in its type classification and bounding box prediction (between 0 and 1)

- `box_x`: x coordinate of the top-left corner of the predicted bounding box

- `box_y`: y coordinate of the top-left corner of the predicted bounding box

- `box_w`: width of the predicted bounding box

- `box_h`: height of the predicted bounding box

- `creation_datetime`: the date and time the row was created in the database

Video Level Table:

- `camera_id`: ID of the JamCam camera. Before the decimal is the borough ID and after the decimal is the actual camera ID.

- `video_upload_datetime`: the date and time the video was recorded.

- `vehicle_type`: the type of vehicle.

- `counts`: the number of vehicles of that type in that specific video

- `stops`: the number of stops of that vehicle type in that specific video

- `starts`: the number of starts of that vehicle type in that specific video

- `creation_datetime`: the date and time the row was created in the database
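To illustrate how the two tables relate, the sketch below derives video-level `counts` from frame-level rows by counting distinct `vehicle_id` values per vehicle type. This is one plausible reading of the field descriptions, not necessarily how the library computes its counts; the function names and the example camera ID are hypothetical.

```python
from collections import defaultdict


def split_camera_id(camera_id):
    """Split 'borough.camera' into (borough ID, camera ID)."""
    borough_id, _, camera = camera_id.partition(".")
    return borough_id, camera


def count_vehicles(frame_rows):
    """Count distinct vehicle_id values per (camera, upload time, vehicle type)."""
    seen = defaultdict(set)
    for row in frame_rows:
        key = (row["camera_id"], row["video_upload_datetime"], row["vehicle_type"])
        seen[key].add(row["vehicle_id"])
    return {key: len(ids) for key, ids in seen.items()}


# Hypothetical frame-level rows for a single video.
rows = [
    {"camera_id": "00001.01234", "video_upload_datetime": "2019-06-20 13:25:31",
     "frame_id": 0, "vehicle_id": 1, "vehicle_type": "car"},
    {"camera_id": "00001.01234", "video_upload_datetime": "2019-06-20 13:25:31",
     "frame_id": 1, "vehicle_id": 1, "vehicle_type": "car"},
    {"camera_id": "00001.01234", "video_upload_datetime": "2019-06-20 13:25:31",
     "frame_id": 1, "vehicle_id": 2, "vehicle_type": "car"},
    {"camera_id": "00001.01234", "video_upload_datetime": "2019-06-20 13:25:31",
     "frame_id": 1, "vehicle_id": 3, "vehicle_type": "bus"},
]
print(split_camera_id("00001.01234"))
print(count_vehicles(rows))
```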

The live pipeline integrates data collection with video analysis to provide real-time traffic statistics. Every 4 minutes the pipeline grabs the latest videos from the cameras listed in the parameters.yml file under data_collection: tims_camera_list: and analyses them. The results are stored in the frame-level and video-level tables in the PostgreSQL database.

To run the pipeline execute the following command in the terminal:

python src/cron_job.py

The evaluation pipeline is used to evaluate the performance of different analyser models and their respective parameter settings. The pipeline relies on hand annotated videos from the computer software Computer Vision Annotation Tool (CVAT). We used version 0.4.2 of CVAT to annotate traffic videos for evaluation. Instructions on installing and running CVAT can be found on their website.

For each video, we labelled cars, trucks, buses, motorbikes, and vans; for each object labelled, we annotated whether it was stopped or parked. For consistency we used the following instructions to annotate ground-truth videos with the CVAT tool:

- Use the video name as the task name so that it dumps with the same naming convention

- As a rule of thumb, label things inside the bottom 2/3rds of the image. But if a vehicle is very obvious in the top 1/3rd, label it.

- Use the following labels when creating a CVAT task:

vehicle ~checkbox=parked:false ~checkbox=stopped:false @select=type:undefined,car,truck,bus,motorbike,van

- Set Image Quality to 95

- Draw boxes around key frames and let CVAT perform the interpolation

- Press the button that looks like an eye to specify when the object is no longer in the image

- Save work before dumping task to an xml file.

Once you have the xml files containing your annotations you need to put them in your S3 bucket according to the path s3_annotations in the paths.yml file. It is important that you have the raw videos that correspond to these annotations in the S3 directory indicated by s3_video in the paths.yml file. These raw videos will be used by the pipeline to generate predictions that will then be evaluated against the annotations in the xml files. As an example of how the evaluation process works, the setup.py script that you ran earlier puts some example videos and annotations in your S3 bucket so that the pipeline will run.
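For orientation, the sketch below reads vehicle types out of an annotation dump using only the Python standard library. The XML layout in `SAMPLE_XML` (tracks containing boxes with named attributes) is an assumption about what a CVAT video dump looks like with the labels listed above; check it against your own files. The function name `count_annotated_vehicles` is hypothetical and is not the library's parser.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Assumed shape of a CVAT dump: one <track> per annotated vehicle.
SAMPLE_XML = """<annotations>
  <track id="0" label="vehicle">
    <box frame="0" xtl="10" ytl="20" xbr="50" ybr="60" outside="0" occluded="0" keyframe="1">
      <attribute name="type">car</attribute>
      <attribute name="parked">false</attribute>
      <attribute name="stopped">false</attribute>
    </box>
  </track>
  <track id="1" label="vehicle">
    <box frame="3" xtl="100" ytl="40" xbr="180" ybr="120" outside="0" occluded="0" keyframe="1">
      <attribute name="type">bus</attribute>
      <attribute name="parked">false</attribute>
      <attribute name="stopped">true</attribute>
    </box>
  </track>
</annotations>"""


def count_annotated_vehicles(xml_text):
    """Count annotated tracks per vehicle type, read from each track's first box."""
    root = ET.fromstring(xml_text)
    counts = Counter()
    for track in root.iter("track"):
        first_box = track.find("box")
        if first_box is None:
            continue
        counts[first_box.findtext("attribute[@name='type']")] += 1
    return counts


print(count_annotated_vehicles(SAMPLE_XML))
```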

As our approach outputs both video level and frame level statistics, we perform evaluation on both the video level and the frame level. As our models did not produce statistics for vans or indicate whether a vehicle was parked, these statistics are omitted from the evaluation process.

Video level evaluation is performed for all vehicle-types (cars, trucks, buses, motorbikes) and vehicle statistics (counts, stops, starts) produced by our model. For each combination of vehicle type and vehicle statistics (e.g. car counts, car stops, car starts) we computed the following summary statistics over the evaluation data set:

- Mean absolute error

- Root mean square error
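As a worked illustration of these two summary statistics, the sketch below compares predicted and annotated counts for one vehicle type across a handful of videos. The function names and the numbers are made up for the example.

```python
import math


def mean_absolute_error(predicted, actual):
    """Average of the absolute differences between predictions and annotations."""
    errors = [abs(p - a) for p, a in zip(predicted, actual)]
    return sum(errors) / len(errors)


def root_mean_square_error(predicted, actual):
    """Square root of the average squared difference; penalises large misses more."""
    squared = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical car counts for four videos.
predicted_car_counts = [10, 7, 3, 0]
annotated_car_counts = [12, 7, 1, 0]
print(mean_absolute_error(predicted_car_counts, annotated_car_counts))     # 1.0
print(root_mean_square_error(predicted_car_counts, annotated_car_counts))  # sqrt(2), about 1.414
```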

As speed was a primary consideration for our project partners, we also evaluated the average runtime for each model.

Frame level evaluation is performed for all vehicle types produced by our model. For each vehicle type and for each video, we compute the mean average precision. Mean average precision is a standard metric used by the computer vision community to evaluate the performance of object detection/object tracking algorithms. Essentially, this performance metric assesses both how well an algorithm detects existing objects as well as how accurately placed the bounding boxes around these objects are.
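Bounding box placement is commonly scored with the Intersection Over Union (IOU) between a predicted box and an annotated box, and mean average precision is typically built on top of that overlap measure. The sketch below computes IOU for boxes in the (x, y, w, h) form used by the frame level table; `box_iou` is a hypothetical helper, not the library's implementation.

```python
def box_iou(box_a, box_b):
    """IOU of two boxes given as (x, y, w, h), with (x, y) the top-left corner."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    # Width and height of the overlapping region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    intersection = inter_w * inter_h
    union = aw * ah + bw * bh - intersection
    return intersection / union if union > 0 else 0.0


# Two 10x10 boxes offset by 5 pixels horizontally: overlap 50, union 150.
print(box_iou((0, 0, 10, 10), (5, 0, 10, 10)))
```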

In the context of our project, mean average precision could be utilized to interrogate video-level model performance and diagnose issues. However, as video level statistics were the primary deliverable to our project partners, we used video level performance to select the best models.

Modifiable model parameters can be found in the parameters.yml file under modelling:. A description of these parameters can be found in the above section (Static Pipeline).

To run the evaluation pipeline you just need to execute the following command:

python src/eval_pipeline.py

s3_paths:

bucket_name: "air-pollution-uk" #s3 bucket name

s3_video: "raw/videos/" #path to video data in s3 bucket

s3_annotations: "ref/annotations/"

s3_video_names: "ref/video_names/"

s3_camera_details: "ref/camera_details/camera_details.json"

s3_frame_level: "frame_level/" # TODO DELETE THIS

s3_profile: "dssg" # TODO: change this for user?

s3_creds: "dev_s3" # TODO: CHANGE TO JUST S3

s3_detection_model: "ref/model_conf/"

local_paths:

temp_video: "data/temp/videos/"

temp_raw_video: "data/temp/raw_videos/"

temp_frame_level: "data/temp/frame_level/"

temp_video_level: "data/temp/video_level/"

temp_setup: "data/temp/setup/"

video_names: "data/ref/video_names/"

processed_video: "results/jamcams/"

plots: "plots/"

annotations: "annotations/"

local_detection_model: "data/ref/detection_model/"

setup_xml: "data/setup/annotations/xml_files/"

setup_video: "data/setup/annotations/videos/"

db_paths:

db_host: "dssg-london.ck0oseycrr7s.eu-west-2.rds.amazonaws.com"

db_name: "airquality"

db_frame_level: 'frame_stats'

db_video_level: 'video_stats'

data_collection:

jam_cam_website: URL of website for collecting the JamCam videos

tfl_camera_api: URL of API for collecting the JamCam videos

jamcam_url: URL of the S3 bucket containing the JamCam videos

iterations: The number of iterations to run the data collection pipeline for

delay: The delay in minutes between downloading the JamCam videos

static_pipeline:

load_ref_file: Boolean for loading the .JSON file containing the video names

ref_file_name: The name of the .JSON file to load/save

camera_list: List of cameras to analyse data for

from_date: Start date for videos to analyse

to_date: End date for videos to analyse

from_time: Start time for videos to analyse

to_time: End time for videos to analyse

chunk_size: Number controlling the number of videos to be processed in a single batch

data_renaming:

old_path: Path for old videos

new_path: Path for the renamed videos

date_format: Format for the date and time of the videos

modelling:

# obj detection

detection_model: Name of the model to use for object detection

detection_iou_threshold: The Intersection Over Union (IOU) threshold for separating objects

detection_confidence_threshold: The confidence threshold for accepting the prediction of the object detection algorithm

# tracking

selected_labels: List of labels to be tracked

opencv_tracker_type: The name of the tracker to be used by opencv

iou_threshold: Number controlling how much two objects' bboxes must overlap to be considered the "same"

detection_frequency: The number of tracking calls to skip between object detection calls

skip_no_of_frames: The number of frames to skip between successive tracking calls

# stop starts

iou_convolution_window: Number controlling the window size for computing the IOU between frames n and n+iou_convolution_window for a single vehicle's bounding box

smoothing_method: Name of smoothing method for smoothing the IOU traces over time

stop_start_iou_threshold: IOU threshold for determining whether a vehicle is moving or stopped

reporting:

dtype:

camera_id: Data type for the camera ID

video_level_column_order: ["camera_id", "video_upload_datetime", "vehicle_type", "counts", "starts", "stops", "parked"]

video_level_stats: ['counts', 'stops','starts','parked']
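The stop/start parameters under `modelling` suggest the following kind of computation: build an IOU trace for each vehicle by comparing its bounding box at frame n with its box at frame n + `iou_convolution_window`, optionally smooth the trace, then threshold it. The sketch below shows only the smoothing and thresholding steps on a precomputed trace. It is an illustration under assumptions, not the library's algorithm: both function names are hypothetical, a trailing moving average stands in for whatever `smoothing_method` selects, and a high IOU is taken to mean the vehicle has barely moved.

```python
def moving_average(values, window):
    """Smooth values with a trailing moving average of the given window size."""
    smoothed = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def count_stops_and_starts(iou_trace, stop_start_iou_threshold):
    """Count moving-to-stopped and stopped-to-moving transitions in an IOU trace."""
    # A box that overlaps heavily with its later position is treated as stopped.
    stopped = [iou >= stop_start_iou_threshold for iou in iou_trace]
    stops = starts = 0
    for previous, current in zip(stopped, stopped[1:]):
        if current and not previous:
            stops += 1
        elif previous and not current:
            starts += 1
    return stops, starts


# Hypothetical trace: the vehicle moves, stops for three steps, then moves again.
trace = [0.2, 0.3, 0.95, 0.97, 0.96, 0.4, 0.3]
print(count_stops_and_starts(trace, 0.9))  # (1, 1)
```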

Below is a partial overview of our repository tree:

├── conf/

│ ├── base/

│ └── local/

├── data/

│ ├── frame_level/

│ ├── raw/

│ ├── ref/

│ │ ├── annotations/

│ │ ├── detection_model/

│ │ └── video_names/

│ └── temp/

│ └── videos/

├── notebooks/

├── requirements.txt

└── src/

├── create_dev_tables.py

├── data_collection_pipeline.py

├── eval_pipeline.py

├── run_pipeline.py

├── traffic_analysis/

│ ├── d00_utils/

│ │ ├── create_sql_tables.py

│ │ ├── data_loader_s3.py

│ │ ├── data_loader_sql.py

│ │ ├── data_retrieval.py

│ │ ├── load_confs.py

│ │ ├── ...

| | └── ...

│ ├── d01_data/

│ │ ├── collect_tims_data_main.py

│ │ ├── collect_tims_data.py

│ │ ├── collect_video_data.py

| | └── ...

│ ├── d02_ref/

│ │ ├── download_detection_model_from_s3.py

│ │ ├── load_video_names_from_s3.py

│ │ ├── ref_utils.py

│ │ ├── retrieve_and_upload_video_names_to_s3.py

│ │ └── upload_annotation_names_to_s3.py

│ ├── d03_processing/

│ │ ├── create_traffic_analyser.py

│ │ ├── update_eval_tables.py

│ │ ├── update_frame_level_table.py

│ │ └── update_video_level_table.py

│ ├── d04_modelling/

│ │ ├── classify_objects.py

│ │ ├── object_detection.py

│ │ ├── perform_detection_opencv.py

│ │ ├── perform_detection_tensorflow.py

│ │ ├── tracking/

│ │ │ ├── tracking_analyser.py

│ │ │ └── vehicle_fleet.py

│ │ ├── traffic_analyser_interface.py

│ │ └── transfer_learning/

│ │ ├── convert_darknet_to_tensorflow.py

│ │ ├── generate_tensorflow_model.py

│ │ └── tensorflow_detection_utils.py

│ ├── d05_evaluation/

│ │ ├── chunk_evaluator.py

│ │ ├── compute_mean_average_precision.py

│ │ ├── frame_level_evaluator.py

│ │ ├── parse_annotation.py

│ │ ├── report_yolo.py

│ │ └── video_level_evaluator.py

└── traffic_viz/

├── d06_visualisation/

│ ├── chunk_evaluation_plotting.py

│ ├── dash_object_detection/

│ │ ├── app.py

│ │ └── ...

│ └──

└──

The web application is built using the Dash framework.

The initial setup for the web application requires installing the dash libraries. To install the required libraries, run the following command:

pip install -r src/traffic_viz/web_app/requirements.txt

To start the application, run the following command:

python src/app.py

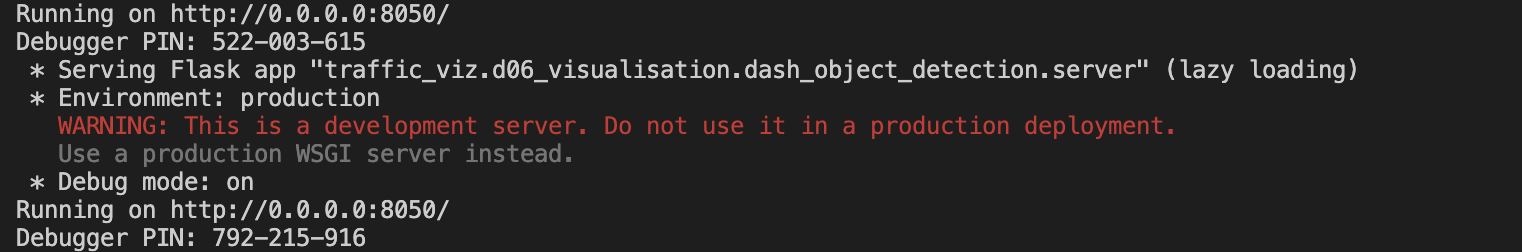

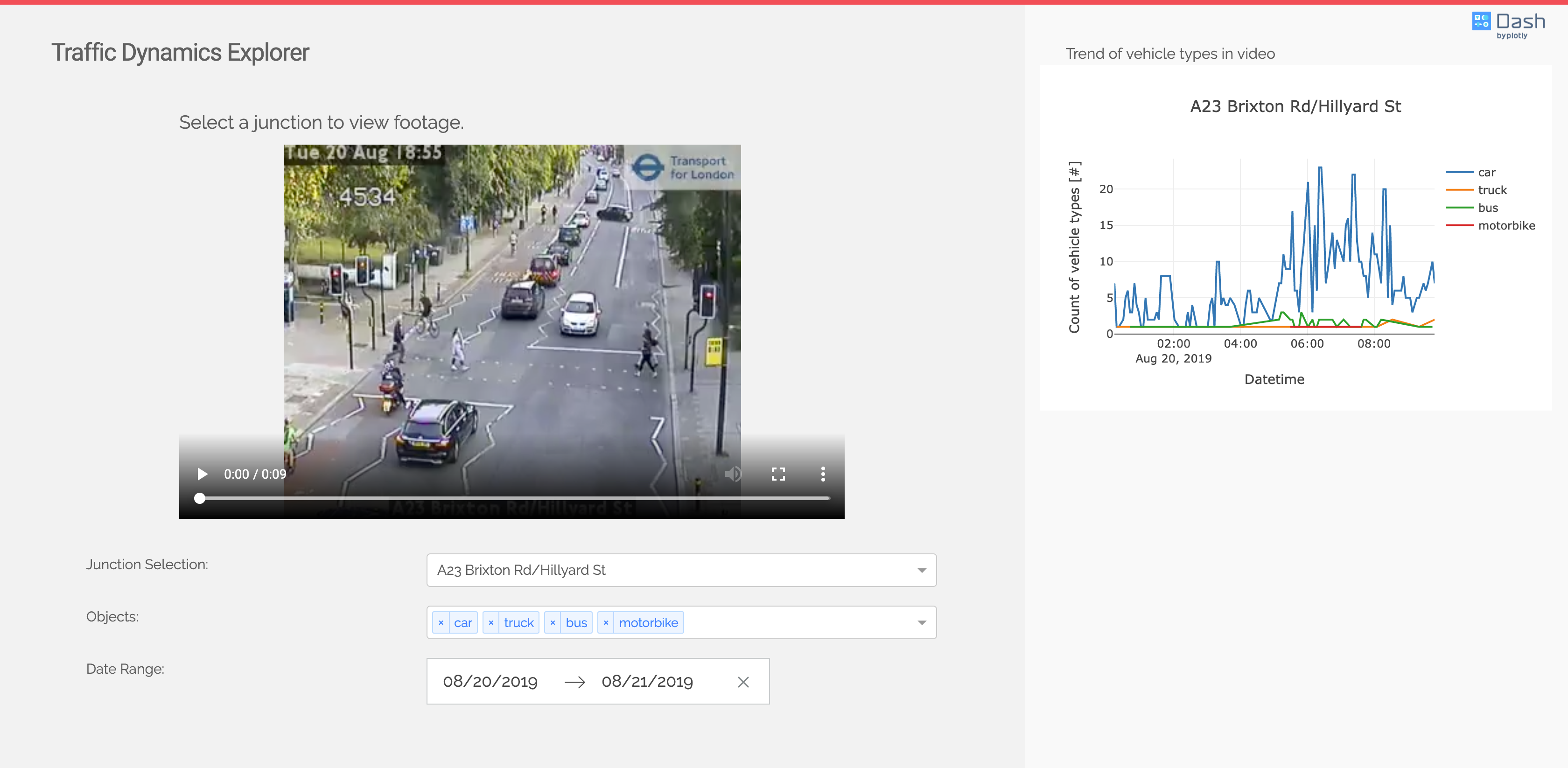

If successful, you should see something similar to the image below:

Open the link http://0.0.0.0:8050 in your browser.

The web application contains a preview section and a graph for displaying the trends of vehicle counts. Select a junction to view the current footage uploaded by TFL. When a junction is selected, a list of vehicle types will be displayed in the Vehicle Type dropdown. Use the date range to change the duration of the trends of vehicle counts.

The web application has a configuration file stored in conf/base/app_parameters.yml. An example of the configuration file is shown below:

visualization:

min_date_allowed: "2019-06-01"

start_date: "2019-06-01"

debug: True

The parameters are explained below:

- `min_date_allowed`: Update this parameter to change the minimum date for the date range calendar on the web application.

- `start_date`: Update this parameter to change the start date for the historical traffic data displayed on the graph in the web application.

- `debug`: The debug parameter is used to display errors and automatically reload the web app while in the development stage. Change this to `False` before deploying to production.

Our team: Jack Hensley, Oluwafunmilola Kesa, Sam Blakeman, Caroline Wang, Sam Short (Project Manager), Maren Eckhoff (Technical Mentor)

The Data Science for Social Good (DSSG) summer fellowship is a three-month educational program of the Data Science for Social Good foundation and the University of Chicago, where it originally started. In 2019, the program is organized in collaboration with Imperial College Business School London, Warwick University, and the Alan Turing Institute. Two cohorts of about 20 students each are hosted at these institutions, where they work on projects with our partners. The mission of the summer fellowship program is to train aspiring data scientists to work on data mining, machine learning, big data, and data science projects with social impact, with a special focus on the ethical consequences of the tools and systems they build. Working closely with governments and nonprofits, fellows take on real-world problems in education, health, energy, public safety, transportation, economic development, international development, and more.

- The Transport and Environmental Laboratory (Department of Civil and Environmental Engineering, Imperial College London) has a mission to advance the understanding of the impact of transport on the environment, using a range of measurement and modelling tools.

- Transport for London (TFL) is an integrated transport authority under the Greater London Authority, which runs the day-to-day operation of London’s public transport network and manages London’s main roads. TFL leads various air quality initiatives including the Ultra Low Emission Zone in Central London.

- City of London Corporation (CLC) is the governing body of the City of London (also known as the Square Mile), one of the 33 administrative areas into which London is divided. Improving air quality in the Square Mile is one of the major issues the CLC will be focusing on over the next few years.

- The Alan Turing Institute is the national institute for data science and artificial intelligence. Its mission is to advance world-class research in data science and artificial intelligence, train leaders of the future, and lead the public conversation.

Fill in later