Upon coming across the excellent pipenv

package, written by Kenneth Reitz (he of requests

fame), I wondered whether the adoption of this package by the Python Packaging

Authority as the go-to

dependency manager for Python

makes Kenneth Reitz the most influential Python contributor on PyPi. After all,

his mantra when developing a package is "<Insert programming activity here> for humans".

We will analyze the Python projects uploaded to the Python Package Index; specifically:

- The Python packages

- What packages depend on what other packages

- Who contributes to what packages

using the degree centrality algorithm from graph theory to find the most influential node in the graph of Python packages, dependencies, and contributors.

After constructing the graph and

analyzing the degree centrality,

despite his requests package being far and away the most influential Python package

in the graph, Kenneth Reitz is only the 47th-most-influential Contributor.

The Contributor with the highest degree centrality is

Jon Dufresne, with the only score above 3 million; below are the

top 10:

| Contributor | GitHub login | Degree Centrality Score |

|---|---|---|

| Jon Dufresne | jdufresne | 3154895 |

| Marc Abramowitz | msabramo | 2898454 |

| Felix Yan | felixonmars | 2379577 |

| Hugo | hugovk | 2209779 |

| Donald Stufft | dstufft | 1940369 |

| Adam Johnson | adamchainz | 1867240 |

| Jason R. Coombs | jaraco | 1833095 |

| Ville Skyttä | scop | 1661774 |

| Jakub Wilk | jwilk | 1617028 |

| Benjamin Peterson | benjaminp | 1598444 |

What no doubt contributed to @jdufresne's top score is

that he contributes to 7 of the 10 most-influential projects from

a degree centrality perspective. The hypothesized most-influential

Contributor, Kenneth Reitz, contributes to only 2 of these projects,

and ends up being nowhere near the top 10 with the 47th-highest degree

centrality score.

For more context around this finding and how it was reached, read on.

PyPi is the repository for Python packages that developers

know and love. Analogously to PyPi, other programming languages have their respective package

managers, such as CRAN for R. As a natural exercise in abstraction,

Libraries.io is a meta-repository for

package managers. From their website:

> Libraries.io gathers data from 36 package managers and 3 source code repositories. We track over 2.7m unique open source packages, 33m repositories and 235m interdependencies between [sic] them. This gives Libraries.io a unique understanding of open source software. An understanding that we want to share with you.

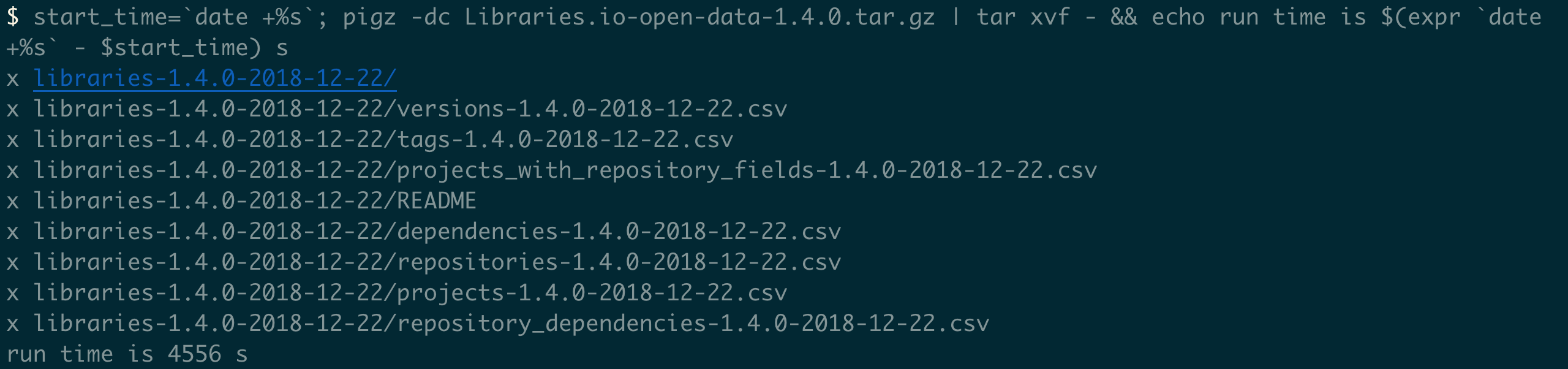

Libraries.io has an easy-to-use API, but given that PyPi is the fourth-most-represented package manager in the Open Data with 200,000+ packages, the number of API calls to various endpoints to collate the necessary data is not appealing (also, Libraries.io rate limits to 60 requests per minute). Fortunately, Jeremy Katz on Zenodo maintains snapshots of the Libraries.io Open Data source. The most recent version is a snapshot from 22 December 2018, and contains the following CSV files:

- Projects (3 333 927 rows)

- Versions (16 147 579 rows)

- Tags (52 506 651 rows)

- Dependencies (105 811 885 rows)

- Repositories (34 061 561 rows)

- Repository dependencies (279 861 607 rows)

- Projects with Related Repository Fields (3 343 749 rows)

More information about these CSVs is in the README file included in the Open

Data tar.gz, copied here.

There is a substantial reduction in the data when subsetting these CSVs just

to the data pertaining to PyPi; find the code used to subset them and the

size comparisons here.
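As a rough illustration of what such subsetting involves, here is a minimal Python sketch (not the code linked above) that streams a CSV and keeps only the rows for one platform and, optionally, one language. The `ID`, `Platform`, and `Language` column names are the ones used in the `mlr` command in this post; the `Name` column and the sample rows are assumptions made up for the example.

```python
import csv
import io


def subset_rows(rows, platform="Pypi", language=None):
    """Yield only the rows (dicts) whose Platform, and optionally Language, match."""
    for row in rows:
        if row.get("Platform") != platform:
            continue
        if language is not None and row.get("Language") != language:
            continue
        yield row


def subset_csv(src, dst, platform="Pypi", language=None):
    """Stream a CSV from file object `src` to `dst`, keeping matching rows.

    Returns the number of rows kept. Rows are processed one at a time, so the
    whole file never has to fit in memory.
    """
    reader = csv.DictReader(src)
    writer = csv.DictWriter(dst, fieldnames=reader.fieldnames)
    writer.writeheader()
    kept = 0
    for row in subset_rows(reader, platform, language):
        writer.writerow(row)
        kept += 1
    return kept


# Tiny in-memory stand-in for the projects CSV (made-up rows, assumed "Name" column).
sample = io.StringIO(
    "ID,Platform,Name,Language\n"
    "1,Pypi,requests,Python\n"
    "2,CRAN,ggplot2,R\n"
    "3,Pypi,some-c-ext,C\n"
)
out = io.StringIO()
kept = subset_csv(sample, out, platform="Pypi", language="Python")
```

With real files, `src` and `dst` would be opened with `open(path, newline="")`; streaming row by row matters here because the uncompressed CSVs are far too large to load at once comfortably.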

WARNING: The tar.gz file that contains these data is 13 GB itself, and

once downloaded takes quite a while to untar; once uncompressed, the data

take up 64 GB on disk!

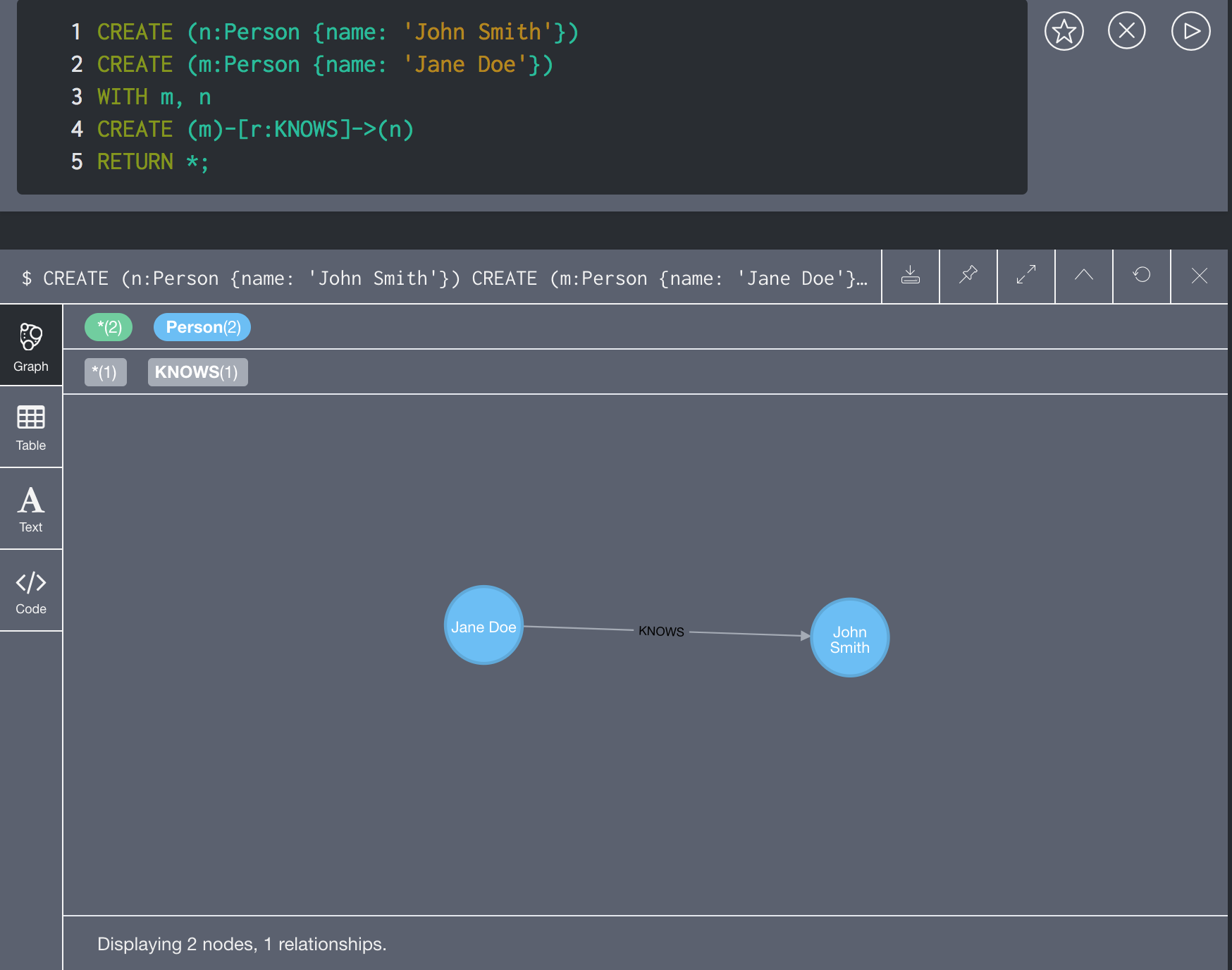

Because software packages are so interconnected (dependencies, versions, contributors, etc.), graph databases and graph theory are the ideal tools for finding the most influential "item" in that web of data. Neo4j is the most popular graph database according to DB-Engines, and is the one that we will use for the analysis. Part of the reason for its popularity is that its query language, Cypher, is expressive and simple:

Terminology that will be useful going forward:

- Jane Doe and John Smith are nodes (equivalently: vertexes)

- The above two nodes have label Person, with property name

- The line that connects the nodes is a relationship (equivalently: edge)

- The above relationship is of type KNOWS

- KNOWS, and all Neo4j relationships, are directed; i.e. Jane Doe knows John Smith, but not the converse

On macOS, the easiest way to use Neo4j is via the Neo4j Desktop app, available

as the neo4j cask on Homebrew.

Neo4j Desktop is a great IDE for Neo4j, allowing simple installation of different

versions of Neo4j as well as plugins that are optional

(e.g. APOC) but

are really the best way to interact with the graph database. Moreover, the

screenshot above is taken from the Neo4j Browser, a nice interactive

database interface as well as query result visualization tool.

Before we dive into the data model and how the data are loaded, note that Neo4j's default configuration isn't going to cut it for the packages and approach that we are going to use; the customized configuration file, corresponding to Neo4j version 3.5.7, can be found here.

Importing from CSV

is the most common way to populate a Neo4j graph, and is how we will

proceed given that the Open Data snapshot untars into CSV files. However,

first a data model is necessary: which entities will be

represented as labeled nodes with properties, and what the relationships

among them will be. Moreover, some settings of Neo4j

will have to be customized for proper and timely import from CSV.

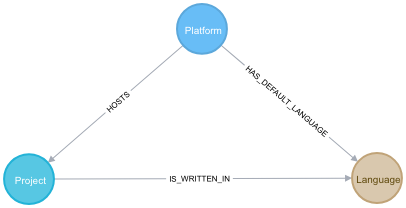

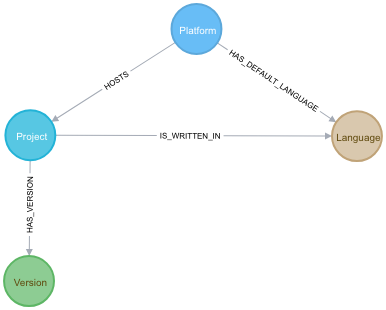

Basically, when translating a data paradigm into graph data form, the nouns become nodes and how the nouns interact (the verbs) become the relationships. In the case of the Libraries.io data, the following is the data model (produced with the Arrow Tool):

So, a Platform HOSTS a Project, which IS_WRITTEN_IN a Language,

and HAS_VERSION Version. Moreover, a Project DEPENDS_ON other

Projects, and each Contributor CONTRIBUTES_TO Projects. With respect to

Versions, the diagram communicates a limitation of the Libraries.io

Open Data: that Project nodes are linked in the dependencies CSV to other

Project nodes, despite the fact that different versions of a project

depend on varying versions of other projects. Take, for example, this row

from the dependencies CSV:

| ID | Project_Name | Project_ID | Version_Number | Version_ID | Dependency_Name | Dependency_Kind | Optional_Dependency | Dependency_Requirements | Dependency_Project_ID |

|---|---|---|---|---|---|---|---|---|---|

| 34998553 | 1pass | 31613 | 0.1.2 | 22218 | pycrypto | runtime | false | * | 68140 |

That is, version 0.1.2 of Project 1pass depends on Project pycrypto. The only indication of which version of pycrypto it depends on is the requirement *, which matches any version and so forces the modeling decision of Projects depending on other Projects, not Versions.

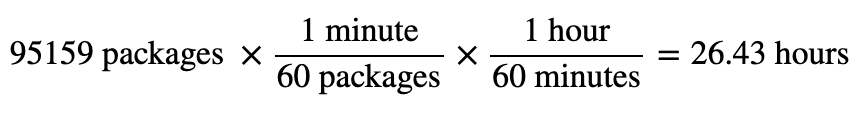

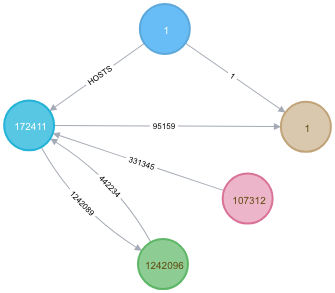

It is impossible to answer the question of which contributor to Pypi is most influential without, obviously, data on contributors. However, the Open Data dataset lacks this information. Connecting the Open Data dataset with contributor data requires calls to the Libraries.io API. As mentioned above, there is a rate limit of 60 requests per minute. If there are

$ mlr --icsv --opprint filter '$Platform == "Pypi" && $Language == "Python"' then uniq -n -g "ID" projects-1.4.0-2018-12-22.csv

95159

Python-language Pypi packages, each of which requires one request to the Contributors endpoint of the Libraries.io API, then at "maximum velocity" it will take

$$\frac{95159\ \text{requests}}{60\ \text{requests/minute}} \approx 1586\ \text{minutes} \approx 26.4\ \text{hours}$$

to get contributor data for each project.

Following the example of

this blog,

it is possible to use the aforementioned APOC utilities for Neo4j to

load data from web APIs,

but I found it to be unwieldy and difficult to monitor. So, I used

Python's requests and SQLite packages to send requests to the

endpoint and store the responses in a long-running Bash process

(code for this here).
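The general shape of such a process can be sketched as follows. This is not the linked code, just a minimal illustration under stated assumptions: `fetch_all`, the `responses` table, and the injected `fetch` function are hypothetical names, and the actual API URL is deliberately left out. The sketch spaces calls so that no more than 60 happen per minute and commits each response to SQLite as it arrives, so an interrupted run can pick up where it left off.

```python
import sqlite3
import time


def fetch_all(project_names, fetch, conn, max_per_minute=60,
              sleep=time.sleep, clock=time.monotonic):
    """Call fetch(name) for each project, at most max_per_minute calls per minute,
    and store each raw response body in SQLite as soon as it arrives."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS responses (project TEXT PRIMARY KEY, body TEXT)"
    )
    min_interval = 60.0 / max_per_minute
    last_call = None
    for name in project_names:
        # Skip projects that are already stored, so a crashed run can be resumed.
        row = conn.execute(
            "SELECT 1 FROM responses WHERE project = ?", (name,)
        ).fetchone()
        if row is not None:
            continue
        # Wait until at least min_interval has elapsed since the previous call.
        if last_call is not None:
            wait = min_interval - (clock() - last_call)
            if wait > 0:
                sleep(wait)
        last_call = clock()
        body = fetch(name)
        conn.execute(
            "INSERT INTO responses (project, body) VALUES (?, ?)", (name, body)
        )
        conn.commit()


# Demonstration with a fake fetcher and a fake clock, so nothing touches the network.
fake_now = [0.0]
slept = []


def fake_sleep(seconds):
    slept.append(seconds)
    fake_now[0] += seconds


conn = sqlite3.connect(":memory:")
fetch_all(["requests", "six", "click"], lambda name: '[{"login": "someone"}]',
          conn, sleep=fake_sleep, clock=lambda: fake_now[0])
stored = conn.execute("SELECT COUNT(*) FROM responses").fetchone()[0]
```

In a real run, `fetch` would wrap a `requests.get` call against the Contributors endpoint and `conn` would point at a file on disk rather than `:memory:`.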

Analogously to the unique constraint in a relational database, Neo4j has a

uniqueness constraint

which is very useful in constraining the number of nodes created. Basically,

it isn't useful, and hurts performance, to have two different nodes representing the

platform Pypi (or the language Python, or the project pipenv, ...) because

it is a unique entity. Moreover, uniqueness constraints enable

more performant queries.

The following

Cypher commands

add uniqueness constraints on the properties of the nodes that should be unique

in this data paradigm:

CREATE CONSTRAINT ON (platform:Platform) ASSERT platform.name IS UNIQUE;

CREATE CONSTRAINT ON (project:Project) ASSERT project.name IS UNIQUE;

CREATE CONSTRAINT ON (project:Project) ASSERT project.ID IS UNIQUE;

CREATE CONSTRAINT ON (version:Version) ASSERT version.ID IS UNIQUE;

CREATE CONSTRAINT ON (language:Language) ASSERT language.name IS UNIQUE;

CREATE CONSTRAINT ON (contributor:Contributor) ASSERT contributor.uuid IS UNIQUE;

CREATE INDEX ON :Contributor(name);

All of the ID properties come from the first column of the CSVs and are

ostensibly primary key values. The name property of Project nodes is

also constrained to be unique so that queries seeking to match nodes on

the property name— the way that we think of them— are performant as well.

Likewise, querying for Contributors is most naturally done via their names,

but as first name, last name pairs are not necessarily unique, a Neo4j

index

will speed up queries without necessitating uniqueness of the property.

With the constraints, indexes, plugins, and configuration of Neo4j in place,

the Libraries.io Open Data dataset can be loaded. Loading CSVs to Neo4j

can be done with the default

LOAD CSV command,

but in the APOC plugin there is an improved version,

apoc.load.csv,

which iterates over the CSV rows as map objects instead of arrays;

when coupled with

periodic execution

(a.k.a. batching), loading CSVs can be done in parallel, as well.
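The difference between rows-as-arrays and rows-as-maps, and the idea of batching, can be shown with a small Python analogy. This is not APOC and does not demonstrate parallelism; the sample data and the `batches` helper are made up for the illustration.

```python
import csv
import io
from itertools import islice

# Made-up sample data standing in for a projects CSV.
data = "ID,Platform,Name\n1,Pypi,requests\n2,Pypi,six\n3,Pypi,click\n"

# Array-style access: each row is a list, so columns are addressed by position.
array_rows = list(csv.reader(io.StringIO(data)))[1:]  # drop the header row
names_by_position = [row[2] for row in array_rows]

# Map-style access: each row is a dict keyed by the header, so columns are addressed by name.
map_rows = list(csv.DictReader(io.StringIO(data)))
names_by_key = [row["Name"] for row in map_rows]


def batches(rows, size):
    """Yield successive lists of at most `size` rows, as a batched loader would commit them."""
    iterator = iter(rows)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


batch_sizes = [len(batch) for batch in batches(map_rows, 2)]
```

Addressing columns by name keeps a loading query readable and robust to column reordering, while batching bounds how much work sits in any single transaction.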

As all projects that are to be loaded are hosted on Pypi, the first

node to be created in the graph is the Pypi Platform node itself:

CREATE (:Platform {name: 'Pypi'});

Not all projects hosted on Pypi are written in Python, but those are

the focus of this analysis, so we need a Python Language node:

CREATE (:Language {name: 'Python'});

With these two, we create the first relationship of the graph:

MATCH (p:Platform {name: 'Pypi'})

MATCH (l:Language {name: 'Python'})

CREATE (p)-[:HAS_DEFAULT_LANGUAGE]->(l);

Now we can load the rest of the entities in our graph, connecting them

to these as appropriate, starting with Projects.

The key operation when loading data to Neo4j is the MERGE clause. Using the property specified in the query, MERGE either MATCHes the node/relationship with that property or, if it doesn't exist, duly CREATEs it. If the property in the query has a uniqueness constraint, Neo4j can thus iterate over possible duplicates of the "same" node/relationship, only creating it once, and "attaching" nodes to the uniquely-specified node on the go.

This is a double-edged sword, though, when creating relationships between unique nodes: if the participating nodes are not specified exactly, MERGEing a relationship between them will create new, duplicate node(s). This is undesirable from an ontological perspective, as well as from a database efficiency perspective. In short, creating unique node-relationship-node entities requires three passes over a CSV: the first to MERGE the first node type, the second to MERGE the second node type, and the third to MATCH node type 1, MATCH node type 2, and MERGE the relationship between them.
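The three-pass pattern can be mimicked in plain Python to make the logic concrete. The `ToyGraph` class below is a made-up, in-memory stand-in with MERGE-like "create only if missing" behavior; it is not Neo4j, and the sample rows (including the version IDs) are invented.

```python
class ToyGraph:
    """A tiny in-memory stand-in for a graph with MERGE-like semantics."""

    def __init__(self):
        self.nodes = {}             # (label, key) -> properties
        self.relationships = set()  # (start, rel_type, end)

    def merge_node(self, label, key, **props):
        """Return the node id for (label, key), creating the node only if it is missing."""
        node_id = (label, key)
        self.nodes.setdefault(node_id, dict(props))
        return node_id

    def match_node(self, label, key):
        """Return the node id if the node exists, else None; never creates anything."""
        node_id = (label, key)
        return node_id if node_id in self.nodes else None

    def merge_relationship(self, start, rel_type, end):
        """Create the relationship only if an identical one does not exist yet."""
        self.relationships.add((start, rel_type, end))


# Made-up rows shaped like a versions CSV: (project name, version ID, version number).
rows = [
    ("requests", 1, "2.20.0"),
    ("requests", 2, "2.21.0"),
    ("six", 3, "1.12.0"),
]

graph = ToyGraph()

# Pass 1: MERGE the first node type.
for project, _, _ in rows:
    graph.merge_node("Project", project)

# Pass 2: MERGE the second node type.
for _, version_id, number in rows:
    graph.merge_node("Version", version_id, number=number)

# Pass 3: MATCH both endpoints, then MERGE only the relationship between them.
for project, version_id, _ in rows:
    start = graph.match_node("Project", project)
    end = graph.match_node("Version", version_id)
    if start is not None and end is not None:
        graph.merge_relationship(start, "HAS_VERSION", end)

project_count = sum(1 for label, _ in graph.nodes if label == "Project")
version_count = sum(1 for label, _ in graph.nodes if label == "Version")
```

Even though requests appears in two rows, only one Project node exists for it, and the third pass never creates nodes because it only matches them.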

Lastly, for the same reason as the above, it is necessary to create "base" nodes

before creating nodes that "stem" from them. For example, if we had not created

the Python Language node above (with unique property name), for every Python

project MERGED from the projects CSV, Neo4j would create a new Language node

with name 'Python' and a relationship between it and the Python Project node.

This duplication can be useful in some data models, but in the interest of

parsimony, we will load data in the following order:

- Projects

- Versions

- Dependencies among Projects and Versions

- Contributors

First up are the Project nodes. The source CSV for this type of node is

pypi_projects.csv

and the queries are in

this file.

Neo4j loads the CSV data following the instructions of the file with the

apoc.cypher.runFile command; i.e.

CALL apoc.cypher.runFile('/path/to/libraries_io/cypher/projects_apoc.cypher') yield row, result return 0;

The result of this set of queries is that the following portion of our graph is populated:

Next are the Versions of the Projects. The source CSV for this type

of node is pypi_versions.csv

and the queries are in

this file.

These queries are run with

CALL apoc.cypher.runFile('/path/to/libraries_io/cypher/versions_apoc.cypher') yield row, result return 0;

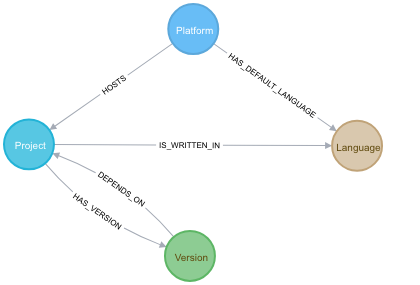

The result of this set of queries is that the graph has grown to include the following nodes and relationships:

Now that there are Project nodes and Version nodes, it's time to

link their dependencies. The source CSV for these data is

pypi_dependencies.csv

and this query is in

this file.

Because the Projects and Versions already exist, this operation

is just the one MATCH-MATCH-MERGE query, creating relationships. It is run with

CALL apoc.cypher.runFile('/path/to/libraries_io/cypher/dependencies_apoc.cypher') yield row, result return 0;

The result of this set of queries is that the graph has grown to include

the DEPENDS_ON relationship:

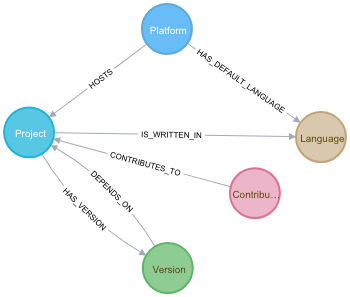

Because the data corresponding to Python Project Contributors was

retrieved from the API, it is not run with Cypher from a file, but

in a Python script, particularly

this section.

After executing this process, the graph is now in its final form:

The below is the same image of the full schema, but with node and relationship counts instead of labels (the labels of nodes and relationships are color-coded still):

On the way to understanding the most influential Contributor,

it is useful to find the most influential Project. Intuitively,

the most influential Project node should be the node with the

most (or very many) incoming DEPENDS_ON relationships; however,

the degree centrality algorithm is not as simple as just counting

the number of relationships incoming and outgoing and ordering by

descending cardinality (although that is a useful metric for

understanding a [sub]graph). This is because the subgraph that

we are considering to understand the influence of Project nodes

also contains relationships to Version nodes.

So, using the Neo4j Graph Algorithms plugin's

algo.degree

procedure, all we need are a node label and a relationship type.

The arguments to this procedure could be as simple as two strings,

one for the node label, and one for the relationship type. However,

as mentioned above, there are two node labels at play here, so we

will use the alternative syntax

of the algo.degree procedure in which we pass Cypher statements

returning the set of nodes and the relationships among them.
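To make the idea concrete, here is a toy Python sketch of the counting involved. It assumes that the score of a Project is the number of version-level dependency paths that point at it, which is my reading of how the procedure treats the projected relationships, not something confirmed by the plugin's documentation. The project names "app-a" and "app-b" and all rows are made up.

```python
from collections import Counter

# Made-up (dependent project, dependent version, dependency project) rows, standing in
# for the paths (p1:Project)-[:HAS_VERSION]->(:Version)-[:DEPENDS_ON]->(p2:Project).
paths = [
    ("app-a", "1.0", "requests"),
    ("app-a", "1.1", "requests"),
    ("app-b", "0.1", "requests"),
    ("app-b", "0.1", "six"),
    ("requests", "2.0", "six"),
]


def degree_scores(paths):
    """Count, for every dependency, the number of version-level paths that point at it."""
    return Counter(dependency for _, _, dependency in paths)


def rank(scores):
    """Map each name to its 1-based rank by descending score (ties broken by name)."""
    ordered = sorted(scores, key=lambda name: (-scores[name], name))
    return {name: position for position, name in enumerate(ordered, start=1)}


scores = degree_scores(paths)
ranks = rank(scores)
```

Note that requests is depended on by only two distinct projects here, yet three paths reach it because app-a has two versions; that is why going through Version nodes differs from simply counting dependent projects.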

To run the degree centrality algorithm on the Projects written

in Python that are hosted on Pypi, the syntax

(found here)

is:

call algo.degree(

"MATCH (:Language {name:'Python'})<-[:IS_WRITTEN_IN]-(p:Project)<-[:HOSTS]-(:Platform {name:'Pypi'}) return id(p) as id",

"MATCH (p1:Project)-[:HAS_VERSION]->(:Version)-[:DEPENDS_ON]->(p2:Project) return id(p2) as source, id(p1) as target",

{graph: 'cypher', write: true, writeProperty: 'pypi_degree_centrality'}

)

;

It is crucially important to alias as source the Project

node MATCHed in the second query as the end node of the

DEPENDS_ON relationship, and the start node of the

relationship as target. This is not officially documented,

but the example in the documentation has it as such, and I ran

into Java errors if not aliased exactly that way. This query

sets the pypi_degree_centrality property on each Project

node, and this query

MATCH (:Language {name: 'Python'})<-[:IS_WRITTEN_IN]-(p:Project)<-[:HOSTS]-(:Platform {name: 'Pypi'})

WITH p ORDER BY p.pypi_degree_centrality DESC

WITH collect(p) as projects

UNWIND projects as project

SET project.pypi_degree_centrality_rank = apoc.coll.indexOf(projects, project) + 1

;

sets a rank property on each Project corresponding to its degree centrality

score. Thus, it is trivial to return the top 10 projects in terms of

pypi_degree_centrality_rank:

| Project | Degree Centrality Score |

|---|---|

| requests | 18184 |

| six | 11918 |

| python-dateutil | 4426 |

| setuptools | 4120 |

| PyYAML | 3708 |

| click | 3159 |

| lxml | 2308 |

| futures | 1726 |

| boto3 | 1701 |

| Flask | 1678 |

There are two Projects that are far ahead of the others in

terms of degree centrality: requests and six. That six

is so influential is not surprising; since the release of

Python 3, many developers find it necessary to import this

Project in order for their code to be interoperable between

Python 2 and Python 3. By a good margin, though, requests is

the most influential. This comes as no surprise to me, because

in my experience, requests is the package that Python users

reach for first when interacting with APIs of any kind; I

used requests in this very project to send GET requests to

the Libraries.io API!

With requests as the most influential Project, it bodes

well for the hypothesis that Kenneth Reitz (the author of

requests) is the most influential Contributor. To finally

answer this question, the degree centrality algorithm will be

run again, this time focusing on the Contributor nodes, and

their contributions to Projects. The query

(found here)

is:

call algo.degree(

"MATCH (:Platform {name:'Pypi'})-[:HOSTS]->(p:Project) with p MATCH (:Language {name:'Python'})<-[:IS_WRITTEN_IN]-(p)<-[:CONTRIBUTES_TO]-(c:Contributor) return id(c) as id",

"MATCH (c1:Contributor)-[:CONTRIBUTES_TO]->(:Project)-[:HAS_VERSION]->(:Version)-[:DEPENDS_ON]->(:Project)<-[:CONTRIBUTES_TO]-(c2:Contributor) return id(c2) as source, id(c1) as target",

{graph: 'cypher', write: true, writeProperty: 'pypi_degree_centrality'}

)

;

(the file linked to has a subsequent query to create a property that is the

rank of every contributor in terms of pypi_degree_centrality)

and the resulting top 10 in terms of degree centrality score are:

| Contributor | GitHub login | Degree Centrality Score | # Top-10 Contributions | # Total Contributions | Total Contributions Rank |

|---|---|---|---|---|---|

| Jon Dufresne | jdufresne | 3154895 | 7 | 154 | 219th |

| Marc Abramowitz | msabramo | 2898454 | 6 | 501 | 26th |

| Felix Yan | felixonmars | 2379577 | 5 | 210 | 154th |

| Hugo | hugovk | 2209779 | 4 | 296 | 93rd |

| Donald Stufft | dstufft | 1940369 | 5 | 116 | 300th |

| Adam Johnson | adamchainz | 1867240 | 4 | 496 | 28th |

| Jason R. Coombs | jaraco | 1833095 | 3 | 194 | 160th |

| Ville Skyttä | scop | 1661774 | 3 | 135 | 252nd |

| Jakub Wilk | jwilk | 1617028 | 4 | 154 | 221st |

| Benjamin Peterson | benjaminp | 1598444 | 3 | 38 | 1541st |

| ... | ... | ... | ... | ... | ... |

| Kenneth Reitz | kennethreitz | 803087 | 2 | 119 | 337th |

Contributor Jon Dufresne has the highest score, which is

the only score that crests 3 million! Kenneth Reitz, the

author of the most-influential Project

node, comes in 47th place based on this query. What no doubt

contributed to @jdufresne's top score is

that he contributes to 7 of the 10 most-influential projects from

a degree centrality perspective. The hypothesized most-influential

Contributor, Kenneth Reitz, contributes to only 2 of these projects.

It turns out that 7 is the largest number of top-10 Projects contributed to by any Contributor, and only 1603 Contributors have a CONTRIBUTES_TO relationship to a top-10 Project at all (query here).

As this table hints, the degree centrality score is much more strongly correlated with the number of top-10 Projects contributed to than with the total number of projects contributed to (query here and rank query here).

Indeed, using the algo.similarity.pearson function:

MATCH (:Language {name: 'Python'})<-[:IS_WRITTEN_IN]-(p:Project)<-[:HOSTS]-(:Platform {name: 'Pypi'})

MATCH (p)<-[ct:CONTRIBUTES_TO]-(c:Contributor)

WHERE p.pypi_degree_centrality_rank <= 10

WITH distinct c, count(ct) as num_top_10_contributions

WITH collect(c.pypi_degree_centrality) as dc, collect(num_top_10_contributions) as tc

RETURN algo.similarity.pearson(dc, tc) AS degree_centrality_top_10_contributions_correlation_estimate

;

yields an estimate of 0.5996, whereas

MATCH (:Language {name: 'Python'})<-[:IS_WRITTEN_IN]-(p:Project)<-[:HOSTS]-(:Platform {name: 'Pypi'})

MATCH (p)<-[ct:CONTRIBUTES_TO]-(c:Contributor)

WITH distinct c, count(distinct ct) as num_total_contributions

WITH collect(c.pypi_degree_centrality) as dc, collect(num_total_contributions) as tc

RETURN algo.similarity.pearson(dc, tc) AS degree_centrality_total_contributions_correlation_estimate

;

is only 0.2254.
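For readers who want to see what a Pearson estimate like the two above measures, here is a small self-contained Python version of the coefficient. It is a textbook implementation, not the plugin's code, and the numbers fed to it are made up rather than taken from the graph.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length, at least 2 items each")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the means (unnormalized covariance).
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("correlation is undefined when a sequence is constant")
    return covariance / (spread_x * spread_y)


# Made-up numbers, not taken from the graph: scores paired with contribution counts.
scores = [10, 20, 30, 40]
top_10_counts = [1, 2, 3, 4]   # rises in lockstep with the scores
total_counts = [3, 1, 4, 2]    # no linear relationship to the scores

perfect = pearson(scores, top_10_counts)
uncorrelated = pearson(scores, total_counts)
```

A value near 1 means the two quantities rise together almost linearly and a value near 0 means no linear relationship, which puts 0.5996 and 0.2254 in context.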

All this goes to show that, in a network, the centrality of a node is determined by contributing to the right nodes, not necessarily the most nodes.
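A toy example shows how this can play out. The sketch below assumes a Contributor's score counts the paths from other contributors, through their projects' versions, to a project the Contributor works on; that is my interpretation of the contributor query, not a documented definition. All names and rows are invented.

```python
from collections import Counter

# Made-up toy data: who contributes to which (invented) projects.
contributes_to = {
    "alice": ["core-lib"],
    "bob": ["leaf-1", "leaf-2", "leaf-3"],
    "carol": ["leaf-1"],
}
# Made-up (dependent project, dependent version, dependency project) rows.
version_dependencies = [
    ("leaf-1", "1.0", "core-lib"),
    ("leaf-1", "1.1", "core-lib"),
    ("leaf-2", "1.0", "core-lib"),
    ("leaf-3", "1.0", "core-lib"),
]


def contributor_scores(contributes_to, version_dependencies):
    """Count, per contributor, the paths that start at a contributor of a dependent
    project, pass through one of its versions, and end in a project they work on."""
    contributors_of = {}
    for person, projects in contributes_to.items():
        for project in projects:
            contributors_of.setdefault(project, []).append(person)
    scores = Counter({person: 0 for person in contributes_to})
    for dependent, _, dependency in version_dependencies:
        for upstream in contributors_of.get(dependency, []):
            # One path per contributor of the dependent project.
            scores[upstream] += len(contributors_of.get(dependent, []))
    return scores


scores = contributor_scores(contributes_to, version_dependencies)
```

Here alice contributes to a single project and bob to three, yet alice ends up with the higher score because her one project is the one everything else depends on.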

Using the Libraries.io Open Data dataset, the Python projects on PyPi and their contributors were analyzed using Neo4j (in particular, the degree centrality algorithm) to find out which contributor is the most influential to the graph of Python packages, versions, dependencies, and contributors. That contributor is Jon Dufresne (GitHub username @jdufresne), and by a wide margin.

This analysis did not take advantage of a commonly-used feature of

graph data: weights of the edges between nodes. A future improvement

of this analysis would be to use the number of versions of a project,

say, as the weight in the degree centrality algorithm to down-weight

those projects that have few versions as opposed to the projects that

have verifiable "weight" in the Python community, e.g. requests.

Similarly, it was not possible to delineate the type of contribution

made in this analysis; more accurate findings would no doubt result

from the distinction between a package's author, for example, and a

contributor who merged a small pull request to fix a typo.

Moreover, the data used in this analysis are just a snapshot of the state of PyPi from December 22, 2018. Needless to say, the numbers of versions, projects, and contributions are always in flux, so the analysis would benefit from updating. However, the Libraries.io Open Data are a good window into the dynamics of one of programming's most popular communities.