Version 0.1.5-alpha (Sep 2, 2025) - Major Update

- Performance: Complete async backend migration (2-3x faster)

- Stability: 50+ bug fixes and mission recovery improvements

- Documentation: Complete overhaul with example reports and guides

- UI/UX: Enhanced interface with LaTeX support and better navigation

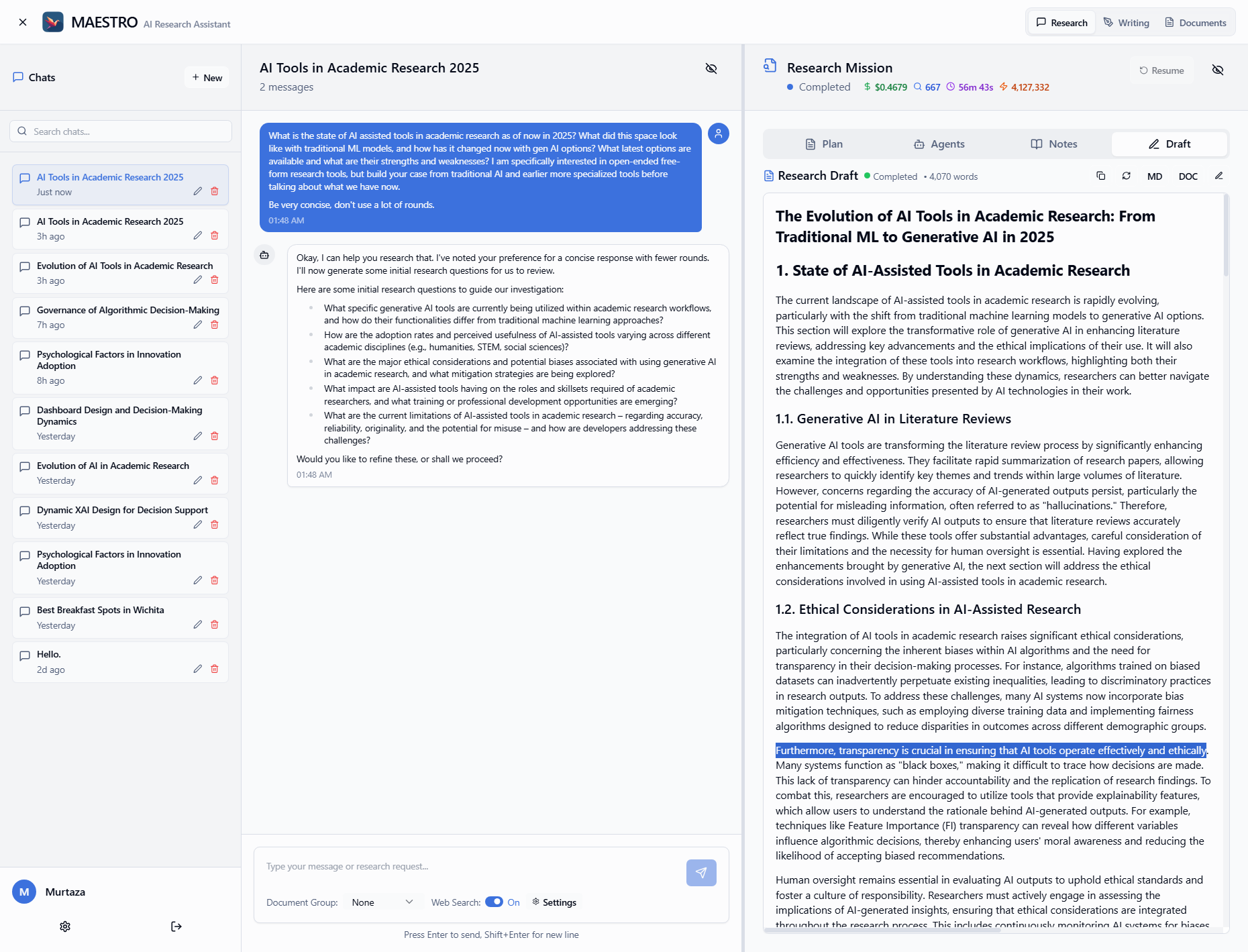

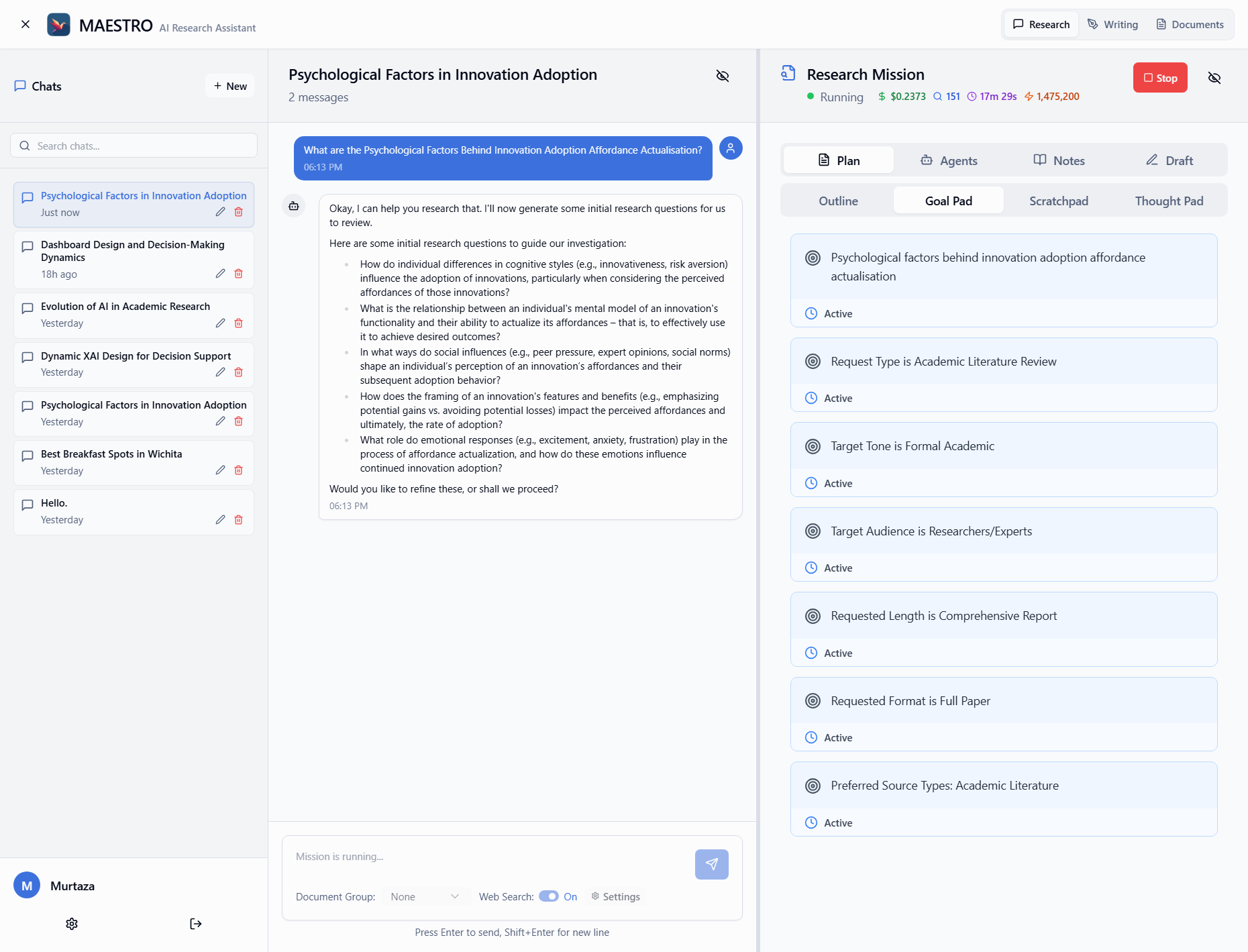

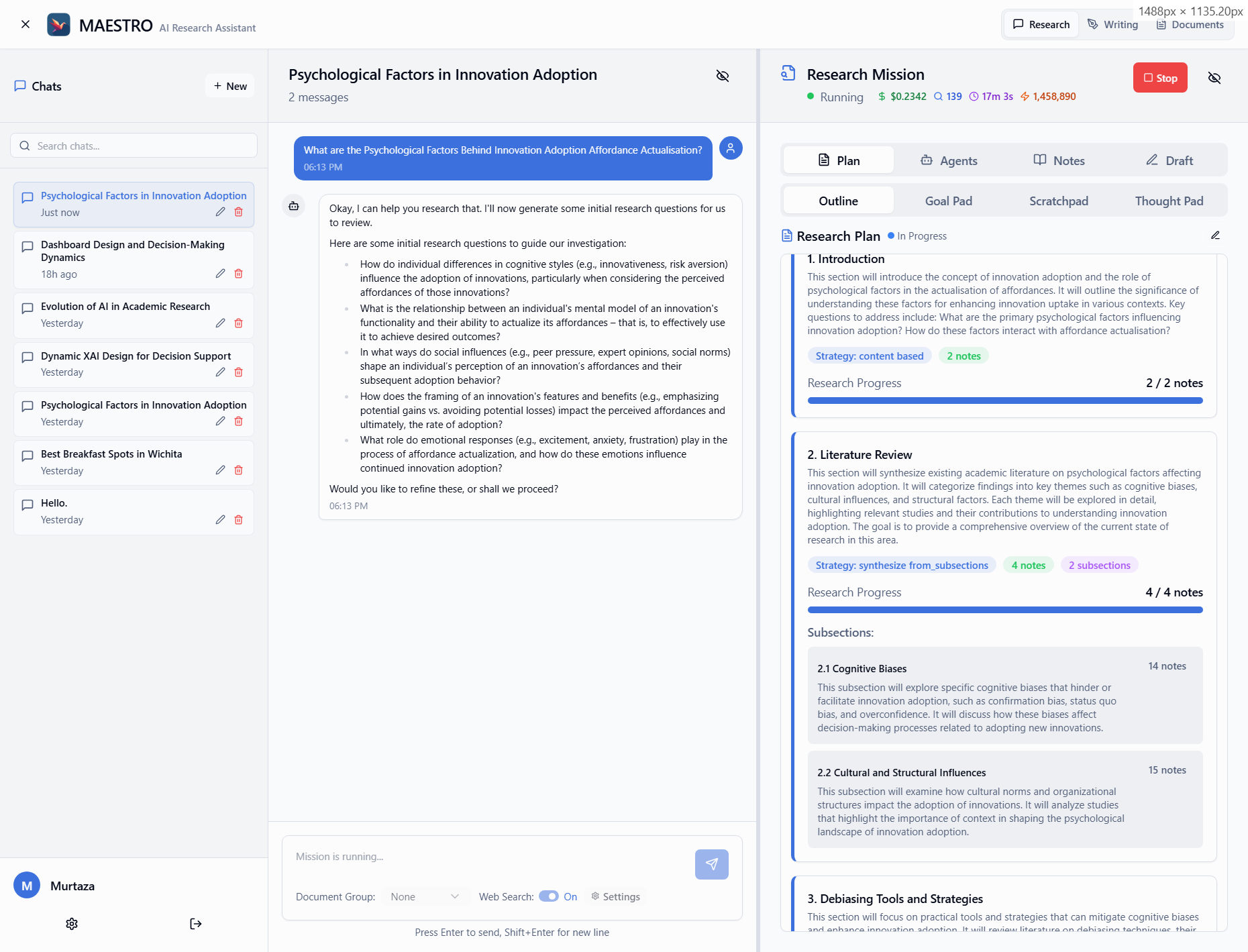

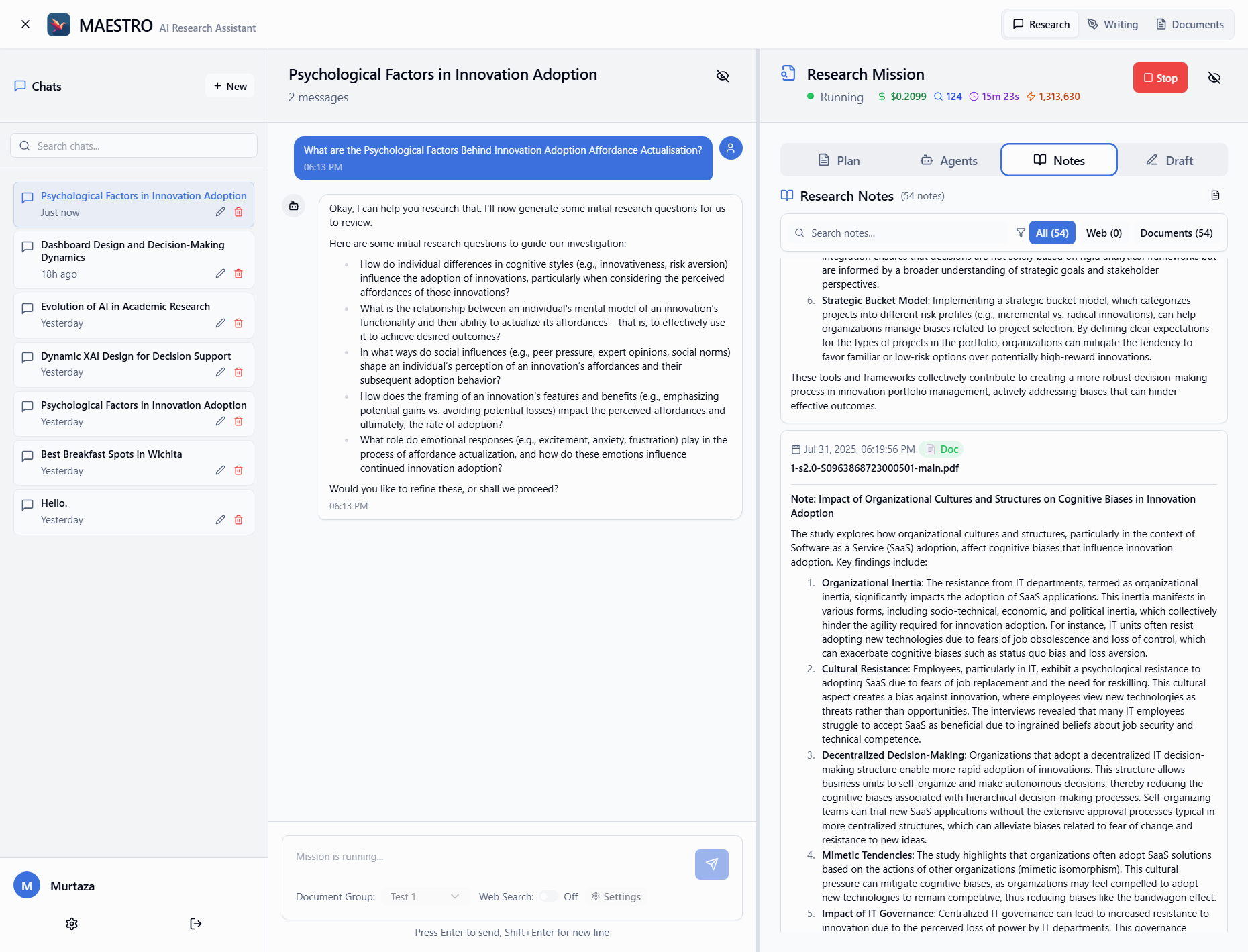

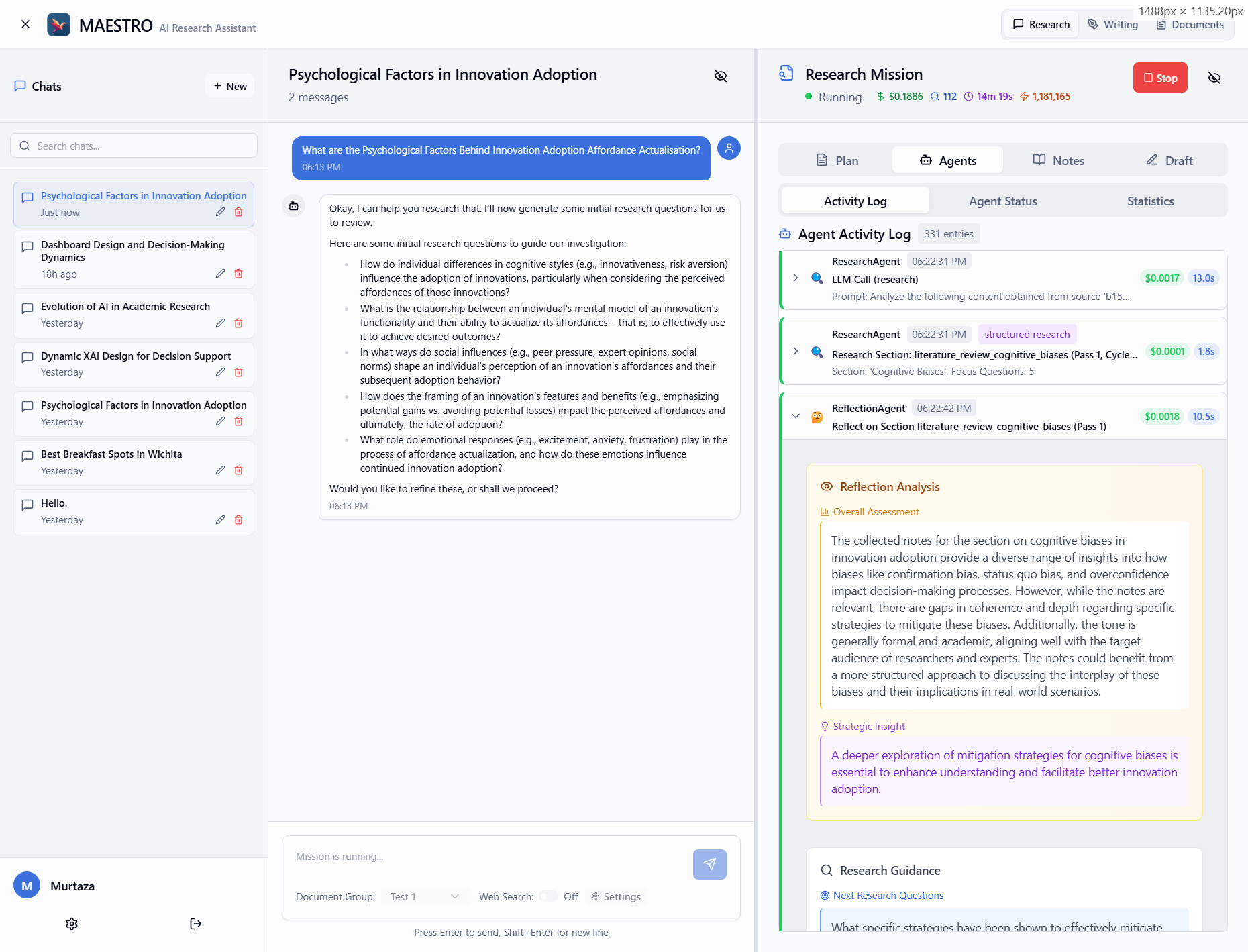

MAESTRO is an AI-powered research platform you can host on your own hardware. It's designed to manage complex research tasks from start to finish in a collaborative research environment. Plan your research, let AI agents carry it out, and watch as they generate detailed reports based on your documents and sources from the web.

- Quick Start - Get up and running in minutes

- Installation - Platform-specific setup

- Configuration - AI providers and settings

- User Guide - Complete feature guide

- Example Reports - Sample outputs from various models

- Troubleshooting - Common issues and solutions

- Docker and Docker Compose (v2.0+)

- 16GB RAM minimum (32GB recommended)

- 30GB free disk space

- API keys for at least one AI provider
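You can check the requirements above with a short script before installing. This is a rough sketch and is not part of MAESTRO. It makes three assumptions: `sort` supports `-V` and `-C` (GNU coreutils or BSD sort), memory is read from Linux's `/proc/meminfo` (so the RAM line reports `unknown` elsewhere), and Docker Compose v2 provides `docker compose version --short`. The helper names `version_ge`, `at_least`, and `check_prereqs` are hypothetical.

```bash
#!/usr/bin/env bash
# Rough prerequisite check (a sketch, not part of MAESTRO).
# Assumptions: `sort -V -C` is available, RAM comes from Linux /proc/meminfo,
# and Docker Compose v2 supports `docker compose version --short`.

# version_ge A B: succeeds if version A >= version B (e.g. version_ge 2.24.1 2.0).
version_ge() {
  # The pair "B, A" is already in version order exactly when A >= B.
  printf '%s\n%s\n' "$2" "$1" | sort -V -C
}

# at_least N MIN: succeeds if integer N >= integer MIN (fails on empty input).
at_least() {
  [ "$1" -ge "$2" ] 2>/dev/null
}

check_prereqs() {
  local compose_ver mem_gb disk_gb

  compose_ver=$(docker compose version --short 2>/dev/null)
  compose_ver=${compose_ver#v}  # tolerate a leading "v"
  if [ -n "$compose_ver" ] && version_ge "$compose_ver" 2.0; then
    echo "OK    Docker Compose $compose_ver"
  else
    echo "FAIL  Docker Compose v2.0+ not found"
  fi

  # MemTotal is reported in kB; round to the nearest GB.
  mem_gb=$(awk '/^MemTotal:/ {printf "%d", $2 / 1048576 + 0.5}' /proc/meminfo 2>/dev/null)
  if at_least "$mem_gb" 16; then
    echo "OK    ${mem_gb} GB RAM"
  else
    echo "WARN  ${mem_gb:-unknown} GB RAM (16 GB minimum, 32 GB recommended)"
  fi

  # Available space (1K blocks) on the filesystem holding the current directory.
  disk_gb=$(df -Pk . | awk 'NR == 2 {printf "%d", $4 / 1048576}')
  if at_least "$disk_gb" 30; then
    echo "OK    ${disk_gb} GB free disk space"
  else
    echo "WARN  ${disk_gb:-unknown} GB free disk space (30 GB required)"
  fi
}
```

Source the script from the directory where you plan to clone MAESTRO, then run `check_prereqs`. The RAM figure is rounded, so a 16 GB machine that reserves memory for hardware may report slightly less.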

```bash
# Clone and set up
git clone https://github.com/murtaza-nasir/maestro.git
cd maestro
./setup-env.sh      # Linux/macOS
# or
.\setup-env.ps1     # Windows PowerShell

# Start services
docker compose up -d

# Monitor startup (the first run takes 5-10 minutes)
docker compose logs -f maestro-backend
```

Access the interface at http://localhost. Default login: `admin`, with the password found in `.env`.
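If you would rather not watch the logs, you can poll the web interface until it responds. This is a minimal sketch that assumes `curl` is installed and that the interface is served at http://localhost once startup finishes. `wait_for_url` is a hypothetical helper. Its default 600-second timeout simply mirrors the 5-10 minute first-start estimate.

```bash
#!/usr/bin/env bash
# Sketch: poll a URL until it answers or a timeout expires.
# Assumption: MAESTRO's web UI is reachable at http://localhost when ready.

# wait_for_url URL [TIMEOUT_SECONDS] [INTERVAL_SECONDS]
wait_for_url() {
  local url=$1 timeout=${2:-600} interval=${3:-10} elapsed=0
  while [ "$elapsed" -lt "$timeout" ]; do
    # -f: HTTP 4xx/5xx count as failure; -s: silent; --max-time caps each attempt.
    if curl -fs --max-time 5 -o /dev/null "$url"; then
      echo "Ready: $url"
      return 0
    fi
    sleep "$interval"
    elapsed=$((elapsed + interval))
  done
  echo "Timed out after ${timeout}s waiting for $url" >&2
  return 1
}
```

For example, `wait_for_url http://localhost 900 10` waits up to 15 minutes and checks every 10 seconds.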

For detailed installation instructions, see the Installation Guide.

- CPU Mode: Use `docker compose -f docker-compose.cpu.yml up -d`

- GPU Support: Automatic detection on Linux/Windows with NVIDIA GPUs

- Network Access: Configure via setup script options
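GPU detection is automatic. However, a startup script may need to fall back to `docker-compose.cpu.yml` explicitly. This sketch makes two assumptions: a GPU counts as usable if `nvidia-smi -L` lists at least one device, and `FORCE_CPU` is a hypothetical override variable, not a MAESTRO setting. The `compose_args` helper is also hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: choose extra `docker compose` arguments based on NVIDIA GPU visibility.
# Assumptions: `nvidia-smi -L` prints lines starting with "GPU" for each device;
# FORCE_CPU is a hypothetical override, not a MAESTRO setting.

# Prints nothing when a GPU is found (use the default Compose file),
# otherwise prints "-f docker-compose.cpu.yml".
compose_args() {
  if [ "${FORCE_CPU:-0}" != "1" ] \
     && command -v nvidia-smi >/dev/null 2>&1 \
     && nvidia-smi -L 2>/dev/null | grep -q '^GPU'; then
    return 0
  fi
  echo "-f docker-compose.cpu.yml"
}
```

Use it as `docker compose $(compose_args) up -d`. Leave the substitution unquoted so that `-f` and the file name become separate arguments.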

For troubleshooting and advanced configuration, see the documentation.

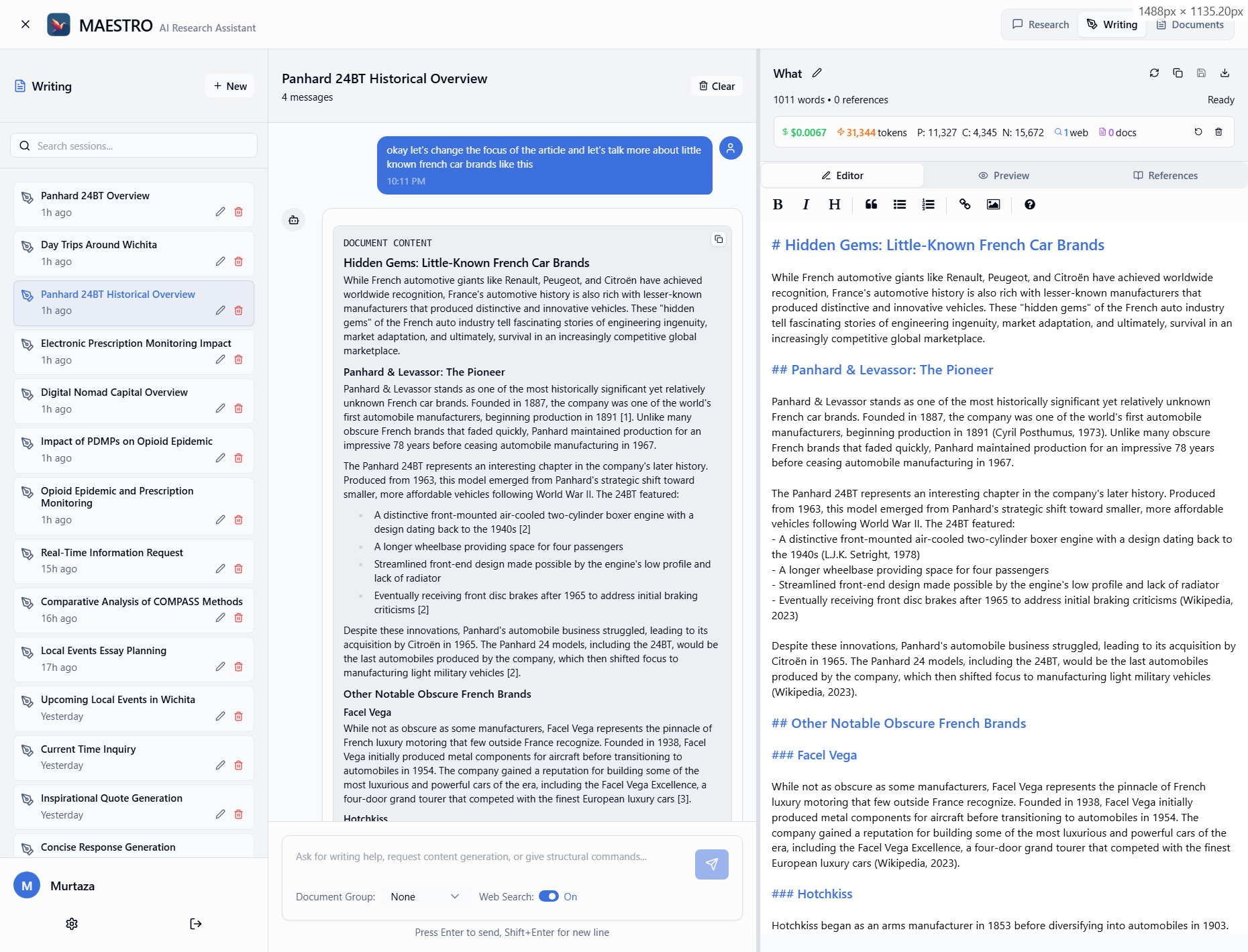

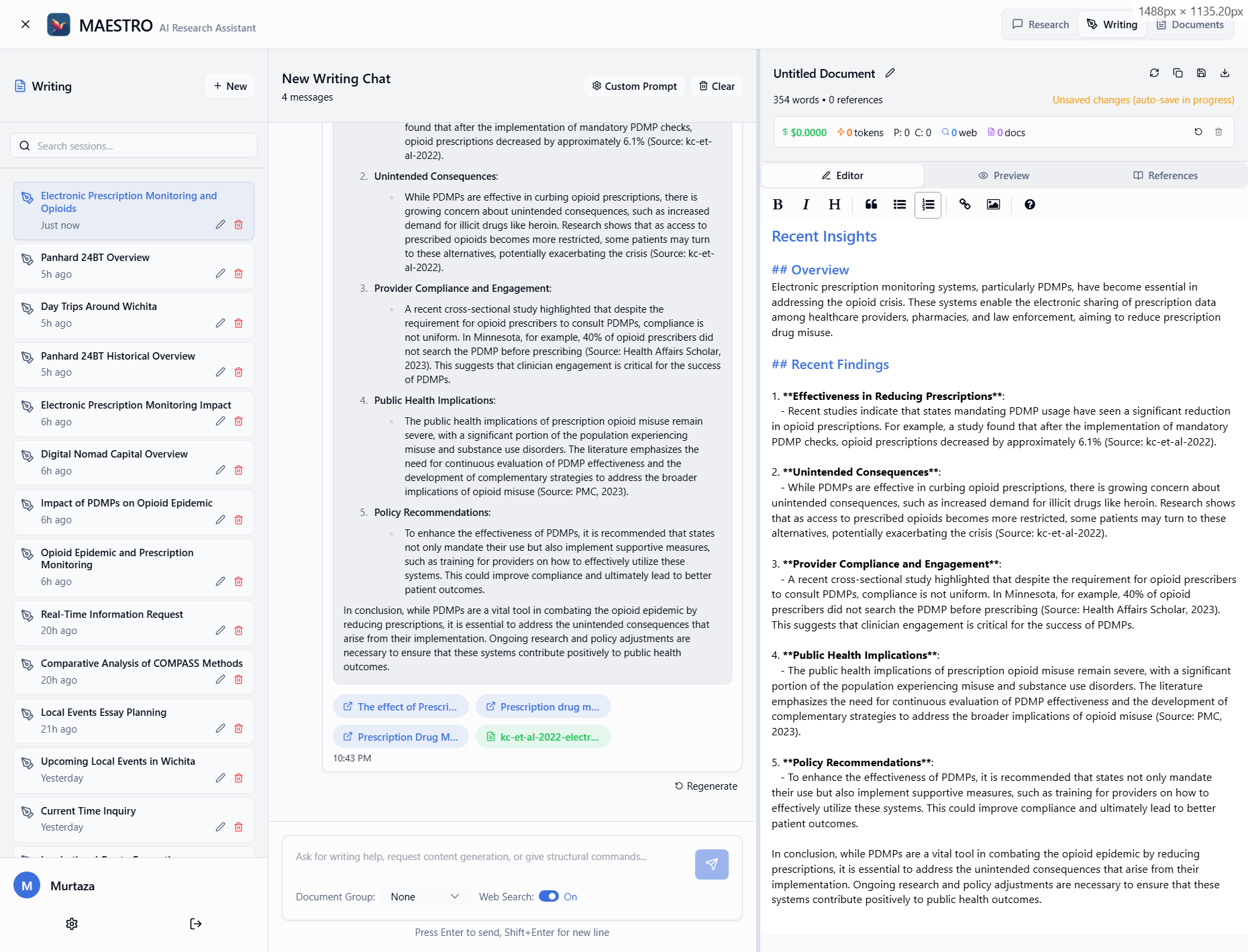

- Multi-Agent Research System: Planning, Research, Reflection, and Writing agents working in concert

- Advanced RAG Pipeline: Dual BGE-M3 embeddings with PostgreSQL + pgvector

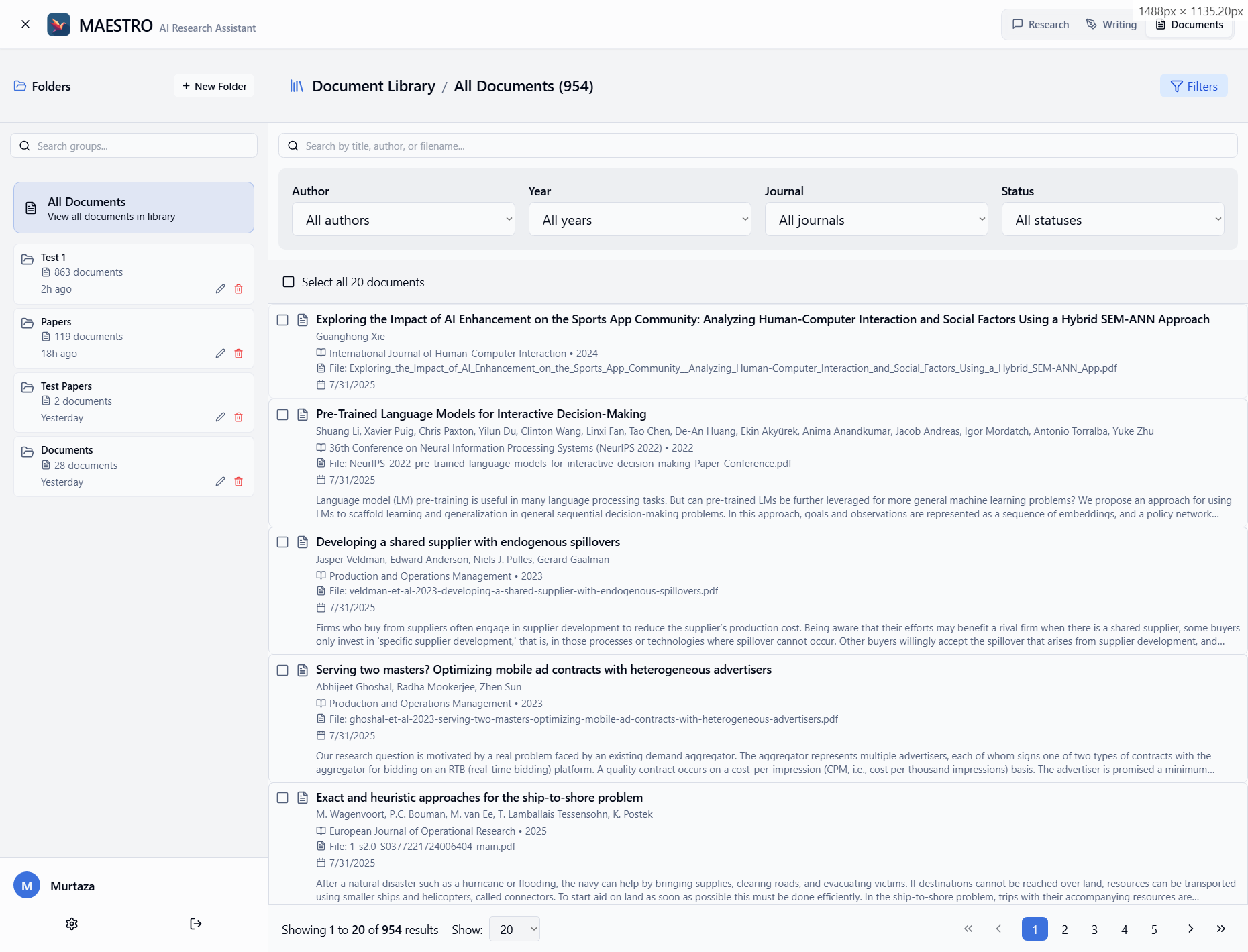

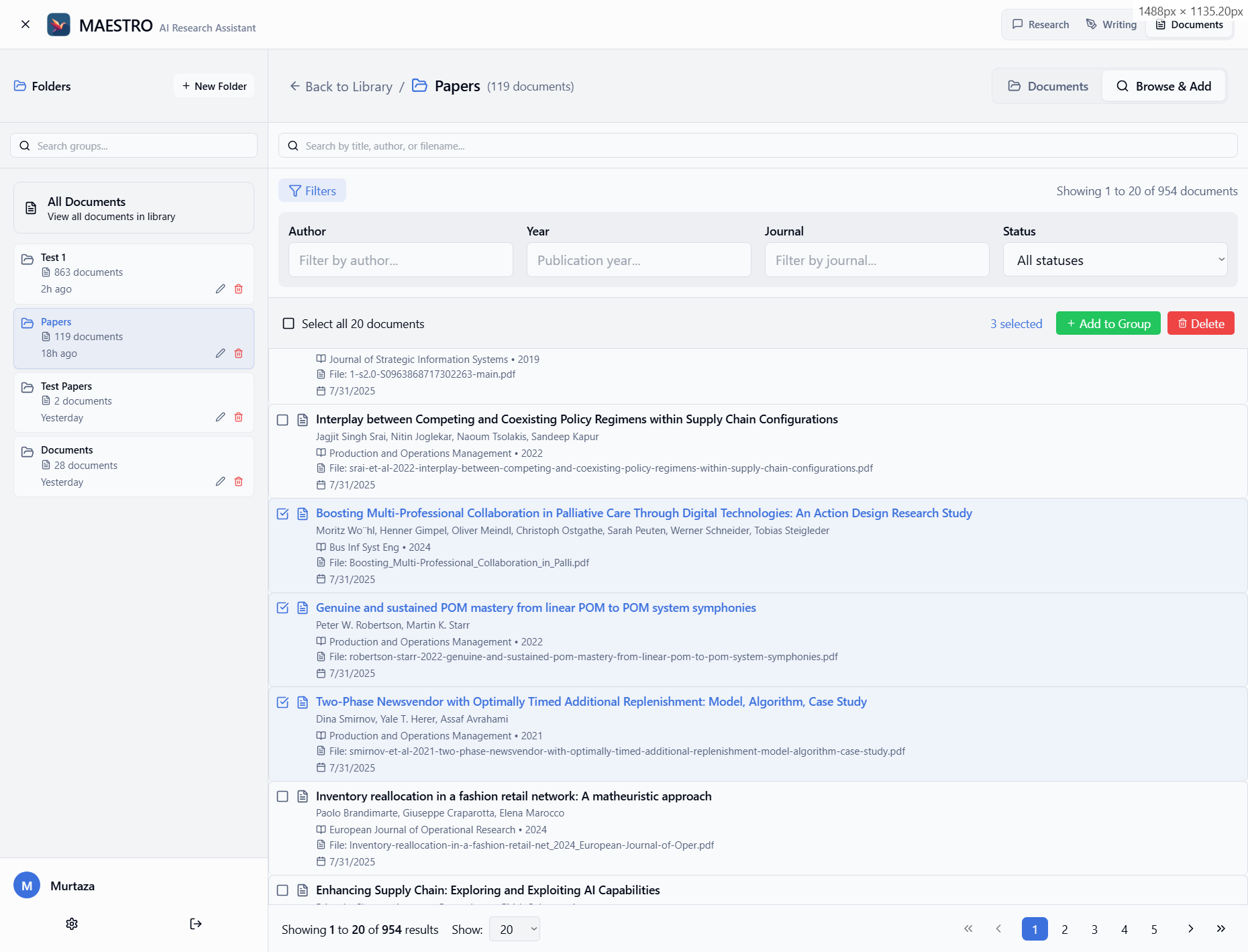

- Document Management: PDF, Word, and Markdown support with semantic search

- Web Integration: Multiple search providers (Tavily, LinkUp, Jina, SearXNG)

- Self-Hosted: Complete control over your data and infrastructure

- Local LLM Support: OpenAI-compatible API for running your own models
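Before you configure a local model, it can help to confirm that the server actually speaks the OpenAI-compatible API. This minimal sketch assumes `curl` is installed and that the server implements the standard `GET /models` route under its base URL (commonly ending in `/v1`). `list_models` and the example URL below are hypothetical placeholders, not MAESTRO settings.

```bash
#!/usr/bin/env bash
# Sketch: query an OpenAI-compatible server's model list.
# Assumptions: the server exposes GET {base}/models; the API key is optional.

# list_models BASE_URL [API_KEY]: prints the raw JSON response, fails on error.
list_models() {
  local base=${1%/} key=${2:-}  # strip a trailing slash from the base URL
  if [ -n "$key" ]; then
    curl -fsS --max-time 10 -H "Authorization: Bearer $key" "$base/models"
  else
    curl -fsS --max-time 10 "$base/models"
  fi
}
```

For example, `list_models http://localhost:8000/v1` (the port is a placeholder) should print a JSON object whose `data` array names the models you can select.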

This project is dual-licensed:

- GNU Affero General Public License v3.0 (AGPLv3): MAESTRO is offered under the AGPLv3 as its open-source license.

- Commercial License: For users or organizations who cannot comply with the AGPLv3, a separate commercial license is available. Please contact the maintainers for more details.

Feedback, bug reports, and feature suggestions are highly valuable. Please feel free to open an Issue on the GitHub repository.