Sudden drop in TPS after total 14k transactions

drandreaskrueger opened this issue · 30 comments

Hello geth team, perhaps you have ideas where this sudden drop in TPS in quorum might come from:

Consensys/quorum#479 (comment)

System information

Geth version: quorum Geth/v1.7.2-stable-3f1817ea/linux-amd64/go1.10.1

OS & Version: Linux

Expected behaviour

constant TPS

Actual behaviour

For the first 14k transactions, we see averages of ~465 TPS, but then it suddenly goes down considerably, to an average of ~270 TPS.

Steps to reproduce the behaviour

See https://gitlab.com/electronDLT/chainhammer/blob/master/quorum-IBFT.md

We've made 14 releases to go-ethereum since then, and I have no idea what modifications Quorum made to our code. I'd gladly debug Ethereum-related issues, but I can't help debug a codebase that's a derivative of ours.

Thx.

Not asking for debugging, but asking for ideas what else to try out.

Not a single idea crossed your mind when you saw that?

Would be nice to see how it behaves on top of the latest go-ethereum code.

Yes.

For that I am looking for something like the quorum 7nodes example or the blk.io crux-quorum-4nodes-docker-compose.

Perhaps this works: https://medium.com/@javahippie/building-a-local-ethereum-network-with-docker-and-geth-5b9326b85f37 any better suggestion?

@karalabe please reopen

I am seeing the exact same problem in geth v1.8.13-stable-225171a4

will document it now

it does not only happen in quorum, but also in geth v1.8.13:

Details here:

oh, and I asked - yes, Quorum is going to catch up soon.

@drandreaskrueger It wouldn't drop if you send transactions via mining node ( aka signer )

Here is the live testnet stats https://test-stats.eos-classic.io from eosclassic POA Testnet https://github.com/eosclassic/eosclassic/commit/7d76e6c86f5b0385755e492240eea4b3fba8d60d

with 1s block time and 100 mil gas limit

explorer: https://test-explorer.eos-classic.io/home

Current tps around 200 tps

> if you send transactions via mining node ( aka signer )

I am.

In geth submitting them to :8545 in this network https://github.com/javahippie/geth-dev

In quorum submitting them to :22001 in this network https://github.com/drandreaskrueger/crux/commits/master

See https://gitlab.com/electronDLT/chainhammer/blob/master/geth.md and https://gitlab.com/electronDLT/chainhammer/blob/master/quorum-IBFT.md#crux-docker-4nodes

So ... the question is still open: Why the drop?

> eosclassic POA Testnet

Interesting.

Does it make sense to also benchmark that one?

It is a public testnet, right? How can I get testnetcoins? How to add my node?

Is it a unique PoA algorithm, or one of those which I have already benchmarked ?

Sorry for the perhaps naive question, I will hopefully be able to look deeper when I am back in the office on Monday.

> eosclassic POA Testnet

Is there perhaps also a dockerized/vagrant-virtualbox version of such a network with a private chain, like the 7nodes example of quorum or the above-mentioned crux 4nodes (both of which run complete networks with all nodes on my one machine)? If yes, then I would rather benchmark that. (My tobalaba.md benchmarking has shown that public PoA chains can lead to very unstable results.)

@drandreaskrueger It is a public testnet with clique engine ( and some modifications for 0 fee transactions ).

There is a testnet faucet running so you can download the node here https://github.com/eosclassic/eosclassic/releases/tag/v2.1.1 and earn some testnet coins here https://faucet.eos-classic.io/

Right now there is no dockerized/vagrant-virtualbox version for our testnet but we are planning to improve them with AWS / Azure template support soon.

The purpose of our benchmark is to improve the existing consensus algorithm for public use and to implement a modified version of DPOS in the Ethereum environment. DPOS will be integrated once its specification is done.

So feel free to use our environment, I will list our testnet/development resources here.

EOS Classic POA Testnet Resources

Explorer: https://test-explorer.eos-classic.io/

Faucet: https://faucet.eos-classic.io/

Wallet: https://wallet.eos-classic.io/

RPC-Node: https://test-node.eos-classic.io/

Network stats: https://test-stats.eos-classic.io

EOS - see

- https://gitlab.com/electronDLT/chainhammer/blob/master/eos.md and

- https://github.com/eosclassic/eosclassic/issues/19

but please keep this thread to answering my geth question!


> the question is still open: Why the drop?

Might have time for it next week, please share any idea, thanks.

any new ideas about that?

You can now super-easily reproduce my results, in less than 10 minutes, with my Amazon AMI image:

https://gitlab.com/electronDLT/chainhammer/blob/master/reproduce.md#readymade-amazon-ami

@drandreaskrueger perhaps a silly question, but one that hasn't been asked: have you eliminated the possibility that this is an artefact of using a docker/VM-based network? Not directly related, but I have in the past had problems when developing on Fabric due to VM memory limitations after a buildup of transactions. It probably isn't the problem, but it's something to check, which is what you originally asked for.

@jdparkinson93 no, I haven't. Not a silly question. It is good to eliminate all possible factors.

Please send the exact commandline/s for creating a self-sufficient node/network without docker.

Then the benchmarking can be run on that, to see whether this replicates or not.

Thanks.

And do reproduce my results, in less than 10 minutes, with my Amazon AMI image:

https://gitlab.com/electronDLT/chainhammer/blob/master/reproduce.md#readymade-amazon-ami

or same file scroll up ... for instructions how to do it on any Linux system.

Then you can do experiments yourself.

> @jdparkinson93 no, I haven't. Not a silly question. It is good to eliminate all possible factors.
>
> Please send the exact commandline/s for creating a self-sufficient node/network without docker.
>
> Then the benchmarking can be run on that, to see whether this replicates or not.
>
> Thanks.

I attempted to do this in the laziest possible way by leveraging ganache, but submitting that many transactions at once caused it problems. However, by drip-feeding them in batches of 100, I was able to reach and exceed 14k txs.

Time (as reported by time unix command) to run the first few batches of 100 txs:

15.02 real 3.55 user 0.28 sys

15.19 real 3.61 user 0.28 sys

15.51 real 3.69 user 0.29 sys

15.65 real 3.57 user 0.27 sys

15.96 real 3.65 user 0.28 sys

16.32 real 3.76 user 0.33 sys

16.29 real 3.53 user 0.27 sys

16.62 real 3.56 user 0.27 sys

16.15 real 3.70 user 0.28 sys

15.99 real 3.53 user 0.27 sys

16.22 real 3.51 user 0.27 sys

After 14000 txs:

21.64 real 3.63 user 0.29 sys

21.19 real 3.62 user 0.28 sys

20.97 real 3.65 user 0.27 sys

20.70 real 3.62 user 0.29 sys

23.41 real 3.67 user 0.29 sys

22.51 real 3.81 user 0.30 sys

21.03 real 3.64 user 0.27 sys

21.76 real 3.77 user 0.30 sys

21.38 real 3.76 user 0.28 sys

21.69 real 3.75 user 0.30 sys

21.67 real 3.65 user 0.28 sys

20.89 real 3.75 user 0.30 sys

21.12 real 3.68 user 0.29 sys

So as we can see, the time does increase somewhat... but I'd argue that's expected in this case because the transactions I sent in were non-trivial and the execution time per transaction is expected to grow as there are more transactions (since the use-case involves matching related things, which in turn involves some iteration).

Apologies that I didn't use simple Ether/ERC20 transfers for the test, as in hindsight that would have been a better comparison. Nevertheless, I'm not convinced I am seeing a massive (unexpected) slowdown of the magnitude your graphs suggest.
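The drip-feeding approach described above can be sketched roughly as follows. This is only an illustration under stated assumptions, not the script that produced the timings above: `fake_send_batch` is a hypothetical stand-in for whatever actually submits the transactions (for example a web3 client pointed at ganache), and the timings here come from `time.perf_counter` rather than the unix `time` command.

```python
import time
from typing import Callable, List, Sequence


def chunked(items: Sequence, size: int) -> List[Sequence]:
    """Split items into consecutive batches of at most `size` elements."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def time_batches(txs: Sequence,
                 send_batch: Callable[[Sequence], None],
                 batch_size: int = 100) -> List[float]:
    """Send txs batch by batch and return the wall-clock seconds per batch."""
    durations = []
    for batch in chunked(txs, batch_size):
        start = time.perf_counter()
        send_batch(batch)  # blocks until the whole batch has been submitted
        durations.append(time.perf_counter() - start)
    return durations


def fake_send_batch(batch: Sequence) -> None:
    """Hypothetical placeholder: a real version would submit each tx to a node."""
    for _ in batch:
        pass


# Placeholder "transactions"; a real run would use signed tx objects.
durations = time_batches(list(range(1_000)), fake_send_batch)
print(f"{len(durations)} batches timed")
```

Plotting the returned list against the batch index would show whether the per-batch time rises gradually or jumps at some transaction count.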

Thanks a lot, @jdparkinson93 - great that you try to reproduce that dropoff with different means.

Please also do reproduce my results, in less than 10 minutes, with my Amazon AMI image:

https://gitlab.com/electronDLT/chainhammer/blob/master/reproduce.md#readymade-amazon-ami

Or with a tiny bit more time ... on any linux system

https://gitlab.com/electronDLT/chainhammer/blob/master/reproduce.md

Thanks.

Today, I did a quorum run on a really fast AWS machine:

https://gitlab.com/electronDLT/chainhammer/blob/master/reproduce.md#quorum-crux-ibft

And got these results:

https://gitlab.com/electronDLT/chainhammer/blob/master/quorum-IBFT.md#amazon-aws-results

with a mild drop of TPS in the last third.

@drandreaskrueger There isn't really any drop in your latest test - if anything, it appeared to incrementally speed up and then reach a ceiling. What are your thoughts regarding these new results?

> After 14000 txs ... So as we can see, the time does increase somewhat

Yes, interesting. Thanks a lot, @jdparkinson93 !

You see a (21-15) / 15 = 40% increase in "real time".

I see a (350 - 220) / 350 ~40% drop in TPS.

Could be the same signal, no?

Could you plot your first column over the whole range of 20k transactions?

I would like to see whether you also see a rather sudden drop, like in most of my earlier experiments.
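To make the comparison above concrete, here is a small sketch that recomputes both figures from the numbers posted in this thread (the "real" column of the batch timings, and the 350 and 220 TPS values). One caveat it illustrates: throughput is the inverse of batch time, so a given percentage increase in time corresponds to a smaller percentage drop in throughput. The variable names are illustrative, and the batch size of 100 is taken from the ganache description.

```python
from statistics import mean

BATCH_SIZE = 100  # transactions per timed batch, as described for the ganache run

# "real" seconds per batch, copied from the timings posted above
early = [15.02, 15.19, 15.51, 15.65, 15.96, 16.32,
         16.29, 16.62, 16.15, 15.99, 16.22]
late = [21.64, 21.19, 20.97, 20.70, 23.41, 22.51, 21.03,
        21.76, 21.38, 21.69, 21.67, 20.89, 21.12]


def relative_change(before: float, after: float) -> float:
    """Fractional change from `before` to `after` (negative means a drop)."""
    return (after - before) / before


early_mean, late_mean = mean(early), mean(late)

# Change in wall-clock time per batch
time_change = relative_change(early_mean, late_mean)

# Change in throughput (tx per second) implied by those batch times
throughput_change = relative_change(BATCH_SIZE / early_mean,
                                    BATCH_SIZE / late_mean)

# Change between the two TPS values quoted for the geth/quorum runs
quoted_tps_change = relative_change(350, 220)

print(f"batch time change:      {time_change:+.1%}")
print(f"implied throughput:     {throughput_change:+.1%}")
print(f"quoted TPS change:      {quoted_tps_change:+.1%}")
```

With the posted numbers, the mean batch time rises by about 35%, which implies a throughput drop of about 26%, compared with the roughly 37% drop between 350 and 220 TPS. The signals point in the same direction, but the two percentages do not measure the same quantity.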

> didn't use simple Ether/ERC20 transfers for the test

I am always overwriting one uint in a smart contract. Easiest to understand here; but then later optimized here.

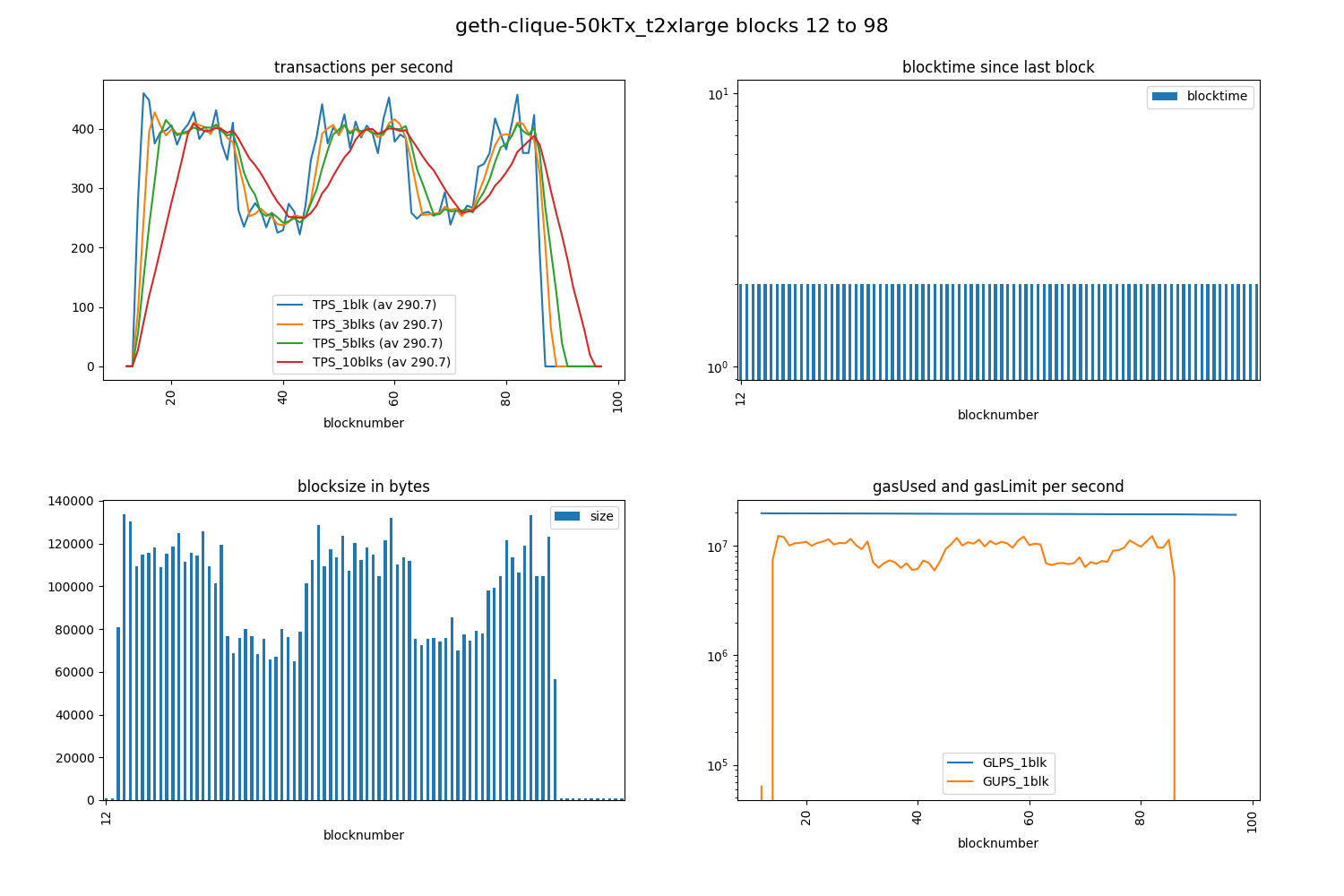

I have new information for you guys.

I ran the same thing again, BUT this time with 50k transactions (not only 20k).

Have a look at this:

https://github.com/drandreaskrueger/chainhammer/blob/eb97301c6f054cbe6e6278c91a138d10fa04a779/reader/img/geth-clique-50kTx_t2xlarge_tps-bt-bs-gas_blks12-98.png

Isn't that odd?

I would not have expected that.

TPS is dropping considerably after ~14k transactions - but then it actually recovers. Rinse repeat.

More info, a log of the whole experiment, etc.:

https://github.com/drandreaskrueger/chainhammer/blob/master/geth.md#run-2

EDIT: sorry guys, after massive reorg, some paths had changed. Repaired that now, image is visible again.

> sorry guys, after massive reorg, some paths had changed. Repaired that now, image is visible again.

Thank you for putting so much work into debugging this issue.

> Thank you for putting so much work into debugging this issue.

Happy to help. I don't speak Go yet, and I am not debugging it myself ... but I provide you with a tool that makes debugging this problem ... much easier.

chainhammer - I have just published a brand new version v55:

https://github.com/drandreaskrueger/chainhammer/#quickstart

Instead of installing everything to your main work computer, better use (a virtualbox Debian/Ubuntu installation or) my Amazon AMI to spin up a t2.medium machine, see docs/cloud.md#readymade-amazon-ami.

Then all you need is this line:

CH_TXS=50000 CH_THREADING="threaded2 20" ./run.sh "YourNaming-Geth" geth-clique

and afterwards check results/runs/ to find an autogenerated results page, with time series diagrams.

Hope that helps! Keep me posted please.

@drandreaskrueger We recently made some changes in the txpool so that local transactions are not stalled for that long, along with some improvements to the memory management. Previously we would need to reheap the txpool whenever a new tx was sent, which created a lot of pressure on the garbage collector. I don't know how to use AWS and have no account there. Could you maybe rerun your chainhammer once again with the newest release? Thank you very much for all your work!
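As a rough way to picture why reheaping on every new transaction is expensive, the toy model below compares rebuilding a heap on each insert with pushing incrementally. It is not geth's txpool code (which is written in Go) and it does not model garbage-collector pressure. The "pool" here is just a Python min-heap of made-up integer prices, and both function names are hypothetical.

```python
import heapq
from typing import List


def insert_with_full_reheap(pool: List[int], price: int) -> None:
    """Append, then rebuild the whole heap: O(n) work on every insert."""
    pool.append(price)
    heapq.heapify(pool)


def insert_incremental(pool: List[int], price: int) -> None:
    """Sift the new element into place: O(log n) work on every insert."""
    heapq.heappush(pool, price)


# Made-up prices, purely for illustration
prices = [7, 3, 9, 1, 4, 8, 2]

reheaped: List[int] = []
incremental: List[int] = []
for p in prices:
    insert_with_full_reheap(reheaped, p)
    insert_incremental(incremental, p)

# Both strategies keep the cheapest element at the front of the heap
print(reheaped[0], incremental[0])
```

Both variants end up with a valid heap. The difference is only in how much work each insert does as the pool grows, which is the kind of per-transaction overhead the change described above is meant to reduce.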

Thank you very much for your message, @MariusVanDerWijden. Happy to be of use.

Right now, it's not my (paid) focus, honestly - but ... I intended to rerun 'everything chainhammer' again with the -then newest- versions of all available Ethereum clients too, at some time in the future anyways. I could also imagine to then publish all the -updated- results in some paper, so ... this is good to know. Great. Thanks for getting back to me. Much appreciated.

Cool that you made some changes here, and that perhaps this issue might have something to do with those symptoms, and you improved the client. Great.

Oh, and ... AWS is really not important here. If you want to, try yourself now - I recommend to first watch the (boring) 15 minutes video, also these step by step instructions had been really helpful for other users.

It shouldn't be difficult for you to get new geth results, using chainhammer. It could also be a useful tool for you, to identify and fix further bottlenecks, especially because it is so simplistic.

This ticket/investigation is getting quite old, and I suspect maybe not relevant with the current code. I'm going to close this for now.