CVPR 2025

Project Page | Paper | Data | Checkpoints

Chih-Hao Lin1,2,

Jia-Bin Huang1,3,

Zhengqin Li1,

Zhao Dong1,

Christian Richardt1,

Tuotuo Li1,

Michael Zollhöfer1,

Johannes Kopf1,

Shenlong Wang2,

Changil Kim1

1Meta, 2University of Illinois at Urbana-Champaign, 3University of Maryland, College Park

The code has been tested on:

- OS: Ubuntu 22.04.4 LTS

- GPU: NVIDIA GeForce RTX 4090

- Driver Version: 535

- CUDA Version: 12.2

- nvcc: 11.7

Please install anaconda/miniconda and run the following script to set up the environment:

    bash scripts/conda_env.sh

The package details can be found in environment.yml.
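Before running the setup script, it can help to compare the local toolchain with the tested configuration listed above. The following is a minimal sketch, not part of the repository: the helper names `parse_nvcc_release` and `local_nvcc_release` are hypothetical, and it only assumes that `nvcc --version` prints a line containing `release <major>.<minor>`.

```python
import re
import shutil
import subprocess

# nvcc release from the tested configuration listed in this README.
TESTED_NVCC = (11, 7)


def parse_nvcc_release(version_text):
    """Extract (major, minor) from `nvcc --version` output, or None."""
    match = re.search(r"release (\d+)\.(\d+)", version_text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def local_nvcc_release():
    """Return the local nvcc release, or None if nvcc is not on PATH."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "--version"], capture_output=True, text=True)
    return parse_nvcc_release(result.stdout)
```

Comparing `local_nvcc_release()` with `TESTED_NVCC` only reports whether the local toolchain differs from the tested one. A mismatch does not by itself mean the code will fail.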

- Please download the datasets and edit the paths (DATASET_ROOT) in the training/rendering scripts accordingly. We provide 8 scenes in total: 2 real scenes from ScanNet++ (scannetpp/), 2 real scenes from FIPT (fipt/real/), and 4 synthetic scenes from FIPT (fipt/indoor_synthetic/).

- Geometry is reconstructed and provided for each scene.

- HDR images (*.exr) are not used by IRIS, but they are also provided for the FIPT scenes.

- Please download the checkpoints and put them under checkpoints/. We provide checkpoints for all the scenes in the dataset.
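After downloading, a quick layout check can catch a wrong path before a long training run. The sketch below is illustrative only. It assumes that the three dataset folders named above were unpacked under one common directory (called `download_dir` here, a hypothetical name; the DATASET_ROOT used in the scripts may point to a deeper folder) and that checkpoints live in a `checkpoints` folder.

```python
from pathlib import Path

# Sub-directories named in this README. Placing all of them under a single
# download directory is an assumption of this sketch.
EXPECTED_DATASET_DIRS = ("scannetpp", "fipt/real", "fipt/indoor_synthetic")


def missing_paths(download_dir, checkpoint_dir="checkpoints"):
    """Return the expected directories that do not exist yet."""
    root = Path(download_dir)
    candidates = [root / sub for sub in EXPECTED_DATASET_DIRS]
    candidates.append(Path(checkpoint_dir))
    return [str(path) for path in candidates if not path.is_dir()]
```

An empty list from `missing_paths(...)` means every expected folder was found. It does not verify the contents of the folders.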

The training scripts are scripts/{dataset}/{scene}/train.sh. For example, run the following to train on the bathroom2 scene in ScanNet++:

    bash scripts/scannetpp/bathroom2/train.sh

The hyper-parameters are listed in configs/config.py and can be adjusted at the top of each script. Training consists of several stages:

- Bake the surface light field (SLF), and save it as checkpoints/{exp_name}/bake/vslf.npz:

    python slf_bake.py --dataset_root $DATASET_ROOT --scene $SCENE \
        --output checkpoints/$EXP/bake --res_scale $RES_SCALE \
        --dataset $DATASET

- Extract the emitter mask, and save it as checkpoints/{exp_name}/bake/emitter.pth:

    python extract_emitter_ldr.py \
        --dataset_root $DATASET_ROOT --scene $SCENE \
        --output checkpoints/$EXP/bake \
        --dataset $DATASET --res_scale $RES_SCALE \
        --threshold 0.99

- Initialize BRDF and emitter radiance:

    python initialize.py --experiment_name $EXP --max_epochs 5 \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --voxel_path checkpoints/$EXP/bake/vslf.npz \
        --emitter_path checkpoints/$EXP/bake/emitter.pth \
        --has_part $HAS_PART --val_frame $VAL_FRAME \
        --SPP $SPP --spp $spp --crf_basis $CRF_BASIS --res_scale $RES_SCALE

- Update emitter radiance:

    python extract_emitter_ldr.py --mode update \
        --dataset_root $DATASET_ROOT --scene $SCENE \
        --output checkpoints/$EXP/bake --res_scale $RES_SCALE \
        --ckpt checkpoints/$EXP/init.ckpt \
        --dataset $DATASET

- Bake shading maps, and save them in outputs/{exp_name}/shading:

    python bake_shading.py \
        --dataset_root $DATASET_ROOT --scene $SCENE \
        --dataset $DATASET --res_scale $RES_SCALE \
        --slf_path checkpoints/$EXP/bake/vslf.npz \
        --emitter_path checkpoints/$EXP/bake/emitter.pth \
        --output outputs/$EXP/shading

- Optimize BRDF and camera CRF:

    python train_brdf_crf.py --experiment_name $EXP \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --has_part $HAS_PART --val_frame $VAL_FRAME --res_scale $RES_SCALE \
        --max_epochs 2 --dir_val val_0 \
        --ckpt_path checkpoints/$EXP/init.ckpt \
        --voxel_path checkpoints/$EXP/bake/vslf.npz \
        --emitter_path checkpoints/$EXP/bake/emitter.pth \
        --cache_dir outputs/$EXP/shading \
        --SPP $SPP --spp $spp --lp 0.005 --la 0.01 \
        --l_crf_weight 0.001 --crf_basis $CRF_BASIS

- Refine surface light field:

    python slf_refine.py --dataset_root $DATASET_ROOT --scene $SCENE \
        --output checkpoints/$EXP/bake --load vslf.npz --save vslf_0.npz \
        --dataset $DATASET --res_scale $RES_SCALE \
        --ckpt checkpoints/$EXP/last_0.ckpt --crf_basis $CRF_BASIS

- Refine emitter radiance:

    python train_emitter.py --experiment_name $EXP \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --has_part $HAS_PART --val_frame $VAL_FRAME --res_scale $RES_SCALE \
        --max_epochs 1 --dir_val val_0_emitter \
        --ckpt_path checkpoints/$EXP/last_0.ckpt \
        --voxel_path checkpoints/$EXP/bake/vslf_0.npz \
        --emitter_path checkpoints/$EXP/bake/emitter.pth \
        --SPP $SPP --spp $spp --crf_basis $CRF_BASIS

- Refine shading maps:

    python refine_shading.py \
        --dataset_root $DATASET_ROOT --scene $SCENE \
        --dataset $DATASET --res_scale $RES_SCALE \
        --slf_path checkpoints/$EXP/bake/vslf_0.npz \
        --emitter_path checkpoints/$EXP/bake/emitter.pth \
        --ckpt checkpoints/$EXP/last_0.ckpt \
        --output outputs/$EXP/shading

The rendering scripts are scripts/{dataset}/{scene}/render.sh. For example, run the following for the bathroom2 scene in ScanNet++:

    bash scripts/scannetpp/bathroom2/render.sh

The scripts contain three parts:

- Render train/test frames, including RGB, BRDF, and emission maps. The output is saved at outputs/{exp_name}/output/{split}:

    python render.py --experiment_name $EXP --device 0 \
        --ckpt last_1.ckpt \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --res_scale $RES_SCALE \
        --emitter_path checkpoints/$EXP/bake \
        --output_path 'outputs/'$EXP'/output' \
        --split 'test' \
        --SPP $SPP --spp $spp --crf_basis $CRF_BASIS

- Render videos of RGB, BRDF, and emission maps. The output is saved at outputs/{exp_name}/video:

    python render_video.py --experiment_name $EXP --device 0 \
        --ckpt last_1.ckpt \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --res_scale $RES_SCALE \
        --emitter_path checkpoints/$EXP/bake \
        --output_path 'outputs/'$EXP'/video' \
        --split 'test' \
        --SPP $SPP --spp $spp --crf_basis $CRF_BASIS

- Render relighting videos. The output is saved at outputs/{exp_name}/relight:

    python render_relight.py --experiment_name $EXP --device 0 \
        --ckpt last_1.ckpt --mode traj \
        --dataset $DATASET $DATASET_ROOT --scene $SCENE \
        --res_scale $RES_SCALE \
        --emitter_path checkpoints/$EXP/bake \
        --output_path 'outputs/'$EXP'/relight/video_relight_0' \
        --split 'test' \
        --light_cfg 'configs/scannetpp/bathroom2/relight_0.yaml' \
        --SPP $SPP --spp $spp --crf_basis $CRF_BASIS

- The relighting config files are configs/{dataset}/{scene}/*.yaml. They are passed via the --light_cfg parameter, and different files generate different relighting results.

- For object insertion, the emitter geometry and average radiance are extracted with scripts/extract_emitter.sh. The assets for insertion can be downloaded here and put under outputs.
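The training and rendering steps above read and write a fixed set of files, so a small status check can show how far an experiment has progressed. The following is a sketch under stated assumptions, not part of the repository. The names `STAGE_OUTPUTS`, `stage_status`, and `first_missing_stage` are hypothetical, paths are taken relative to the repository root, and the entries marked "assumed" are inferred from how later commands consume the files rather than stated explicitly.

```python
from pathlib import Path

# (stage, path template) pairs collected from the commands in this README.
STAGE_OUTPUTS = [
    ("bake SLF", "checkpoints/{exp}/bake/vslf.npz"),
    ("extract emitter mask", "checkpoints/{exp}/bake/emitter.pth"),
    ("initialize BRDF/emitter", "checkpoints/{exp}/init.ckpt"),  # assumed
    ("bake shading", "outputs/{exp}/shading"),
    ("optimize BRDF/CRF", "checkpoints/{exp}/last_0.ckpt"),  # assumed
    ("refine SLF", "checkpoints/{exp}/bake/vslf_0.npz"),
    ("render frames", "outputs/{exp}/output"),
    ("render video", "outputs/{exp}/video"),
    ("render relighting", "outputs/{exp}/relight"),
]


def stage_status(exp_name, repo_root="."):
    """Map each stage name to True if its output path exists."""
    root = Path(repo_root)
    return {
        stage: (root / template.format(exp=exp_name)).exists()
        for stage, template in STAGE_OUTPUTS
    }


def first_missing_stage(exp_name, repo_root="."):
    """Return the first stage whose output is absent, or None if all exist."""
    for stage, done in stage_status(exp_name, repo_root).items():
        if not done:
            return stage
    return None
```

The check only tests for existence. Steps that update a file in place, such as the emitter radiance update or the shading refinement, cannot be distinguished this way.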

The inverse rendering metric of FIPT synthetic scenes is calculated by (please modify the paths accordingly):

    python -m utils.metric_brdf

- The camera poses can be estimated with the NeRFstudio pipeline (transforms.json).

- The surface albedo is estimated with IRISFormer, and can be replaced with RGB-X for even better performance.

- Surface normal is estimated with OmniData for geometry reconstruction, and can be replaced with more recent works for even better performance.

- Geometry is reconstructed with BakedSDF in SDFStudio. We use a customized version and run the following:

    # Optimize SDF
    python scripts/train.py bakedsdf-mlp \
        --output-dir [output_dir] --experiment-name [experiment_name] \
        --trainer.steps-per-eval-image 5000 --trainer.steps-per-eval-all-images 50000 \
        --trainer.max-num-iterations 250001 --trainer.steps-per-eval-batch 5000 \
        --pipeline.model.sdf-field.bias 1.5 \
        --pipeline.model.sdf-field.inside-outside True \
        --pipeline.model.eikonal-loss-mult 0.01 \
        --pipeline.model.num-neus-samples-per-ray 24 \
        --machine.num-gpus 1 \
        --pipeline.model.mono-normal-loss-mult 0.1 \
        panoptic-data \
        --data [path_to_data] \
        --panoptic_data False --mono_normal_data True --panoptic_segment False \
        --orientation-method none --center-poses False --auto-scale-poses False

    # Extract mesh
    python scripts/extract_mesh.py --load-config [exp_dir]/config.yml \
        --output-path [exp_dir]/mesh.ply \
        --bounding-box-min -2.0 -2.0 -2.0 --bounding-box-max 2.0 2.0 2.0 \
        --resolution 2048 --marching_cube_threshold 0.001 \
        --create_visibility_mask True --simplify-mesh True

IRIS is CC BY-NC 4.0 licensed, as found in the LICENSE file.

If you find our work useful, please consider citing:

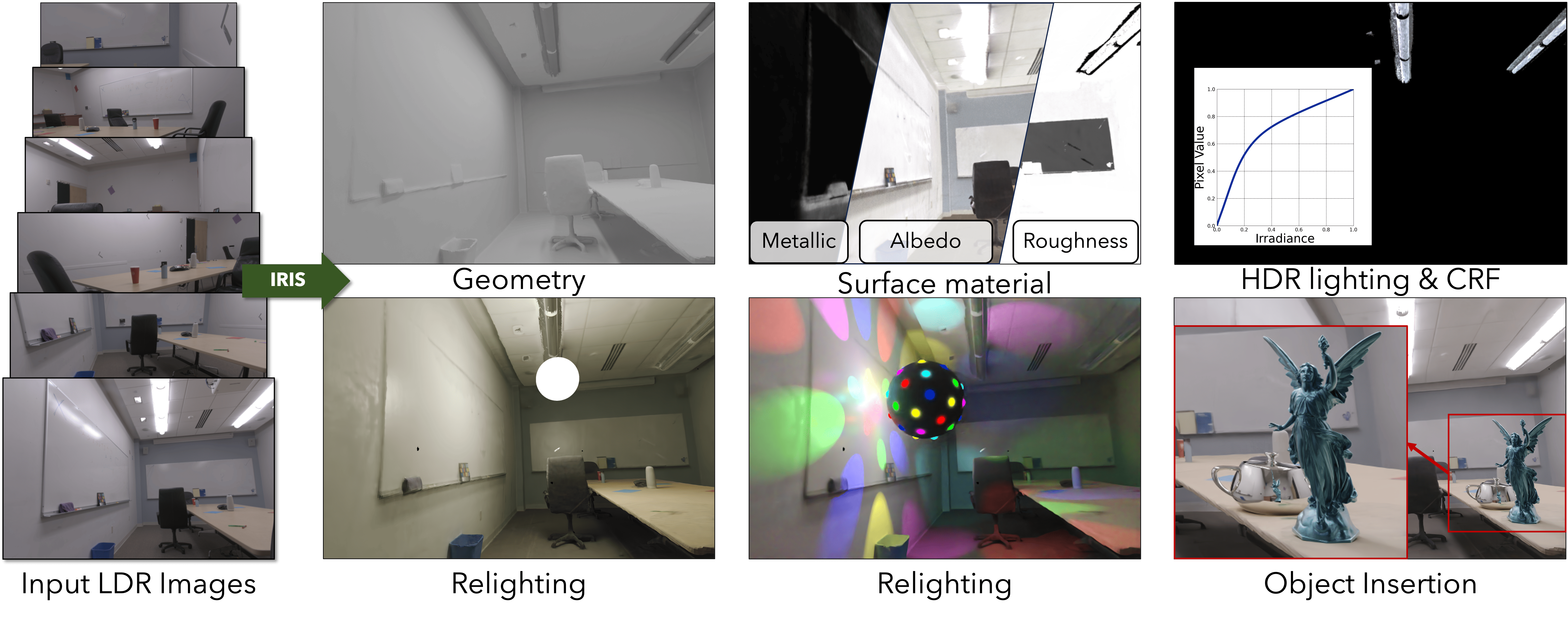

    @inproceedings{lin2025iris,
      title     = {{IRIS}: Inverse rendering of indoor scenes from low dynamic range images},
      author    = {Lin, Chih-Hao and Huang, Jia-Bin and Li, Zhengqin and Dong, Zhao and
                   Richardt, Christian and Li, Tuotuo and Zollh{\"o}fer, Michael and
                   Kopf, Johannes and Wang, Shenlong and Kim, Changil},
      booktitle = {Conference on Computer Vision and Pattern Recognition (CVPR)},
      year      = {2025}
    }

Our code is based on FIPT. Some experiment results are provided by the authors of I^2-SDF and Li et al. We thank the authors for their excellent work!