Quick and dirty implementations of some interesting works related to deep learning. Contributions are always welcome; you can simply add links to your repository.

You may use any deep learning framework, such as PyTorch, Theano, MXNet, or TensorFlow. However, you should keep the implementation as simple as possible.

Comparison of various SGD algorithms on logistic regression and MLP. The relationships among these algorithms are shown in the following figure; please refer to sgd-comparison for details.

Refer to jcjohnson.
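The README does not list which algorithms the comparison covers, so the following is only a minimal sketch under assumptions: it picks three common update rules (plain SGD, momentum, Adam) and applies them to a logistic-regression model on a hypothetical 1-D toy dataset. It uses only the Python standard library. Learning rates and epoch counts are illustrative, not tuned, and none of the names here come from sgd-comparison.

```python
import math
import random

# Hypothetical toy data: 1-D inputs with label 1 when x > 0 (linearly separable).
_rng = random.Random(0)
DATA = [(x, 1.0 if x > 0 else 0.0) for x in (_rng.uniform(-2.0, 2.0) for _ in range(100))]


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def loss(params):
    """Mean binary cross-entropy of the model p(y=1|x) = sigmoid(w*x + b)."""
    w, b = params
    total = 0.0
    for x, y in DATA:
        p = min(max(sigmoid(w * x + b), 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1.0 - y) * math.log(1.0 - p)
    return total / len(DATA)


def grad(params, x, y):
    # Gradient of the per-sample cross-entropy with respect to (w, b).
    w, b = params
    err = sigmoid(w * x + b) - y
    return [err * x, err]


def sgd(lr=0.1):
    def step(params, g, state):
        return [p - lr * gi for p, gi in zip(params, g)]
    return step


def momentum(lr=0.1, beta=0.9):
    def step(params, g, state):
        v = state.setdefault("v", [0.0] * len(params))  # velocity buffer
        for i, gi in enumerate(g):
            v[i] = beta * v[i] + gi
        return [p - lr * vi for p, vi in zip(params, v)]
    return step


def adam(lr=0.05, b1=0.9, b2=0.999, eps=1e-8):
    def step(params, g, state):
        m = state.setdefault("m", [0.0] * len(params))  # first-moment estimate
        v = state.setdefault("v", [0.0] * len(params))  # second-moment estimate
        t = state.get("t", 0) + 1
        state["t"] = t
        new = []
        for i, gi in enumerate(g):
            m[i] = b1 * m[i] + (1 - b1) * gi
            v[i] = b2 * v[i] + (1 - b2) * gi * gi
            m_hat = m[i] / (1 - b1 ** t)  # bias correction
            v_hat = v[i] / (1 - b2 ** t)
            new.append(params[i] - lr * m_hat / (math.sqrt(v_hat) + eps))
        return new
    return step


def train(step, epochs=5):
    # One parameter update per training example, visited in a fixed order.
    params, state = [0.0, 0.0], {}
    for _ in range(epochs):
        for x, y in DATA:
            params = step(params, grad(params, x, y), state)
    return params


OPTIMIZERS = {"sgd": sgd(), "momentum": momentum(), "adam": adam()}
for name, opt in OPTIMIZERS.items():
    print(f"{name:>8}: final loss {loss(train(opt)):.4f}")
```

Each optimizer is a closure that receives the gradient and a per-run `state` dict, so swapping algorithms does not touch the model or the training loop; that separation is what makes a side-by-side comparison cheap.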

Use a neural network to approximate functions. The approximated functions are shown in the following figures; please refer to function-approximation for details.

Refer to karpathy or johnarevalo.
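As a minimal sketch of the idea, assuming a one-hidden-layer tanh network and a hypothetical target of sin(x) on [-π, π] (the functions actually used are only shown in the figures), the network can be fitted with full-batch gradient descent and hand-written backpropagation in plain Python. The hidden size, learning rate, and epoch count are illustrative assumptions, not values from function-approximation.

```python
import math
import random


def target(x):
    # Hypothetical target function to approximate.
    return math.sin(x)


def init(hidden=16, seed=0):
    rng = random.Random(seed)
    return {
        "w1": [rng.gauss(0.0, 1.0) for _ in range(hidden)],
        "b1": [rng.gauss(0.0, 1.0) for _ in range(hidden)],
        "w2": [rng.gauss(0.0, 1.0) / math.sqrt(hidden) for _ in range(hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    # One hidden tanh layer followed by a linear output unit.
    h = [math.tanh(w * x + b) for w, b in zip(net["w1"], net["b1"])]
    y = sum(w * hj for w, hj in zip(net["w2"], h)) + net["b2"]
    return h, y


def mse(net, xs):
    return sum((forward(net, x)[1] - target(x)) ** 2 for x in xs) / len(xs)


def train(net, xs, lr=0.02, epochs=1000):
    """Full-batch gradient descent with hand-written backpropagation."""
    n, hidden = len(xs), len(net["w1"])
    for _ in range(epochs):
        g_w1, g_b1, g_w2 = [0.0] * hidden, [0.0] * hidden, [0.0] * hidden
        g_b2 = 0.0
        for x in xs:
            h, y = forward(net, x)
            d = 2.0 * (y - target(x)) / n  # d(MSE)/dy for this sample
            g_b2 += d
            for j in range(hidden):
                g_w2[j] += d * h[j]
                dz = d * net["w2"][j] * (1.0 - h[j] ** 2)  # back through tanh
                g_w1[j] += dz * x
                g_b1[j] += dz
        # Apply the accumulated gradients only after the whole batch is seen.
        for j in range(hidden):
            net["w1"][j] -= lr * g_w1[j]
            net["b1"][j] -= lr * g_b1[j]
            net["w2"][j] -= lr * g_w2[j]
        net["b2"] -= lr * g_b2
    return net


xs = [-math.pi + 2.0 * math.pi * i / 29 for i in range(30)]
net = init()
before = mse(net, xs)
train(net, xs)
after = mse(net, xs)
print(f"MSE before training: {before:.4f}, after: {after:.4f}")
```

Replacing `target` with another scalar function is enough to try a different curve; a framework such as PyTorch would replace the manual gradient code with automatic differentiation.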

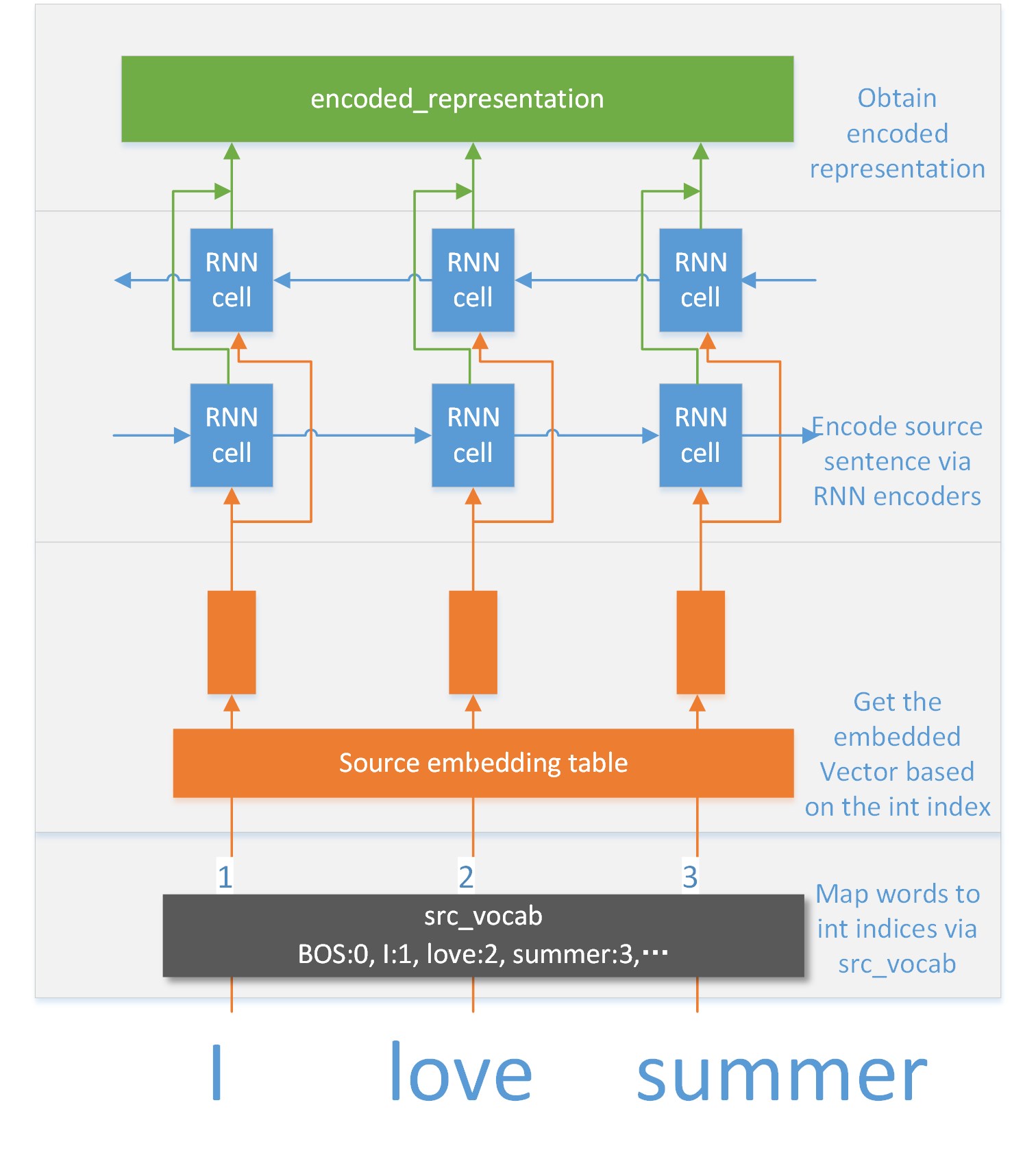

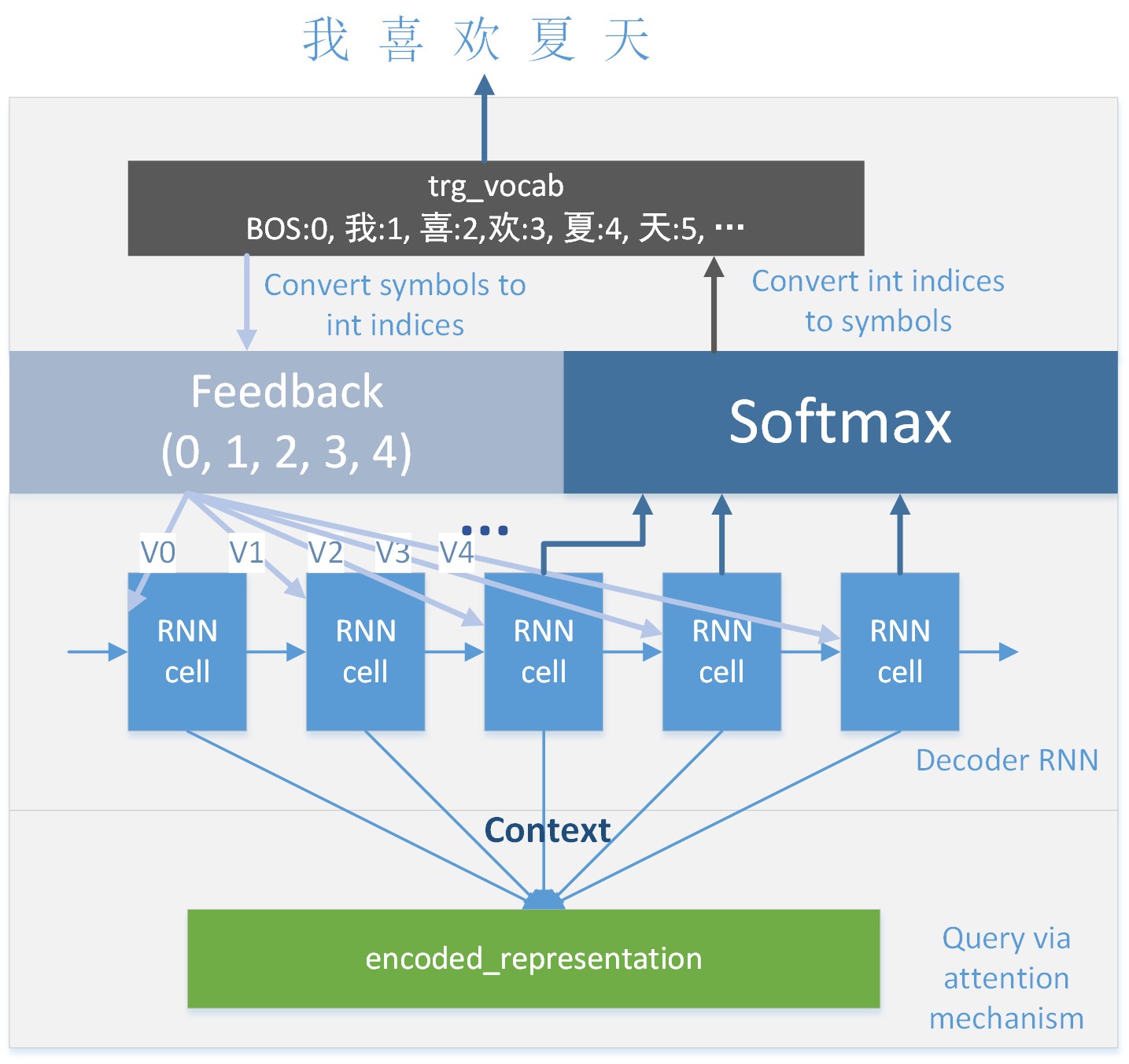

It is really hard to simplify because of the attention mechanism. We have tried our best to simplify the encoder-decoder architecture, as demonstrated in the following figures.

Please refer to Sequencing for more details.

Refer to Keras.
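To make the attention step concrete, here is a minimal sketch of a single decoder step in plain Python. It assumes dot-product (Luong-style) scoring; the Sequencing and Keras implementations referenced above may use a different score function, such as additive (Bahdanau-style) attention. The encoder and decoder states are hypothetical toy vectors.

```python
import math


def softmax(scores):
    # Subtract the max score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attend(decoder_state, encoder_states):
    """Return (weights, context) for one decoder step."""
    # Score each encoder state against the current decoder state.
    scores = [dot(decoder_state, h) for h in encoder_states]
    weights = softmax(scores)
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [sum(w * h[k] for w, h in zip(weights, encoder_states)) for k in range(dim)]
    return weights, context


encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = attend(decoder_state, encoder_states)
print(weights, context)
```

Each decoder step recomputes `weights` against all encoder states, which is one reason an attention model is harder to strip down than a plain encoder-decoder that passes a single fixed vector.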

A simple demonstration of Generative Adversarial Networks (GANs); it may be problematic.

Following the paper, we first use a GAN to generate a Gaussian distribution, shown in the left figure. We then try to generate digits from the MNIST dataset; however, we encounter "the Helvetica scenario", in which G collapses too many values of z to the same value of x. Nevertheless, it is a simple demonstration; please see the details.
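The phrase "the Helvetica scenario" comes from Goodfellow et al. (2014), "Generative Adversarial Nets", which we assume is the paper referred to here. For reference, that paper trains G and D on the minimax objective in the first line, and for a fixed G the optimal discriminator is given in the second:

```latex
\min_G \max_D V(D, G)
  = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_z(z)}\left[\log\left(1 - D(G(z))\right)\right]

D^{*}_{G}(x) = \frac{p_{\text{data}}(x)}{p_{\text{data}}(x) + p_g(x)}
```

The same paper notes that G must not be trained too much without updating D, or it can fall into the Helvetica scenario; keeping the D and G updates balanced is therefore one thing worth checking when the MNIST run collapses.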

Updating... Please help us implement these examples; we need simplified implementations. If you have other nice examples, please add them to this list.