The sinking of the RMS Titanic is one of the most infamous shipwrecks in history. On April 15, 1912, during her maiden voyage, the Titanic sank after colliding with an iceberg, killing 1502 out of 2224 passengers and crew. This sensational tragedy shocked the international community and led to better safety regulations for ships.

One of the reasons the shipwreck led to such loss of life was that there were not enough lifeboats for the passengers and crew. Although some element of luck was involved in surviving the sinking, some groups of people were more likely to survive than others, such as women, children, and the upper class.

In this challenge, we ask you to complete the analysis of what sorts of people were likely to survive. In particular, we ask you to apply the tools of machine learning to predict which passengers survived the tragedy.

Problem reference: https://www.kaggle.com/c/titanic

- numpy

- pandas

- matplotlib

- scikit-learn

- jupyter notebook

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import matplotlib

# Load the training dataset with labels

df = pd.read_csv("data/train.csv")

df.head(n=5)

| | PassengerId | Survived | Pclass | Name | Sex | Age | SibSp | Parch | Ticket | Fare | Cabin | Embarked |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 0 | 3 | Braund, Mr. Owen Harris | male | 22.0 | 1 | 0 | A/5 21171 | 7.2500 | NaN | S |
| 1 | 2 | 1 | 1 | Cumings, Mrs. John Bradley (Florence Briggs Th... | female | 38.0 | 1 | 0 | PC 17599 | 71.2833 | C85 | C |
| 2 | 3 | 1 | 3 | Heikkinen, Miss. Laina | female | 26.0 | 0 | 0 | STON/O2. 3101282 | 7.9250 | NaN | S |
| 3 | 4 | 1 | 1 | Futrelle, Mrs. Jacques Heath (Lily May Peel) | female | 35.0 | 1 | 0 | 113803 | 53.1000 | C123 | S |
| 4 | 5 | 0 | 3 | Allen, Mr. William Henry | male | 35.0 | 0 | 0 | 373450 | 8.0500 | NaN | S |

# Drop the identifier-like fields and the sparsely populated Cabin column, then drop rows with missing values

df_reduced = df.drop(['PassengerId','Name','Ticket','Cabin'],axis=1)

df_clean = df_reduced.dropna()

df_clean.head(n=5)

| | Survived | Pclass | Sex | Age | SibSp | Parch | Fare | Embarked |
|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 3 | male | 22.0 | 1 | 0 | 7.2500 | S |
| 1 | 1 | 1 | female | 38.0 | 1 | 0 | 71.2833 | C |
| 2 | 1 | 3 | female | 26.0 | 0 | 0 | 7.9250 | S |
| 3 | 1 | 1 | female | 35.0 | 1 | 0 | 53.1000 | S |
| 4 | 0 | 3 | male | 35.0 | 0 | 0 | 8.0500 | S |
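Before dropping rows it can help to see how many values are actually missing in each column. The sketch below uses a small made-up frame (`toy`, not the real `train.csv`) to compare the `dropna()` approach used here with a simple fill-in alternative. The median/mode imputation is an assumption for illustration, not something this notebook does.

```python
import numpy as np
import pandas as pd

# Toy frame standing in for the Titanic training data (values are illustrative).
toy = pd.DataFrame({
    "Survived": [0, 1, 1, 0],
    "Age": [22.0, np.nan, 26.0, np.nan],
    "Embarked": ["S", "C", None, "S"],
})

# Count missing values per column to see what dropna() will cost.
missing_per_column = toy.isna().sum()
print(missing_per_column)

# Option 1 (used in this notebook): drop every row with a missing value.
dropped = toy.dropna()

# Option 2 (an assumption, not the notebook's method): fill Age with the
# median and Embarked with the most frequent port, keeping all rows.
filled = toy.copy()
filled["Age"] = filled["Age"].fillna(filled["Age"].median())
filled["Embarked"] = filled["Embarked"].fillna(filled["Embarked"].mode()[0])

print(len(dropped), len(filled))
```

Dropping rows is simpler but shrinks the training set; filling values keeps every passenger at the cost of introducing guessed data.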

from sklearn.feature_extraction import DictVectorizer

# Feature extraction from the dataset

vec = DictVectorizer(sparse=False)

df_dict = df_clean.to_dict(orient = 'records')

df_dict_feature = vec.fit_transform(df_dict)

df_feature = vec.get_feature_names_out().tolist()  # use vec.get_feature_names() on scikit-learn < 1.0

print(df_feature)

df_dict_feature

['Age', 'Embarked=C', 'Embarked=Q', 'Embarked=S', 'Fare', 'Parch', 'Pclass', 'Sex=female', 'Sex=male', 'SibSp', 'Survived']

array([[ 22., 0., 0., ..., 1., 1., 0.],

[ 38., 1., 0., ..., 0., 1., 1.],

[ 26., 0., 0., ..., 0., 0., 1.],

...,

[ 19., 0., 0., ..., 0., 0., 1.],

[ 26., 1., 0., ..., 1., 0., 1.],

[ 32., 0., 1., ..., 1., 0., 0.]])

df_final = pd.DataFrame(df_dict_feature,columns=df_feature)

X = df_final.drop('Survived',axis=1)

y = df_final['Survived']

df_final.head(n=5)  # final prepared dataset with features and labels

| | Age | Embarked=C | Embarked=Q | Embarked=S | Fare | Parch | Pclass | Sex=female | Sex=male | SibSp | Survived |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 22.0 | 0.0 | 0.0 | 1.0 | 7.2500 | 0.0 | 3.0 | 0.0 | 1.0 | 1.0 | 0.0 |
| 1 | 38.0 | 1.0 | 0.0 | 0.0 | 71.2833 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 |
| 2 | 26.0 | 0.0 | 0.0 | 1.0 | 7.9250 | 0.0 | 3.0 | 1.0 | 0.0 | 0.0 | 1.0 |
| 3 | 35.0 | 0.0 | 0.0 | 1.0 | 53.1000 | 0.0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 |
| 4 | 35.0 | 0.0 | 0.0 | 1.0 | 8.0500 | 0.0 | 3.0 | 0.0 | 1.0 | 0.0 | 0.0 |

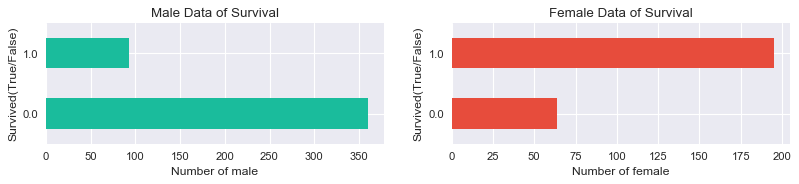

# Plot survival counts for male vs. female passengers

plt.figure(figsize=(12,2),dpi=80)

plt.subplot(1,2,1)

df_male=df_final[df_final['Sex=male']==1]

df_female=df_final[df_final['Sex=female']==1]

df_male['Survived'].value_counts().sort_index().plot(kind='barh',color='#1abc9c')

plt.xlabel('Number of male')

plt.ylabel('Survived(True/False)')

plt.title('Male Data of Survival')

plt.subplot(1,2,2)

df_female['Survived'].value_counts().sort_index().plot(kind='barh',color='#e74c3c')

plt.xlabel('Number of female')

plt.ylabel('Survived(True/False)')

plt.title('Female Data of Survival')

plt.show()

ratio_male = df_male['Survived'].sum()/len(df_male)

ratio_female = df_female['Survived'].sum()/len(df_female)

print('Ratio of male survival', ratio_male)

print('Ratio of female survival', ratio_female)

# As we see, females had a good chance of survival. If you are female then kudos! Sorry, boys.

Ratio of male survival 0.205298013245

Ratio of female survival 0.752895752896
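The same kind of ratio can be computed for any grouping in one step with `groupby`. This is a small sketch on made-up rows (the `toy` frame is illustrative, not the real data), assuming the column names `Sex`, `Pclass`, and `Survived` from the cleaned dataset.

```python
import pandas as pd

# Illustrative rows only; the real data comes from data/train.csv.
toy = pd.DataFrame({
    "Sex": ["male", "female", "female", "male", "male", "female"],
    "Pclass": [3, 1, 3, 1, 3, 2],
    "Survived": [0, 1, 1, 1, 0, 0],
})

# Mean of a 0/1 column is the survival rate within each group.
rate_by_sex = toy.groupby("Sex")["Survived"].mean()

# Grouping on two keys gives the rate for each (sex, class) combination.
rate_by_sex_class = toy.groupby(["Sex", "Pclass"])["Survived"].mean()

print(rate_by_sex)
print(rate_by_sex_class)
```

Applied to `df_clean`, the first call should reproduce the two ratios printed above, and the second extends the comparison to passenger class.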

from sklearn.model_selection import train_test_split

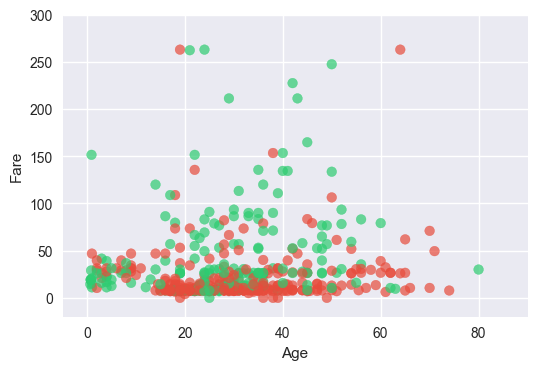

X_train,X_test,y_train,y_test = train_test_split(X,y,test_size=0.33,random_state=42)

plt.figure(figsize=(6,4),dpi=100)

colors= ['#e74c3c','#2ecc71']

label_name = set(y_train)

plt.scatter(X_train.Age,X_train.Fare,c=y_train,alpha=0.7,cmap=matplotlib.colors.ListedColormap(colors))

plt.xlim(-5,90)

plt.ylim(-20,300)

plt.xlabel('Age')

plt.ylabel('Fare')

plt.show()

# Using Random Forest Classifier

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

clf1 = RandomForestClassifier(n_estimators=15)

clf1.fit(X_train,y_train)

y_predict = clf1.predict(X_train)

print('Model accuracy_score: ', accuracy_score(y_train, y_predict))

y_test_predict = clf1.predict(X_test)

print('Test accuracy score', accuracy_score(y_test, y_test_predict))

Model accuracy_score: 0.985324947589

Test accuracy score 0.774468085106
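The gap between the training score (about 0.985) and the test score (about 0.774) suggests the forest is overfitting. One common way to get a steadier estimate is k-fold cross-validation. The sketch below runs it on synthetic data from `make_classification`; the `max_depth=4` limit is an assumed setting for illustration, not a tuned value from this notebook.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic stand-in for the Titanic features (assumption for the demo).
X_demo, y_demo = make_classification(n_samples=300, n_features=10, random_state=42)

# Limiting tree depth is one simple way to reduce overfitting.
forest = RandomForestClassifier(n_estimators=15, max_depth=4, random_state=42)

# 5-fold cross-validation: each fold is held out once for scoring.
scores = cross_val_score(forest, X_demo, y_demo, cv=5, scoring="accuracy")
print(scores.mean(), scores.std())
```

With the real data, `X` and `y` would replace `X_demo` and `y_demo`; the mean of `scores` is then a less optimistic estimate than accuracy on the training set.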

# Support vector machine for classification

from sklearn import svm

from sklearn.metrics import accuracy_score

clf2 = svm.SVC(gamma=0.00016,C=1000)

clf2.fit(X_train,y_train)

y_predict = clf2.predict(X_train)

print('Model accuracy_score: ', accuracy_score(y_train, y_predict))

y_test_predict = clf2.predict(X_test)

print('Test accuracy score', accuracy_score(y_test, y_test_predict))

Model accuracy_score: 0.834381551363

Test accuracy score 0.782978723404

y_test_main_predict = clf2.predict(X_test)

# Final classifier, trained on the full training set with the hyperparameters found during tuning

clf3 = svm.SVC(gamma=0.00016,C=1000)

clf3.fit(X,y)

y_trainCSV_predict = clf3.predict(X)

print('Model accuracy_score: ', accuracy_score(y, y_trainCSV_predict))

Model accuracy_score: 0.824438202247
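The tuning that produced `gamma` and `C` is not shown in this notebook. As a rough sketch of how such a search could be done, the example below runs scikit-learn's `GridSearchCV` over a small grid on synthetic data. The grid values and the synthetic dataset are assumptions for illustration, not the search actually used here.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# Synthetic stand-in for the prepared features and labels.
X_demo, y_demo = make_classification(n_samples=200, n_features=8, random_state=0)

# Hypothetical grid; a real search would cover a wider, log-spaced range.
param_grid = {"C": [1, 10, 100], "gamma": [1e-3, 1e-2, 1e-1]}

# Every (C, gamma) pair is scored with 3-fold cross-validation.
search = GridSearchCV(SVC(kernel="rbf"), param_grid, cv=3, scoring="accuracy")
search.fit(X_demo, y_demo)

print(search.best_params_, search.best_score_)
```

After fitting, `search.best_estimator_` is an `SVC` refit on all the supplied data with the best parameters, which plays the same role as `clf3` above.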

# Cleaning test data

df_test = pd.read_csv("data/test_corrected.csv")

df_reduced_test = df_test.drop(['Unnamed: 11','Unnamed: 12','Unnamed: 13','PassengerId','Name','Ticket','Cabin','Unnamed: 0'],axis=1)

df_clean_test = df_reduced_test.dropna()

vec_test = DictVectorizer(sparse=False)

df_dict_test = df_clean_test.to_dict(orient = 'records')

df_dict_feature_test = vec_test.fit_transform(df_dict_test)

df_feature_test = vec_test.get_feature_names_out().tolist()  # use get_feature_names() on scikit-learn < 1.0

df_final_test = pd.DataFrame(df_dict_feature_test,columns=df_feature_test)

df_final_test.head(n=5)

| | Age | Embarked=C | Embarked=Q | Embarked=S | Fare | Parch | Pclass | Sex=female | Sex=male | SibSp |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 34.5 | 0.0 | 1.0 | 0.0 | 7.8292 | 0.0 | 3.0 | 0.0 | 1.0 | 0.0 |
| 1 | 47.0 | 0.0 | 0.0 | 1.0 | 7.0000 | 0.0 | 3.0 | 1.0 | 0.0 | 1.0 |
| 2 | 62.0 | 0.0 | 1.0 | 0.0 | 9.6875 | 0.0 | 2.0 | 0.0 | 1.0 | 0.0 |
| 3 | 27.0 | 0.0 | 0.0 | 1.0 | 8.6625 | 0.0 | 3.0 | 0.0 | 1.0 | 0.0 |
| 4 | 22.0 | 0.0 | 0.0 | 1.0 | 12.2875 | 1.0 | 3.0 | 1.0 | 0.0 | 1.0 |
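Fitting a second `DictVectorizer` on the test data works here because the test set happens to contain the same categories as the training set. If a category were missing, the columns would no longer line up with what the classifier was trained on. A hedged sketch of the safer pattern, with made-up records and a hypothetical `shared_vec`, is to fit once on training records and only call `transform` on test records:

```python
from sklearn.feature_extraction import DictVectorizer

# Illustrative records; the test set deliberately lacks Embarked=C and Embarked=Q.
train_records = [
    {"Sex": "male", "Embarked": "S", "Age": 22.0},
    {"Sex": "female", "Embarked": "C", "Age": 38.0},
    {"Sex": "female", "Embarked": "Q", "Age": 26.0},
]
test_records = [
    {"Sex": "male", "Embarked": "S", "Age": 34.5},
    {"Sex": "female", "Embarked": "S", "Age": 47.0},
]

# Fit on training data only, then reuse the same column layout for test data.
shared_vec = DictVectorizer(sparse=False)
train_matrix = shared_vec.fit_transform(train_records)
test_matrix = shared_vec.transform(test_records)

# Refitting on the test data alone produces fewer columns.
refit_matrix = DictVectorizer(sparse=False).fit_transform(test_records)

print(train_matrix.shape, test_matrix.shape, refit_matrix.shape)
```

In this notebook that would mean reusing `vec` from the training step, after fitting it on records that do not include the `Survived` label.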

# After cleaning the test data we see that many Age values are missing. Let's write a model for predicting age.

y_test_predict_final = clf3.predict(df_final_test)

df_test['Survived'] = y_test_predict_final

df_result = df_test[['PassengerId','Survived']]

df_result.to_csv('data/result.csv')

On the Kaggle test data, this prediction strategy gives an accuracy of 76.56%.
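Kaggle's Titanic submission file is expected to contain just two columns, `PassengerId` and `Survived`, with integer labels. The sketch below shows one way to write such a file without the extra pandas index column. The IDs and predictions are made up, and an in-memory buffer stands in for `data/result.csv`.

```python
import io

import pandas as pd

# Made-up IDs and float predictions, similar to what an SVC fitted on float labels returns.
passenger_ids = pd.Series([892, 893, 894], name="PassengerId")
predictions = [0.0, 1.0, 0.0]

submission = pd.DataFrame({"PassengerId": passenger_ids, "Survived": predictions})

# Cast 0.0/1.0 to 0/1 so the file holds integer labels.
submission["Survived"] = submission["Survived"].astype(int)

# index=False keeps the row index out of the CSV.
buffer = io.StringIO()
submission.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)
```

Passing a file path instead of `buffer` writes the same content to disk.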