⚠️ Disclaimer: This project is experimental and not intended for use in production applications.

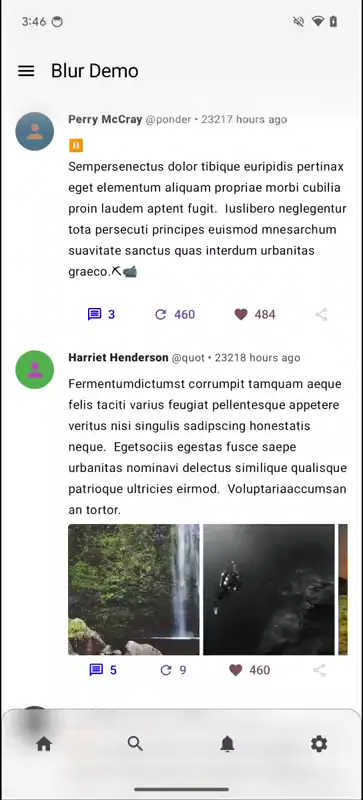

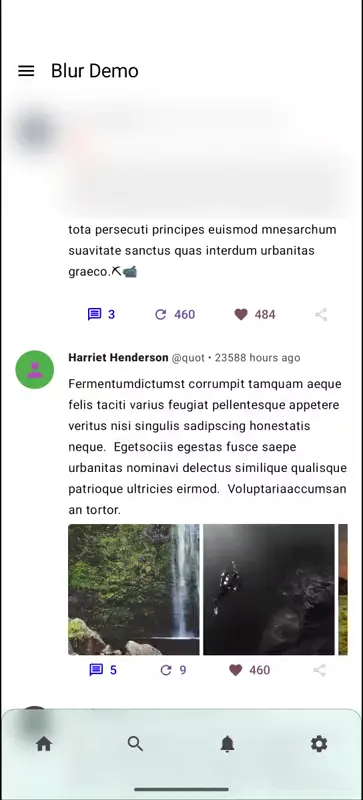

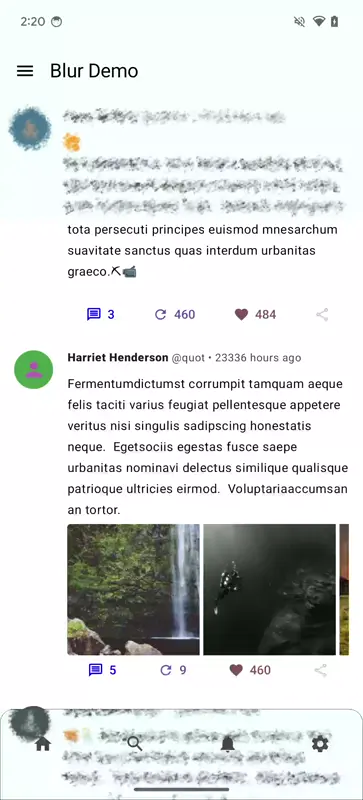

Imla (Ukrainian for "Haze", pronounced [ˈimlɑ] (eem-lah)) is an experimental project exploring GPU-accelerated view blurring on Android. It aims to implement efficient blurring effects using OpenGL, targeting devices from Android 6 (API 23) onwards.

The project serves as a playground for experimenting with GPU rendering and post-processing effects, with the potential to evolve into a full-fledged library in the future.

- Gamma-corrected blurring;

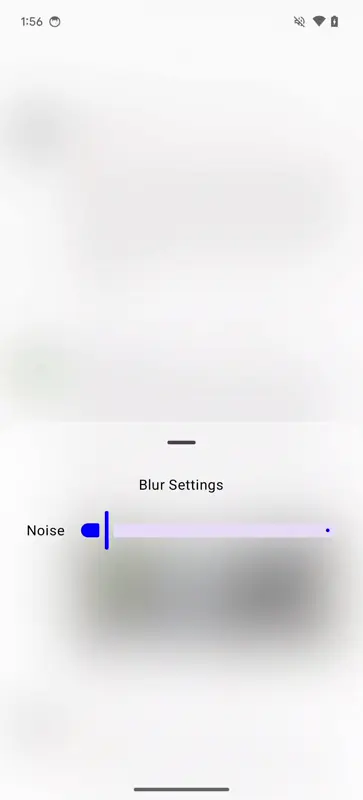

- Adjustable blur radius;

- Color tinting of blurred areas;

- Blending with a noise mask for a frosted glass effect;

- Setting blurring masks for gradient blur effects;

- Support for Android 6 (API 23) and later.
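The list above does not spell out how tinting, noise and masks combine with the blurred image. Below is a minimal per-pixel sketch. It assumes linear RGB floats in [0, 1], a tint color with its own alpha, a grayscale noise sample, and a mask value. The type `Rgb`, the function `composite`, and the blend order are hypothetical illustrations, not Imla's shader code.

```kotlin
/** Linear RGB color with components in [0, 1]. Hypothetical helper type. */
data class Rgb(val r: Float, val g: Float, val b: Float) {
    /** Linear interpolation towards [other]: t = 0 keeps this color, t = 1 gives [other]. */
    fun mix(other: Rgb, t: Float) = Rgb(
        r + (other.r - r) * t,
        g + (other.g - g) * t,
        b + (other.b - b) * t,
    )
}

/**
 * Hypothetical per-pixel composite: tint the blurred backdrop, overlay grain,
 * then use the mask to fade between the sharp and the processed backdrop.
 */
fun composite(
    sharp: Rgb,        // unblurred backdrop pixel
    blurred: Rgb,      // blurred backdrop pixel
    tint: Rgb,         // tint color
    tintAlpha: Float,  // 0 = no tint, 1 = solid tint color
    noise: Float,      // grayscale noise sample in [0, 1]
    noiseAlpha: Float, // grain strength, 0 = no grain
    mask: Float,       // 0 = keep the sharp pixel, 1 = fully processed pixel
): Rgb {
    val tinted = blurred.mix(tint, tintAlpha)
    val frosted = tinted.mix(Rgb(noise, noise, noise), noiseAlpha)
    return sharp.mix(frosted, mask.coerceIn(0f, 1f))
}
```

In a fragment shader each `mix` call corresponds to GLSL's built-in `mix(x, y, a)`. A mask that ramps from 0 to 1 across an element gives a simple gradient blur.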

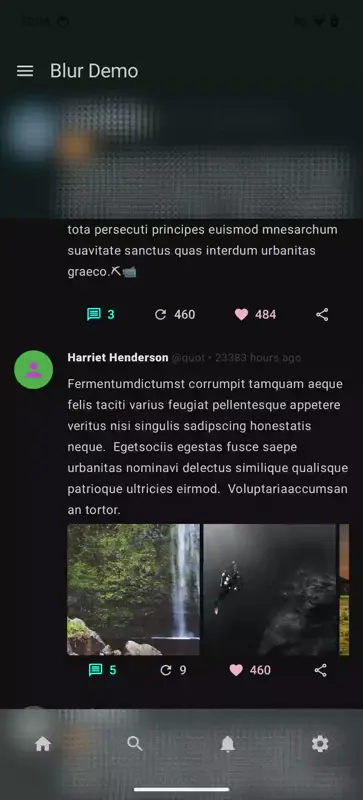

*Pixel 6*

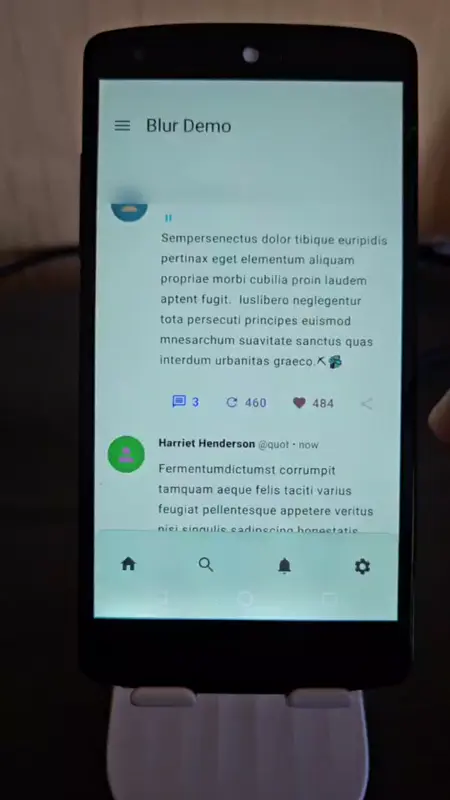

*Nexus 5*

Imla combines `GraphicsLayer` from Jetpack Compose with OpenGL ES 3.0 to achieve fast, GPU-accelerated blurring. The processing pipeline consists of several steps:

- A specified view is rendered as a background texture using `Surface` and `SurfaceTexture` (see `RenderableRootLayer.kt`).

- The rendered texture is copied to a post-processing framebuffer.

- The `BackdropBlur` composable wraps the child composables that need a blurred background.

- The blurred texture is rendered into a `SurfaceView` that serves as the background of the wrapped elements, creating the illusion of a blurred backdrop.
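A wrapped element usually covers only part of the root view, so its background has to show the matching sub-rectangle of the blurred texture. Here is a minimal sketch of that coordinate mapping. It assumes the texture covers the whole root view and that bounds are given in root-view pixels. `Bounds`, `UvRect` and `backdropUv` are hypothetical names, not Imla's API.

```kotlin
/** Rectangle in root-view pixel coordinates (top-left origin). Hypothetical type. */
data class Bounds(val left: Float, val top: Float, val right: Float, val bottom: Float)

/** Normalized texture coordinates: (u0, v0) = element's top-left, (u1, v1) = bottom-right. */
data class UvRect(val u0: Float, val v0: Float, val u1: Float, val v1: Float)

/**
 * Maps an element's bounds inside the root view to texture coordinates of a
 * blurred texture covering the whole root (rootWidth x rootHeight pixels).
 * Set flipY when the texture's v axis starts at the bottom (OpenGL convention).
 */
fun backdropUv(child: Bounds, rootWidth: Float, rootHeight: Float, flipY: Boolean = true): UvRect {
    val u0 = child.left / rootWidth
    val u1 = child.right / rootWidth
    val vTop = child.top / rootHeight
    val vBottom = child.bottom / rootHeight
    return if (flipY) {
        UvRect(u0, 1f - vTop, u1, 1f - vBottom)
    } else {
        UvRect(u0, vTop, u1, vBottom)
    }
}
```

The coordinates are normalized, so the same mapping keeps working after the background texture is down-sampled.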

The post-processing pipeline includes:

- Down-sampling the background texture (`RenderableRootLayer.kt`);

- Applying a two-pass blur with gamma correction (`BlurEffect`);

- Blending with a noise texture for a frosted glass effect (`NoiseEffect`);

- Optionally, applying a mask for progressive or gradient blur effects (`MaskEffect`).
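The following CPU sketch shows why down-sampling followed by a two-pass (separable) blur is cheap. It works on a single-channel image stored in a `FloatArray`. It assumes a 2x2 box down-sample and a Gaussian kernel with sigma = radius / 3. Both choices are hypothetical, and Imla performs the equivalent work on the GPU with its own kernel. A separable kernel replaces one pass of (2r + 1)² taps with two passes of 2r + 1 taps each. For r = 8 that is 34 taps per pixel instead of 289.

```kotlin
import kotlin.math.exp

/** Single-channel image, row-major. Hypothetical type for this sketch. */
class GrayImage(val width: Int, val height: Int, val px: FloatArray = FloatArray(width * height)) {
    operator fun get(x: Int, y: Int) = px[y * width + x]
    operator fun set(x: Int, y: Int, v: Float) { px[y * width + x] = v }
}

/** Halves each dimension by averaging 2x2 blocks; an odd trailing row/column is dropped. */
fun downsample2x(src: GrayImage): GrayImage {
    val dst = GrayImage(src.width / 2, src.height / 2)
    for (y in 0 until dst.height) for (x in 0 until dst.width) {
        dst[x, y] = (src[2 * x, 2 * y] + src[2 * x + 1, 2 * y] +
            src[2 * x, 2 * y + 1] + src[2 * x + 1, 2 * y + 1]) / 4f
    }
    return dst
}

/** Normalized 1D Gaussian weights for offsets -radius..radius, with sigma = radius / 3. */
fun gaussianKernel(radius: Int): FloatArray {
    require(radius >= 1) { "radius must be at least 1" }
    val sigma = radius / 3.0
    val w = FloatArray(2 * radius + 1) { i ->
        val d = (i - radius).toDouble()
        exp(-d * d / (2 * sigma * sigma)).toFloat()
    }
    val sum = w.sum()
    return FloatArray(w.size) { w[it] / sum }
}

/** One 1D pass in direction (dx, dy); out-of-range samples are clamped to the edge. */
fun blurPass(src: GrayImage, kernel: FloatArray, dx: Int, dy: Int): GrayImage {
    val r = kernel.size / 2
    val dst = GrayImage(src.width, src.height)
    for (y in 0 until src.height) for (x in 0 until src.width) {
        var acc = 0f
        for (k in -r..r) {
            val sx = (x + k * dx).coerceIn(0, src.width - 1)
            val sy = (y + k * dy).coerceIn(0, src.height - 1)
            acc += kernel[k + r] * src[sx, sy]
        }
        dst[x, y] = acc
    }
    return dst
}

/** Two-pass separable Gaussian blur: horizontal pass, then vertical pass. */
fun gaussianBlur(src: GrayImage, radius: Int): GrayImage {
    val kernel = gaussianKernel(radius)
    return blurPass(blurPass(src, kernel, 1, 0), kernel, 0, 1)
}
```

Blurring the down-sampled image also cuts the pixel count by 4x per halving, and a given radius covers twice as much of the original view.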

Importantly, all blur color processing is performed in the linear color space, with appropriate gamma decoding and encoding applied to ensure colors blend naturally, preserving vibrancy and contrast.
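The effect of blending in linear space is easy to check numerically. This sketch uses the standard sRGB transfer functions. Imla's shaders may use a different approximation, such as a plain 2.2 power curve, and the helper names here are illustrative.

```kotlin
import kotlin.math.pow

/** Decodes an sRGB-encoded value in [0, 1] to linear light (standard sRGB curve). */
fun srgbToLinear(c: Float): Float =
    if (c <= 0.04045f) c / 12.92f else ((c + 0.055f) / 1.055f).pow(2.4f)

/** Encodes linear light in [0, 1] back to an sRGB value. */
fun linearToSrgb(c: Float): Float =
    if (c <= 0.0031308f) c * 12.92f else 1.055f * c.pow(1f / 2.4f) - 0.055f

/** Averages sRGB-encoded samples either naively or by decoding, averaging, re-encoding. */
fun averageSrgb(samples: FloatArray, linear: Boolean): Float =
    if (linear) linearToSrgb(samples.map(::srgbToLinear).average().toFloat())
    else samples.average().toFloat()
```

Blurring a black/white edge naively gives an encoded 0.5, which displays darker than the physical midpoint. Averaging in linear space gives about 0.735 in sRGB, which avoids the dark fringes that naive blurs produce around bright areas.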

The project reuses the OpenGL abstractions from another experimental project, desugar-64/android-opengl-renderer. That repository is a playground for learning graphics and OpenGL, and it includes convenient abstractions for setting up OpenGL data structures and calling OpenGL functions.

The current implementation uses a fully dynamic renderer, which pushes vertex data each frame. While this approach offers flexibility, it introduces some performance overhead. Future iterations aim to optimize this aspect of the rendering pipeline.
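The geometry of a full-screen post-processing pass never changes. One possible optimization is therefore to build the vertex data once and upload it to a `GL_STATIC_DRAW` buffer instead of pushing it every frame. The sketch below covers the CPU side only. It assumes interleaved position/texture-coordinate data drawn as a triangle strip. The names are hypothetical, and the GL calls appear only in comments.

```kotlin
import java.nio.ByteBuffer
import java.nio.ByteOrder
import java.nio.FloatBuffer

// Interleaved (x, y, u, v) for a full-screen quad drawn as GL_TRIANGLE_STRIP.
val FULLSCREEN_QUAD = floatArrayOf(
    -1f, -1f, 0f, 0f, // bottom-left
    1f, -1f, 1f, 0f,  // bottom-right
    -1f, 1f, 0f, 1f,  // top-left
    1f, 1f, 1f, 1f,   // top-right
)

val FLOATS_PER_VERTEX = 4
val STRIDE_BYTES = FLOATS_PER_VERTEX * Float.SIZE_BYTES

/** Copies vertex data into a direct, native-order buffer, as GL upload calls expect. */
fun toDirectBuffer(data: FloatArray): FloatBuffer =
    ByteBuffer.allocateDirect(data.size * Float.SIZE_BYTES)
        .order(ByteOrder.nativeOrder())
        .asFloatBuffer()
        .apply { put(data); position(0) }

// Built once, e.g. when the GL context is created. On Android it could then be
// uploaded a single time with
// GLES30.glBufferData(GLES30.GL_ARRAY_BUFFER, FULLSCREEN_QUAD.size * Float.SIZE_BYTES,
//                     quadBuffer, GLES30.GL_STATIC_DRAW)
// after which each frame only binds the buffer and calls
// GLES30.glDrawArrays(GLES30.GL_TRIANGLE_STRIP, 0, 4).
val quadBuffer: FloatBuffer = toDirectBuffer(FULLSCREEN_QUAD)
```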

Current performance metrics for the blur effect on a Pixel 6 device:

- `BlurEffect#applyEffect`: ~1.19 ms

- `RenderObject#onRender`: ~4.842 ms

*Trace*

These timings indicate that the blur effect and rendering process are relatively fast, but there's still room for optimization.
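For scale, assume a 60 Hz display, which gives a frame budget of about 16.67 ms. Assume also, pessimistically, that the two measurements add up, since the figures above do not say whether `applyEffect` runs inside `onRender`:

$$
t_{\text{frame}} = \frac{1000\ \text{ms}}{60} \approx 16.67\ \text{ms},\qquad
\frac{1.19\ \text{ms} + 4.842\ \text{ms}}{16.67\ \text{ms}} \approx \frac{6.03}{16.67} \approx 36\%
$$

At 120 Hz the budget drops to about 8.33 ms, and the same work would take roughly 72% of it. The headroom therefore shrinks considerably on high-refresh-rate displays.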

- [ ] Implement a Dual Kawase blur filter for improved performance;
- [ ] Optimize the rendering pipeline and OpenGL abstractions;
- [ ] Address synchronization issues between the main thread and the OpenGL thread.
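A Dual Kawase filter blurs by repeatedly halving the image with a 5-tap down-sampling filter and then doubling it back with an 8-tap up-sampling filter. It relies on bilinear filtering so that each tap averages several texels. Below is a CPU sketch of that tap pattern on a single-channel image, under stated assumptions. Sampling is bilinear with clamp-to-edge. `offset` is a hypothetical spread parameter in source-texel units, since the exact offset convention varies between implementations. Image dimensions are assumed divisible by 2^iterations. This is not Imla's code, because the filter is not implemented yet.

```kotlin
import kotlin.math.floor

/** Single-channel image, row-major. Hypothetical type for this sketch. */
class GrayImage(val width: Int, val height: Int, val px: FloatArray = FloatArray(width * height)) {
    operator fun get(x: Int, y: Int) = px[y * width + x]
    operator fun set(x: Int, y: Int, v: Float) { px[y * width + x] = v }

    /** Bilinear sample at continuous coords (texel i spans [i, i + 1)), clamped to edges. */
    fun sample(x: Float, y: Float): Float {
        val fx = x - 0.5f
        val fy = y - 0.5f
        val x0 = floor(fx).toInt()
        val y0 = floor(fy).toInt()
        val tx = fx - x0
        val ty = fy - y0
        fun at(ix: Int, iy: Int) = this[ix.coerceIn(0, width - 1), iy.coerceIn(0, height - 1)]
        val top = at(x0, y0) * (1 - tx) + at(x0 + 1, y0) * tx
        val bottom = at(x0, y0 + 1) * (1 - tx) + at(x0 + 1, y0 + 1) * tx
        return top * (1 - ty) + bottom * ty
    }
}

/** Down-sampling step: center tap weighted 4 plus four diagonal taps, divided by 8. */
fun kawaseDown(src: GrayImage, offset: Float): GrayImage {
    val dst = GrayImage(src.width / 2, src.height / 2)
    for (y in 0 until dst.height) for (x in 0 until dst.width) {
        val cx = (x + 0.5f) * 2f // output pixel center in source coordinates
        val cy = (y + 0.5f) * 2f
        var sum = src.sample(cx, cy) * 4f
        sum += src.sample(cx - offset, cy - offset) + src.sample(cx + offset, cy - offset)
        sum += src.sample(cx - offset, cy + offset) + src.sample(cx + offset, cy + offset)
        dst[x, y] = sum / 8f
    }
    return dst
}

/** Up-sampling step: four axis taps (weight 1) and four diagonal taps (weight 2), divided by 12. */
fun kawaseUp(src: GrayImage, offset: Float): GrayImage {
    val dst = GrayImage(src.width * 2, src.height * 2)
    val o = offset
    for (y in 0 until dst.height) for (x in 0 until dst.width) {
        val cx = (x + 0.5f) / 2f // output pixel center in source coordinates
        val cy = (y + 0.5f) / 2f
        var sum = src.sample(cx - 2 * o, cy) + src.sample(cx + 2 * o, cy) +
            src.sample(cx, cy - 2 * o) + src.sample(cx, cy + 2 * o)
        sum += 2f * (src.sample(cx - o, cy - o) + src.sample(cx + o, cy - o) +
            src.sample(cx - o, cy + o) + src.sample(cx + o, cy + o))
        dst[x, y] = sum / 12f
    }
    return dst
}

/** Blurs with `iterations` down-sampling steps followed by as many up-sampling steps. */
fun dualKawaseBlur(src: GrayImage, iterations: Int, offset: Float = 1f): GrayImage {
    var img = src
    repeat(iterations) { img = kawaseDown(img, offset) }
    repeat(iterations) { img = kawaseUp(img, offset) }
    return img
}
```

The blur strength comes mainly from the number of iterations rather than from a wide kernel. That is why the approach is attractive on bandwidth-limited mobile GPUs.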

This project is open to suggestions and contributions. Feel free to open issues or submit pull requests on GitHub.

This project is licensed under the MIT License. See the LICENSE file for details.

For project development updates and history, refer to this Twitter thread.