Accepted at the Conference on Robot Learning (CoRL) 2021.

Harshit Sikchi, Wenxuan Zhou, David Held

- PyTorch 1.5

- OpenAI Gym

- MuJoCo

- tqdm

- D4RL dataset

- LOOP (Core method)

  - Training code (Online RL): train_loop_sac.py

  - Training code (Offline RL): train_loop_offline.py

  - Training code (safe RL): train_loop_safety.py

  - Policies (online/offline/safety): policies.py

  - ARC/H-step lookahead policy: controllers/

- Environments: envs/

- Configurations: configs/

- All experiments should be run from the root folder.

- Config files in configs/ are used to specify hyperparameters for controllers and dynamics.

- To reproduce the results in our paper, keep all other values in the yml files consistent with the hyperparameters given there.
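Because the scripts expect to be launched from the root folder, a quick check of the working directory can save a failed run. The sketch below is not part of the repository; the helper name `missing_entries` is hypothetical, and the list of entries is taken only from the files and folders named in this README.

```python
from pathlib import Path

# Entries named in this README's file list; other repository contents are ignored.
EXPECTED_ENTRIES = [
    "train_loop_sac.py",
    "train_loop_offline.py",
    "train_loop_safety.py",
    "policies.py",
    "controllers",
    "envs",
    "configs",
]


def missing_entries(root="."):
    """Return the expected files/folders that do not exist under `root`."""
    root = Path(root)
    return [name for name in EXPECTED_ENTRIES if not (root / name).exists()]


if __name__ == "__main__":
    missing = missing_entries()
    if missing:
        print("Not in the repository root? Missing:", ", ".join(missing))
    else:
        print("Repository root looks correct.")
```

An empty list from `missing_entries()` suggests the current directory is the root folder; it does not validate the contents of the config files.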

python train_loop_sac.py --env=<env_name> --policy=LOOP_SAC_ARC --start_timesteps=<initial exploration steps> --exp_name=<location_to_logs>

Environment wrappers with their termination conditions can be found under envs/

Download CRR trained models from Link into the root folder.

python train_loop_offline.py --env=<env_name> --policy=LOOP_OFFLINE_ARC --exp_name=<location_to_logs> --offline_algo=CRR --prior_type=CRR

Currently supported for D4RL MuJoCo locomotion tasks only.

python train_loop_safety.py --env=<env_name> --policy=safeLOOP_ARC --exp_name=<location_to_logs>

Safety environments can be found under envs/safety_envs.py
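The three launch commands above differ only in the script, the policy name, and a few extra flags, so they can be assembled programmatically when scripting several runs. The following is a minimal sketch, not code from the repository: `MODES` and `build_command` are hypothetical names, and only the flags that appear in the commands above are used.

```python
import shlex

# Script and --policy value for each mode, copied from the commands in this README.
MODES = {
    "online": ("train_loop_sac.py", "LOOP_SAC_ARC"),
    "offline": ("train_loop_offline.py", "LOOP_OFFLINE_ARC"),
    "safety": ("train_loop_safety.py", "safeLOOP_ARC"),
}


def build_command(mode, env_name, exp_name, start_timesteps=None):
    """Build the argument list for one training run (hypothetical helper)."""
    script, policy = MODES[mode]
    cmd = [
        "python",
        script,
        f"--env={env_name}",
        f"--policy={policy}",
        f"--exp_name={exp_name}",
    ]
    # --start_timesteps appears only in the online command above.
    if mode == "online" and start_timesteps is not None:
        cmd.append(f"--start_timesteps={start_timesteps}")
    # The offline command above fixes both the offline algorithm and the prior to CRR.
    if mode == "offline":
        cmd += ["--offline_algo=CRR", "--prior_type=CRR"]
    return cmd


if __name__ == "__main__":
    # Placeholders are kept as-is; 1000 is an arbitrary illustrative value.
    cmd = build_command("online", "<env_name>", "<location_to_logs>", start_timesteps=1000)
    print(shlex.join(cmd))
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from the root folder once the placeholders are replaced with a real environment name and log location.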

If you find this work useful, please use the following citation:

@inproceedings{sikchi2022learning,

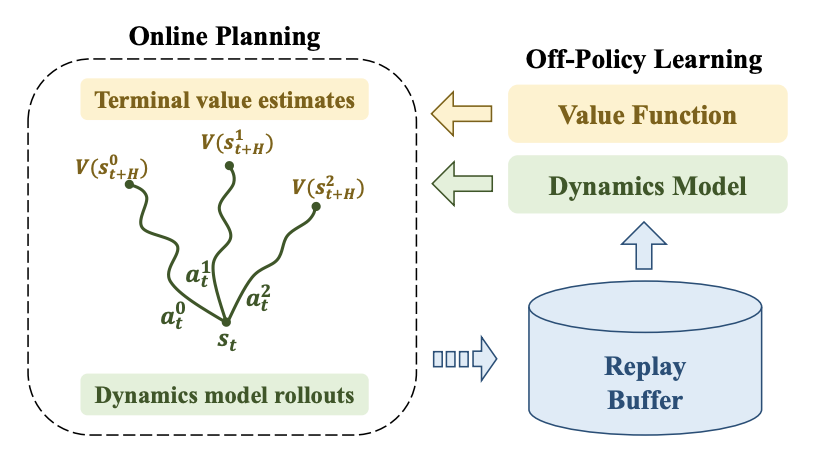

title={Learning off-policy with online planning},

author={Sikchi, Harshit and Zhou, Wenxuan and Held, David},

booktitle={Conference on Robot Learning},

pages={1622--1633},

year={2022},

organization={PMLR}

}