runtime: CPU instruction "NOP" utilizes ~50% CPU and pprof doesn't explain that

xaionaro opened this issue · 6 comments

What version of Go are you using (go version)?

$ go version go version go1.12 linux/amd64

Does this issue reproduce with the latest release?

Yes.

What operating system and processor architecture are you using (go env)?

go env Output

$ go env GOARCH="amd64" GOBIN="" GOCACHE="/home/xaionaro/.cache/go-build" GOEXE="" GOFLAGS="" GOHOSTARCH="amd64" GOHOSTOS="linux" GOOS="linux" GOPATH="/home/xaionaro/go" GOPROXY="" GORACE="" GOROOT="/home/xaionaro/.gimme/versions/go1.12.linux.amd64" GOTMPDIR="" GOTOOLDIR="/home/xaionaro/.gimme/versions/go1.12.linux.amd64/pkg/tool/linux_amd64" GCCGO="gccgo" CC="gcc" CXX="g++" CGO_ENABLED="1" GOMOD="" CGO_CFLAGS="-g -O2" CGO_CPPFLAGS="" CGO_CXXFLAGS="-g -O2" CGO_FFLAGS="-g -O2" CGO_LDFLAGS="-g -O2" PKG_CONFIG="pkg-config" GOGCCFLAGS="-fPIC -m64 -pthread -fmessage-length=0 -fdebug-prefix-map=/tmp/go-build465181040=/tmp/go-build -gno-record-gcc-switches"

What did you do?

go get github.com/xaionaro-go/atomicmap

cd "$(go env GOPATH)"/src/github.com/xaionaro-go/atomicmap

git pull # just in case

git checkout performance_experiments

go get ./...

go test ./ -bench=Benchmark_atomicmap_Get_intKeyType_blockSize16777216_keyAmount1048576_trueThreadSafety -benchmem -benchtime 5s -timeout 60s -cpuprofile /tmp/cpu.prof

go tool pprof /tmp/cpu.prof

(pprof) web

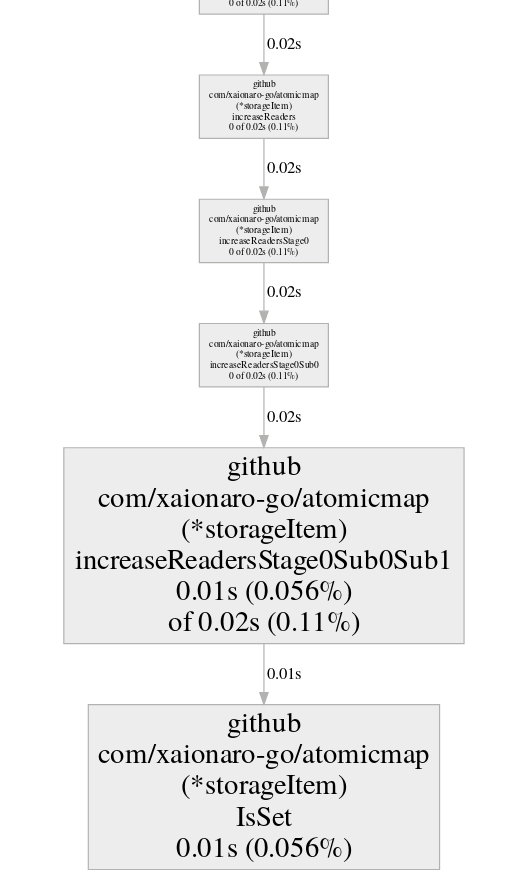

(pprof) web increaseReadersStage0Sub0

(pprof) web increaseReadersStage0Sub0Sub0

(pprof) web increaseReadersStage0Sub0Sub1

What did you expect to see?

I expect to see any information about what is utilizing CPU

What did you see instead?

I see an empty function (that doesn't do anything by itself) which utilizes ~50% CPU. Or to be more specific instruction "NOPL" utilizes the CPU. It doesn't make any sense.

The method:

func (slot *storageItem) increaseReadersStage0Sub0() isSet {

slot.increaseReadersStage0Sub0Sub0()

return slot.increaseReadersStage0Sub0Sub1()

}

According to pprof (see screenshots) both of this sub-calls doesn't utilizes anything essential. But the method utilizes about 50% of CPU.

I separated this functions this way intentionally to demonstrate the problem. The problem exists if I remove this extra calling levels (even if I manually inline that code to method getByHashValue), too.

. . 107:func (slot *storageItem) increaseReadersStage0Sub0() isSet {

. 30ms 108: slot.increaseReadersStage0Sub0Sub0()

9.05s 9.10s 109: return slot.increaseReadersStage0Sub0Sub1()

. . 110:}

Or another try (I separated the return line on two and removed type isSet) -- the same result:

. . 107:func (slot *storageItem) increaseReadersStage0Sub0() uint32 {

. 10ms 108: slot.increaseReadersStage0Sub0Sub0()

8.16s 8.89s 109: r := slot.increaseReadersStage0Sub0Sub1()

. . 110: return r

. . 111:}

disasm

ROUTINE ======================== github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders

4.61s 14.29s (flat, cum) 191.04% of Total

. . 4bb370: MOVQ FS:0xfffffff8, CX ;storage.go:146

10ms 10ms 4bb379: CMPQ 0x10(CX), SP ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:146

. . 4bb37d: JBE 0x4bb3ef ;storage.go:146

. . 4bb37f: SUBQ $0x18, SP

. . 4bb383: MOVQ BP, 0x10(SP)

. . 4bb388: LEAQ 0x10(SP), BP

10ms 4.59s 4bb38d: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:147

. 4.58s 4bb38e: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0 storage.go:113

. 10ms 4bb38f: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0Sub0 storage.go:108

. . 4bb390: MOVL $0x1, AX ;storage.go:147

10ms 10ms 4bb395: MOVQ 0x20(SP), CX ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0Sub0Sub0 storage.go:102

. . 4bb39a: LOCK XADDL AX, 0x4(CX) ;storage.go:102

4.07s 4.57s 4bb39f: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0Sub0 storage.go:109

490ms 500ms 4bb3a0: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0Sub0Sub1 storage.go:105

10ms 10ms 4bb3a1: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).IsSet storage.go:41

. . 4bb3a2: MOVL 0(CX), AX ;storage.go:21

. . 4bb3a4: TESTL AX, AX ;storage.go:117

. . 4bb3a6: JNE 0x4bb3d4

. . 4bb3a8: MOVL $-0x1, DX ;storage.go:118

. . 4bb3ad: LOCK XADDL DX, 0x4(CX)

. . 4bb3b2: CMPL $0xa, AX ;storage.go:148

. . 4bb3b5: JE 0x4bb3c5

10ms 10ms 4bb3b7: MOVL AX, 0x28(SP) ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:151

. . 4bb3bb: MOVQ 0x10(SP), BP ;storage.go:151

. . 4bb3c0: ADDQ $0x18, SP

. . 4bb3c4: RET

. . 4bb3c5: MOVQ CX, 0(SP) ;storage.go:149

. . 4bb3c9: CALL github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage1(SB)

. . 4bb3ce: MOVL 0x8(SP), AX

. . 4bb3d2: JMP 0x4bb3b7

. . 4bb3d4: CMPL $0x1, AX ;storage.go:115

. . 4bb3d7: JE 0x4bb3b2

. . 4bb3d9: CMPL $0x4, AX ;storage.go:117

. . 4bb3dc: JE 0x4bb3a8

. . 4bb3de: MOVL $-0x1, DX ;storage.go:121

. . 4bb3e3: LOCK XADDL DX, 0x4(CX)

. . 4bb3e8: MOVL $0xa, AX

. . 4bb3ed: JMP 0x4bb3b2 ;storage.go:147

. . 4bb3ef: CALL runtime.morestack_noctxt(SB) ;storage.go:146

. . 4bb3f4: ?

. . 4bb3f5: JA 0x4bb3f6

. . 4bb3f7: ?

Just in case:

The same problem if I remove LOCK XADDL:

ROUTINE ======================== github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders

4.48s 13.27s (flat, cum) 175.99% of Total

. . 4bb370: MOVQ FS:0xfffffff8, CX ;storage.go:147

10ms 10ms 4bb379: CMPQ 0x10(CX), SP ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:147

. . 4bb37d: JBE 0x4bb3e8 ;storage.go:147

. . 4bb37f: SUBQ $0x18, SP

. . 4bb383: MOVQ BP, 0x10(SP)

10ms 10ms 4bb388: LEAQ 0x10(SP), BP ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:147

20ms 4.42s 4bb38d: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:148

. 4.39s 4bb38e: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0 storage.go:114

. . 4bb38f: NOPL ;storage.go:109

. . 4bb390: MOVQ 0x20(SP), AX ;storage.go:148

. . 4bb395: INCL 0x4(AX) ;storage.go:102

4.39s 4.39s 4bb398: NOPL ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0Sub0 storage.go:110

. . 4bb399: NOPL ;storage.go:106

. . 4bb39a: NOPL ;storage.go:41

. . 4bb39b: MOVL 0(AX), CX ;storage.go:21

10ms 10ms 4bb39d: TESTL CX, CX ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage0 storage.go:118

. . 4bb39f: JNE 0x4bb3cd ;storage.go:118

. . 4bb3a1: MOVL $-0x1, DX ;storage.go:119

. . 4bb3a6: LOCK XADDL DX, 0x4(AX)

. . 4bb3ab: CMPL $0xa, CX ;storage.go:149

. . 4bb3ae: JE 0x4bb3be

. . 4bb3b0: MOVL CX, 0x28(SP) ;storage.go:152

. . 4bb3b4: MOVQ 0x10(SP), BP

. . 4bb3b9: ADDQ $0x18, SP

40ms 40ms 4bb3bd: RET ;github.com/xaionaro-go/atomicmap.(*storageItem).increaseReaders storage.go:152

. . 4bb3be: MOVQ AX, 0(SP) ;storage.go:150

. . 4bb3c2: CALL github.com/xaionaro-go/atomicmap.(*storageItem).increaseReadersStage1(SB)

. . 4bb3c7: MOVL 0x8(SP), CX

. . 4bb3cb: JMP 0x4bb3b0

. . 4bb3cd: CMPL $0x1, CX ;storage.go:116

. . 4bb3d0: JE 0x4bb3ab

. . 4bb3d2: CMPL $0x4, CX ;storage.go:118

. . 4bb3d5: JE 0x4bb3a1

. . 4bb3d7: MOVL $-0x1, DX ;storage.go:122

. . 4bb3dc: LOCK XADDL DX, 0x4(AX)

. . 4bb3e1: MOVL $0xa, CX

. . 4bb3e6: JMP 0x4bb3ab ;storage.go:148

. . 4bb3e8: CALL runtime.morestack_noctxt(SB) ;storage.go:147

. . 4bb3ed: ?

I have a similar situation in os.openFileNolog where is shows 1.92s in a NOPL.

The same function also shows a different cumulative time in disasm mode than the normal list mode. (a 1.94 seconds difference, which is the same as all the combined NOPLs)

`

ROUTINE ======================== os.openFileNolog

40ms 5.03s (flat, cum) 13.38% of Total

. . 4c7f70: MOVQ FS:0xfffffff8, CX ;file_unix.go:190

10ms 10ms 4c7f79: CMPQ 0x10(CX), SP ;os.openFileNolog file_unix.go:190

. . 4c7f7d: JBE 0x4c814b ;file_unix.go:190

. . 4c7f83: SUBQ $0x58, SP

. . 4c7f87: MOVQ BP, 0x50(SP)

. . 4c7f8c: LEAQ 0x50(SP), BP

. 1.92s 4c7f91: NOPL ;os.openFileNolog file_unix.go:201

. . 4c7f92: NOPL ;file_unix.go:201

. . 4c7f93: NOPL ;file_posix.go:38

. . 4c7f94: MOVQ $-0x64, 0(SP) ;file_unix.go:201

. . 4c7f9c: MOVQ 0x60(SP), AX ;syscall_linux.go:138

. . 4c7fa1: MOVQ AX, 0x8(SP)

. . 4c7fa6: MOVQ 0x68(SP), CX

. . 4c7fab: MOVQ CX, 0x10(SP)

. . 4c7fb0: MOVQ 0x70(SP), DX ;file_unix.go:201

. . 4c7fb5: BTSQ $0x13, DX

. . 4c7fba: MOVQ DX, 0x18(SP) ;syscall_linux.go:138

. . 4c7fbf: MOVL 0x78(SP), DX ;types.go:105

. . 4c7fc3: MOVL DX, BX

. . 4c7fc5: ANDL $0x1ff, DX

. . 4c7fcb: MOVL DX, SI ;file_posix.go:40

. . 4c7fcd: BTSL $0xb, DX

;file_posix.go:39

. . 4c7fd1: TESTL $gitlab.com/golang-commonmark/linkify.match+24016(SB), BX

. . 4c7fd7: CMOVNE DX, SI ;file_unix.go:201

. . 4c7fda: MOVL SI, DX ;file_posix.go:43

. . 4c7fdc: BTSL $0xa, SI

. . 4c7fe0: TESTL $0x400000, BX ;file_posix.go:42

. . 4c7fe6: CMOVNE SI, DX ;file_unix.go:201

. . 4c7fe9: MOVL DX, SI ;file_posix.go:46

. . 4c7feb: BTSL $0x9, DX

. . 4c7fef: TESTL $0x100000, BX ;file_posix.go:45

. . 4c7ff5: CMOVNE DX, SI ;file_unix.go:201

. . 4c7ff8: MOVL SI, 0x20(SP) ;syscall_linux.go:138

. 1.92s 4c7ffc: CALL syscall.openat(SB) ;syscall.Open syscall_linux.go:138

. . 4c8001: MOVQ 0x38(SP), AX ;file_unix.go:201

10ms 10ms 4c8006: MOVQ 0x30(SP), CX ;os.openFileNolog file_unix.go:201

. . 4c800b: MOVQ 0x28(SP), DX ;file_unix.go:201

. . 4c8010: TESTQ CX, CX ;file_unix.go:202

. . 4c8013: JE 0x4c8103 ;file_posix.go:39

. . 4c8019: MOVQ AX, 0x48(SP) ;file_unix.go:201

. . 4c801e: MOVQ CX, 0x40(SP)

. . 4c8023: LEAQ 0x4a3eb6(IP), AX ;file_unix.go:213

. . 4c802a: MOVQ AX, 0(SP)

. . 4c802e: CALL runtime.newobject(SB)

. . 4c8033: MOVQ 0x8(SP), DI

. . 4c8038: MOVQ $0x4, 0x8(DI)

. . 4c8040: CMPL $0x0, runtime.writeBarrier(SB)

. . 4c8047: JNE 0x4c80f2

. . 4c804d: LEAQ 0x4f8509(IP), CX

. . 4c8054: MOVQ CX, 0(DI)

. . 4c8057: MOVQ 0x68(SP), CX

. . 4c805c: MOVQ CX, 0x18(DI)

. . 4c8060: CMPL $0x0, runtime.writeBarrier(SB)

. . 4c8067: JNE 0x4c80d6

. . 4c8069: MOVQ 0x60(SP), CX

. . 4c806e: MOVQ CX, 0x10(DI)

. . 4c8072: MOVQ 0x40(SP), CX

. . 4c8077: MOVQ CX, 0x20(DI)

. . 4c807b: CMPL $0x0, runtime.writeBarrier(SB)

. . 4c8082: JNE 0x4c80ba

. . 4c8084: MOVQ 0x48(SP), AX

. . 4c8089: MOVQ AX, 0x28(DI)

. . 4c808d: MOVQ $0x0, 0x80(SP)

. . 4c8099: LEAQ go.itab.*os.PathError,error(SB), AX

. . 4c80a0: MOVQ AX, 0x88(SP)

. . 4c80a8: MOVQ DI, 0x90(SP)

. . 4c80b0: MOVQ 0x50(SP), BP

. . 4c80b5: ADDQ $0x58, SP

. . 4c80b9: RET

. . 4c80ba: LEAQ 0x28(DI), CX

. . 4c80be: MOVQ DI, AX

. . 4c80c1: MOVQ CX, DI

. . 4c80c4: MOVQ AX, DX

. . 4c80c7: MOVQ 0x48(SP), AX

. . 4c80cc: CALL runtime.gcWriteBarrier(SB)

. . 4c80d1: MOVQ DX, DI

. . 4c80d4: JMP 0x4c808d

. . 4c80d6: LEAQ 0x10(DI), CX

. . 4c80da: MOVQ DI, AX

. . 4c80dd: MOVQ CX, DI

. . 4c80e0: MOVQ AX, DX

. . 4c80e3: MOVQ 0x60(SP), AX

. . 4c80e8: CALL runtime.gcWriteBarrier(SB)

. . 4c80ed: MOVQ DX, DI

. . 4c80f0: JMP 0x4c8072

. . 4c80f2: LEAQ 0x4f8464(IP), AX

. . 4c80f9: CALL runtime.gcWriteBarrier(SB)

. . 4c80fe: JMP 0x4c8057

10ms 10ms 4c8103: MOVQ DX, 0(SP) ;os.openFileNolog file_unix.go:227

. . 4c8107: MOVQ 0x60(SP), AX ;file_unix.go:227

. . 4c810c: MOVQ AX, 0x8(SP)

. . 4c8111: MOVQ 0x68(SP), AX

. . 4c8116: MOVQ AX, 0x10(SP)

. . 4c811b: MOVQ $0x1, 0x18(SP)

. 1.15s 4c8124: CALL os.newFile(SB) ;os.openFileNolog file_unix.go:227

. . 4c8129: MOVQ 0x20(SP), AX ;file_unix.go:227

. . 4c812e: MOVQ AX, 0x80(SP)

10ms 10ms 4c8136: XORPS X0, X0 ;os.openFileNolog file_unix.go:227

. . 4c8139: MOVUPS X0, 0x88(SP) ;file_unix.go:227

. . 4c8141: MOVQ 0x50(SP), BP

. . 4c8146: ADDQ $0x58, SP

. . 4c814a: RET

. . 4c814b: CALL runtime.morestack_noctxt(SB) ;file_unix.go:190

. . 4c8150: ?

. . 4c8151: SBBL SI, DI

. . 4c8153: ?

`

EDIT: github formatting issues???

Speculation: those nops are inlining marks, the subroutine is growing the stack (slow) and the newstack call attribution isn’t working in conjunction with the inlining marks.

Due it its use of ITIMER_PROF, pprof results have a skid of up to several instructions. We cannot correct the skid in Go because the amount of skid is not deterministic. We could perhaps document the limitation better.

In #30708 (comment), the usage is almost certainly actually on the LOCK XADD. When #30708 (comment) removed the atomic, the usage is still almost certainly on the INCL. Despite not being locked, the x86 memory model is pretty strong, so the cross-core contention on the counter is likely slowing down the operation significantly.