骆驼 (Luotuo) is the Chinese word for camel; "Luotuo" is its pinyin romanization.

An instruction-finetuned Chinese LLaMA. Developed by 冷子昂 @ 商汤科技 (SenseTime), 陈启源 @ 华中师范大学 (Central China Normal University; third-year undergraduate student), and 李鲁鲁 @ 商汤科技 (SenseTime).

(email: chengli@sensetime.com, zaleng@bu.edu, chenqiyuan1012@foxmail.com)

This is NOT an official product of SenseTime

This project makes only slight changes to Japanese-Alpaca-LoRA.

We named the project after the camel because both the llama and the alpaca belong to the family Camelidae in the order Artiodactyla (偶蹄目-骆驼科).

We just released the first version, the luotuo-lora-7b-0.1 model! Try it in the quick start.

| Model Name | Training Data and Setting |
|---|---|
| luotuo-lora-7b-0.1 | Trained on translated Alpaca 52k data |
| luotuo-lora-7b-0.3 | (Planned) cleaned Alpaca 52k + 10% Guanaco |
| luotuo-lora-7b-0.9 | (Planned) cleaned Alpaca 52k + full Guanaco |
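The planned 0.3 and 0.9 settings combine the Alpaca data with a fraction of Guanaco. The sketch below shows one way such a mix could be built. It is only an illustration: the function name `mix_datasets`, the uniform random sampling, and the fixed seed are assumptions, since the table does not say how the 10% subset will be chosen.

```python
import random
from typing import Dict, List

Record = Dict[str, str]


def mix_datasets(
    base: List[Record],
    extra: List[Record],
    extra_fraction: float,
    seed: int = 0,
) -> List[Record]:
    """Return all of `base` plus a random fraction of `extra`, shuffled.

    Assumption: the fraction is drawn uniformly at random without replacement.
    """
    if not 0.0 <= extra_fraction <= 1.0:
        raise ValueError("extra_fraction must be between 0 and 1")
    rng = random.Random(seed)  # fixed seed so the mix is reproducible
    sample_size = round(len(extra) * extra_fraction)
    sampled = rng.sample(extra, sample_size)
    mixed = list(base) + sampled
    rng.shuffle(mixed)
    return mixed


if __name__ == "__main__":
    # Tiny stand-in datasets; the real files are much larger.
    alpaca = [{"instruction": f"a{i}", "input": "", "output": ""} for i in range(52)]
    guanaco = [{"instruction": f"g{i}", "input": "", "output": ""} for i in range(100)]
    print(len(mix_datasets(alpaca, guanaco, 0.1)))  # 0.3-style mix
    print(len(mix_datasets(alpaca, guanaco, 1.0)))  # 0.9-style mix
```

Setting `extra_fraction=1.0` corresponds to the "full Guanaco" row, so one helper could cover both planned settings.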

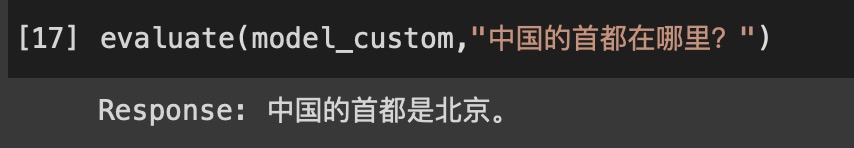

The evaluation code is now in notebook/evaluation_code.ipynb.

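Input: **的首都在哪里? (English: Where is the capital of \*\*?)

Luotuo-Output: **的首都是北京。 (English: The capital of \*\* is Beijing.)

Input: 麦当劳是哪个国家的? (English: Which country is McDonald's from?)

Luotuo-Output: 美国。 (English: The United States.)

Input: 2017年美国总统是谁 (English: Who was the US president in 2017?)

Luotuo-Output: 2017年美国总统是特朗普 (English: The US president in 2017 was Trump.)

Input: 商汤科技是做什么的? (English: What does SenseTime do?)

Luotuo-Output: 商汤科技是一种技术,它用于创建互联网应用程序和服务。 (English: SenseTime is a technology used to create internet applications and services.)

Input: 猫和狗的关系是什么 (English: What is the relationship between cats and dogs?)

Luotuo-Output: 猫和狗之间的关系是友好的。它们通常在一起休息或玩耍。猫和狗之间的关系通常很好,它们通常在一起休息或玩耍。猫和狗之间的关系通常很好,它们通常在一起休息或玩耍。猫和狗之间的关系通常很好,它们通常在一起休息或玩耍。猫和狗之间的关系通常很好,它们通常在一起休息或玩耍。猫和狗之间的关系通常很好,它们通常在一起休息或玩耍。猫和狗之间的关系通常 (English: The relationship between cats and dogs is friendly. They usually rest or play together. The output then repeats "The relationship between cats and dogs is usually good; they usually rest or play together" until it is cut off mid-sentence.)

Input: 华中师范大学在哪里? (English: Where is Central China Normal University?)

Luotuo-Output: 华中师范大学位于北京 (English: Central China Normal University is located in Beijing.)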

We have tuned a Chinese LLaMA model based on LLaMA, Stanford Alpaca, Alpaca LoRA, Cabrita, and Japanese-Alpaca-LoRA.

The training code is still being cleaned up. If you are in a hurry, check the Japanese-Alpaca-LoRA project and simply change the name of the JSON training data file.

This project is still under construction.

A. The 0.1 version of the model was trained on translated data: we translated alpaca_data.json into Chinese using the ChatGPT API. We paid around US $30-45 to translate the full dataset. The translated data is available (trans_chinese_alpaca_data.json).

B. We also plan to consider the data in Guanaco, hikariming's alpaca_chinese_dataset, and carbonz0's alpaca-chinese-dataset, and may incorporate it into a later version.

We plan to upload two different models, A and B, because the providers of the B data claim that cleaned data brings a significant improvement.
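The translation step described in A can be sketched as follows. This is a minimal illustration and not the script we actually ran: `translate_records`, `translate_file`, and the `translate` callable are hypothetical names, and the call to the ChatGPT API is deliberately left to the caller instead of being shown. The only thing taken as given is the Alpaca record layout with `instruction`, `input`, and `output` fields.

```python
import json
from typing import Callable, Dict, List

Record = Dict[str, str]

# Text fields of an Alpaca-style record that need translating.
FIELDS = ("instruction", "input", "output")


def translate_records(
    records: List[Record],
    translate: Callable[[str], str],
) -> List[Record]:
    """Return translated copies of the records; the originals are not modified.

    `translate` is a caller-supplied function (hypothetical here) that maps
    English text to Chinese, for example by wrapping a chat-completion API.
    """
    translated = []
    for record in records:
        new_record = dict(record)
        for field in FIELDS:
            text = record.get(field, "")
            if text:  # many Alpaca records have an empty "input"; skip those
                new_record[field] = translate(text)
        translated.append(new_record)
    return translated


def translate_file(src_path: str, dst_path: str, translate: Callable[[str], str]) -> None:
    """Read an Alpaca-style JSON file, translate it, and write the result."""
    with open(src_path, encoding="utf-8") as f:
        records = json.load(f)
    result = translate_records(records, translate)
    with open(dst_path, "w", encoding="utf-8") as f:
        # ensure_ascii=False keeps Chinese characters readable in the file.
        json.dump(result, f, ensure_ascii=False, indent=2)
```

Under these assumptions, a call such as `translate_file("alpaca_data.json", "trans_chinese_alpaca_data.json", my_translate)` would produce the translated file, where `my_translate` is whatever wrapper you write around your translation service. Skipping empty fields avoids paying for requests that carry no text.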

Project status (work in progress):

- translate the Alpaca JSON data into Chinese

- finetune with LoRA (model 0.1)

- release the 0.1 model (model A)

- upload the model to Hugging Face, GUI demo

- train LoRA with more Alpaca data (model 0.3)

- train LoRA with more Alpaca data (model 0.9)

If you find this project useful in your research, please consider citing:

@inproceedings{leng2023luotuo-ch-alpaca,
  title={Luotuo: Chinese-alpaca-lora},
  author={Ziang Leng and Qiyuan Chen and Cheng Li},
  url={https://github.com/LC1332/Chinese-alpaca-lora},
  year={2023}
}