This repository contains the implementation of the paper:

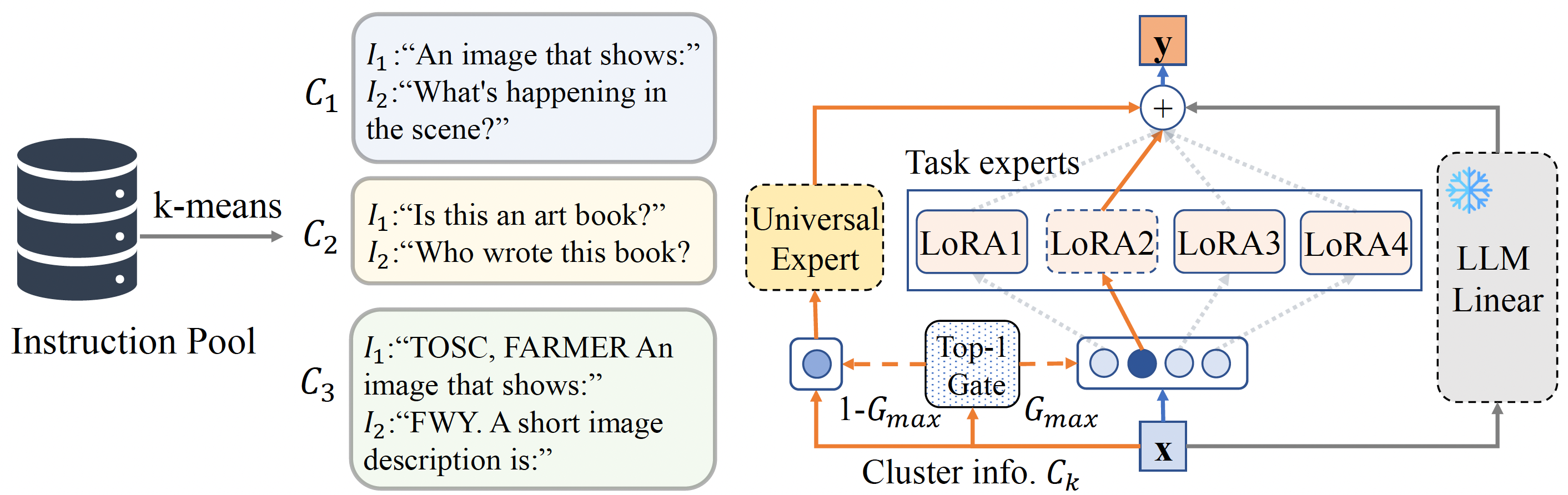

MoCLE: Mixture of Cluster-conditional LoRA Experts for Vision-language Instruction Tuning

Yunhao Gou, Zhili Liu, Kai Chen, Lanqing Hong, Hang Xu, Aoxue Li, Dit-Yan Yeung, James T. Kwok, Yu Zhang

-

Install LAVIS, the primary codebase on which MoCLE is built, in the current directory.

    conda create -n lavis python=3.8
    conda activate lavis
    git clone https://github.com/salesforce/LAVIS.git
    cd LAVIS
    pip install -e .

-

Clone the repository of MoCLE.

    git clone https://github.com/gyhdog99/mocle.git

-

Build our modified PEFT package.

    cd mocle
    cd peft-main
    pip install -e .

-

Copy `mocle.py` and `mocle.yaml` in this repository into the LAVIS directory following the architecture below:

    cd ../
    cp mocle.py ../lavis/models/blip2_models
    cp mocle.yaml ../lavis/configs/models/blip2

-

Modify `../lavis/models/__init__.py` in LAVIS as follows:

  - Add `from lavis.models.blip2_models.mocle import MoCLE` at the beginning of the file.
  - Add `"MoCLE"` to `__all__ = [...,...]`.
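
As a quick sanity check for the two edits to `__init__.py`, the sketch below parses a file's source with Python's standard `ast` module and reports which edit is still missing. It is only an illustration and not part of MoCLE or LAVIS: the helper name `missing_edits` is hypothetical, and it assumes `__all__` is assigned as a plain list or tuple literal at module level.

```python
import ast
from typing import List

IMPORT_MODULE = "lavis.models.blip2_models.mocle"
CLASS_NAME = "MoCLE"


def missing_edits(source: str) -> List[str]:
    """Return a description of each required edit that is absent from `source`."""
    tree = ast.parse(source)
    has_import = False
    in_all = False
    for node in tree.body:
        # Edit 1: `from lavis.models.blip2_models.mocle import MoCLE`
        if isinstance(node, ast.ImportFrom) and node.module == IMPORT_MODULE:
            if any(alias.name == CLASS_NAME for alias in node.names):
                has_import = True
        # Edit 2: "MoCLE" listed in `__all__` (assumed to be a list/tuple literal)
        elif isinstance(node, ast.Assign):
            names = [t.id for t in node.targets if isinstance(t, ast.Name)]
            if "__all__" in names and isinstance(node.value, (ast.List, ast.Tuple)):
                if any(
                    isinstance(elt, ast.Constant) and elt.value == CLASS_NAME
                    for elt in node.value.elts
                ):
                    in_all = True
    problems = []
    if not has_import:
        problems.append("missing import of MoCLE")
    if not in_all:
        problems.append('"MoCLE" not found in __all__')
    return problems
```

To check a real checkout, pass the file's text to the helper, for example `missing_edits(open("../lavis/models/__init__.py").read())`, with the path adjusted to your layout. An empty list means both edits were found.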

-

MoCLE is based on Vicuna-7B-v1.1. Download the corresponding LLM checkpoint here.

-

Set the `llm_model` argument in `../lavis/configs/mocle.yaml` to the local path of the downloaded Vicuna checkpoint.

-

Download the pre-trained checkpoint of MoCLE.

| # Clusters | Temperature | Main Model | Clustering Model |
|---|---|---|---|
| 16 | 0.05 | c16_t005 | c16 |
| 64 | 0.05 | c64_t005 | c64 |
| 64 | 0.10 | c64_t010 | c64 |

-

Set `finetuned` and `kmeans_ckpt` in `../lavis/configs/mocle.yaml` to the weights of the downloaded main model and clustering model, respectively. (Please adjust the `total_tasks` and `gates_tmp` parameters to match `# Clusters` and `Temperature`, respectively.)
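
To make the pairing between checkpoints and config values explicit, here is a minimal sketch that looks up the `total_tasks` and `gates_tmp` values for a chosen main model. The numbers are transcribed from the checkpoint table in this README; the helper name `config_values` and the `clustering_model` key are hypothetical and exist only for illustration, they are not fields of `mocle.yaml`.

```python
# Rows transcribed from the checkpoint table in this README:
# main model -> (# Clusters, Temperature, Clustering Model)
CHECKPOINTS = {
    "c16_t005": (16, 0.05, "c16"),
    "c64_t005": (64, 0.05, "c64"),
    "c64_t010": (64, 0.10, "c64"),
}


def config_values(main_model: str) -> dict:
    """Return the settings that should accompany the given main model."""
    clusters, temperature, clustering_model = CHECKPOINTS[main_model]
    return {
        "total_tasks": clusters,      # set to "# Clusters"
        "gates_tmp": temperature,     # set to "Temperature"
        # Hypothetical key: names the clustering model to use for `kmeans_ckpt`.
        "clustering_model": clustering_model,
    }
```

For example, `config_values("c64_t010")` yields `total_tasks` 64 and `gates_tmp` 0.10, paired with the `c64` clustering model. The values still have to be written into `mocle.yaml` by hand.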

-

Load an image locally

    import torch
    from PIL import Image

    # setup device to use
    device = torch.device("cuda") if torch.cuda.is_available() else "cpu"

    # load sample image
    raw_image = Image.open(".../path_to_images/").convert("RGB")

-

Load the models

    from lavis.models import load_model_and_preprocess

    # loads MoCLE model
    model, vis_processors, _ = load_model_and_preprocess(name="mocle", model_type="mocle", is_eval=True, device=device)

    # prepare the image
    image = vis_processors["eval"](raw_image).unsqueeze(0).to(device)

-

Generate

    response = model.generate({"image": image, "prompt": ["Your query about this image"]})
    print(response)
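
If you want to ask several questions about the same image, the call above can be wrapped in a small loop. The sketch below is an assumption-laden illustration rather than part of MoCLE: `ask_all` is a hypothetical helper, and `EchoModel` is a stand-in that only mimics the `generate` call shape shown above so that the snippet runs without a GPU or checkpoint.

```python
from typing import Any, Dict, List


def ask_all(model: Any, image: Any, prompts: List[str]) -> Dict[str, Any]:
    """Call model.generate once per prompt and collect the raw responses."""
    answers = {}
    for prompt in prompts:
        # Same input format as the single-query example: one image, one prompt.
        answers[prompt] = model.generate({"image": image, "prompt": [prompt]})
    return answers


class EchoModel:
    """Hypothetical stand-in for the loaded model; it just echoes the prompt."""

    def generate(self, samples: Dict[str, Any]) -> List[str]:
        return ["echo: " + p for p in samples["prompt"]]


if __name__ == "__main__":
    print(ask_all(EchoModel(), None, ["What is shown?", "How many objects?"]))
```

With the real model, pass the `model` and `image` objects created in the previous steps in place of `EchoModel()` and `None`.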

Coming soon.

- LAVIS: Our implementation of MoCLE is built upon LAVIS.

- PEFT: Our implementation of the mixture of LoRA experts is based on PEFT.

If you're using MoCLE in your research or applications, please cite using this BibTeX:

    @article{gou2023mixture,
      title={Mixture of cluster-conditional lora experts for vision-language instruction tuning},
      author={Gou, Yunhao and Liu, Zhili and Chen, Kai and Hong, Lanqing and Xu, Hang and Li, Aoxue and Yeung, Dit-Yan and Kwok, James T and Zhang, Yu},
      journal={arXiv preprint arXiv:2312.12379},
      year={2023}
    }