A PyTorch implementation of Human-level control through deep reinforcement learning

    ├── agents
    │   └── dqn.py                # the main training agent for the DQN
    ├── graphs
    │   ├── models
    │   │   └── dqn.py
    │   └── losses
    │       └── huber_loss.py     # contains the Huber loss definition
    ├── datasets                  # contains all dataloaders for the project
    ├── utils                     # utilities folder containing input extraction, replay memory, config parsing, etc.
    │   ├── assets
    │   ├── replay_memory.py
    │   └── env_utils.py
    ├── main.py
    └── run.sh
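
The tree lists `utils/replay_memory.py` without showing its contents. As a rough illustration of what a DQN replay memory typically does, here is a minimal sketch in plain Python. The `Transition` fields and the `ReplayMemory` method names are assumptions made for this example, not the contents of that file.

```python
import random
from collections import deque, namedtuple

# Hypothetical transition layout; the project's own fields may differ.
Transition = namedtuple("Transition", ("state", "action", "next_state", "reward"))


class ReplayMemory:
    """Fixed-size buffer that discards the oldest transition when full."""

    def __init__(self, capacity):
        self.memory = deque(maxlen=capacity)

    def push(self, state, action, next_state, reward):
        # Appending to a full deque silently drops the oldest entry.
        self.memory.append(Transition(state, action, next_state, reward))

    def sample(self, batch_size):
        # Uniform sampling without replacement breaks the correlation
        # between consecutive steps of the same episode.
        return random.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)


memory = ReplayMemory(capacity=3)
for step in range(5):
    memory.push(state=step, action=0, next_state=step + 1, reward=1.0)

print(len(memory))            # 3
print(memory.memory[0].state) # 2, since steps 0 and 1 were evicted
batch = memory.sample(2)
```

A training loop would usually wait until `len(memory)` reaches the batch size before calling `sample`, because sampling more items than are stored raises a `ValueError`.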

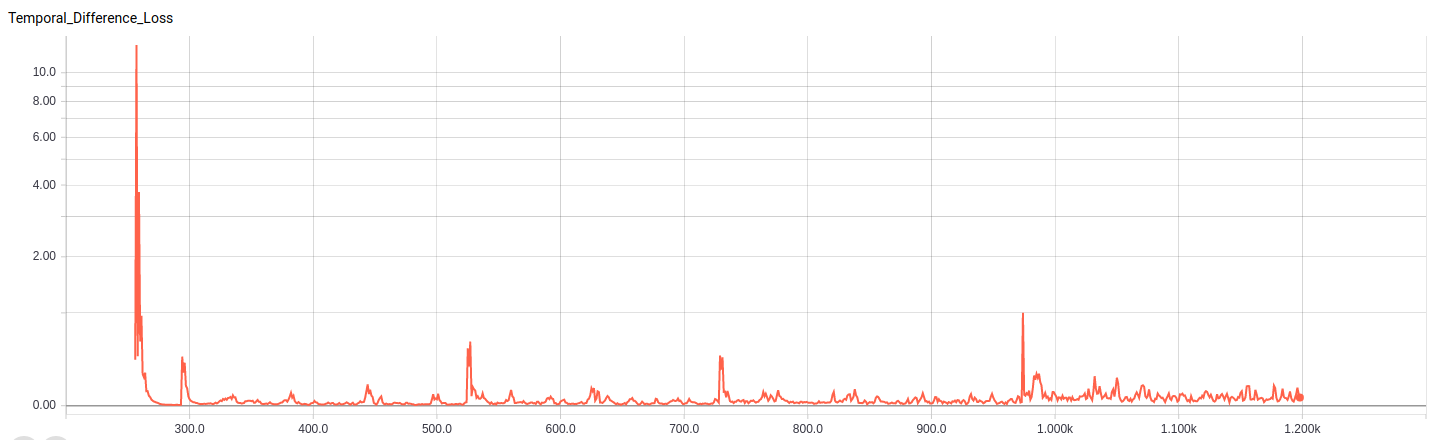

Loss during training:

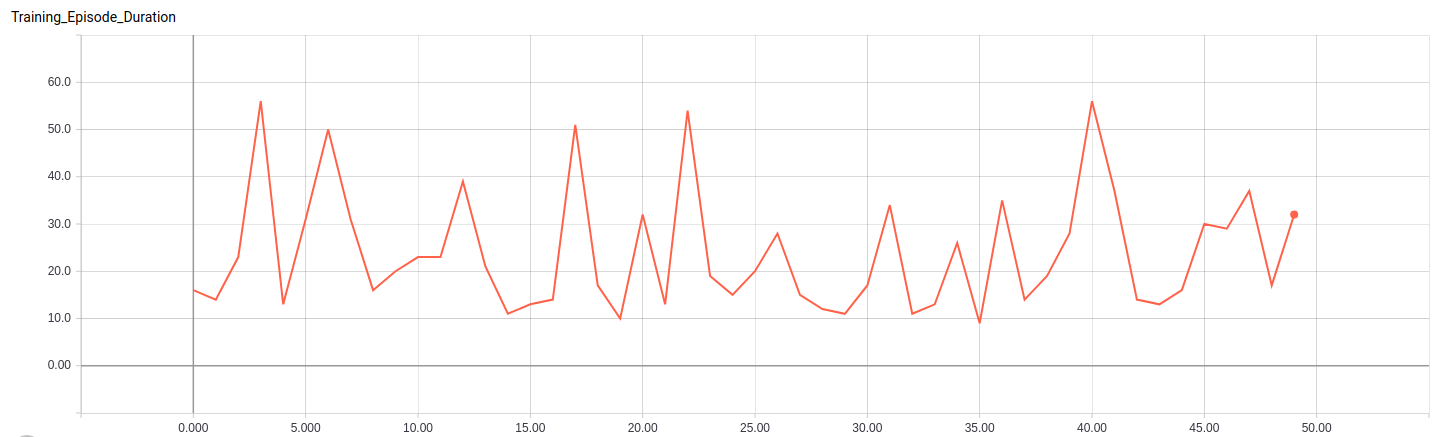
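
For orientation, the Huber loss named in `graphs/losses/huber_loss.py` is quadratic for small errors and linear for large ones, which limits the influence of outlier TD errors. The sketch below is a plain-Python illustration with an assumed threshold `delta=1.0`; the function names are hypothetical and this is not the project's own file. Whether the curve above was produced with exactly this loss is likewise an assumption.

```python
def huber_loss(prediction, target, delta=1.0):
    """Huber loss for a single scalar prediction."""
    error = prediction - target
    abs_error = abs(error)
    if abs_error <= delta:
        # Quadratic region: behaves like half the squared error.
        return 0.5 * error * error
    # Linear region: slope is capped at delta.
    return delta * (abs_error - 0.5 * delta)


def mean_huber_loss(predictions, targets, delta=1.0):
    """Average Huber loss over a batch given as two equal-length lists."""
    losses = [huber_loss(p, t, delta) for p, t in zip(predictions, targets)]
    return sum(losses) / len(losses)


print(huber_loss(0.5, 0.0))  # 0.125 (quadratic region)
print(huber_loss(3.0, 0.0))  # 2.5   (linear region)
```

With `delta=1.0` this should agree with PyTorch's smooth L1 loss (`torch.nn.functional.smooth_l1_loss`) under its default settings, so a tensor-based version would not need the explicit branch.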

Duration of each episode:

- To run the project, add your configuration to the `configs/` folder, then run `sh run.sh`.

- To run on a GPU, enable cuda in the config file.
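
As a sketch of how a cuda switch in the config might be honoured, the snippet below parses a JSON config and falls back to the CPU when no GPU is available. The JSON format, the key names `exp_name` and `cuda`, and the helper `select_device` are assumptions for illustration; check the files in `configs/` for the real schema.

```python
import json


def select_device(config, cuda_available):
    """Return 'cuda' only if the config requests it and a GPU is usable."""
    wants_cuda = bool(config.get("cuda", False))
    return "cuda" if wants_cuda and cuda_available else "cpu"


# Hypothetical config contents; a real run would read a file from configs/.
config = json.loads('{"exp_name": "dqn_example", "cuda": true}')

# In a PyTorch program, pass torch.cuda.is_available() as the second argument.
print(select_device(config, cuda_available=False))  # cpu
```

Falling back silently keeps the same config usable on machines without a GPU; a stricter variant could raise an error instead.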

- PyTorch: 0.4.0

- torchvision: 0.2.1

- tensorboardX: 0.8

Check requirements.txt.

- Test DQN on a more complex environment such as MS-Pacman

- PyTorch official example: https://pytorch.org/tutorials/intermediate/reinforcement_q_learning.html

- Pytorch-dqn: https://github.com/transedward/pytorch-dqn/blob/master/dqn_learn.py

This project is licensed under the MIT License; see the LICENSE file for details.