Welcome to the Third Situated Interactive Multimodal Conversations (SIMMC 2.1) Track page for DSTC11 2022.

The SIMMC challenge aims to lay the foundations for real-world assistant agents that can handle multimodal inputs and perform multimodal actions. Specifically, we focus on task-oriented dialogs that encompass a situated multimodal user context in the form of a co-observed & immersive virtual reality (VR) environment. The conversational context is dynamically updated on each turn based on the user actions (e.g. via verbal interactions, navigation within the scene).

The Second SIMMC challenge ended successfully, receiving a number of new state-of-the-art models in the novel multimodal dialog task. Building upon the success of the previous editions of the SIMMC challenges, we propose a third edition of the SIMMC challenge for the community to tackle and continue the effort towards building a successful multimodal assistant agent.

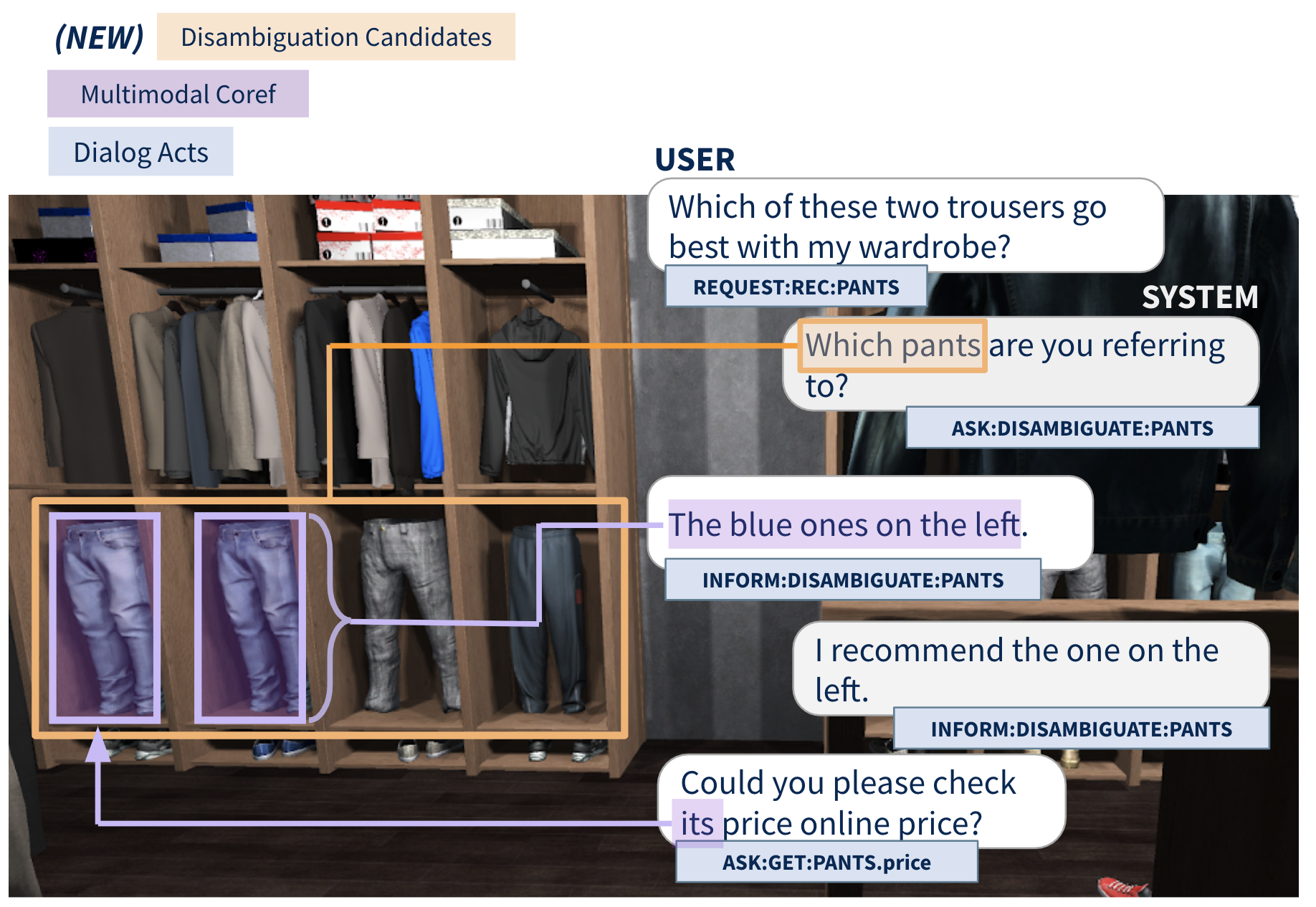

In this edition of the challenge, we specifically focus on the key challenge of fine-grained visual disambiguation, which adds an important skill to the assistant agents studied in the previous SIMMC challenge. To support this challenge, we provide an improved version of the dataset, SIMMC 2.1, which augments the SIMMC 2.0 dataset with additional annotations (i.e. identification of all possible referent candidates given ambiguous mentions) and corresponding re-paraphrases to support the study and modeling of visual disambiguation.

Organizers: Seungwhan Moon, Satwik Kottur, Babak Damavandi, Alborz Geramifard

Illustration of the SIMMC 2.1 Dataset

- [June 28, 2022] DSTC11-SIMMC2.1 Challenge announcement. Training / development datasets (SIMMC v2.1) are released.

- SIMMC 2.1 Challenge Proposal (DSTC11)

- Task Description Paper (EMNLP 2021)

- Data Formats

For this edition of the challenge, we focus on four sub-tasks primarily aimed at replicating human-assistant actions in order to enable rich and interactive shopping scenarios.

For more detailed information on the new SIMMC 2.1 dataset and the instructions, please refer to the DSTC11 challenge proposal document.

| Sub-Task #1 | Ambiguous Candidate Identification (New) |
|---|---|
| Goal | Given ambiguous object mentions, to resolve referent objects to their canonical ID(s). |
| Input | Current user utterance, Dialog context, Multimodal context |
| Output | Canonical object IDs |
| Metrics | Object Identification F1 / Precision / Recall |

| Sub-Task #2 | Multimodal Coreference Resolution |
|---|---|
| Goal | To resolve referent objects to their canonical ID(s) as defined by the catalog. |
| Input | Current user utterance, Dialog context, Multimodal context |
| Output | Canonical object IDs |
| Metrics | Coref F1 / Precision / Recall |
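
Sub-tasks #1 and #2 are both scored by comparing predicted object IDs against annotated ones. As a rough illustration of how such F1 / Precision / Recall numbers can be computed, the sketch below micro-averages over turns, treating each turn's object IDs as a set. The function name `object_prf` and the micro-averaging choice are assumptions made for this example; the evaluation scripts in the repository remain the authoritative definition of the metrics.

```python
from typing import Iterable, Tuple


def object_prf(
    predictions: Iterable[Iterable[int]],
    targets: Iterable[Iterable[int]],
) -> Tuple[float, float, float]:
    """Micro-averaged precision, recall, and F1 over per-turn object ID sets.

    Hypothetical helper for illustration; not the official scorer.
    """
    n_correct = n_pred = n_true = 0
    for pred_ids, true_ids in zip(predictions, targets):
        pred_set, true_set = set(pred_ids), set(true_ids)
        n_correct += len(pred_set & true_set)  # IDs predicted and annotated
        n_pred += len(pred_set)
        n_true += len(true_set)
    precision = n_correct / n_pred if n_pred else 0.0
    recall = n_correct / n_true if n_true else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Two turns: the first over-predicts one object, the second misses one.
precision, recall, f1 = object_prf([[1, 2], [5]], [[1], [5, 7]])
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")
```

Pooling counts across turns before dividing means that turns with many referent objects weigh more than turns with one; a per-turn (macro) average would behave differently.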

| Sub-Task #3 | Multimodal Dialog State Tracking (MM-DST) |
|---|---|
| Goal | To track user belief states across multiple turns |
| Input | Current user utterance, Dialog context, Multimodal context |
| Output | Belief state for current user utterance |
| Metrics | Slot F1, Intent F1 |

| Sub-Task #4 | Multimodal Dialog Response Generation |
|---|---|
| Goal | To generate Assistant responses |
| Input | Current user utterance, Dialog context, Multimodal context, (Ground-truth API Calls) |
| Output | Assistant response utterance |
| Metrics | BLEU-4 |
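
For readers unfamiliar with BLEU-4, the sketch below shows the core idea: clipped n-gram precision for n = 1 to 4, combined by a geometric mean and scaled by a brevity penalty. It is a simplified, unsmoothed, corpus-level variant with one reference per response and whitespace tokenization, all of which are assumptions for this example. The function names are hypothetical, and the official evaluation script may differ in tokenization, smoothing, and averaging.

```python
import math
from collections import Counter
from typing import List, Sequence


def ngram_counts(tokens: Sequence[str], n: int) -> Counter:
    """Count all n-grams of order n in a token sequence."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def corpus_bleu4(hypotheses: List[List[str]], references: List[List[str]]) -> float:
    """Unsmoothed corpus-level BLEU-4 with a single reference per hypothesis."""
    matches = [0] * 4
    totals = [0] * 4
    hyp_len = ref_len = 0
    for hyp, ref in zip(hypotheses, references):
        hyp_len += len(hyp)
        ref_len += len(ref)
        for n in range(1, 5):
            hyp_counts, ref_counts = ngram_counts(hyp, n), ngram_counts(ref, n)
            # Counter intersection clips each n-gram count by the reference count.
            matches[n - 1] += sum((hyp_counts & ref_counts).values())
            totals[n - 1] += sum(hyp_counts.values())
    if hyp_len == 0 or min(matches) == 0:
        return 0.0  # without smoothing, any zero precision gives a zero score
    log_precision = sum(math.log(m / t) for m, t in zip(matches, totals)) / 4
    brevity_penalty = 1.0 if hyp_len > ref_len else math.exp(1 - ref_len / hyp_len)
    return brevity_penalty * math.exp(log_precision)


hypothesis = "the red jacket is on the left rack".split()
reference = "the red jacket is on the right rack".split()
print(round(corpus_bleu4([hypothesis], [reference]), 4))
```

Because this variant is unsmoothed, a short response with no matching 4-gram scores zero, which is one reason sentence-level evaluations usually apply smoothing.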

Please check the task input file for a full description of inputs for each subtask.

We will provide baselines for all four tasks so that participants can benchmark their models. Feel free to use the code to bootstrap your model.

| Subtask | Name | Baseline Results |
|---|---|---|
| #1 | Ambiguous Candidate Identification | Link |
| #2 | Multimodal Coreference Resolution | Link |
| #3 | Multimodal Dialog State Tracking (MM-DST) | Link |
| #4 | Multimodal Dialog Response Generation | Link |

- Git clone our repository to download the datasets and the code. You may use the provided baselines as a starting point to develop your models.

$ git lfs install

$ git clone https://github.com/facebookresearch/simmc2.git

- Also please feel free to check out other open-sourced repositories from the previous SIMMC 2.0 challenge here.

Please contact simmc@fb.com, or leave comments in the GitHub repository.

If you want to get the latest updates about DSTC11, join the DSTC mailing list.

If you want to publish experimental results with our datasets or use the baseline models, please cite the following articles:

    @inproceedings{kottur-etal-2021-simmc,
        title = "{SIMMC} 2.0: A Task-oriented Dialog Dataset for Immersive Multimodal Conversations",
        author = "Kottur, Satwik and
          Moon, Seungwhan and
          Geramifard, Alborz and
          Damavandi, Babak",
        booktitle = "Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing",
        month = nov,
        year = "2021",
        address = "Online and Punta Cana, Dominican Republic",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2021.emnlp-main.401",
        doi = "10.18653/v1/2021.emnlp-main.401",
        pages = "4903--4912",
    }

NOTE: The paper (EMNLP 2021) above describes in detail the datasets, the collection process, and some of the baselines we provide in this challenge.

SIMMC 2 is released under CC-BY-NC-SA-4.0; see LICENSE for details.