TGDoc: Text-Grounding Document Understanding with MLLMs

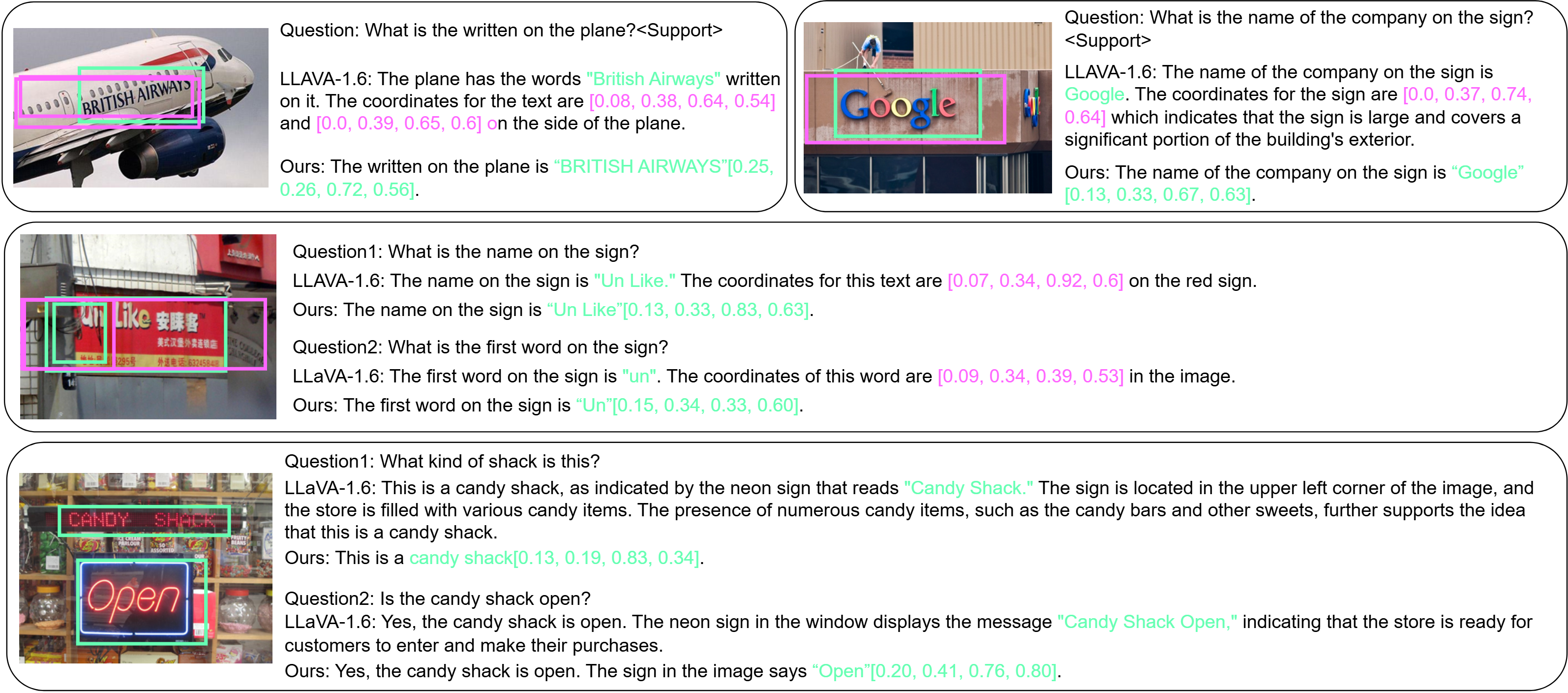

TGDoc enhances document understanding by equipping Multimodal Large Language Models (MLLMs) with text-grounding capabilities, so that the model can support its answers with the coordinates of the relevant text in the document image.

This project builds upon LLaVA. Set up your environment:

```bash
git clone https://github.com/haotian-liu/LLaVA.git
cd LLaVA
conda create -n llava python=3.10 -y
conda activate llava
pip install --upgrade pip
pip install -e .
pip install flash-attn --no-build-isolation
```

Key dependency versions:

```text
deepspeed==0.9.5
peft==0.4.0
transformers==4.31.0
accelerate==0.21.0
bitsandbytes==0.41.0
```
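A mismatched dependency version is easy to miss after installation, so a quick check can help. The Python snippet below is a minimal sketch and is not part of the TGDoc or LLaVA repositories. The `PINNED` dictionary mirrors the versions listed above, and `installed_version` and `check_pins` are hypothetical helpers. The comparison is an exact string match, so a build with a local suffix (for example `+cu118`) would be reported as a mismatch.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable, Dict, Optional

# Versions pinned in the setup instructions.
PINNED: Dict[str, str] = {
    "deepspeed": "0.9.5",
    "peft": "0.4.0",
    "transformers": "4.31.0",
    "accelerate": "0.21.0",
    "bitsandbytes": "0.41.0",
}


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return version(name)
    except PackageNotFoundError:
        return None


def check_pins(
    pins: Dict[str, str],
    get_version: Callable[[str], Optional[str]] = installed_version,
) -> Dict[str, Optional[str]]:
    """Return {package: found_version} for every package that does not match its pin.

    A value of None means the package is not installed at all.
    """
    mismatches: Dict[str, Optional[str]] = {}
    for name, expected in pins.items():
        found = get_version(name)
        if found != expected:  # exact string comparison
            mismatches[name] = found
    return mismatches


if __name__ == "__main__":
    problems = check_pins(PINNED)
    if not problems:
        print("All pinned dependencies match.")
    for name, found in problems.items():
        print(f"{name}: expected {PINNED[name]}, found {found or 'not installed'}")
```

The `get_version` parameter is injectable so the check can be exercised without touching the real environment.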

| Model Name | Download Link | Access Code |
|---|---|---|
| tgdoc-7b-finetune-336 | Download | xhif |

- Complete dataset: Download (Access Code: gxqt)
  - Includes the LLaVA and LLaVAR datasets

- Configure settings in `llava/data/config.py`.

- Select the appropriate training script:

  ```bash
  # Pretraining
  bash scripts/pretrain.sh

  # Fine-tuning
  bash scripts/finetune.sh
  ```

To test the model, run:

```bash
python test.py
```

We use MultimodalOCR for evaluation, appending the following instruction to each question: "Support your reasoning with the coordinates [xmin, ymin, xmax, ymax]".
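The prompt modification used for evaluation can be sketched in Python as follows. This is an illustration under stated assumptions, not the code in `test.py`. The helper names `add_grounding_prompt` and `parse_boxes` are hypothetical. The suffix is joined to the question with a single space, which is an assumption because the exact separator is not specified. `parse_boxes` assumes the model writes its boxes in the same `[xmin, ymin, xmax, ymax]` bracket format that the prompt requests.

```python
import re
from typing import List, Tuple

# Instruction appended to every MultimodalOCR question during evaluation.
GROUNDING_SUFFIX = "Support your reasoning with the coordinates [xmin, ymin, xmax, ymax]"

# One number (integer or decimal, optionally negative) with surrounding whitespace.
_NUM = r"\s*(-?\d+(?:\.\d+)?)\s*"
# Four comma-separated numbers inside square brackets, e.g. [0.12, 0.30, 0.58, 0.41].
BOX_PATTERN = re.compile(r"\[" + ",".join([_NUM] * 4) + r"\]")

Box = Tuple[float, float, float, float]


def add_grounding_prompt(question: str) -> str:
    """Append the grounding instruction to a question (assumed single-space separator)."""
    return f"{question.rstrip()} {GROUNDING_SUFFIX}"


def parse_boxes(response: str) -> List[Box]:
    """Extract every numeric [xmin, ymin, xmax, ymax] box from a response, in order."""
    boxes: List[Box] = []
    for match in BOX_PATTERN.finditer(response):
        xmin, ymin, xmax, ymax = (float(v) for v in match.groups())
        boxes.append((xmin, ymin, xmax, ymax))
    return boxes
```

The placeholder `[xmin, ymin, xmax, ymax]` in the suffix itself is non-numeric, so `parse_boxes` ignores it and only picks up boxes that contain actual coordinates.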

```bibtex
@article{wang2023towards,
  title={Towards Improving Document Understanding: An Exploration on Text-Grounding via MLLMs},
  author={Wang, Yonghui and Zhou, Wengang and Feng, Hao and Zhou, Keyi and Li, Houqiang},
  journal={arXiv preprint arXiv:2311.13194},
  year={2023}
}
```

This project builds upon LLaVA. We thank the original authors for their great work.