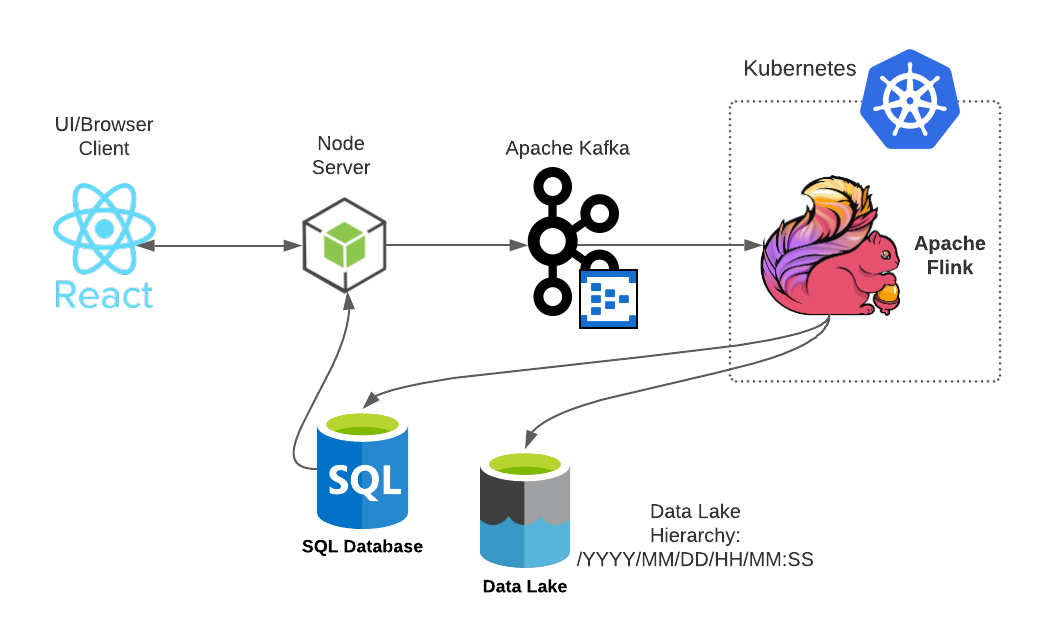

Streams a large set of images and uploads the unique ones in every 30-second tumbling window, using Apache Flink, Apache Kafka (Azure Event Hub), and a Data Lake (ADLS). For every window, it saves an upload summary in a Microsoft SQL database (Azure SQL) and displays it in a React UI alongside an image upload form.

- React UI
  - The user selects one or more images at once (each image should be smaller than 300 KB). These images are sent to the Node API.
  - Fetches the latest upload summary from the SQL database via the Node server every 10 seconds.

- Node server
  - Provides a POST API for the React UI to post images.
  - Pushes the posted images into the Apache Kafka topic in a batch.
  - Provides a GET API for fetching the upload summary of each 30-second time window.

- Kafka (Azure Event Hub)
  - Provides a topic to stream images.

- Flink + Kubernetes
  - Pulls images from the Apache Kafka topic.
  - Finds unique images by comparing their content, irrespective of their names, and maintains a count for each. This aggregation is done for every 30-second tumbling window.
  - Uploads these images to the Data Lake (Azure Data Lake Gen 2).
  - Inserts each 30-second upload summary (image name, count, Data Lake URL, time) into the SQL database.

- Data Lake
  - The directory structure has the form `YYYY/MM/DD/HH/MM:SS`, so images are organized by time.
  - The URL for accessing each uploaded image is saved in the SQL database as part of the upload summary shown in the UI.

- SQL
  - Has a table `UploadSummary`, which contains `FileName`, `Count`, `Url` (for accessing the image), and `Time`. This table is displayed in the UI below the form.
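The per-window deduplication that the Flink job performs can be illustrated without a cluster. The plain-Java sketch below (no Flink dependency) assigns each event to a 30-second tumbling window aligned to the epoch, keys images by a SHA-256 hash of their bytes so that copies with identical content collapse into one entry whatever their names, and builds a Data Lake path in the `YYYY/MM/DD/HH/MM:SS` layout. It is not the code in `Upload.java`: the class and field names, the use of SHA-256, keeping the first file name seen, rendering the directory in UTC, and appending the file name to the directory are all assumptions for illustration.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class WindowDedupSketch {
    // 30-second tumbling windows, as described above.
    static final long WINDOW_MS = 30_000L;

    // Directory layout YYYY/MM/DD/HH/MM:SS; rendering it in UTC is an assumption.
    static final DateTimeFormatter LAKE_DIR =
            DateTimeFormatter.ofPattern("yyyy/MM/dd/HH/mm:ss").withZone(ZoneOffset.UTC);

    // Hypothetical input event: file name, raw bytes, event time in epoch milliseconds.
    static final class ImageEvent {
        final String name;
        final byte[] content;
        final long timestampMs;

        ImageEvent(String name, byte[] content, long timestampMs) {
            this.name = name;
            this.content = content;
            this.timestampMs = timestampMs;
        }
    }

    // Hypothetical summary row: one per unique image content within a window.
    static final class SummaryRow {
        final String fileName; // first name seen for this content in the window
        final String lakePath;
        int count = 1;

        SummaryRow(String fileName, String lakePath) {
            this.fileName = fileName;
            this.lakePath = lakePath;
        }
    }

    // Start of the epoch-aligned tumbling window that contains timestampMs.
    static long windowStart(long timestampMs) {
        return timestampMs - Math.floorMod(timestampMs, WINDOW_MS);
    }

    // Content key: hex-encoded SHA-256 of the bytes, independent of the file name.
    static String contentKey(byte[] bytes) {
        try {
            StringBuilder hex = new StringBuilder();
            for (byte b : MessageDigest.getInstance("SHA-256").digest(bytes)) {
                hex.append(String.format("%02x", b));
            }
            return hex.toString();
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 unavailable", e);
        }
    }

    // Groups events by window start, then by content key, counting duplicates.
    static Map<Long, Map<String, SummaryRow>> summarize(List<ImageEvent> events) {
        Map<Long, Map<String, SummaryRow>> windows = new TreeMap<>();
        for (ImageEvent e : events) {
            long start = windowStart(e.timestampMs);
            Map<String, SummaryRow> rows = windows.computeIfAbsent(start, k -> new LinkedHashMap<>());
            String key = contentKey(e.content);
            SummaryRow row = rows.get(key);
            if (row == null) {
                String path = LAKE_DIR.format(Instant.ofEpochMilli(start)) + "/" + e.name;
                rows.put(key, new SummaryRow(e.name, path));
            } else {
                row.count++;
            }
        }
        return windows;
    }

    public static void main(String[] args) {
        byte[] cat = "cat-bytes".getBytes(StandardCharsets.UTF_8);
        byte[] dog = "dog-bytes".getBytes(StandardCharsets.UTF_8);
        List<ImageEvent> events = List.of(
                new ImageEvent("cat.png", cat, 0L),
                new ImageEvent("kitty.png", cat, 5_000L), // same bytes, different name
                new ImageEvent("dog.png", dog, 12_000L),
                new ImageEvent("cat.png", cat, 31_000L)); // lands in the next window
        for (Map<String, SummaryRow> rows : summarize(events).values()) {
            for (SummaryRow r : rows.values()) {
                System.out.println(r.fileName + " count=" + r.count + " -> " + r.lakePath);
            }
        }
    }
}
```

Running `main` prints `cat.png count=2` and `dog.png count=1` under `1970/01/01/00/00:00`, then `cat.png count=1` under `1970/01/01/00/00:30`, because `kitty.png` has the same bytes as `cat.png` and the last event falls into the second window. In a real Flink job the window assignment and per-window state would be handled by Flink itself; keying by a hash rather than by the full image bytes keeps that state small.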

Configuration:

- React UI
  - Update the Node server URL (`baseUrl` value) in `client/api.js`.

- Node server
  - In `server/app.js`:
    - Update the Kafka endpoint URL (`brokers`).
    - Update the Kafka `username`, `password`, and `topicName`.
    - Update `sqlConnectionString`.

- Flink
  - In `flink/src/main/java/com/flink/app/Upload.java`, update the Kafka topic in `CONSUMER_TOPIC`, and the Data Lake account key, account name, and container name in `ACCOUNT_KEY`, `ACCOUNT_NAME`, and `CONTAINER_NAME` respectively.
  - In `flink/src/main/resources/database.properties`, update the JDBC connection string in `url` and the database `user` and `password`.
  - In `flink/src/main/resources/Kafka.properties`, update the Kafka endpoint URL in `url` and the Kafka `user` and `password`.

- Kubernetes
  - In `flink/kubernetes/flink-configuration-config.yaml`, update the Kafka properties (`bootstrap.servers`, and the Kafka username and password in `sasl.jaas.config`) and the database properties (the JDBC connection string in `url`, plus `user` and `password`).
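The Kafka settings above (`bootstrap.servers` and `sasl.jaas.config`) follow the usual pattern for the Kafka-compatible endpoint of Azure Event Hubs. The sketch below assumes connection-string authentication, where the SASL username is the literal `$ConnectionString` and the password is the Event Hubs connection string. The namespace, connection string, and consumer group values are placeholders, and the class and method names are hypothetical, not taken from this repository.

```java
import java.util.Properties;

public class EventHubsKafkaConfigSketch {
    // Builds Kafka client properties for an Azure Event Hubs namespace.
    // All arguments are placeholders supplied by the caller.
    static Properties consumerProperties(String namespace, String connectionString, String groupId) {
        Properties props = new Properties();
        // Event Hubs serves its Kafka endpoint on port 9093 of the namespace host.
        props.setProperty("bootstrap.servers", namespace + ".servicebus.windows.net:9093");
        // Connection-string auth uses SASL PLAIN over TLS.
        props.setProperty("security.protocol", "SASL_SSL");
        props.setProperty("sasl.mechanism", "PLAIN");
        // The username is the literal "$ConnectionString"; the password is the connection string.
        props.setProperty("sasl.jaas.config",
                "org.apache.kafka.common.security.plain.PlainLoginModule required "
                        + "username=\"$ConnectionString\" password=\"" + connectionString + "\";");
        props.setProperty("group.id", groupId);
        return props;
    }

    public static void main(String[] args) {
        Properties props = consumerProperties("my-namespace", "<connection-string>", "flink-upload");
        System.out.println(props.getProperty("bootstrap.servers"));
    }
}
```

In Flink 1.12, a `Properties` object like this can be passed to the `FlinkKafkaConsumer` constructor together with the topic name and a deserialization schema.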

Build and deploy:

- React UI
  - `npm run build` creates a production build and `serve -s build` runs it. `npm start` runs the development build.

- Node
  - `npm start` runs the server.

- Flink
  - For local:
    - To set up a Flink cluster, follow this. I used the Flink 1.12 (Scala 2.11, Java 11) build.
    - `mvn clean install -Pbuild-jar` creates a jar at `flink/target/flink-upload-1.0-SNAPSHOT.jar`. Deploy this jar in your cluster. If you're new to deploying, you can check this beginner example.
  - For cloud:
    - If you're new, you can read this to deploy Apache Flink on a Kubernetes cluster. All YAML files are provided in the `flink/kubernetes` folder.

- Kafka
  - Create a Kafka cluster or endpoint (I used Azure Event Hub) and create a topic in it. A YAML template is provided.

- Data Lake
  - Create a data lake and a container inside it (I used Azure Data Lake Gen 2) to store images. A YAML template is provided.

To do:

- Unit test cases

- Logging

- Partitioning in the Kafka topic

- Parallelism in the Flink service

- Managed identity or token-based authentication

- CI/CD automation

- TypeScript