✅ State of the art of databases used in Multimodal Emotion Recognition (MER) that include at least the visual and vocal modalities.

✅ Focus on the representation of ambiguity in emotional models for each database. We define emotional ambiguity as the difficulty for humans to identify, express and recognize an emotion with certainty.

- Emotional Models: Brief summary of the discrete and continuous emotion representation

- Databases with Discrete Emotions: General description and position with respect to the representation of emotional ambiguity

- Databases with Continuous Emotions: General description and position with respect to the representation of emotional ambiguity

The full article describes the main emotional models and presents a literature review of the most widely used trimodal databases (images, voice, text), with a study of how each one represents emotional ambiguity.

Hélène Tran, Lisa Brelet, Issam Falih, Xavier Goblet, & Engelbert Mephu Nguifo (2022). L'ambiguïté dans la représentation des émotions : état de l'art des bases de données multimodales. Revue des Nouvelles Technologies de l'Information, Extraction et Gestion des Connaissances, RNTI-E-38, 87-98. https://editions-rnti.fr/?inprocid=1002719

Bonus! Our 3-minute presentation video (:fr:) is here: https://www.youtube.com/watch?v=pDQ1hpQJF8Q&list=PLApKApkHPMWHARfMnXMfYRyskRIv_huxM&index=10

Feel free to contact us at: helene.tran@doctorant.uca.fr

Please cite the following paper if our work is useful for your research:

@article{RNTI/papers/1002719,

author = {Hélène Tran and Lisa Brelet and Issam Falih and Xavier Goblet and Engelbert Mephu Nguifo},

title = {L'ambiguïté dans la représentation des émotions : état de l'art des bases de données multimodales},

journal = {Revue des Nouvelles Technologies de l'Information},

volume = {Extraction et Gestion des Connaissances, RNTI-E-38},

year = {2022},

pages = {87-98}

}

🔗 Anchor Links:

- Discrete Model: Description of discrete emotions and the main representations

- Continuous Model: Description of continuous emotions and the main representations

In the discrete model, emotions are represented as discrete affective states. This is the most natural way for humans to describe their own emotions. From the evolutionary perspective, there are two main categories of emotions:

- Primary emotions (or basic emotions): innate and universal emotions, short-lived and quickly triggered in response to an emotional stimulus. The list of primary emotions varies greatly depending on the discipline and the author. The most common ones are:

  - The six primary emotions of Ekman (1992): fear, anger, joy, sadness, disgust and surprise.

  - Plutchik's (2001) Wheel of Emotions, with eight primary emotions grouped into four pairs of opposites: joy and sadness, trust and disgust, fear and anger, anticipation and surprise.

    Plutchik's Wheel of Emotions

- Secondary emotions (or complex emotions): they derive from the experience of primary emotions, are acquired through learning and confrontation with reality, and are culture-dependent. For example, anger may be followed by shame or guilt. Secondary emotions are usually combinations of primary emotions (Nugier, 2009).
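Plutchik's opposing pairs lend themselves to a simple symmetric lookup table. The sketch below is illustrative only: `PLUTCHIK_OPPOSITES`, `OPPOSITE` and `opposite()` are hypothetical names, and it uses Plutchik's English label "trust" for the emotion sometimes rendered as "confidence".

```python
# Plutchik's (2001) eight primary emotions as four pairs of opposites.
# "trust" is Plutchik's English label; some translations render it "confidence".
PLUTCHIK_OPPOSITES = [
    ("joy", "sadness"),
    ("trust", "disgust"),
    ("fear", "anger"),
    ("anticipation", "surprise"),
]

# Symmetric lookup: each emotion maps to its opposite, in both directions.
OPPOSITE = {}
for first, second in PLUTCHIK_OPPOSITES:
    OPPOSITE[first] = second
    OPPOSITE[second] = first

# Ekman's (1992) six basic emotions, for comparison.
EKMAN_BASIC = {"fear", "anger", "joy", "sadness", "disgust", "surprise"}


def opposite(emotion: str) -> str:
    """Return the opposite primary emotion on Plutchik's wheel (case-insensitive)."""
    return OPPOSITE[emotion.lower()]


print(opposite("Fear"))                     # anger
print(sorted(set(OPPOSITE) - EKMAN_BASIC))  # ['anticipation', 'trust']
```

The set difference also shows that Plutchik's wheel contains all six of Ekman's emotions plus two more.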

Primary emotions are frequently chosen as classes when developing recognition models, which avoids overlapping emotional states. Although the discrete approach remains widely favoured in research because it is easy to interpret, recognizing a single primary emotion is not enough to describe the whole spectrum of emotions.
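Treating primary emotions as mutually exclusive classes usually translates into one-hot targets for a classifier. A minimal sketch, assuming Ekman's six emotions as the class set in an arbitrary fixed order (`CLASSES` and `one_hot()` are hypothetical names):

```python
from typing import List

# Assumed class set: Ekman's six basic emotions, in an arbitrary but fixed order.
CLASSES = ["fear", "anger", "joy", "sadness", "disgust", "surprise"]
CLASS_INDEX = {name: i for i, name in enumerate(CLASSES)}


def one_hot(emotion: str) -> List[int]:
    """Encode one primary emotion as a one-hot target vector.

    Exactly one component is 1, so classes never overlap, but a blend of
    two emotions cannot be expressed either.
    """
    vector = [0] * len(CLASSES)
    vector[CLASS_INDEX[emotion]] = 1
    return vector


print(one_hot("joy"))  # [0, 0, 1, 0, 0, 0]
```

The single active component is exactly what makes this encoding unable to carry an ambiguous or mixed emotional state.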

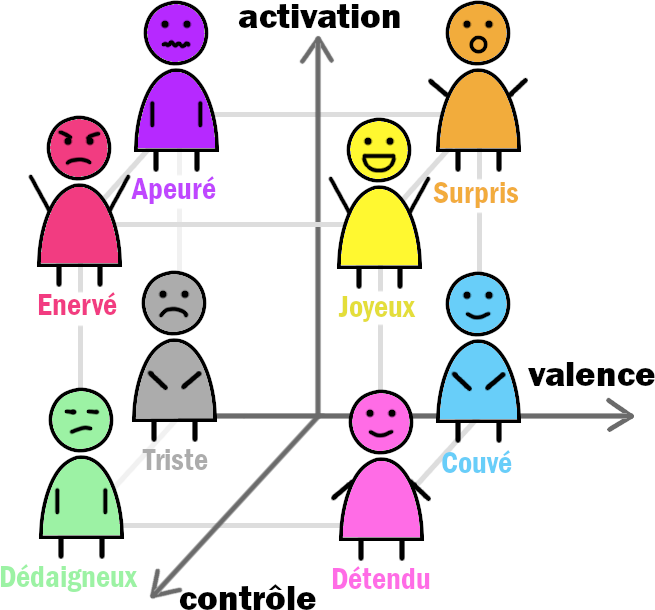

In the continuous model, emotions are placed in a multidimensional space. Most researchers working on this model agree on the following two dimensions:

- Valence corresponds to the pleasantness of the emotion. For example, disgust is an unpleasant emotion and is therefore associated with a negative valence. In contrast, a positive valence is associated with a pleasant emotion such as joy.

- Arousal (or activation) refers to the physiological intensity felt. Sadness, which leads to withdrawal, is associated with low arousal. Anger has high arousal because it releases a surge of energy that prepares the individual to fight.

These two dimensions are not sufficient to characterize an emotion (e.g. anger and fear both have a negative valence and a high arousal), hence the need for a third dimension. However, there is no consensus in the research on what this dimension should be.
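The collision between anger and fear can be made concrete with sign-only coordinates. This sketch places emotions using only the signs given in the text (negative valence for unpleasant emotions, low arousal for sadness, high arousal for anger and fear); the values are illustrative, not taken from any annotated database, and `VA` and `quadrant()` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VA:
    valence: float  # < 0 unpleasant, >= 0 pleasant
    arousal: float  # < 0 low activation, >= 0 high activation


def quadrant(point: VA) -> str:
    """Describe which valence-arousal quadrant a point falls in (signs only)."""
    valence = "positive" if point.valence >= 0 else "negative"
    arousal = "high" if point.arousal >= 0 else "low"
    return f"{valence} valence / {arousal} arousal"


# Illustrative sign-only placements (hypothetical values, not dataset annotations).
anger = VA(valence=-1.0, arousal=1.0)
fear = VA(valence=-1.0, arousal=1.0)
sadness = VA(valence=-1.0, arousal=-1.0)

print(quadrant(anger))                    # negative valence / high arousal
print(quadrant(sadness))                  # negative valence / low arousal
print(quadrant(anger) == quadrant(fear))  # True: the two dimensions cannot tell them apart
```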

- According to Russell and Mehrabian (1977), it corresponds to dominance (control). An angry individual feels in control of the situation (positive dominance), while a person overwhelmed by sadness feels the situation slipping away (negative dominance). According to the authors, the resulting PAD (Pleasure, Arousal, Dominance) model is sufficient to describe all emotions.

  The Pleasure-Arousal-Dominance model (Source: Buechel and Hahn, 2016)

  The Pleasure-Arousal-Dominance model, French version (based on Karg et al., 2013)

- According to Schlosberg (1954), it should be the attention-rejection dimension. Rejection is related to a strong attempt to exclude the external object, while attention is the active opposite of rejection.
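Adding dominance as a third axis separates anger from fear. In this sketch, anger's positive dominance follows the PAD description above, while fear's negative dominance is an assumption (fear is commonly associated with a lack of control); `PAD`, `sign()` and `octant()` are hypothetical names and the coordinates are sign-only illustrations.

```python
from typing import NamedTuple


class PAD(NamedTuple):
    pleasure: float   # valence
    arousal: float
    dominance: float  # sense of control over the situation


def sign(x: float) -> str:
    return "+" if x >= 0 else "-"


def octant(point: PAD) -> str:
    """Return the PAD sign pattern of a point, e.g. '-P +A +D'."""
    return f"{sign(point.pleasure)}P {sign(point.arousal)}A {sign(point.dominance)}D"


# Sign-only placements: anger's +D follows the text, fear's -D is an assumption.
anger = PAD(pleasure=-1.0, arousal=1.0, dominance=1.0)
fear = PAD(pleasure=-1.0, arousal=1.0, dominance=-1.0)

print(octant(anger))  # -P +A +D
print(octant(fear))   # -P +A -D
```

Anger and fear share the same pleasure and arousal signs; only the dominance axis puts them in different octants.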

In addition to a finer temporal resolution, the continuous approach can represent a wide range of emotional states and track how these states vary over time (Gunes et al., 2011). These advantages have led to a growing interest in the continuous approach in affective computing.

🔗 Anchor Links:

- Description of the Databases: General information of the databases that have chosen discrete emotions

- Databases and Emotional Ambiguity: Position of the databases with respect to the representation of emotional ambiguity

🚩 Databases marked with * offer both discrete and continuous representations of emotions.

| Name | Year | Language | Modalities | Classes | Number of sentences | Description |
|---|---|---|---|---|---|---|
| CMU-MOSEAS (paper) | 2020 | French, Spanish, Portuguese, German | Vocal, Visual, Textual | Happiness, Anger, Sadness, Fear, Disgust, Surprise | 10000 per language | Monologue videos from YouTube. High diversity of topics covered by a large number of speakers. Annotations of sentiment, emotions, subjectivity and 12 personality traits. Each emotion is annotated on a [0,3] Likert scale for its degree of presence. |
| CMU-MOSEI (paper) | 2018 | English | Vocal, Visual, Textual | Happiness, Anger, Sadness, Fear, Disgust, Surprise | 23453 | Monologue videos from YouTube. High diversity of topics covered by a large number of speakers. Annotations of emotions and sentiment. Each emotion is annotated on a [0,3] Likert scale for its degree of presence. |
| MELD (paper) | 2018 | English | Vocal, Visual, Textual | Happiness, Anger, Sadness, Fear, Disgust, Surprise, Neutral | 13708 | Clips from the TV series Friends. One of the largest databases with conversations involving more than two people. |
| OMG-Emotions* (paper) | 2018 | English | Vocal, Visual, Textual | Happiness, Anger, Sadness, Fear, Disgust, Surprise, Neutral | 2400 | Monologue videos from YouTube. Uses gradual annotations with a focus on contextual emotion expressions. |
| RAVDESS (paper) | 2018 | English | Vocal, Visual | Speech: Calm, Happy, Sad, Angry, Fearful, Surprise, Disgust, Neutral; Song: Calm, Happy, Sad, Angry, Fearful, Neutral | For each modality (vocal only, visual only, audio-visual): 1440 for speech, 1012 for song | Isolated sentences uttered by professional actors in a studio. Designed for emotion recognition in speech and song. |
| GEMEP (paper full set, paper core set) | 2010 | French | Vocal, Visual | Admiration, Amusement, Tenderness, Anger, Disgust, Despair, Pride, Shame, Anxiety, Interest, Irritation, Joy, Contempt, Fear, Pleasure, Relief, Surprise, Sadness | 1260 (full set) / 154 (core set) portrayals | Professional actors playing out emotional scenarios. Emotions are chosen so that all combinations of valence and arousal are represented (positive/negative valence, high/low arousal). |
| IEMOCAP* (paper) | 2008 | English | Vocal, Visual, Textual, Markers on face, head and hands | Happiness, Anger, Sadness, Neutral, Frustration | 10039 | Emotions are played out by professional actors. Widely used in affective computing research. |
| eNTERFACE'05 (paper) | 2006 | English | Vocal, Visual | Happiness, Anger, Sadness, Fear, Disgust, Surprise, Neutral | 1166 | Isolated sentences uttered by naive subjects of 14 nationalities. Mood induction by listening to short stories. Black background. |
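For CMU-MOSEI and CMU-MOSEAS, each emotion receives a [0,3] presence rating, so a sentence can carry several emotions at once. One possible way to turn several annotators' ratings into a multi-label target is sketched below; averaging the annotators and keeping every emotion whose mean is above 0 are assumptions of this sketch, not necessarily the procedure used by the database authors. `presence_labels()` and the ratings are hypothetical.

```python
from statistics import mean
from typing import Dict, List

EMOTIONS = ["happiness", "anger", "sadness", "fear", "disgust", "surprise"]


def presence_labels(ratings: List[Dict[str, int]], threshold: float = 0.0) -> Dict[str, float]:
    """Average each emotion's [0,3] presence rating over annotators and keep
    every emotion whose mean rating is above `threshold`.

    Emotions an annotator did not rate are treated as 0 (absent).
    """
    scores = {e: mean(r.get(e, 0) for r in ratings) for e in EMOTIONS}
    return {e: s for e, s in scores.items() if s > threshold}


# Three hypothetical annotators rating the same sentence.
ratings = [
    {"happiness": 2, "surprise": 1},
    {"happiness": 1},
    {"happiness": 2, "surprise": 1},
]
labels = presence_labels(ratings)
print(list(labels))  # ['happiness', 'surprise']
```

Keeping the mean scores, rather than only the label names, preserves how strongly each emotion was perceived.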

| Name | Year | Final annotation | Number of annotations per sentence | Aggregation of annotations |
|---|---|---|---|---|
| CMU-MOSEAS | 2020 | Many emotions possible per sentence | 3 | - |
| CMU-MOSEI | 2018 | Many emotions possible per sentence | 3 | - |
| MELD | 2018 | One emotion per sentence | 3 | Majority vote |
| OMG-Emotions* | 2018 | One emotion per sentence | 5 | Majority vote |
| RAVDESS | 2018 | One emotion per sentence with 2 intensities (normal, strong) | 10 | - |
| GEMEP | 2010 | One or two emotion(s) per portrayal with 4 intensities (full set) / One emotion per portrayal with 5 intensities (core set) | 23 (audio-video), 23 (audio only), 25 (video only) | - |
| IEMOCAP* | 2008 | One emotion per sentence | 3 | Majority vote |
| eNTERFACE'05 | 2006 | One emotion per sentence | No annotation | - |
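Majority voting reduces several annotations to one label and discards the disagreement between annotators, which is precisely where ambiguity shows up. A minimal sketch contrasting the two, with hypothetical function names and annotations; returning `None` on a tie is a choice of this sketch, since each database handles ties in its own way.

```python
from collections import Counter
from typing import Dict, List, Optional


def majority_vote(labels: List[str]) -> Optional[str]:
    """Return the most frequent label, or None when the top labels are tied.

    Returning None on ties is a choice of this sketch; databases handle ties differently.
    """
    ranked = Counter(labels).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0]


def vote_distribution(labels: List[str]) -> Dict[str, float]:
    """Share of annotators per label: keeps the disagreement that a vote discards."""
    return {label: count / len(labels) for label, count in Counter(labels).items()}


votes = ["anger", "anger", "frustration"]  # hypothetical annotations of one sentence
print(majority_vote(votes))  # anger
print({k: round(v, 2) for k, v in vote_distribution(votes).items()})  # {'anger': 0.67, 'frustration': 0.33}
print(majority_vote(["joy", "sadness"]))  # None
```

The vote distribution can serve as a soft label, whereas the majority vote keeps only "anger" and hides that one annotator in three perceived frustration.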

🔗 Anchor Links:

- Description of the Databases: General information of the databases that have chosen continuous emotions

- Databases and Emotional Ambiguity: Position of the databases with respect to the representation of emotional ambiguity

🚩 Databases marked with * offer both discrete and continuous representations of emotions.

| Name | Year | Language | Modalities | Dimensions | Number of sentences | Description |
|---|---|---|---|---|---|---|
| MuSe-CaR (paper) | 2021 | English | Vocal, Visual, Textual | Valence, Arousal, Trustworthiness | 28295 | Car reviews from YouTube. In-the-wild characteristics (e.g. reviewer visibility, ambient noise, domain-specific terms). Designed for sentiment recognition, emotion-target engagement and trustworthiness detection. |
| SEWA (paper) | 2019 | English, German, Hungarian, Greek, Serbian, Chinese | Vocal, Visual, Textual | Valence, Arousal, Liking | 5382 | Ordinary people from the same culture discuss advertisements via video conference. Created for emotion recognition but also for human behaviour analysis, including cultural studies. |
| OMG-Emotions* (paper) | 2018 | English | Vocal, Visual, Textual | Valence, Arousal | 2400 | Monologue videos from YouTube. Uses gradual annotations with a focus on contextual emotion expressions. |
| RECOLA (paper) | 2013 | French | Vocal, Visual, ECG (electrocardiogram), EDA (electrodermal activity) | Valence, Arousal | 3.8 hours | Online dyadic interactions in which participants collaborate to solve a survival task. A mood induction procedure is used to elicit emotions. |
| SEMAINE (paper) | 2012 | English | Vocal, Visual, Textual | Valence, Arousal, Expectation, Power, Intensity | 190 videos | Volunteers interact with an artificial character that has been assigned a personality trait (angry, happy, gloomy, sensible). |
| IEMOCAP* (paper) | 2008 | English | Vocal, Visual, Textual, Markers on face, head and hands | Valence, Arousal, Dominance | 10039 | Emotions are played out by professional actors. Widely used in affective computing research. |
| SAL (paper) | 2008 | English | Vocal, Visual | Valence, Arousal | 1692 turns | Natural conversations between humans and SAL (Sensitive Artificial Listener) characters with different emotional personalities (angry, happy, gloomy, sensible). |
| VAM (paper) | 2008 | German | Vocal, Visual | Valence, Arousal, Dominance | 1018 | Videos from a German TV talk show: spontaneous emotions from unscripted discussions. Audio and face annotated separately. |

| Name | Year | Final annotation | Number of annotations per sentence | Aggregation of annotations |
|---|---|---|---|---|
| MuSe-CaR | 2021 | Point in the emotional space | 5 | Evaluator Weighted Estimator |
| SEWA | 2019 | Point in the emotional space | At least 3 | Canonical Time Warping |
| OMG-Emotions* | 2018 | Point in the emotional space | 5 | Evaluator Weighted Estimator |
| RECOLA | 2013 | Point in the emotional space | 6 | Mean filtering |
| SEMAINE | 2012 | Point in the emotional space | 3–8 | - |
| IEMOCAP* | 2008 | Single element from the SAM (Self-Assessment Manikin) scale | 2 | Z-normalisation |
| SAL | 2008 | Point in the emotional space | 4 | Z-normalisation |
| VAM | 2008 | Single element from the SAM (Self-Assessment Manikin) scale | 6–17 (audio); 8–34 (face) | - |
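The Evaluator Weighted Estimator (EWE) listed for MuSe-CaR and OMG-Emotions is usually described as a weighted average in which each annotator is weighted by the correlation between their ratings and the mean rating of all annotators. The sketch below follows that description; clipping negative correlations to 0 is an assumption, and published variants differ (for instance, correlating each annotator with the mean of the others). Z-normalisation is shown as a per-annotator standardisation; exactly how IEMOCAP and SAL applied it is not covered here. All function names and traces are hypothetical.

```python
from statistics import mean, pstdev
from typing import List

Trace = List[float]  # one annotator's ratings, one value per frame or sentence


def z_normalise(trace: Trace) -> Trace:
    """Rescale one annotator's trace to zero mean and unit variance.

    Removes individual offsets and scale habits before traces are combined.
    Assumes the trace is not constant (pstdev > 0).
    """
    mu, sigma = mean(trace), pstdev(trace)
    return [(x - mu) / sigma for x in trace]


def pearson(x: Trace, y: Trace) -> float:
    """Pearson correlation coefficient between two equally long traces."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def evaluator_weighted_estimator(traces: List[Trace]) -> Trace:
    """Weighted mean of annotators, weighting each by its correlation with the
    plain mean of all annotators. Negative weights are clipped to 0 (assumption)."""
    n = len(traces[0])
    consensus = [mean(t[i] for t in traces) for i in range(n)]
    weights = [max(pearson(t, consensus), 0.0) for t in traces]
    total = sum(weights)
    return [sum(w * t[i] for w, t in zip(weights, traces)) / total for i in range(n)]


# Three hypothetical valence traces over four frames; the third disagrees with the others.
traces = [
    [0.1, 0.3, 0.5, 0.2],
    [0.2, 0.4, 0.6, 0.3],
    [0.5, 0.1, 0.0, 0.4],
]
fused = evaluator_weighted_estimator(traces)
print([round(v, 2) for v in fused])  # [0.15, 0.35, 0.55, 0.25]
```

Here the third annotator is negatively correlated with the consensus and receives weight 0, so the fused trace is the average of the first two. A plain mean would instead blend in the dissenting trace, and neither output keeps an explicit trace of the disagreement.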

Buechel, S. and U. Hahn (2016). Emotion Analysis as a Regression Problem: Dimensional Models and Their Implications on Emotion Representation and Metrical Evaluation. In ECAI 2016, pp. 1114–1122. IOS Press. https://doi.org/10.3233/978-1-61499-672-9-1114

Ekman, P. (1992). An Argument for Basic Emotions. Cognition & Emotion 6(3-4), 169–200. https://doi.org/10.1080/02699939208411068

Gunes, H., B. Schuller, M. Pantic, and R. Cowie (2011). Emotion representation, analysis and synthesis in continuous space: A survey. In 2011 IEEE International Conference on Automatic Face & Gesture Recognition (FG), pp. 827–834. IEEE. https://doi.org/10.1109/FG.2011.5771357

Karg, M., A.-A. Samadani, R. Gorbet, K. Kühnlenz, J. Hoey, and D. Kulić (2013). Body movements for affective expression: A survey of automatic recognition and generation. IEEE Transactions on Affective Computing 4(4), 341–359.

Nugier, A. (2009). Histoire et grands courants de recherche sur les émotions. Revue électronique de Psychologie Sociale 4(4), 8–14.

Plutchik, R. (2001). The Nature of Emotions: Human emotions have deep evolutionary roots, a fact that may explain their complexity and provide tools for clinical practice. American Scientist 89(4), 344–350. https://www.jstor.org/stable/27857503

Russell, J. A. and A. Mehrabian (1977). Evidence for a Three-Factor Theory of Emotions. Journal of Research in Personality 11(3), 273–294. https://doi.org/10.1016/0092-6566(77)90037-X

Schlosberg, H. (1954). Three dimensions of emotion. Psychological Review 61(2), 81–88. https://doi.org/10.1037/h0054570