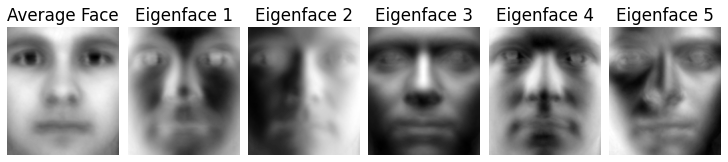

Principal component analysis (PCA) is a linear dimensionality reduction technique. Autoencoders generalize the idea to non-linear transformations. I have compared how well the two approaches generate features for facial recognition tasks.
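To make the dimensionality reduction idea concrete, here is a minimal NumPy sketch of PCA via the singular value decomposition. It is not taken from the notebooks: the function names (`pca_fit`, `pca_transform`, `pca_reconstruct`) and the synthetic data are assumptions chosen for illustration, and the random matrix only stands in for flattened face images.

```python
import numpy as np


def pca_fit(X, n_components):
    """Return the sample mean and the top principal directions of X (rows = samples)."""
    mean = X.mean(axis=0)
    # SVD of the centered data; the rows of Vt are the principal directions,
    # ordered by decreasing singular value
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]


def pca_transform(X, mean, components):
    """Project samples onto the principal directions (the low-dimensional features)."""
    return (X - mean) @ components.T


def pca_reconstruct(Z, mean, components):
    """Map low-dimensional features back to the original space."""
    return Z @ components + mean


rng = np.random.default_rng(0)
# Synthetic stand-in for flattened images (an assumption, not the Yale data):
# 100 samples with 64 "pixels", generated from 5 latent factors plus small noise
latent = rng.normal(size=(100, 5))
mixing = rng.normal(size=(5, 64))
X = latent @ mixing + 0.01 * rng.normal(size=(100, 64))

mean, components = pca_fit(X, n_components=5)
Z = pca_transform(X, mean, components)
X_hat = pca_reconstruct(Z, mean, components)

# Fraction of the centered data's norm that is lost by keeping 5 components
rel_error = np.linalg.norm(X - X_hat) / np.linalg.norm(X - mean)
print(Z.shape, rel_error)
```

Because the synthetic data has only five underlying factors, five components reconstruct it almost perfectly. An autoencoder replaces the linear `pca_transform` and `pca_reconstruct` maps with learned non-linear encoder and decoder networks.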

The interactive Jupyter notebooks explore the underlying structure of the data with PCA and Facebook's DeepFace model and perform facial recognition on the Extended Yale Dataset B.

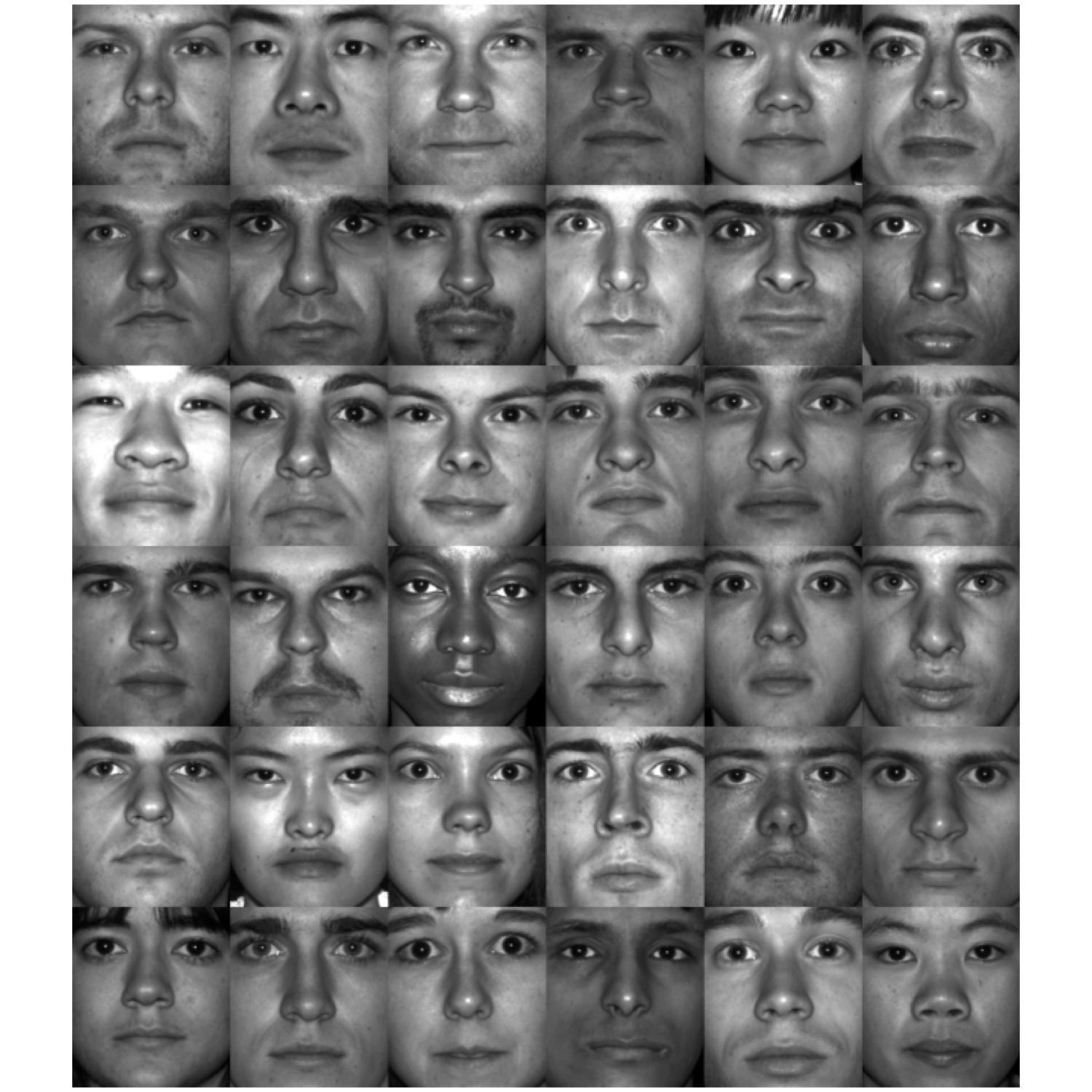

The experiments are performed on the Extended Yale Dataset B. A preprocessed version is already included in the repository.

To get the most insight into the algorithms (especially PCA), view the notebooks in the following order:

1. yalefaces.ipynb: Get an overview of the dataset's distribution

2. eigenfaces.ipynb: Explore how PCA decomposes face images into eigenfaces and understand their intuitive meaning

3. PCA.ipynb: Perform facial recognition with PCA-generated features

4. Autoencoder.ipynb: Perform facial recognition with autoencoder-generated features
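As a rough illustration of what recognition with PCA-generated features can look like, the sketch below computes eigenfaces from a training set and classifies test samples with a nearest-neighbor rule in the reduced space. This is an assumption-laden toy, not the notebooks' code: the data is synthetic (random "prototype" vectors plus noise instead of Yale images), and the nearest-neighbor classifier, the split, and all variable names are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic stand-in for face images (assumption): 4 identities, 10 samples each,
# every sample is the identity's prototype vector plus pixel noise
n_ids, per_id, n_pixels = 4, 10, 256
prototypes = rng.normal(size=(n_ids, n_pixels))
labels = np.repeat(np.arange(n_ids), per_id)
images = prototypes[labels] + 0.3 * rng.normal(size=(n_ids * per_id, n_pixels))

# Simple split: even-indexed samples for training, odd-indexed for testing
train_idx = np.arange(0, len(labels), 2)
test_idx = np.arange(1, len(labels), 2)

# Eigenfaces: principal directions of the mean-centered training images
mean_face = images[train_idx].mean(axis=0)
_, _, Vt = np.linalg.svd(images[train_idx] - mean_face, full_matrices=False)
eigenfaces = Vt[:8]  # keep the 8 leading components


def features(x):
    """Project images onto the eigenfaces to obtain low-dimensional features."""
    return (x - mean_face) @ eigenfaces.T


train_feats = features(images[train_idx])
test_feats = features(images[test_idx])

# Nearest-neighbor classification: each test sample takes the label of the
# closest training sample in feature space
dists = np.linalg.norm(test_feats[:, None, :] - train_feats[None, :, :], axis=2)
predicted = labels[train_idx][dists.argmin(axis=1)]
accuracy = (predicted == labels[test_idx]).mean()
print(f"accuracy: {accuracy:.2f}")
```

The same pipeline applies to autoencoder features: only the `features` function changes, from a linear projection to the output of a trained encoder.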

To run the notebooks, you will need the following packages in your Python environment:

- OpenCV

- TensorFlow

- SciPy
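A quick way to check the environment before opening the notebooks is sketched below. The helper `missing_packages` is hypothetical (it is not part of this repository), and the import names `cv2`, `tensorflow`, and `scipy` are the usual module names for these packages, stated here as an assumption about your installation.

```python
import importlib.util

# Display name -> import name (assumed module names for the packages listed above)
REQUIRED = {"OpenCV": "cv2", "TensorFlow": "tensorflow", "SciPy": "scipy"}


def missing_packages(required=REQUIRED):
    """Return the display names of packages whose modules cannot be found."""
    return [
        name
        for name, module in required.items()
        if importlib.util.find_spec(module) is None
    ]


missing = missing_packages()
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All required packages are available.")
```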

The accompanying report is automatically built in the CI pipeline using GitHub Actions.

This is my final project for the lab course Python for Engineering Data Analysis - from Machine Learning to Visualization offered by the Associate Professorship Simulation of Nanosystems for Energy Conversion at the Technical University of Munich.

I want to thank everyone responsible for this course for giving me a very hands-on introduction to Data Science and Machine Learning.