This repo contains code for experiments in the paper Multigroup Robustness by Lunjia Hu, Charlotte Peale, and Judy Hanwen Shen. This paper will appear at FORC 2024 and ICML 2024.

We include a new implementation of an empirical multiaccuracy boosting algorithm that works with any sklearn base classifier. The algorithm is implemented in models.py, and run_group_robust.py demonstrates how to use it.

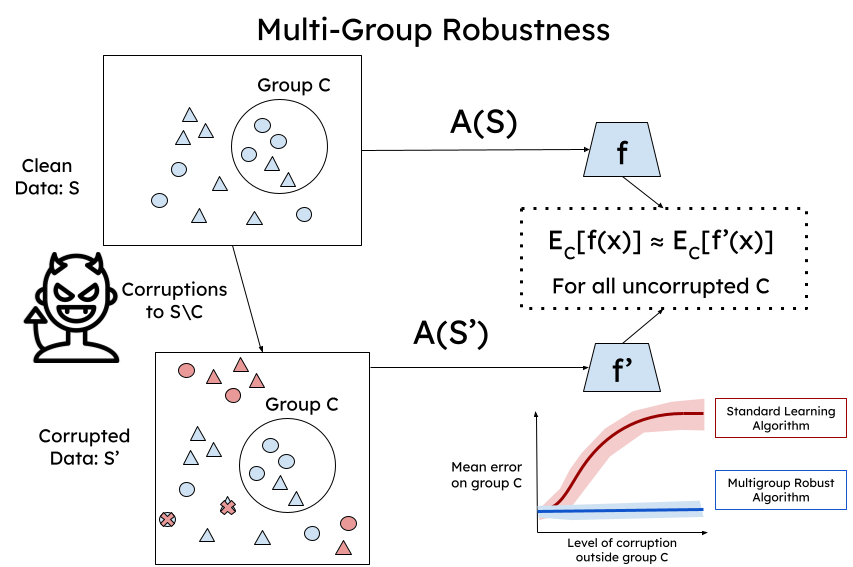
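To illustrate the multiaccuracy boosting idea mentioned above, here is a minimal sketch in plain Python. It is not the implementation in models.py: the function name `multiaccuracy_boost` and the parameters `alpha`, `step`, and `max_iters` are hypothetical, and the auditor here is simply the mean residual on each predefined group rather than an sklearn base classifier. Predictions start from a uniform value of 0.5 and are shifted on each group until no group has a mean residual larger than `alpha`.

```python
def multiaccuracy_boost(labels, groups, alpha=0.01, step=1.0, max_iters=100):
    """Shift uniform predictions until every group's mean residual is within alpha.

    labels: list of 0/1 labels.
    groups: dict mapping a group name to a list of row indices (groups may overlap).
    All names and defaults here are illustrative assumptions.
    """
    preds = [0.5] * len(labels)  # start from uniform predictions
    for _ in range(max_iters):
        updated = False
        for idx in groups.values():
            if not idx:
                continue
            # Mean residual on this group; positive means we under-predict.
            resid = sum(labels[i] - preds[i] for i in idx) / len(idx)
            if abs(resid) > alpha:
                # Move the group's predictions toward its labels, clipped to [0, 1].
                for i in idx:
                    preds[i] = min(1.0, max(0.0, preds[i] + step * resid))
                updated = True
        if not updated:
            break  # every group passed the audit in this pass
    return preds


labels = [1, 1, 0, 0, 1, 0]
groups = {"all": [0, 1, 2, 3, 4, 5], "a": [0, 1, 2]}
preds = multiaccuracy_boost(labels, groups)
```

If the loop stops before `max_iters`, the returned predictions have a mean residual of at most `alpha` on every listed group, which is the multiaccuracy condition restricted to group indicator functions.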

We consider two different attacks that reflect the two types of adversaries we consider:

- Random Label Flipping: This attack is based on the label flipping attacks in prior work (Xiao et al., 2012). In our implementation, labels of a class are randomly flipped. This corresponds to the weak adversary in our work, who only has access to the distribution but not the empirical dataset.

- Data Addition: We also use a more targeted attack that corresponds to the strong adversary in our work, who can modify the training dataset. We follow the approach of Jagielski et al. in identifying which data points from the group we are able to modify should be added in order to affect the target group.
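The random label flipping attack described above can be sketched as follows. This is an illustrative version under stated assumptions, not the repo's code: the function name `flip_labels` and the parameters `in_group`, `source_class`, `flip_prob`, and `seed` are hypothetical, and labels are assumed to be binary (0/1).

```python
import random


def flip_labels(labels, in_group, source_class=1, flip_prob=0.5, seed=0):
    """Randomly flip labels of one class inside a chosen group.

    labels: list of 0/1 labels.
    in_group: list of booleans marking rows that belong to the modified group.
    All parameter names are assumptions made for this sketch.
    """
    rng = random.Random(seed)  # local generator so the sketch is reproducible
    flipped = list(labels)     # leave the caller's list untouched
    for i, (y, g) in enumerate(zip(labels, in_group)):
        # Only rows in the group with the source class are candidates.
        if g and y == source_class and rng.random() < flip_prob:
            flipped[i] = 1 - y
    return flipped


labels = [1, 1, 0, 1, 0, 1]
in_group = [True, True, True, False, False, False]
poisoned = flip_labels(labels, in_group, flip_prob=1.0)
```

Rows outside the group, and rows in the group with the other class, are never changed, so the attack needs no knowledge of individual training examples beyond group membership and label.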

We consider the following models:

- Logistic Regression

- K-Nearest Neighbors

- XGBoost

- MLP

- Decision Trees

- Robust Linear Regression (Feng et al.)

In addition, we implement data sanitization (Paudice et al.) as an option before training each of the models, as well as an empirical multiaccuracy boosting algorithm from uniform labels.
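As a rough illustration of sanitization before training, the sketch below relabels a point when a large enough fraction of its nearest neighbors agree on a label. This k-nearest-neighbor relabeling is one common form of label sanitization; whether the `--sanitize` option in this repo works exactly this way is an assumption. The function name `sanitize_labels` and the parameters `k` and `eta` are hypothetical.

```python
import math
from collections import Counter


def sanitize_labels(X, y, k=3, eta=0.6):
    """Relabel each point to its neighbors' majority label when agreement >= eta.

    X: list of feature tuples; y: list of labels.
    k (neighbors) and eta (agreement threshold) are illustrative defaults.
    """
    cleaned = list(y)
    for i, xi in enumerate(X):
        # Brute-force k nearest neighbors, excluding the point itself.
        others = (j for j in range(len(X)) if j != i)
        neighbors = sorted(others, key=lambda j: math.dist(xi, X[j]))[:k]
        if not neighbors:
            continue
        # Votes use the original labels, not the partially cleaned ones.
        label, count = Counter(y[j] for j in neighbors).most_common(1)[0]
        if count / len(neighbors) >= eta:
            cleaned[i] = label
    return cleaned


X = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
y = [0, 0, 0, 1, 1, 1, 1, 1]  # the point (1, 1) looks mislabeled
cleaned = sanitize_labels(X, y)
```

The brute-force neighbor search is quadratic in the number of points, which is fine for a sketch but would normally be replaced by an indexed nearest-neighbor search on real datasets.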

We evaluate our algorithms on the following standard datasets:

To run the baselines and the boosting algorithm on the income dataset with label flipping applied to the white male group:

mkdir results

python run_group_robust.py --dataset=income --shift --modify='white-male' --num_runs=5

To run the baselines and boosting algorithm on the income dataset with label sanitization under the addition attack by modifying the white male group and targeting the white female group:

mkdir results/sanitize

python run_group_robust.py --dataset=income --addition --modify='white-male' --target='white-female' --num_runs=5 --sanitize