This repository contains the official implementation of our paper, Towards Reasoning in Large Language Models via Multi-Agent Peer Review Collaboration.

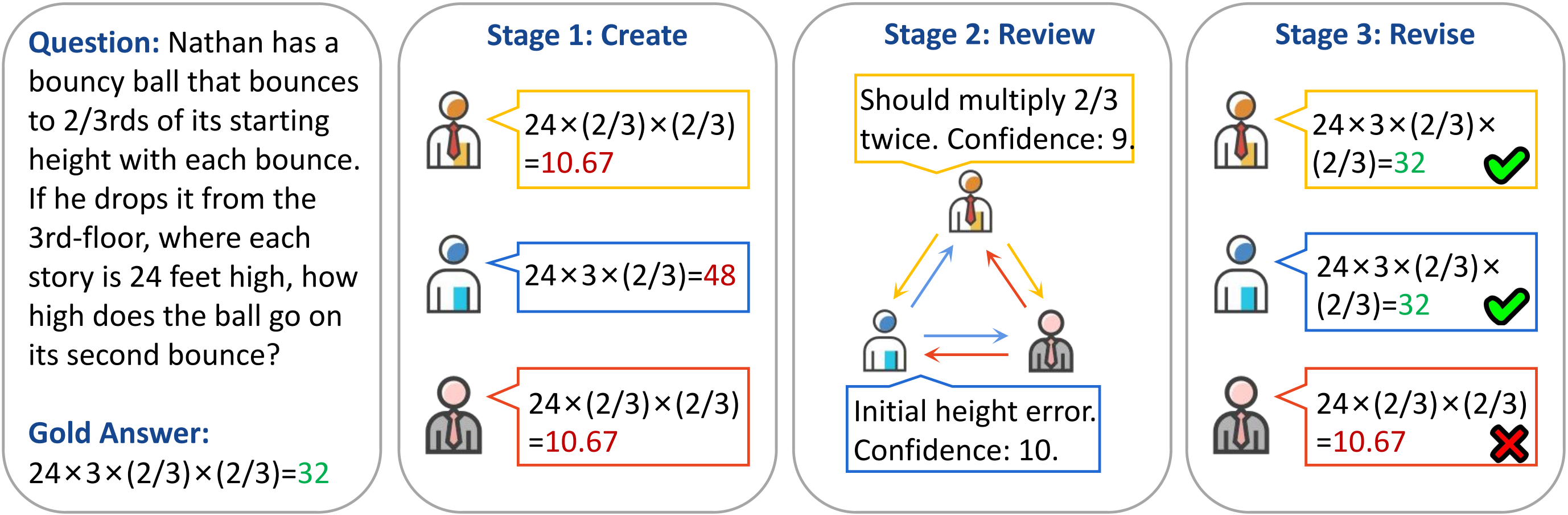

We introduce a multi-agent collaboration strategy that emulates the academic peer review process. Each agent independently constructs its own solution, provides reviews on the solutions of others, and assigns confidence levels to its reviews. Upon receiving peer reviews, agents revise their initial solutions.
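
The three stages described above (solve, review with confidence, revise) can be sketched roughly as follows. This is a minimal illustration, not the code in `peer_review.py`: the `LLM` type, the function `peer_review_round`, the stub `make_stub_agent`, and all prompt wording are assumptions made for this example. In practice each agent would wrap a real model call.

```python
from typing import Callable, Dict, List

# Hypothetical type: any function that maps a prompt string to a reply string.
LLM = Callable[[str], str]


def peer_review_round(question: str, agents: List[LLM]) -> List[str]:
    """Run one solve -> review -> revise round and return the revised solutions."""
    # Stage 1: each agent independently writes its own solution.
    solutions = [agent(f"Solve step by step: {question}") for agent in agents]

    # Stage 2: each agent reviews every other agent's solution and
    # states how confident it is in that review.
    received: Dict[int, List[str]] = {i: [] for i in range(len(agents))}
    for reviewer_idx, reviewer in enumerate(agents):
        for author_idx, solution in enumerate(solutions):
            if reviewer_idx == author_idx:
                continue  # agents do not review their own solution
            review = reviewer(
                f"Question: {question}\n"
                f"Peer solution: {solution}\n"
                "Review this solution and give your confidence in the review."
            )
            received[author_idx].append(review)

    # Stage 3: each agent revises its initial solution using the reviews it received.
    revised = []
    for idx, agent in enumerate(agents):
        feedback = "\n".join(received[idx])
        revised.append(
            agent(
                f"Question: {question}\n"
                f"Your solution: {solutions[idx]}\n"
                f"Peer reviews:\n{feedback}\n"
                "Revise your solution if the reviews warrant it."
            )
        )
    return revised


def make_stub_agent(name: str, log: List[str]) -> LLM:
    """Build a fake agent (no model call) that records each invocation in `log`."""
    def agent(prompt: str) -> str:
        log.append(name)
        return f"[{name}] reply #{log.count(name)}"
    return agent


# Dry run with three stub agents standing in for real models.
calls: List[str] = []
stub_agents = [make_stub_agent(n, calls) for n in ("A", "B", "C")]
print(peer_review_round("What is 2 + 3?", stub_agents))
```

With n agents, one round of this sketch makes n calls to solve, n(n-1) calls to review, and n calls to revise.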

Extensive experiments on three different types of reasoning tasks show that our collaboration approach delivers superior accuracy across all ten datasets compared to existing methods.

If you have any questions, please feel free to contact us by e-mail at xuzhenran.hitsz@gmail.com or open an issue in this repository.

[Nov 14, 2023] We released the code and the results of our method.

conda create -n MAPR python=3.9

conda activate MAPR

pip install -r requirements.txt

Taking the GSM8K dataset as an example, run any of the following:

python peer_review.py --task GSM8K --openai_key YOUR_KEY --openai_organization YOUR_ORG

python debate.py --task GSM8K --openai_key YOUR_KEY --openai_organization YOUR_ORG

python feedback.py --task GSM8K --openai_key YOUR_KEY --openai_organization YOUR_ORG

python self_correction.py --task GSM8K --openai_key YOUR_KEY --openai_organization YOUR_ORG

python single_agent.py --task GSM8K --openai_key YOUR_KEY --openai_organization YOUR_ORG
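
To run all five scripts in sequence, a small driver along these lines may be convenient. It is a sketch that uses only the script names and flags shown above. The helper names `build_commands` and `run_all` are hypothetical, and the sketch assumes the scripts need no other arguments and are run from the repository root.

```python
import subprocess
from typing import List

# Script names taken from the commands listed in this README.
SCRIPTS = [
    "peer_review.py",
    "debate.py",
    "feedback.py",
    "self_correction.py",
    "single_agent.py",
]


def build_commands(task: str, openai_key: str, openai_organization: str) -> List[List[str]]:
    """Return one argv list per script, using only the flags shown in this README."""
    return [
        [
            "python", script,
            "--task", task,
            "--openai_key", openai_key,
            "--openai_organization", openai_organization,
        ]
        for script in SCRIPTS
    ]


def run_all(task: str, openai_key: str, openai_organization: str) -> None:
    """Run every script in order, stopping at the first one that exits with an error."""
    for cmd in build_commands(task, openai_key, openai_organization):
        subprocess.run(cmd, check=True)
```

For example, `run_all("GSM8K", "YOUR_KEY", "YOUR_ORG")` would issue the same five commands as above, one after another.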

To evaluate the results, again taking the GSM8K dataset as an example:

python eval.py --task GSM8K --method peer_review --time_flag 1113