A selection of first-layer weight filters learned during pretraining.

The purpose of this repo is to explore the functionality of Google's recently open-sourced "software library for numerical computation using data flow graphs", TensorFlow. We use the library to train a deep autoencoder on the MNIST digit data set. For background and a similar implementation using Theano, see the tutorial at http://www.deeplearning.net/tutorial/SdA.html.

The main training code can be found in autoencoder.py along with the AutoEncoder class that creates and manages the Variables and Tensors used.
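For orientation, here is a minimal NumPy sketch of the forward pass through a stack of sigmoid encoder layers and back through a tied-weight decoder. It is not the code in autoencoder.py: the function names, the layer sizes `[784, 256, 64]`, the weight initialization, and the use of tied (transposed) decoder weights are all assumptions chosen for illustration.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def init_layers(sizes, seed=0):
    """Create one (W, b) pair per pair of consecutive layer sizes.

    `sizes` is a hypothetical shape list such as [784, 256, 64].
    """
    rng = np.random.RandomState(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        limit = np.sqrt(6.0 / (n_in + n_out))  # Glorot-style uniform range
        W = rng.uniform(-limit, limit, size=(n_in, n_out))
        b = np.zeros(n_out)
        layers.append((W, b))
    return layers


def encode(x, layers):
    """Pass a batch through every encoder layer in order."""
    h = x
    for W, b in layers:
        h = sigmoid(np.dot(h, W) + b)
    return h


def decode(h, layers):
    """Map a code back to input space with transposed (tied) weights.

    Decoder biases are omitted for brevity (an assumption of this sketch).
    """
    for W, _ in reversed(layers):
        h = sigmoid(np.dot(h, W.T))
    return h


# A batch of 5 fake "images" with 784 pixels each (MNIST is 28 x 28).
batch = np.random.RandomState(1).rand(5, 784)
layers = init_layers([784, 256, 64])
code = encode(batch, layers)
recon = decode(code, layers)
```

With these sizes, `code` has one 64-dimensional row per input image and `recon` has the same shape as `batch`. Training would adjust the weights so that `recon` approximates `batch`.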

To avoid platform issues, we strongly encourage running the example code in a Docker container. Follow the installation instructions on the Docker website, then run:

$ git clone https://github.com/cmgreen210/TensorFlowDeepAutoencoder

$ cd TensorFlowDeepAutoencoder

$ docker build -t tfdae -f cpu/Dockerfile .

$ docker run -it -p 80:6006 tfdae python run.py

Navigate to http://localhost:80 to explore TensorBoard and view the training progress.

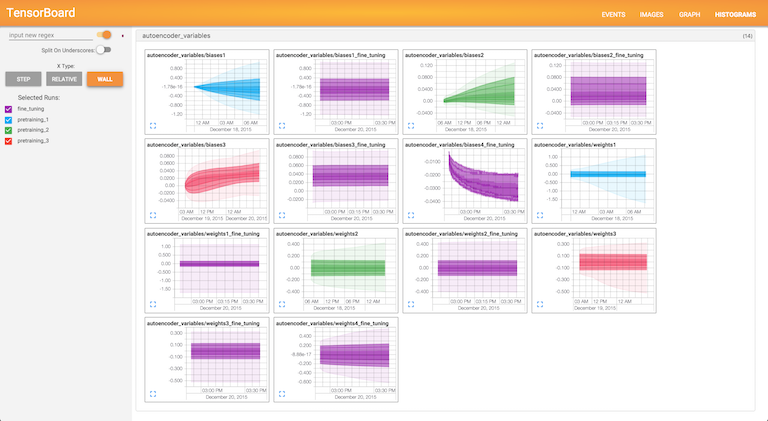

View of TensorBoard's display of weight and bias parameter progress.

To set up without Docker, Python 2.7 is expected to be installed and set as your default Python version. On Linux, run:

$ git clone https://github.com/cmgreen210/TensorFlowDeepAutoencoder

$ cd TensorFlowDeepAutoencoder

$ sudo chmod +x setup_linux

$ sudo ./setup_linux # If you want GPU version specify -g or --gpu

$ source venv/bin/activate

On Mac, run:

$ git clone https://github.com/cmgreen210/TensorFlowDeepAutoencoder

$ cd TensorFlowDeepAutoencoder

$ sudo chmod +x setup_mac

$ sudo ./setup_mac

$ source venv/bin/activate

To run the default example, execute the following command. NOTE: this will take a very long time if you are running on a CPU as opposed to a GPU.

$ python code/run.py

Navigate to http://localhost:6006 to explore TensorBoard and view training progress.

## Customizing

You can play around with the run options, including the neural net size and shape, input corruption, learning rates, etc. in [flags.py](https://github.com/cmgreen210/TensorFlowDeepAutoencoder/blob/master/code/ae/utils/flags.py).
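To give a sense of what the input corruption option controls, the sketch below shows one common form of corruption for denoising autoencoders: masking noise, where each input value is zeroed with a given probability. This mirrors the approach in the Theano tutorial linked above, but whether this repo corrupts inputs the same way is an assumption. The names `corrupt`, `corruption_level`, and `cross_entropy` are hypothetical and are not taken from flags.py.

```python
import numpy as np


def corrupt(x, corruption_level, rng):
    """Masking noise: zero each entry of x with probability corruption_level."""
    keep = rng.binomial(n=1, p=1.0 - corruption_level, size=x.shape)
    return x * keep


def cross_entropy(x, z, eps=1e-8):
    """Mean per-example cross-entropy between targets x and reconstruction z."""
    z = np.clip(z, eps, 1.0 - eps)  # avoid log(0)
    per_example = -np.sum(x * np.log(z) + (1.0 - x) * np.log(1.0 - z), axis=1)
    return np.mean(per_example)


rng = np.random.RandomState(0)
x = rng.rand(4, 784)             # stand-in for a batch of MNIST images in [0, 1)
x_noisy = corrupt(x, 0.3, rng)   # roughly 30% of the pixels are set to zero

# A denoising autoencoder is fed x_noisy but scored against the clean x.
# Here x_noisy itself stands in for a reconstruction, just to exercise the loss.
loss = cross_entropy(x, x_noisy)
```

A higher corruption level forces the network to rely more on structure shared across pixels, while a level of 0 reduces to a plain autoencoder.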