This repository supports contributions to the tools that generated the D2A dataset hosted on IBM Data Asset eXchange.

- Introduction

- Downloading the D2A Dataset and the Splits

- Sample Description and Dataset Stats

- Using the Dataset

- D2A Leaderboard

- Annotating More Projects

- D2A Paper and Citation

D2A is a differential-analysis-based approach for labeling issues reported by inter-procedural static analyzers as either more likely to be true positives or more likely to be false positives. Our goal is to generate a large labeled dataset that can be used by machine learning approaches to code understanding and vulnerability detection.

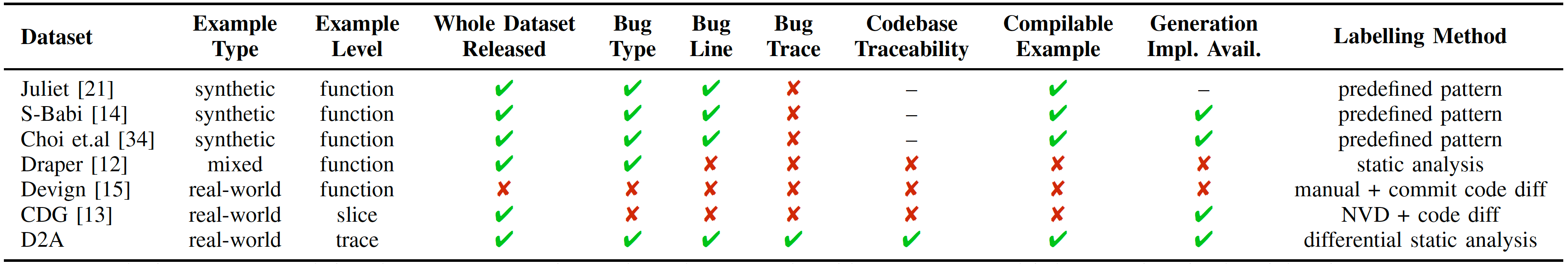

Given that programs can exhibit diverse behaviors, training machine learning models for code understanding and vulnerability detection requires large datasets. However, according to a recent survey, the lack of good real-world datasets has become a major barrier for this field. Many existing works built their own datasets based on different criteria and may release only part of them. The following table summarizes the characteristics of a few popular datasets for software vulnerability detection tasks.

Because there is no oracle, no dataset for AI-based vulnerability detection tasks is both large enough and 100% correctly labeled. Datasets generated from manual reviews generally have better-quality labels. However, because manual review is costly, they are usually not large enough for model training. On the other hand, although the quality of the D2A dataset is bounded by the capability of static analysis, D2A can produce large datasets with better labels than those labeled solely by static analysis, and it complements existing manually labeled datasets.

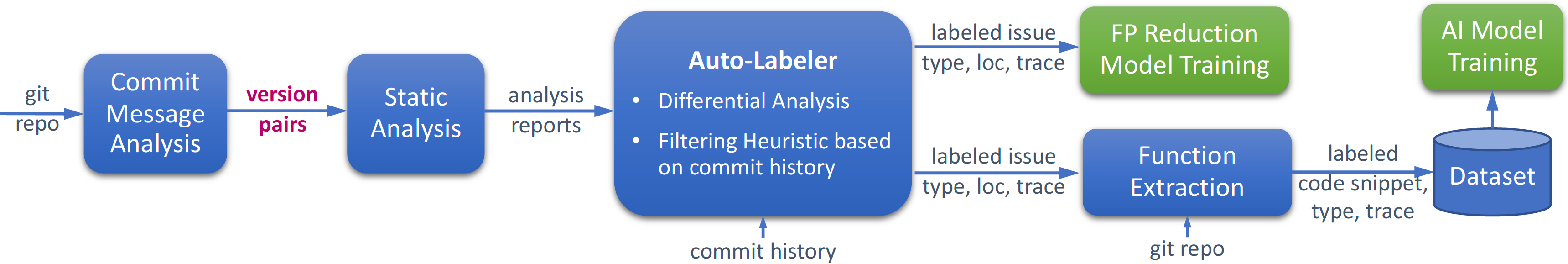

For projects with commit histories, we assume some commits are code changes that fix bugs. We run static analysis on the versions before and after such commits. If some issues detected in a before-commit version disappear in the corresponding after-commit version, they are very likely to be real bugs that got fixed by the commit. If we analyze a large number of consecutive version pairs and aggregate the results, some issues found in a before-commit version never disappear in an after-commit version. We say they are not very likely to be real bugs because they were never fixed. Then, we de-duplicate the issues found in all versions and adjust their classifications according to the commit history. Finally, we label the issues that are very likely to be real bugs as positives and the remaining ones as negatives.
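The differential labeling idea described above can be sketched as follows. This is a minimal illustration, not the repository's auto-labeler: it assumes each analysis result has already been reduced to a set of hashable issue identifiers (a hypothetical de-duplication key), and it omits the adjustment based on commit history.

```python
def label_issues(version_pairs):
    """Label de-duplicated issues from (before, after) analysis results.

    version_pairs: iterable of (before_issues, after_issues), where each
    element is a set of issue identifiers. The identifier is a hypothetical
    de-duplication key; the real pipeline defines its own.
    Returns a dict mapping issue id -> 1 (likely a real bug) or 0.
    """
    labels = {}
    for before, after in version_pairs:
        for issue in before:
            # An issue that disappears after a selected commit is treated
            # as fixed, so it is labeled positive.
            fixed = issue not in after
            # Once an issue has been seen as fixed, it stays positive.
            labels[issue] = max(labels.get(issue, 0), int(fixed))
        for issue in after:
            # Issues first seen in an after-commit version default to negative.
            labels.setdefault(issue, 0)
    return labels


pairs = [
    ({"A", "B"}, {"B"}),       # "A" disappears after the first commit
    ({"B", "C"}, {"B", "C"}),  # nothing disappears after the second commit
]
print(sorted(label_issues(pairs).items()))  # [('A', 1), ('B', 0), ('C', 0)]
```

Here `A` is labeled positive because it disappears after a commit, while `B` and `C` remain negative because they were never fixed in any analyzed pair.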

The following figure shows the overview of the D2A dataset generation pipeline.

- Commit Message Analysis (`scripts/infer_pipeline/commit_msg_analyzer`) analyzes the commit messages and identifies the commits that are more likely to refer to vulnerability fixes.

- Pairwise Static Analysis (`scripts/infer_pipeline`) runs the analyzer on the before-commit and after-commit versions for the commit hashes selected in the previous step.

- Auto-labeler (`scripts/auto_labeler`) merges the analysis results for all selected commit versions and labels each issue based on differential logic and commit history heuristics.

- Function Extractor (`scripts/dataset_generator`) extracts the bodies of the functions involved in the trace.
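To make the first two steps above concrete, here is a rough sketch of selecting candidate commits and forming before/after version pairs. The keyword pattern is a hypothetical stand-in chosen only for illustration; it is not how `commit_msg_analyzer` works. The `<hash>^` notation is Git's standard syntax for a commit's first parent.

```python
import re

# Hypothetical keyword heuristic, for illustration only.
FIX_PATTERN = re.compile(
    r"\b(fix(es|ed)?|bug|overflow|leak|vulnerab\w*|cve-\d{4}-\d+)\b",
    re.IGNORECASE,
)


def select_fix_commits(commits):
    """Return the hashes whose commit message matches FIX_PATTERN.

    commits: iterable of (commit_hash, message) tuples.
    """
    return [commit_hash for commit_hash, msg in commits if FIX_PATTERN.search(msg)]


def version_pairs(selected_hashes):
    """Pair each selected commit with its first parent (the before-commit version)."""
    return [(f"{commit_hash}^", commit_hash) for commit_hash in selected_hashes]


commits = [
    ("a1", "Fix buffer overflow in parser"),
    ("b2", "Update docs"),
    ("c3", "Add feature"),
]
selected = select_fix_commits(commits)
print(version_pairs(selected))  # [('a1^', 'a1')]
```

Each resulting pair names the two revisions that the static analyzer would be run on.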

There are two types of samples:

- Samples based on static analyzer outputs. Such samples are reported by the static analyzer, so they all have analyzer outputs, including bug traces. We extract the functions mentioned in the trace together with other information. We use `"label_source": "auto_labeler"` to denote such samples. The labels can be `0` (e.g., `auto-labeler_0.json`) or `1` (e.g., `auto-labeler_1.json`) according to the auto-labeler. Please refer to Sec. III-C in the D2A paper for details.

- Samples from the fixed versions. Such samples are not directly generated from static analysis outputs because they are not reported by the analyzer. Therefore, they do not contain static analyzer outputs. Instead, given samples with positive auto-labeler labels (i.e., `label_source == "auto_labeler" && label == 1`) found in the before-fix version, we extract the corresponding functions in the after-fix version and label them `0` (e.g., `after_fix_0.json`). We use `"label_source": "after_fix_extractor"` to denote such samples. More information can be found in Sec. III-D in the D2A paper.
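As a small illustration of how the two sample types can be told apart, the sketch below filters and tallies samples by the `label_source` and `label` fields named above. It assumes the samples have already been loaded as Python dictionaries; the `id` field and the in-memory sample list are hypothetical and only serve the example.

```python
from collections import Counter


def positive_auto_labeler(samples):
    """Keep samples where label_source == "auto_labeler" and label == 1."""
    return [
        s for s in samples
        if s.get("label_source") == "auto_labeler" and s.get("label") == 1
    ]


def tally(samples):
    """Count samples per (label_source, label) combination."""
    return Counter((s.get("label_source"), s.get("label")) for s in samples)


# Hypothetical samples; "id" is not a documented field.
samples = [
    {"id": "s1", "label_source": "auto_labeler", "label": 1},
    {"id": "s2", "label_source": "auto_labeler", "label": 0},
    {"id": "s3", "label_source": "after_fix_extractor", "label": 0},
]

print([s["id"] for s in positive_auto_labeler(samples)])  # ['s1']
print(tally(samples)[("after_fix_extractor", 0)])         # 1
```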

The D2A dataset and the global splits can be downloaded from IBM Data Asset eXchange.

The latest version: v1.0.0.

Details can be found in Sample Description and Dataset Stats.

Please refer to Dataset Usage Examples for details.

Please refer to Running the Dataset Generation Pipeline.

D2A: A Dataset Built for AI-Based Vulnerability Detection Methods Using Differential Analysis

Please cite the following paper if the D2A dataset or generation pipeline is useful for your research.

@inproceedings{D2A,
  author = {Zheng, Yunhui and Pujar, Saurabh and Lewis, Burn and Buratti, Luca and Epstein, Edward and Yang, Bo and Laredo, Jim and Morari, Alessandro and Su, Zhong},
  title = {D2A: A Dataset Built for AI-Based Vulnerability Detection Methods Using Differential Analysis},
  year = {2021},
  publisher = {Association for Computing Machinery},
  address = {New York, NY, USA},
  series = {ICSE-SEIP '21},
  booktitle = {Proceedings of the ACM/IEEE 43rd International Conference on Software Engineering: Software Engineering in Practice}
}