This repository contains all of the tools necessary to replicate the following results:

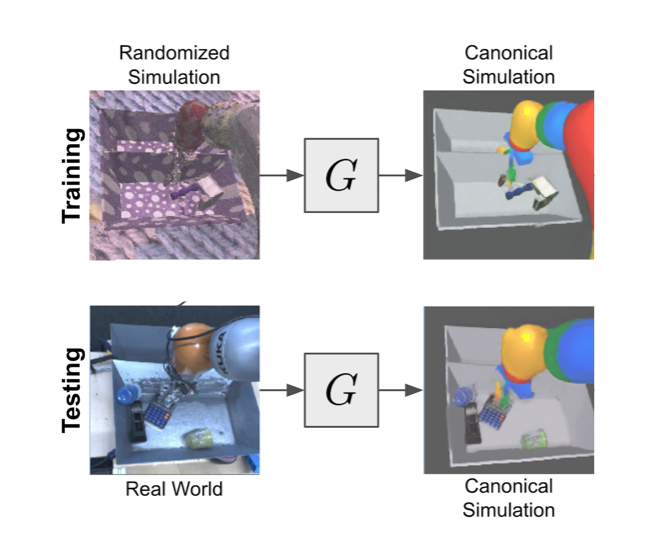

The project is inspired by James et al., 2019, "Sim-to-Real via Sim-to-Sim: Data-efficient Robotic Grasping via Randomized-to-Canonical Adaptation Networks". However, instead of training with a GAN loss, it uses a Perceptual (Feature) Loss objective. The model is of a kind usually used for image segmentation tasks. In this case, instead of classifying each pixel (i.e. predicting the segmentation mask), the model converts a domain-randomized image into a canonical (non-randomized) version.
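To illustrate what a perceptual (feature) loss measures, here is a minimal pure-Python sketch. It is not the training code from this repository. A real setup would normally compare activations of a frozen, pretrained network; the `extract_features` function below is a hypothetical stand-in that uses simple horizontal and vertical differences, and all names are assumptions made for this example.

```python
# Minimal sketch of a perceptual (feature) loss in pure Python.
# ASSUMPTION: extract_features is a hypothetical stand-in for a frozen,
# pretrained feature extractor; here it only computes image gradients.


def extract_features(image):
    """Map a 2D grayscale image (list of rows) to two gradient feature maps."""
    height, width = len(image), len(image[0])
    dx = [[image[y][x + 1] - image[y][x] for x in range(width - 1)]
          for y in range(height)]
    dy = [[image[y + 1][x] - image[y][x] for x in range(width)]
          for y in range(height - 1)]
    return [dx, dy]


def mse(a, b):
    """Mean squared error between two 2D maps of equal shape."""
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def pixel_loss(predicted, target):
    """Plain per-pixel MSE, shown for comparison."""
    return mse(predicted, target)


def perceptual_loss(predicted, target):
    """Average the MSE over feature maps instead of raw pixels."""
    pred_feats = extract_features(predicted)
    target_feats = extract_features(target)
    losses = [mse(p, t) for p, t in zip(pred_feats, target_feats)]
    return sum(losses) / len(losses)


# Toy "canonical" image: dark left half, bright right half.
canonical = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
# Same structure, uniformly brighter.
brighter = [[v + 0.5 for v in row] for row in canonical]
# Same brightness levels, but the edge sits in the wrong column.
shifted = [[0.0, 1.0, 1.0, 1.0] for _ in range(3)]

for name, image in [("brighter", brighter), ("shifted", shifted)]:
    print(name, pixel_loss(image, canonical), perceptual_loss(image, canonical))
```

In this toy case both images have the same pixel loss (0.25) against the canonical image, but the feature loss is 0 for the uniformly brighter copy and about 0.33 for the image whose edge is misplaced. That is the general idea behind a feature loss: it penalizes differences in structure rather than raw pixel values.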

I did not have a robotic arm, so the model is trained only on images of a box containing random objects.

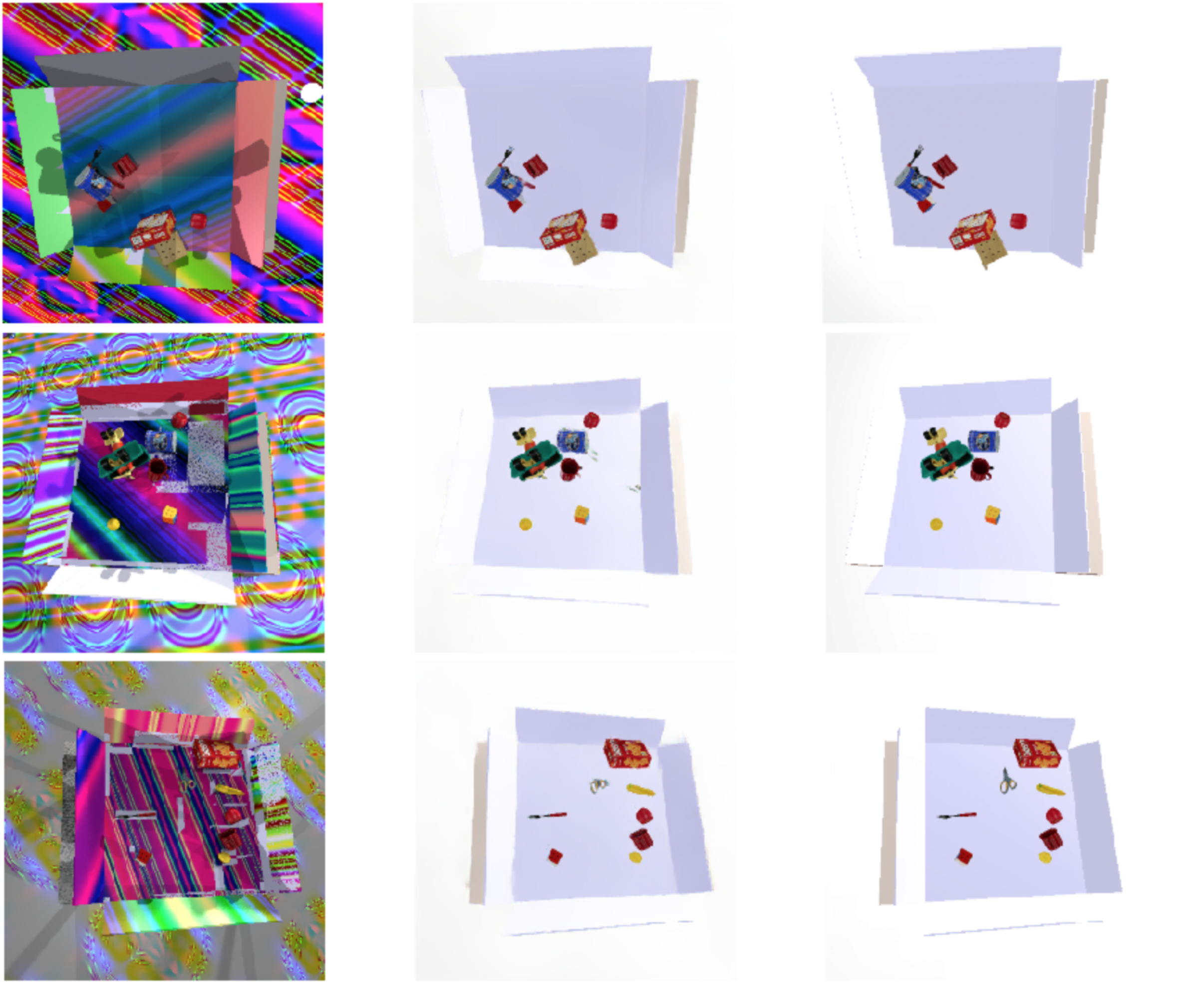

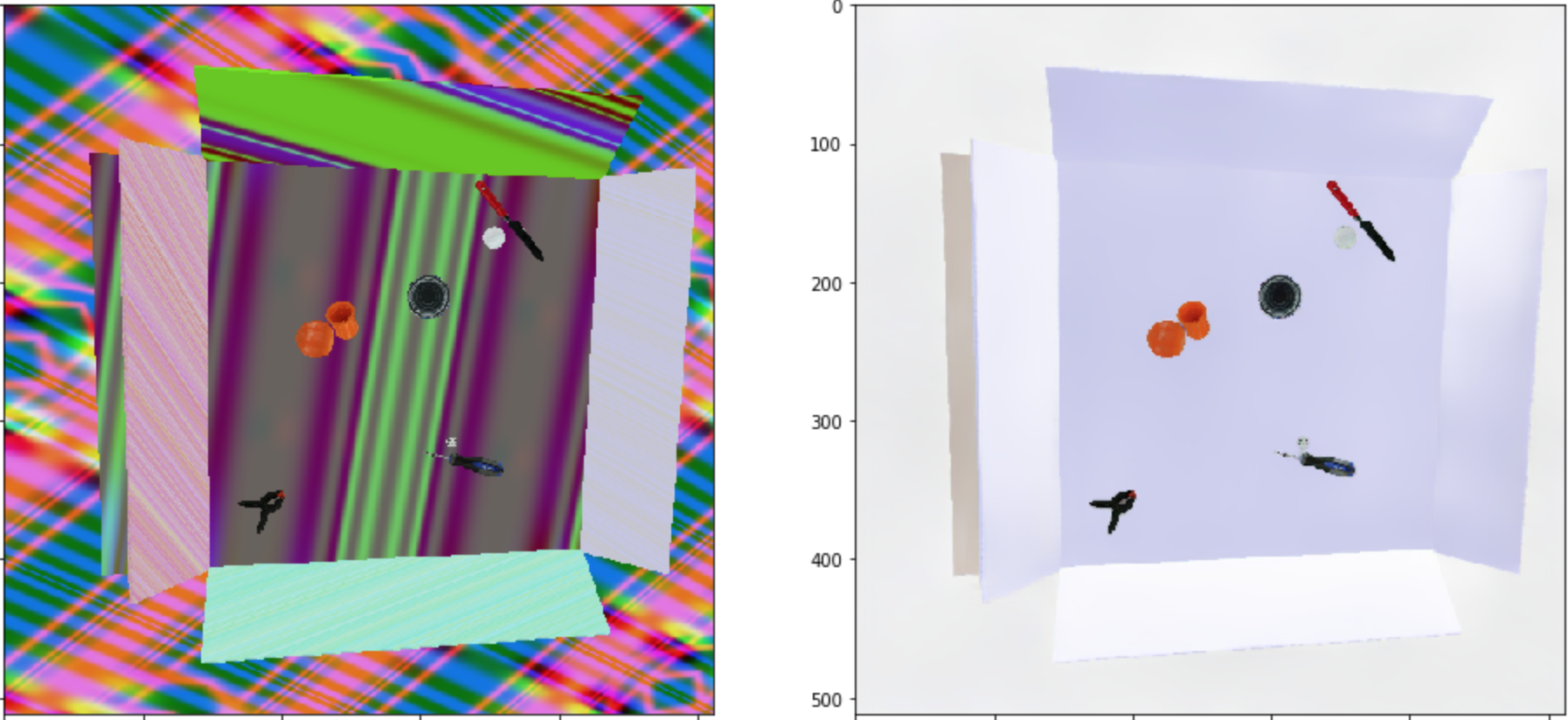

Below are a few examples (input, output, and ground truth):

Sim-to-sim (randomized simulated image to canonical):

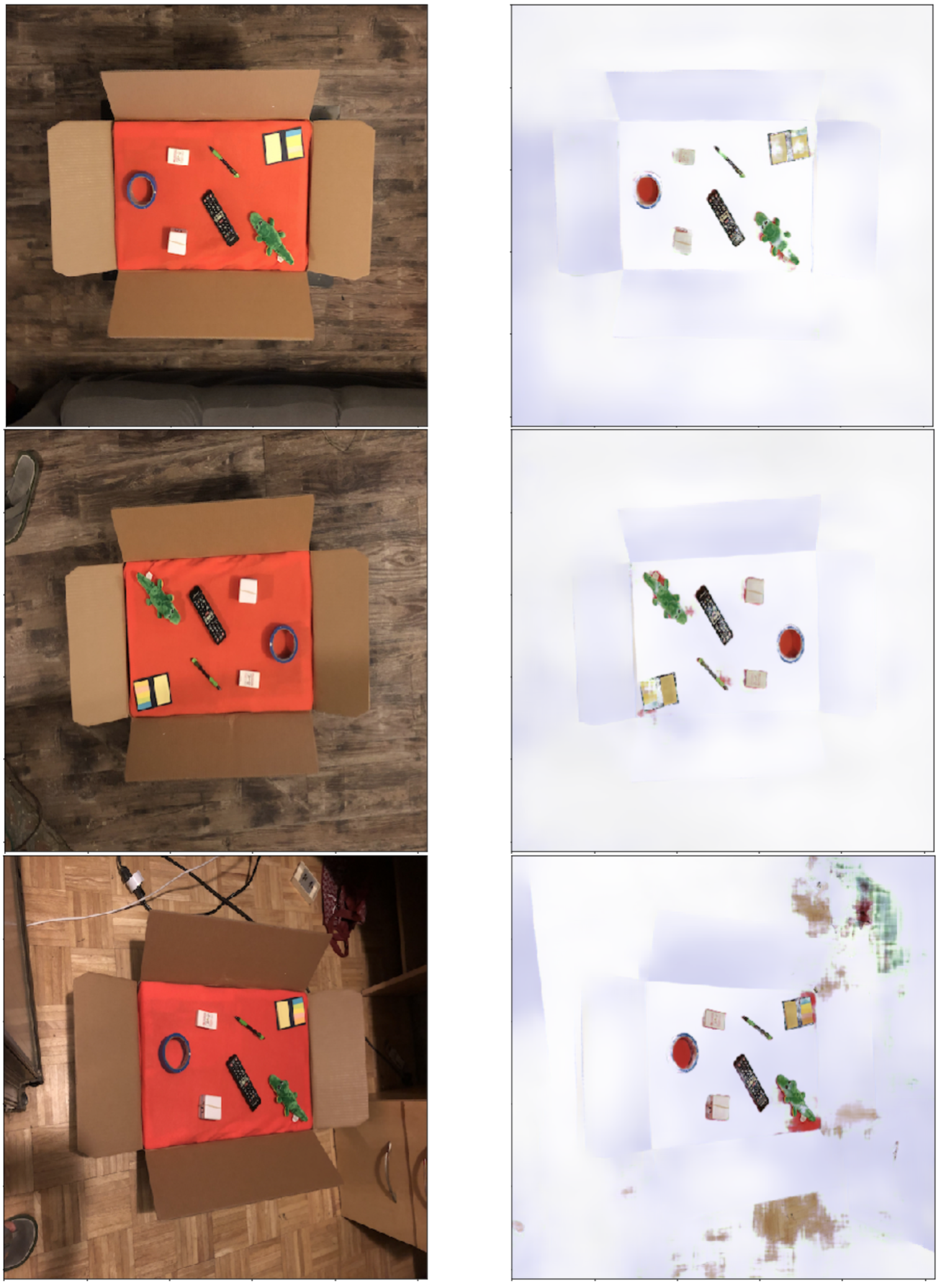

Real-to-sim (real photo to canonical):

* Note: the model has never seen these objects in the scene, hence the noise.

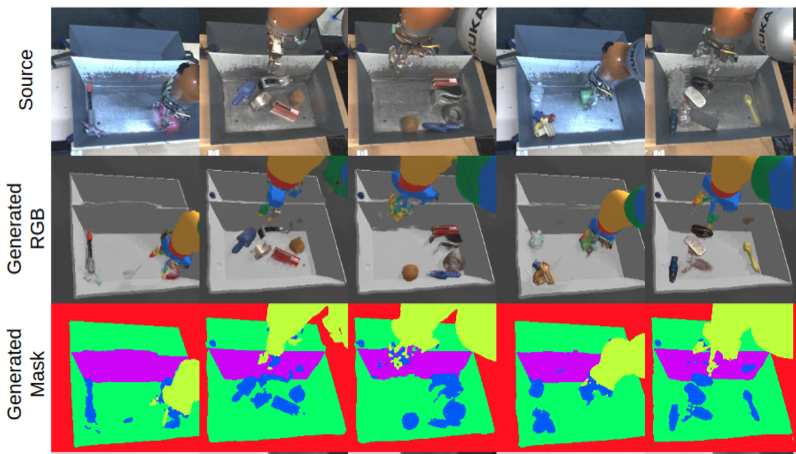

For reference, here are the results from the original paper (which also generates a mask):

- Upload requirements.txt

- Instructions for image generation

- Instructions for model training