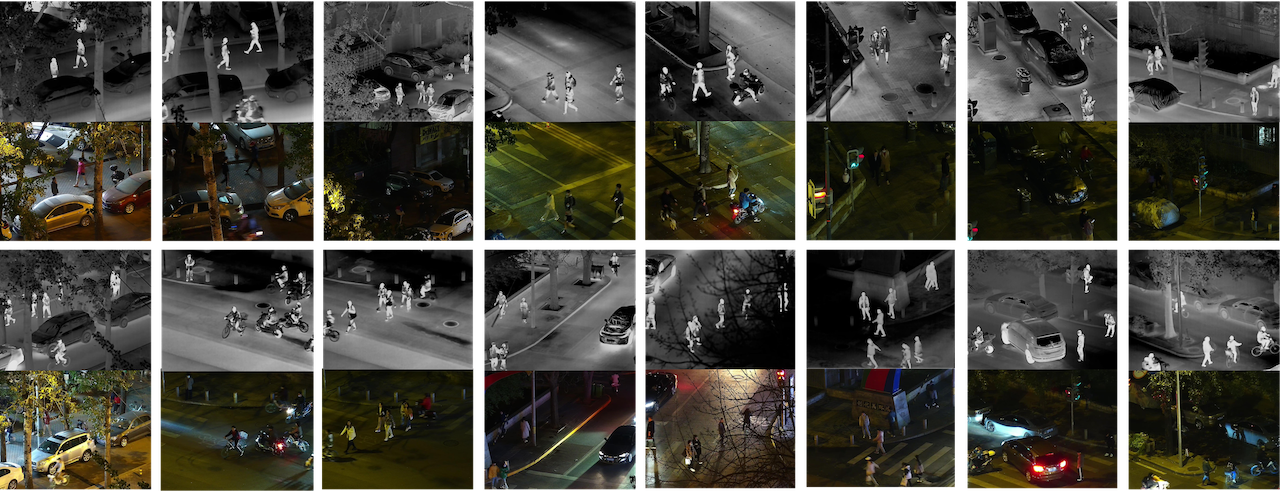

LLVIP: A Visible-infrared Paired Dataset for Low-light Vision

Project | Arxiv | Benchmarks

News

- ⚡(2021-09-01): We have released the dataset; please visit the homepage for access. (Note that we removed some low-quality images from the original dataset, so this version contains 30976 images.)

Citation

If you use this data for your research, please cite our paper LLVIP: A Visible-infrared Paired Dataset for Low-light Vision:

    @article{jia2021llvip,
      title={LLVIP: A Visible-infrared Paired Dataset for Low-light Vision},
      author={Jia, Xinyu and Zhu, Chuang and Li, Minzhen and Tang, Wenqi and Zhou, Wenli},
      journal={arXiv preprint arXiv:2108.10831},
      year={2021}
    }

Image Fusion

Baselines

Pedestrian Detection

Baselines

Yolov5

Preparation

Linux and Python>=3.6.0

- Install requirements

    git clone https://github.com/bupt-ai-cz/LLVIP.git
    cd LLVIP/yolov5
    pip install -r requirements.txt

- File structure

    yolov5
    ├── ...
    └──LLVIP
       ├── labels
       |   ├──train
       |   |   ├── 010001.txt
       |   |   ├── 010002.txt
       |   |   └── ...
       |   └──val
       |       ├── 190001.txt
       |       ├── 190002.txt
       |       └── ...
       └── images
           ├──train
           |   ├── 010001.jpg
           |   ├── 010002.jpg
           |   └── ...
           └── val
               ├── 190001.jpg
               ├── 190002.jpg
               └── ...

We provide a script named xml2txt_yolov5.py to convert xml files to txt files. Remember to modify the file paths in the script before using it.
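For readers who want to see what such a conversion involves, here is a minimal sketch. It is not the repository's xml2txt_yolov5.py. It assumes the xml files follow the Pascal VOC layout (a `size` element with `width` and `height`, and `object` elements with a `name` and a `bndbox`) and that the only class is `person`; the function name `voc_xml_to_yolo_lines` is hypothetical.

```python
import xml.etree.ElementTree as ET


def voc_xml_to_yolo_lines(xml_text, class_names=("person",)):
    """Convert one VOC-style annotation (XML text) into YOLO label lines.

    Assumption: Pascal VOC layout with absolute pixel coordinates.
    """
    root = ET.fromstring(xml_text)
    size = root.find("size")
    img_w = float(size.find("width").text)
    img_h = float(size.find("height").text)

    lines = []
    for obj in root.iter("object"):
        name = obj.find("name").text.strip()
        if name not in class_names:
            continue  # skip classes that were not requested
        box = obj.find("bndbox")
        xmin = float(box.find("xmin").text)
        ymin = float(box.find("ymin").text)
        xmax = float(box.find("xmax").text)
        ymax = float(box.find("ymax").text)

        # YOLO txt format: class x_center y_center width height,
        # with all four box values normalized by the image size.
        x_center = (xmin + xmax) / 2.0 / img_w
        y_center = (ymin + ymax) / 2.0 / img_h
        box_w = (xmax - xmin) / img_w
        box_h = (ymax - ymin) / img_h
        class_id = class_names.index(name)
        lines.append(
            f"{class_id} {x_center:.6f} {y_center:.6f} {box_w:.6f} {box_h:.6f}"
        )
    return lines
```

Each returned line corresponds to one object; writing the lines of one xml file to a txt file with the same stem (for example 010001.txt) gives the labels layout shown in the file structure.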

Train

    python train.py --img 1280 --batch 8 --epochs 200 --data LLVIP.yaml --weights yolov5l.pt --name LLVIP_export

See more training options in train.py. The pretrained model yolov5l.pt can be downloaded from here. The trained model will be saved in the ./runs/train/LLVIP_export/weights folder.

Test

    python val.py --img 1280 --weights last.pt --data LLVIP.yaml

Remember to put the trained model in the same folder as val.py.

- Click Here for the tutorial of Yolov3.

Results

We retrained and tested Yolov5l and Yolov3 on the updated dataset (30976 images).

Here, AP is the average of the AP values at IoU thresholds from 0.5 to 0.95, in steps of 0.05.
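The averaging in this definition can be sketched as follows. The function name `mean_ap_over_iou` and the input format (a dict mapping each IoU threshold to the AP measured at that threshold) are assumptions made for illustration; the per-threshold AP values themselves would come from the detector's evaluation output.

```python
# The ten IoU thresholds 0.50, 0.55, ..., 0.95 used in the definition above.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_ap_over_iou(ap_at_iou):
    """Average per-threshold AP values over IoU 0.5:0.05:0.95.

    ap_at_iou: dict mapping each threshold in IOU_THRESHOLDS to an AP value.
    """
    missing = [t for t in IOU_THRESHOLDS if t not in ap_at_iou]
    if missing:
        raise KeyError(f"missing AP for IoU thresholds: {missing}")
    return sum(ap_at_iou[t] for t in IOU_THRESHOLDS) / len(IOU_THRESHOLDS)
```

Because AP typically falls as the IoU threshold rises, this average is lower than the AP at IoU 0.5 alone.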

The figure above shows how AP changes with the IoU threshold. When the IoU threshold is higher than 0.7, the AP value drops rapidly. In addition, the infrared image highlights pedestrians and yields better detection results than the visible image. This demonstrates the value of infrared images and also indicates that visible-image pedestrian detection algorithms do not perform well enough under low-light conditions.

We also calculated the log-average miss rate from the test results and drew the miss rate-FPPI curve.
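For reference, the log-average miss rate is commonly defined, following the Caltech pedestrian benchmark convention, as the geometric mean of the miss rate sampled at nine FPPI values evenly spaced in log space between 0.01 and 1. The sketch below assumes that convention; the exact settings used for the results here are not stated in this document, and the function name and input format are hypothetical.

```python
import bisect
import math


def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of miss rates sampled at log-spaced FPPI values.

    fppi: false positives per image, sorted in ascending order.
    miss_rate: miss rate at each corresponding FPPI value.
    Assumption: Caltech-style sampling over FPPI in [1e-2, 1e0].
    """
    if len(fppi) != len(miss_rate):
        raise ValueError("fppi and miss_rate must have the same length")

    # Reference FPPI values: 10^-2, 10^-1.75, ..., 10^0 for num_points=9.
    refs = [10 ** (-2.0 + 2.0 * i / (num_points - 1)) for i in range(num_points)]

    log_sum = 0.0
    for ref in refs:
        # Index of the last curve point whose FPPI does not exceed ref.
        idx = bisect.bisect_right(fppi, ref) - 1
        # If the curve starts above ref, count it as missing everything there.
        sampled = miss_rate[idx] if idx >= 0 else 1.0
        log_sum += math.log(max(sampled, 1e-10))  # guard against log(0)
    return math.exp(log_sum / num_points)
```

Lower values are better; a detector whose curve never reaches low FPPI values is penalized because the missing samples count as a miss rate of 1.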

Image-to-Image Translation

Baseline

pix2pixGAN

Preparation

- Install requirements

    cd pix2pixGAN
    pip install -r requirements.txt

- Prepare dataset

- File structure

    pix2pixGAN
    ├── ...
    └──datasets
       ├── ...
       └──LLVIP
          ├── train
          |   ├── 010001.jpg
          |   ├── 010002.jpg
          |   ├── 010003.jpg
          |   └── ...
          └── test
              ├── 190001.jpg
              ├── 190002.jpg
              ├── 190003.jpg
              └── ...

Train

    python train.py --dataroot ./datasets/LLVIP --name LLVIP --model pix2pix --direction AtoB --batch_size 8 --preprocess scale_width_and_crop --load_size 320 --crop_size 256 --gpu_ids 0 --n_epochs 100 --n_epochs_decay 100

Test

    python test.py --dataroot ./datasets/LLVIP --name LLVIP --model pix2pix --direction AtoB --gpu_ids 0 --preprocess scale_width_and_crop --load_size 320 --crop_size 256

See ./pix2pixGAN/options for more train and test options.
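To make the `--preprocess scale_width_and_crop --load_size 320 --crop_size 256` options concrete, the sketch below works through the image sizes involved. It reflects one reading of that option: the image is resized so its width equals `load_size` with the aspect ratio preserved (and the height kept at no less than `crop_size`), then a `crop_size` square is cropped. The helper name is hypothetical and the 1280x1024 input size is an assumption; check ./pix2pixGAN/options and the data loading code for the actual behavior.

```python
def scale_width_and_crop_sizes(width, height, load_size=320, crop_size=256):
    """Return ((scaled_w, scaled_h), (crop_w, crop_h)) for one image.

    Assumption: width is scaled to load_size with aspect ratio preserved,
    the scaled height is never smaller than crop_size, and the crop is a
    crop_size x crop_size square.
    """
    if width == load_size and height >= crop_size:
        scaled = (width, height)  # already the right width, nothing to resize
    else:
        scaled_h = int(max(load_size * height / width, crop_size))
        scaled = (load_size, scaled_h)
    return scaled, (crop_size, crop_size)


# Example with an assumed 1280x1024 input image.
scaled_size, crop_size_px = scale_width_and_crop_sizes(1280, 1024)
print(scaled_size, crop_size_px)
```

Under these assumptions the scaled image is 320 pixels wide and the crop covers its full height, so only the horizontal position of the crop varies.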

Results

We retrained and tested pix2pixGAN on the updated dataset (30976 images). The generator is unet256 and the discriminator is the default basic PatchGAN.

License

This LLVIP Dataset is made freely available to academic and non-academic entities for non-commercial purposes such as academic research, teaching, scientific publications, or personal experimentation. Permission is granted to use the data given that you agree to our license terms.

Call For Contributions

We welcome reports of errors in the data annotations, as well as contributions of additional annotations such as segmentation. Please contact us.

Contact

email: shengjie.Liu@bupt.edu.cn, czhu@bupt.edu.cn, jiaxinyujxy@qq.com, tangwenqi@bupt.edu.cn