AWS Virtual Kubelet provides an extension to your Kubernetes cluster that can provision and maintain EC2 based Pods. These EC2 pods can run arbitrary applications which might not otherwise fit into containers.

This expands the management capabilities of Kubernetes, enabling use-cases such as macOS native application lifecycle control via standard Kubernetes tooling.

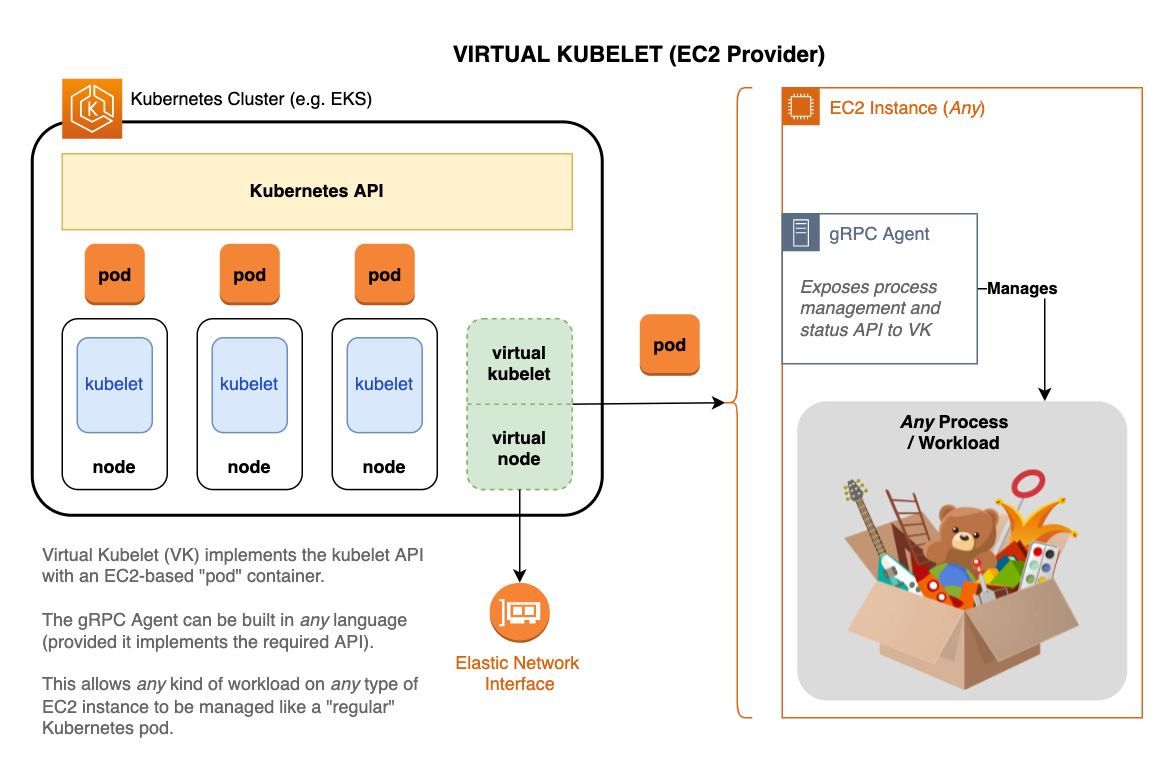

A typical EKS Kubernetes (k8s) cluster is shown in the diagram below. It consists of a k8s API layer, a number of nodes which each run a kubelet process, and pods (one or more containerized apps) managed by those kubelet processes.

Using the Virtual Kubelet library, this EC2 provider implements a virtual kubelet which looks like a typical kubelet to k8s. API requests to create workload pods, etc. are received by the virtual kubelet and passed to our custom EC2 provider.

This provider implements pod-handling endpoints using EC2 instances and an agent that runs on them. The agent is responsible for launching and terminating "containers" (applications) and reporting status. The provider ↔ agent API contract is defined using the Protocol Buffers spec and implemented via gRPC. This enables agents to be written in any supported language and run on a variety of operating systems and architectures (see footnote 1).
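To make the agent's responsibilities concrete, here is a minimal sketch of what an agent looks like from Go. The `Agent` interface, its method names, the `AppState` values, and the in-memory `memoryAgent` are all hypothetical illustrations; the real contract is whatever the project's Protocol Buffers definitions specify, and a real agent would start and stop actual processes behind a gRPC server.

```go
package main

import (
	"errors"
	"fmt"
	"sync"
)

// AppState is a hypothetical status value an agent might report.
type AppState string

const (
	StateRunning    AppState = "Running"
	StateTerminated AppState = "Terminated"
)

// Agent is a hypothetical Go view of the provider <-> agent contract:
// launch an application, terminate it, and report its status.
type Agent interface {
	Launch(name, command string) error
	Terminate(name string) error
	Status(name string) (AppState, error)
}

var errUnknownApp = errors.New("unknown application")

// memoryAgent records application state in memory instead of
// starting real processes, which keeps the sketch self-contained.
type memoryAgent struct {
	mu   sync.Mutex
	apps map[string]AppState
}

func newMemoryAgent() *memoryAgent {
	return &memoryAgent{apps: make(map[string]AppState)}
}

// Launch marks the application as running; a second launch of a
// running application is rejected. The command is ignored here.
func (a *memoryAgent) Launch(name, command string) error {
	a.mu.Lock()
	defer a.mu.Unlock()
	if a.apps[name] == StateRunning {
		return fmt.Errorf("application %q is already running", name)
	}
	a.apps[name] = StateRunning
	return nil
}

// Terminate marks a known application as terminated.
func (a *memoryAgent) Terminate(name string) error {
	a.mu.Lock()
	defer a.mu.Unlock()
	if _, ok := a.apps[name]; !ok {
		return errUnknownApp
	}
	a.apps[name] = StateTerminated
	return nil
}

// Status reports the last recorded state of a known application.
func (a *memoryAgent) Status(name string) (AppState, error) {
	a.mu.Lock()
	defer a.mu.Unlock()
	state, ok := a.apps[name]
	if !ok {
		return "", errUnknownApp
	}
	return state, nil
}

func main() {
	var agent Agent = newMemoryAgent()
	if err := agent.Launch("demo", "/usr/bin/true"); err != nil {
		fmt.Println("launch failed:", err)
		return
	}
	state, _ := agent.Status("demo")
	fmt.Println("demo:", state)
	_ = agent.Terminate("demo")
	state, _ = agent.Status("demo")
	fmt.Println("demo:", state)
}
```

Keeping the contract this small is what would let an agent be reimplemented in another language: only the three operations and the status values need to agree with the provider.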

Nodes are represented by ENIs that maintain a predictable IP address used for naming and consistent association of workload pods with virtual kubelet instances.

See Software Architecture for an overview of the code organization and general behavior. For detailed coverage of specific aspects of system/code behavior, see implemented RFCs.

- Virtual Kubelet (VK)
  - Upstream library / framework for implementing custom Kubernetes providers
- Virtual Kubelet Provider (VKP)
  - This EC2-based provider implementation (sometimes also referred to as virtual-kubelet or VK)
- Virtual Kubelet Virtual Machine (VKVM)
  - The Virtual Machine providing compute for this provider implementation (i.e. an Amazon EC2 Instance)
- Virtual Kubelet Virtual Machine Agent (VKVMA)
  - The gRPC agent that exposes an API to manage workloads on EC2 instances (also VKVMAgent, or just Agent)

kubelet → Virtual Kubelet library + this custom EC2 provider

node → Elastic Network Interface (managed by VKP)

pod → EC2 Instance + VKVMAgent + Custom Workload

The following are required to build and deploy this project. Additional tools may be needed to utilize examples or set up a development environment.

Tested with Go v1.12, 1.16, and 1.17. See the Go documentation for installation steps.

Docker is a container virtualization runtime.

See Get Started in the docker documentation for setup steps.

The provider interacts directly with AWS APIs and launches EC2 instances so an AWS account is needed. Click Create an AWS Account at https://aws.amazon.com/ to get started.

Some commands utilize the AWS CLI. See the AWS CLI page for installation and configuration instructions.

EKS is strongly recommended, though any k8s cluster with sufficient access to make AWS API calls and communicate over the network with gRPC agents could work.

To get the needed infrastructure up and running quickly, see the deploy README which details using the AWS CDK Infrastructure-as-Code framework to automatically provision the required resources.

Once the required infrastructure is in place, follow the steps in this section to build the VK provider.

This project comes with a Makefile to simplify build-related tasks.

Run make in this directory to get a list of subcommands and their description.

Some commands (such as `make push`) require certain environment variables to be set in order to function correctly. Review the variables assigned with `?=` at the top of the Makefile and set them in your shell/environment before running these commands.
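One way to review those variables is to list every `?=` assignment in the Makefile and check whether your environment already overrides it. The following is a small sketch of that idea, not part of the project; the `overridableVars` helper is hypothetical, it assumes the Makefile is in the current directory, and it uses `os.ReadFile`, which requires Go 1.16 or later.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"regexp"
	"strings"
)

// overridable matches Makefile lines such as `NAME ?= default`.
var overridable = regexp.MustCompile(`^\s*([A-Za-z_][A-Za-z0-9_]*)\s*\?=\s*(.*)$`)

// overridableVars returns the name and default value of every
// `?=` assignment found in the given Makefile text.
func overridableVars(makefile string) map[string]string {
	vars := make(map[string]string)
	scanner := bufio.NewScanner(strings.NewReader(makefile))
	for scanner.Scan() {
		if m := overridable.FindStringSubmatch(scanner.Text()); m != nil {
			vars[m[1]] = strings.TrimSpace(m[2])
		}
	}
	return vars
}

func main() {
	data, err := os.ReadFile("Makefile")
	if err != nil {
		fmt.Println("could not read Makefile:", err)
		return
	}
	// Report which variables the environment overrides and which
	// would fall back to the Makefile default.
	for name, def := range overridableVars(string(data)) {
		if val, ok := os.LookupEnv(name); ok {
			fmt.Printf("%s is set to %q\n", name, val)
		} else {
			fmt.Printf("%s is unset (Makefile default: %q)\n", name, def)
		}
	}
}
```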

- Run `make build` to build the project. This will also generate protobuf files and other generated files if needed.
- Next run `make docker` to create a docker image with the `virtual-kubelet` binary.
- Run `make push` to deploy the docker image to your Elastic Container Registry.

Now we're ready to deploy the VK provider using the steps outlined in this section.

Some commands below utilize the kubectl tool to manage and configure k8s. Other tools such as Lens may be used if desired (adapt instructions accordingly).

Example files that require updating placeholders with actual (environment-specific) data are copied to ./local before modification. The local directory's contents are ignored, which prevents accidental commits and leaking account numbers, etc. into the GitHub repo.

The ClusterRole and Binding give VK pods the necessary permissions to manage k8s workloads.

- Run `kubectl apply -f deploy/vk-clusterrole_binding.yaml` to deploy the cluster role and binding.

The ConfigMap provides global and default VK/VKP configuration elements. Some of these settings may be overridden on a per-pod basis.

- Copy the provided examples/config-map.yaml to the `./local` dir and modify as needed. See Config for a detailed explanation of the various configuration options.
- Next, run `kubectl apply -f local/config-map.yaml` to deploy the config map.

This configuration will deploy a set of VK providers using the docker image built and pushed earlier.

- Copy the provided examples/vk-statefulset.yaml file to `./local`.
- Replace these placeholders in the `image:` reference with the values from your account/environment:
  - `AWS_ACCOUNT_ID`
  - `AWS_REGION`
  - `DOCKER_TAG`
- Run `kubectl apply -f local/vk-statefulset.yaml` to deploy the VK provider pods.
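The placeholder substitution can be sketched as a plain string replacement. In the example below, the template string, the `virtual-kubelet` repository name, and the sample account, region, and tag values are assumptions for illustration; copy the actual `image:` value from the example StatefulSet file rather than from this sketch.

```go
package main

import (
	"fmt"
	"strings"
)

// fillImagePlaceholders substitutes the three placeholders
// (AWS_ACCOUNT_ID, AWS_REGION, DOCKER_TAG) in an image reference.
func fillImagePlaceholders(image, accountID, region, tag string) string {
	return strings.NewReplacer(
		"AWS_ACCOUNT_ID", accountID,
		"AWS_REGION", region,
		"DOCKER_TAG", tag,
	).Replace(image)
}

func main() {
	// Hypothetical template following the usual ECR image URI layout.
	template := "AWS_ACCOUNT_ID.dkr.ecr.AWS_REGION.amazonaws.com/virtual-kubelet:DOCKER_TAG"
	fmt.Println(fillImagePlaceholders(template, "123456789012", "us-west-2", "v0.1.0"))
	// prints "123456789012.dkr.ecr.us-west-2.amazonaws.com/virtual-kubelet:v0.1.0"
}
```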

At this point you should have at least one VK provider pod running successfully. This section describes how to launch EC2-backed pods using the provider.

examples/pods contains both a single (unmanaged) pod example and a pod Deployment example.

NOTE It is strongly recommended that workload pods run via a supervisory management construct such as a Deployment (even for single-instance pods). This will help minimize unexpected loss of pod resources and allow Kubernetes to efficiently use resources.

1. Copy the desired pod example(s) to `./local`.
2. Run `kubectl apply -f <filename>` (replacing `<filename>` with the actual file name).

See the Cookbook for more usage examples.

This project serves as a translation and mediation layer between Kubernetes and EC2-based pods. It was created in order to run custom workloads directly on any EC2 instance type/size available via AWS (e.g. Mac Instances).

- Follow the steps in this README to get all the infrastructure and requirements in place and working with the example agent.

- Using the example agent as a guide, implement your own gRPC agent to support the desired workloads.

Take a look at the issues labeled "good first issue". Read the CONTRIBUTING guidelines and submit a Pull Request! 🚀

Yes. See RFCs for improvement proposals and EdgeCases for known issues / workarounds.

BacklogFodder contains additional items that may become roadmap elements.

Yes. See Metrics for details.

See CONTRIBUTING for more information.

This project is licensed under the Apache-2.0 License.

gofmt formatting is enforced via GitHub Actions workflow.

Footnotes

1. A Golang sample agent is included.