Terraform EC2 Image Builder Container Hardening Pipeline summary

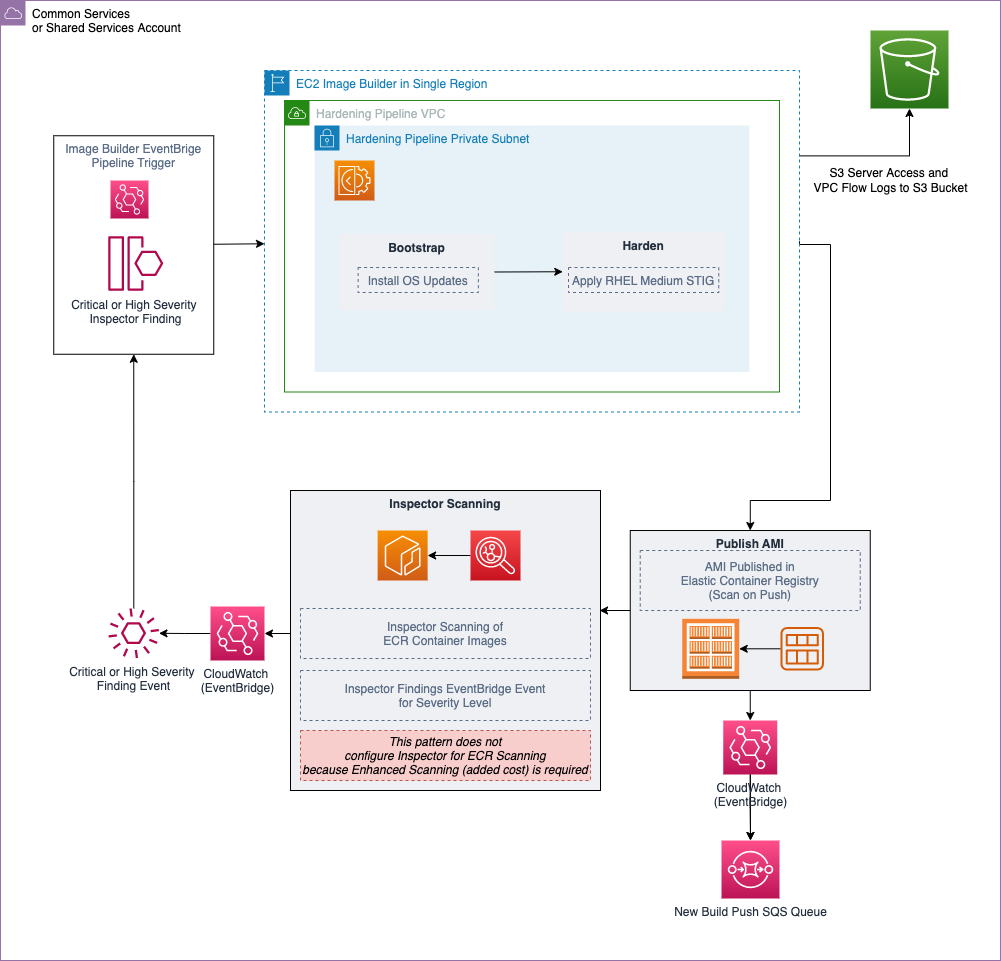

This solution builds an EC2 Image Builder Pipeline with an Amazon Linux 2 Baseline Container Recipe. The pipeline deploys a Docker-based Amazon Linux 2 Container Image that has been hardened according to RHEL 7 STIG Version 3 Release 7 - Medium; see the "STIG-Build-Linux-Medium version 2022.2.1" section in Linux STIG Components for details. The result is commonly referred to as a "Golden" container image.

The solution includes two CloudWatch Event Rules. The first starts the Container Image pipeline when Inspector reports a finding of "High" or "Critical" severity, so that insecure images are replaced; this requires both Inspector and Amazon Elastic Container Registry "Enhanced Scanning" to be enabled. The second sends a notification to an SQS Queue after a successful Container Image push to the ECR Repository, so that consumers can pick up new container images more easily.
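As a rough illustration of the two rules described above, the following Terraform sketch shows one way they could be expressed. It assumes the findings arrive from the `aws.inspector2` event source and that the pipeline, IAM role, queue, and repository already exist. All resource names and the `pipeline_arn`, `events_role_arn`, and `queue_arn` variables are hypothetical and are not taken from this repository's trigger-build.tf.

```hcl
# Hypothetical inputs; in a real module these would reference other resources.
variable "pipeline_arn" {
  type        = string
  description = "ARN of the EC2 Image Builder container pipeline to start"
}

variable "events_role_arn" {
  type        = string
  description = "ARN of an IAM role that EventBridge can assume to start the pipeline"
}

variable "queue_arn" {
  type        = string
  description = "ARN of the SQS queue that receives image push notifications"
}

variable "ecr_name" {
  type        = string
  description = "Name of the ECR repository that the pipeline pushes to"
}

# Rule 1: match Inspector findings with HIGH or CRITICAL severity.
resource "aws_cloudwatch_event_rule" "inspector_finding" {
  name        = "example-inspector-finding-trigger"
  description = "Start the hardening pipeline on HIGH or CRITICAL findings"

  event_pattern = jsonencode({
    source        = ["aws.inspector2"]
    "detail-type" = ["Inspector2 Finding"]
    detail = {
      severity = ["HIGH", "CRITICAL"]
    }
  })
}

# Target for rule 1: the Image Builder pipeline, started through the IAM role.
resource "aws_cloudwatch_event_target" "start_pipeline" {
  rule     = aws_cloudwatch_event_rule.inspector_finding.name
  arn      = var.pipeline_arn
  role_arn = var.events_role_arn
}

# Rule 2: match successful image pushes to the repository.
resource "aws_cloudwatch_event_rule" "ecr_push" {
  name        = "example-ecr-push-notify"
  description = "Notify consumers after a successful container image push"

  event_pattern = jsonencode({
    source        = ["aws.ecr"]
    "detail-type" = ["ECR Image Action"]
    detail = {
      "action-type"     = ["PUSH"]
      result            = ["SUCCESS"]
      "repository-name" = [var.ecr_name]
    }
  })
}

# Target for rule 2: the SQS queue.
resource "aws_cloudwatch_event_target" "notify_queue" {
  rule = aws_cloudwatch_event_rule.ecr_push.name
  arn  = var.queue_arn
}
```

For the second target to deliver messages, the queue would also need a resource policy that allows events.amazonaws.com to call sqs:SendMessage; that policy is omitted here for brevity.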

Prerequisites

- Terraform v0.15+. Download and set up Terraform; refer to the official Terraform instructions to get started.

- AWS CLI installed for setting your AWS Credentials for Local Deployment.

- An AWS Account to deploy the infrastructure within.

- Git (if provisioning from a local machine).

- A role within the AWS account that you are able to create AWS resources with.

- Ensure the .tfvars file has all variables defined, or define all variables at "terraform apply" time.
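The Terraform version and variable prerequisites above can also be enforced in configuration. The sketch below is an assumption about how that might look, not a copy of this repository's config.tf or variables.tf: it reads the version requirement as 0.15 or later, and the validation rule on `account_id` is an illustrative addition. Variables declared without a default must be supplied through the .tfvars file, or Terraform prompts for them at apply time.

```hcl
terraform {
  # Assumed reading of the version prerequisite: Terraform 0.15 or later.
  required_version = ">= 0.15"

  required_providers {
    aws = {
      source = "hashicorp/aws"
    }
  }
}

# No default: the value must come from the .tfvars file or an interactive prompt.
variable "account_id" {
  type        = string
  description = "AWS account ID to deploy the pipeline into"

  # Illustrative check (not from the source): AWS account IDs are 12 digits.
  validation {
    condition     = can(regex("^[0-9]{12}$", var.account_id))
    error_message = "The account_id value must be a 12-digit AWS account ID."
  }
}

variable "kms_key_alias" {
  type        = string
  description = "Alias for the KMS key used for image encryption"
}

variable "aws_s3_ami_resources_bucket" {
  type        = string
  description = "Name of the S3 bucket that holds the pipeline component files"
}
```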

Target technology stack

- Two S3 Buckets, 1 for the Pipeline Component Files and 1 for Server Access and VPC Flow logs

- An ECR Repository

- A VPC, a Public and Private subnet, Route tables, a NAT Gateway, and an Internet Gateway

- An EC2 Image Builder Pipeline, Recipe, and Components

- A Container Image

- A KMS Key for Image Encryption

- An SQS Queue

- Four roles: one for the EC2 Image Builder Pipeline to execute as, one instance profile for EC2 Image Builder, one for EventBridge Rules, and one for VPC Flow Log collection

- Two CloudWatch Event Rules: one which triggers the start of the pipeline based on an Inspector Finding of "High" or "Critical," and one which sends notifications to an SQS Queue for a successful Image push to the ECR Repository

- This pattern creates 43 AWS Resources total

Limitations

VPC Endpoints cannot be used because of the bootstrap process performed by AWSTOE (AWS Task Orchestrator and Executor). This solution therefore creates VPC infrastructure that includes a NAT Gateway and an Internet Gateway to give its private subnet internet connectivity.
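To make the network layout concrete, here is a minimal sketch of a private subnet that reaches the internet through a NAT Gateway in a public subnet. The resource names and CIDR ranges are hypothetical and chosen only for illustration; the repository's own network configuration may differ.

```hcl
resource "aws_vpc" "example" {
  cidr_block = "10.0.0.0/16" # hypothetical range
}

resource "aws_internet_gateway" "example" {
  vpc_id = aws_vpc.example.id
}

resource "aws_subnet" "public" {
  vpc_id     = aws_vpc.example.id
  cidr_block = "10.0.0.0/24"
}

resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.example.id
  cidr_block = "10.0.1.0/24"
}

# Elastic IP for the NAT Gateway. The "domain" argument assumes AWS provider
# v5 or later; older provider versions used "vpc = true" instead.
resource "aws_eip" "nat" {
  domain = "vpc"
}

# The NAT Gateway lives in the public subnet.
resource "aws_nat_gateway" "example" {
  allocation_id = aws_eip.nat.id
  subnet_id     = aws_subnet.public.id

  depends_on = [aws_internet_gateway.example]
}

# Public subnet: default route to the Internet Gateway.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.example.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.example.id
  }
}

# Private subnet: default route to the NAT Gateway, so build instances can
# reach the internet without being directly reachable from it.
resource "aws_route_table" "private" {
  vpc_id = aws_vpc.example.id

  route {
    cidr_block     = "0.0.0.0/0"
    nat_gateway_id = aws_nat_gateway.example.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

resource "aws_route_table_association" "private" {
  subnet_id      = aws_subnet.private.id
  route_table_id = aws_route_table.private.id
}
```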

Operating systems

This Pipeline only contains a recipe for Amazon Linux 2.

- Amazon Linux 2

Structure

├── pipeline.tf

├── image.tf

├── infr-config.tf

├── dist-config.tf

├── components.tf

├── recipes.tf

├── LICENSE

├── README.md

├── hardening-pipeline.tfvars

├── config.tf

├── files

│ └── assumption-policy.json

├── roles.tf

├── kms-key.tf

├── main.tf

├── outputs.tf

├── sec-groups.tf

├── trigger-build.tf

└── variables.tf

Module details

- hardening-pipeline.tfvars contains the Terraform variables to be used at apply time.

- pipeline.tf creates and manages an EC2 Image Builder pipeline in Terraform.

- image.tf contains the definitions for the Base Image OS; this is where you can make changes for a different base image pipeline.

- infr-config.tf and dist-config.tf contain the resources for the minimum AWS infrastructure needed to spin up and distribute the image.

- components.tf contains an S3 upload resource to upload the contents of the /files directory, and is where you can modularly add custom component YAML files.

- recipes.tf is where you can specify different mixtures of components to create a different container recipe.

- trigger-build.tf contains the EventBridge rules and SQS queue resources.

- roles.tf contains the IAM policy definitions for the EC2 Instance Profile and Pipeline deployment role.

- infra-network-config.tf contains the minimum VPC infrastructure to deploy the container image into.

- /files is intended to contain the .yml files which are used to define any custom components used in components.tf.
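As an illustration of the kind of resource recipes.tf is described as holding, the sketch below defines a container recipe with a single component. It is an assumption about the general shape, not the repository's actual file. The resource name `example` is hypothetical, and the AWS-managed STIG component ARN (with the `x.x.x` version wildcard) should be checked against the components available in your region.

```hcl
variable "aws_region" {
  type = string
}

variable "image_name" {
  type = string
}

variable "recipe_version" {
  type = string
}

variable "ecr_name" {
  type = string
}

resource "aws_imagebuilder_container_recipe" "example" {
  name           = var.image_name
  version        = var.recipe_version
  container_type = "DOCKER"

  # Base image that the hardening components are applied to.
  parent_image = "amazonlinux:latest"

  # Repository that receives the built image.
  target_repository {
    repository_name = var.ecr_name
    service         = "ECR"
  }

  # Assumed ARN format for the AWS-managed STIG component; further component
  # blocks can be added here to change the mixture of components.
  component {
    component_arn = "arn:aws:imagebuilder:${var.aws_region}:aws:component/stig-build-linux-medium/x.x.x"
  }

  # Standard Image Builder Dockerfile template placeholders.
  dockerfile_template_data = <<-EOF
    FROM {{{ imagebuilder:parentImage }}}
    {{{ imagebuilder:environments }}}
    {{{ imagebuilder:components }}}
  EOF
}
```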

Target architecture

Automation and scale

- This Terraform module set is intended to be used at scale. Instead of deploying it locally, the Terraform modules can be used in a multi-account strategy environment, such as in an AWS Control Tower with Account Factory for Terraform environment. In that case, a backend state S3 bucket should be used for managing Terraform state files, instead of managing the configuration state locally.

- To deploy for scaled use, deploy the solution to one central account, such as "Shared Services/Common Services" from a Control Tower or Landing Zone account model, and grant consumer accounts permission to access the ECR Repo/KMS Key; see this blog post explaining the setup. For example, in an Account Vending Machine or Account Factory for Terraform, add permissions to each account baseline or account customization baseline to have access to that ECR Repo and Encryption key.

- This container image pipeline can be modified once deployed using EC2 Image Builder features such as the "Component" feature, which allows easy packaging of more components into the Docker build.

- The KMS Key used to encrypt the container image should be shared across the accounts in which the container image is intended to be used.

- Support for other images can be added by duplicating this entire Terraform module, changing the recipes.tf attribute parent_image = "amazonlinux:latest" to another parent image type, and changing repository_name to point to an existing ECR repository. This creates another pipeline which deploys a different parent image type to your existing ECR repository.
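Two of the points above, remote state and cross-account access to the repository, can be sketched in Terraform as follows. This is a minimal example under stated assumptions: the state bucket name, the state key, and the `consumer_account_ids` variable are hypothetical, and the policy grants only the pull actions a consumer account typically needs.

```hcl
# Store state in S3 instead of on the local machine.
terraform {
  backend "s3" {
    bucket  = "example-terraform-state-bucket" # hypothetical bucket name
    key     = "hardening-pipeline/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true
  }
}

variable "ecr_name" {
  type        = string
  description = "Name of the ECR repository that holds the hardened image"
}

# Hypothetical variable: the accounts that should be able to pull the image.
variable "consumer_account_ids" {
  type        = list(string)
  description = "AWS account IDs that are allowed to pull the image"
}

data "aws_iam_policy_document" "ecr_pull" {
  statement {
    sid    = "AllowConsumerAccountsToPull"
    effect = "Allow"

    principals {
      type        = "AWS"
      identifiers = [for id in var.consumer_account_ids : "arn:aws:iam::${id}:root"]
    }

    actions = [
      "ecr:BatchCheckLayerAvailability",
      "ecr:BatchGetImage",
      "ecr:GetDownloadUrlForLayer",
    ]
  }
}

# Attach the pull policy to the repository.
resource "aws_ecr_repository_policy" "consumers" {
  repository = var.ecr_name
  policy     = data.aws_iam_policy_document.ecr_pull.json
}
```

Because the image is encrypted with a KMS key, the consumer accounts would likely also need decrypt permission in the key policy; that statement is left out here.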

Deployment steps

Local Deployment

- Set up your AWS temporary credentials.

See if the AWS CLI is installed:

$ aws --version

aws-cli/1.16.249 Python/3.6.8...

AWS CLI version 1.1 or higher is fine.

If you instead received command not found then install the AWS CLI.

- Run aws configure and provide the following values:

$ aws configure

AWS Access Key ID [*************xxxx]: <Your AWS Access Key ID>

AWS Secret Access Key [**************xxxx]: <Your AWS Secret Access Key>

Default region name [us-east-1]: <Your desired region for deployment>

Default output format [None]: <Your desired Output format>

- Clone the repository with HTTPS or SSH

HTTPS

git clone https://github.com/aws-samples/terraform-ec2-image-builder-container-hardening-pipeline.git

SSH

git clone git@github.com:aws-samples/terraform-ec2-image-builder-container-hardening-pipeline.git

- Navigate to the directory containing this solution before running the commands below:

cd terraform-ec2-image-builder-container-hardening-pipeline

- Update the placeholder variable values in hardening-pipeline.tfvars. You must provide your own account_id, kms_key_alias, and aws_s3_ami_resources_bucket; you should also modify the rest of the placeholder variables to match your environment and your desired configuration.

account_id = "<DEPLOYMENT-ACCOUNT-ID>"

aws_region = "us-east-1"

vpc_name = "example-hardening-pipeline-vpc"

kms_key_alias = "image-builder-container-key"

ec2_iam_role_name = "example-hardening-instance-role"

hardening_pipeline_role_name = "example-hardening-pipeline-role"

aws_s3_ami_resources_bucket = "example-hardening-ami-resources-bucket-0123"

image_name = "example-hardening-al2-container-image"

ecr_name = "example-hardening-container-repo"

recipe_version = "1.0.0"

ebs_root_vol_size = 10

- The following command initializes, validates, and applies the Terraform modules to the environment using the variables defined in your .tfvars file:

terraform init && terraform validate && terraform apply -var-file *.tfvars -auto-approve

- After successful completion of your first Terraform apply, if provisioning locally, you should see this snippet in your local machine’s terminal:

Apply complete! Resources: 43 added, 0 changed, 0 destroyed.

- (Optional) Tear down the infrastructure with the following command:

terraform init && terraform validate && terraform destroy -var-file *.tfvars -auto-approve

Troubleshooting

When running Terraform apply or destroy commands from your local machine, you may encounter an error similar to the following:

Error: configuring Terraform AWS Provider: error validating provider credentials: error calling sts:GetCallerIdentity: operation error STS: GetCallerIdentity, https response error StatusCode: 403, RequestID: 123456a9-fbc1-40ed-b8d8-513d0133ba7f, api error InvalidClientTokenId: The security token included in the request is invalid.

This error is due to the expiration of the security token for the credentials used in your local machine’s configuration.

See "Set and View Configuration Settings" from the AWS Command Line Interface Documentation to resolve.

Author

- Mike Saintcross msaintcr@amazon.com