- 所有参数已支持通过

yaml文件配置! - 在提取墙面点时,引入了法向量信息,支持大入射角时墙面点的准确提取。

- 支持任意线数的旋转式机械激光雷达,已用16线和32线激光雷达数据充分测试(32线所用测试数据集 UrbanLoco/HK-Data20190117)。

yaml文件已预置了VLP-16和HDL-32e两型激光雷达的参数,可直接使用。 - 支持激光雷达以任意姿态安装,而不仅仅是水平姿态,只需要在

yaml文件中配置好kExtrinsicRot和kExtrinsicTrans即可。 - 添加了外参旋转矩阵的单位正交属性检查,如果非单位正交阵,程序报错并退出。

- 新版的测试视频即将发布。

- Now you can configure all the parameters simply through a

segmentation_params.yamlfile! - Take normal info into consideration when identifying wall points, which has proven to be very usefull especially with large incident angle!

- Support Multi-Line Spinning LiDAR with any lines! Just modify LiDAR related parameters in

yamlfile. For now, 16-line and 32-line LiDARs, takingVLP-16andHDL-32as examples respectively, have been fully tested with home-made dataset and UrbanLoco dataset(HK-Data20190117). - You can mount your LiDAR in any pose, not just horizentally. All you need to do is re-write

kExtrinsicRotandkExtrinsicTransinyamlfile. - Check whether the input rotation matrix is a special orthogonal matrix(SO3 group), if not, the program report error and return.

- New test vedio coming soon.

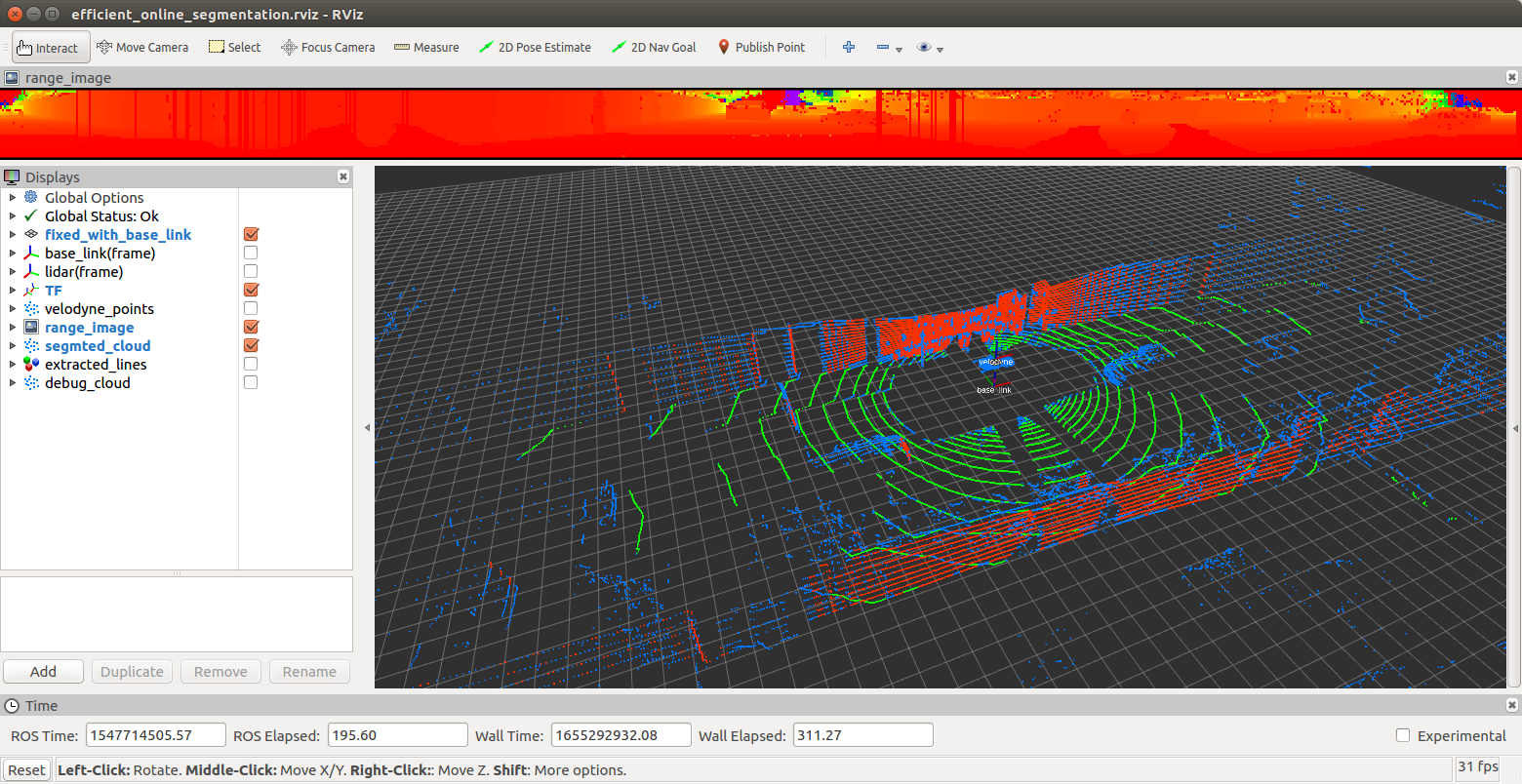

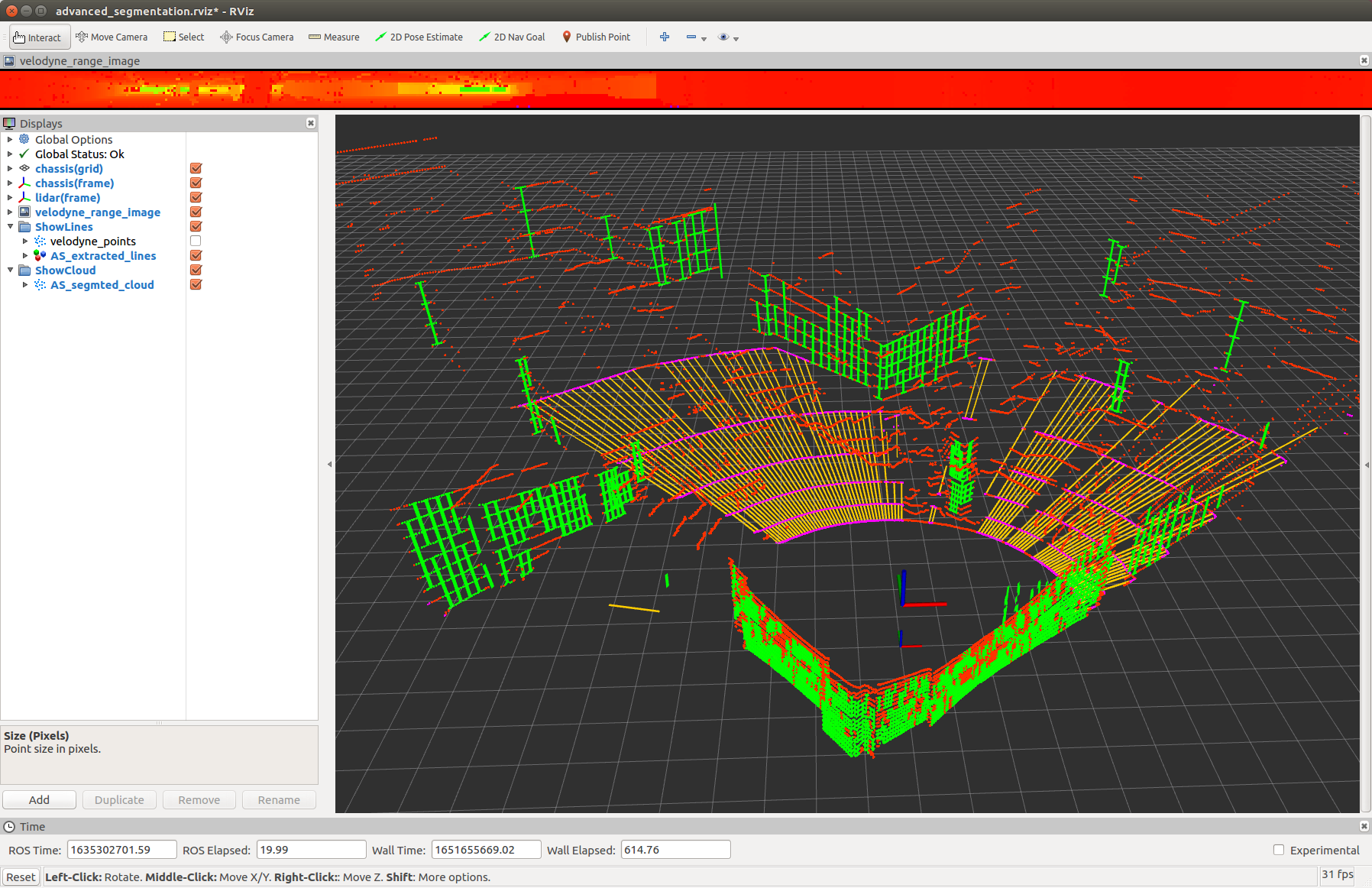

UrbanLoco测试截图(Here is a screenshot of test on UrbanLoco dataset):

This is a light-weight & efficient online segmentation algorithm for segmenting ground points and wall points out of an LiDAR point cloud.

This algorithm is inspired by "M. Himmelsbach, F. v. Hundelshausen and H. -. Wuensche, Fast segmentation of 3D point clouds for ground vehicles, 2010 IEEE Intelligent Vehicles Symposium, 2010", an original implementation can be accessed here linefit_ground_segmentation.

However, I have extended the original idea to extract wall points, not only the ground ones. Also, considering the elevation angle distribution is fixed for multi-line spinning LiDARs, we can use this info to set the size of bins within each segment exactly equal with the size of LiDAR lines (or, rings). By this way, the total size of bins for line fitting search is impressively reduced, thus making my implementation way faster.

See the picture below for a first look, where yellow lines(also magenta points) indicate ground points, and green lines(also green points) indicate wall points.

coming soon ...

A test video can be seen on my personal Zhihu homepage, click here.

coming soon ...

A chinese version can be seen on my personal Zhihu homepage, click here.

This project is light-weight in dependencies, only PCL & OpenCV are required, you can easily install these two by apt-get install. Note that PCL is essential while OpenCV is only for visualization of range image. A developer should take no trouble to remove OpenCV dependency with just a little bit modification on original codes.

Once you have solved the dependencies problem, just execute catkin_make and roslaunch efficient_online_segmentation efficient_online_segmentation.launch. See the .launch file for detail.

By default, VLP-16 LiDAR and HDL-32 LiDAR are supported. If you want to see how 64/128-line LiDAR scanner work, just modify LiDAR related parameters in yaml file.

Not any more after I took the normal info into consideration for wall points extraction. I would be grateful if you happen to have some ideas and willing to share them with me.