- This is a neural image caption generator based on the paper *Show and Tell: A Neural Image Caption Generator* by Vinyals et al.

- The model is trained on the Flickr8k dataset.

- The PyTorch implementation can be found in `encoder_decoder.py`.

- The encoder is an EfficientNet with weights pretrained on ImageNet.

- The final classification layer of the EfficientNet is removed, and all prior layers are frozen for the duration of training.

- The image embedding is passed through a linear layer that projects the feature vector down to the dimensionality of the joint embedding space.

- This linear projection layer is trained jointly with the decoder to learn the joint embedding space.

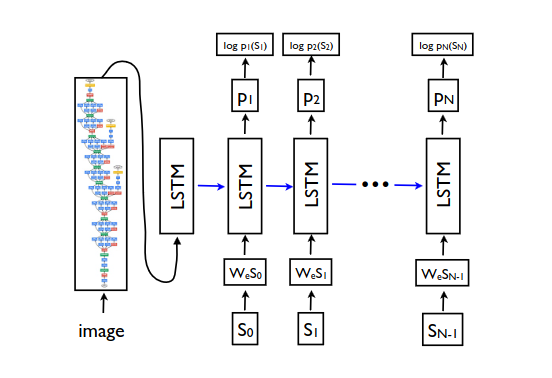

- The decoder is an LSTM which generates a caption for the image.

- At the start of the decoding process, the feature vector from the encoder is fed to the LSTM as its first input, so that the hidden state is conditioned on the embedded representation of the image before any words are generated.

- A linear layer maps the LSTM's hidden-state outputs to the vocabulary space, producing a probability distribution over the next word in the caption.
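A minimal sketch of such a decoder is shown below. It assumes hypothetical names (`CaptionDecoder`, `generate`), captions given as token ids that begin with a `<start>` token, and greedy decoding at inference time; the actual `encoder_decoder.py` may differ, for example by using beam search or by including the image step's output in the loss.

```python
import torch
import torch.nn as nn


class CaptionDecoder(nn.Module):
    """Hypothetical LSTM decoder primed with the image embedding."""

    def __init__(self, embed_size: int, hidden_size: int, vocab_size: int):
        super().__init__()
        self.embed = nn.Embedding(vocab_size, embed_size)  # words into the joint space
        self.lstm = nn.LSTM(embed_size, hidden_size, batch_first=True)
        self.fc = nn.Linear(hidden_size, vocab_size)  # hidden state -> vocabulary logits

    def forward(self, features: torch.Tensor, captions: torch.Tensor) -> torch.Tensor:
        # features: (B, embed_size); captions: (B, T) token ids starting with <start>
        _, state = self.lstm(features.unsqueeze(1))  # image step only sets (h, c)
        hiddens, _ = self.lstm(self.embed(captions[:, :-1]), state)
        return self.fc(hiddens)  # (B, T-1, vocab_size) logits for captions[:, 1:]

    @torch.no_grad()
    def generate(self, features: torch.Tensor, start_id: int, end_id: int,
                 max_len: int = 20) -> torch.Tensor:
        # Greedy decoding: always pick the most probable next word.
        _, state = self.lstm(features.unsqueeze(1))
        token = torch.full((features.size(0), 1), start_id,
                           dtype=torch.long, device=features.device)
        outputs = []
        for _ in range(max_len):
            hiddens, state = self.lstm(self.embed(token), state)
            probs = self.fc(hiddens.squeeze(1)).softmax(dim=-1)  # next-word distribution
            token = probs.argmax(dim=-1, keepdim=True)  # (B, 1)
            outputs.append(token)
            if (token == end_id).all():
                break
        return torch.cat(outputs, dim=1)  # (B, <= max_len) generated token ids
```

Passing the image through the LSTM as a separate first step, and discarding that step's output, mirrors the description above: the image only shapes the initial hidden state, and the word steps are trained with cross-entropy against `captions[:, 1:]`, e.g. `torch.nn.functional.cross_entropy(logits.reshape(-1, vocab_size), captions[:, 1:].reshape(-1))`.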