Bayesian Opt Tuner does not show the same results for 'get_best_hyperparameters' and 'get_best_models' methods

Joueswant opened this issue · 1 comments

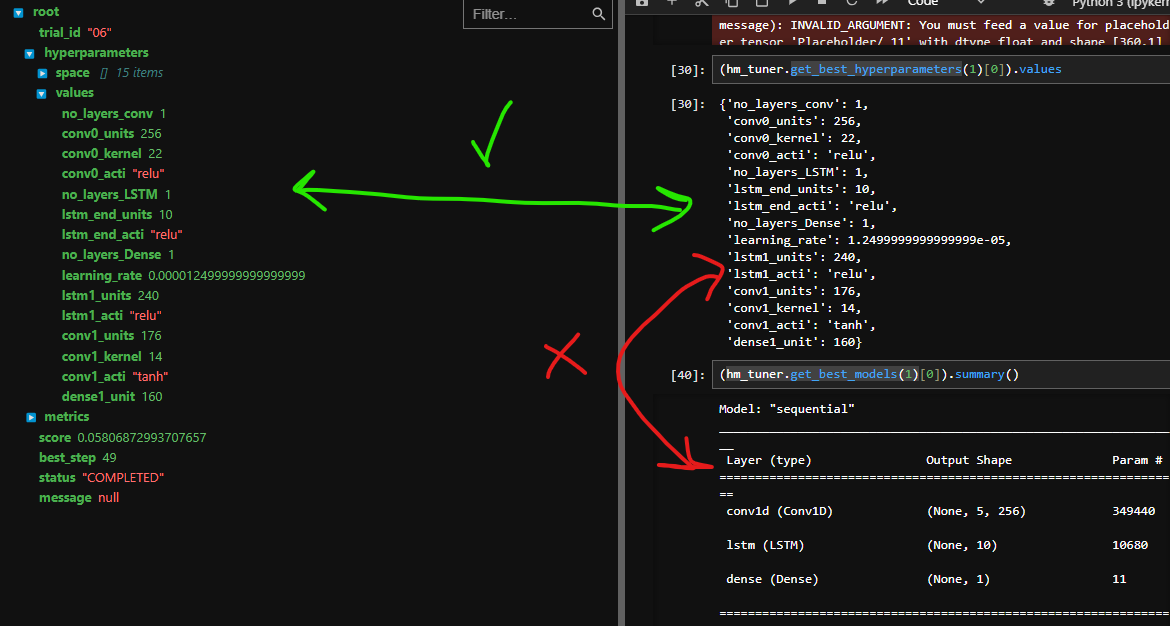

I am tuning a hypermodel (HM) with the Keras Tuner Bayesian optimization tuner. After the search finished, I noticed that the `tuner` object does not seem to return the same hypermodel from `tuner.get_best_hyperparameters(1)` as from `tuner.get_best_models()`. Am I interpreting something wrong?

I would expect that, once the Bayesian tuner has searched the space, the tuner object reports the same hypermodel through both methods: `get_best_hyperparameters` and `get_best_models`.
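For reference, this is roughly how the two results can be put side by side. It is only a sketch: `compare_best` is a hypothetical helper name of mine, and it assumes `tuner` is a Keras Tuner object whose search has already finished.

```python
def compare_best(tuner):
    """Return the best trial's hyperparameter values and the layer types
    of the best model, so the two can be compared by eye.

    `tuner` is assumed to be an already-searched Keras Tuner tuner.
    """
    # Best hyperparameter set found by the search (a HyperParameters object).
    best_hp = tuner.get_best_hyperparameters(num_trials=1)[0]
    # Best model as rebuilt and reloaded by the tuner.
    best_model = tuner.get_best_models(num_models=1)[0]
    # Class names of the layers that actually exist in the best model.
    layer_types = [type(layer).__name__ for layer in best_model.layers]
    return dict(best_hp.values), layer_types


# Usage (after tuner.search(...) has completed):
# hp_values, layer_types = compare_best(tuner)
# print(hp_values)
# print(layer_types)
```

If the hyperparameter values list entries for layers that do not appear in `layer_types`, that is the kind of mismatch I am describing.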

Additional context

After some checking, I think the cause might be the hypermodel itself. I believe I do not fully understand the intricacies of creating conditional hyperparameters through a for-loop (e.g. the number of stacked layers).

In my case, I assume that a hypermodel containing this:

    ## 2. Consequent LSTM layer/s
    for c in range(HP.Int('no_layers_LSTM', min_value = 0,
                          max_value = 3,
                          step = 1)):
        if c == 0:
            pass
        else:
            mod.add(kr.layers.LSTM(
                units = HP.Int(f'lstm{c}_units', min_value=16,
                               max_value=512,
                               step=16),
                activation = HP.Choice(f'lstm{c}_acti', ('tanh', 'relu',)),
                kernel_initializer = layer_weights_init,
                return_sequences = True,
            ))

will create three configurations: the first, when `c == 0`, means no LSTM layers; the second has a single LSTM layer (`c == 1`); and the last has two LSTM layers (`c == 2`). Yet after checking, it seems that the mismatch seen above comes from this loop.
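To check my reading of the loop, here is a minimal plain-Python sketch that mimics only its control flow, with no Keras or Keras Tuner involved. The function name `lstm_layers_added` is hypothetical, and the argument stands in for whatever value the tuner samples for `no_layers_LSTM`.

```python
def lstm_layers_added(no_layers_lstm):
    """Mimic the loop above and return the names of the LSTM layers
    that would be added for a given sampled value of no_layers_LSTM."""
    added = []
    for c in range(no_layers_lstm):
        if c == 0:
            # Same as the original loop: the first iteration adds nothing.
            pass
        else:
            added.append(f"lstm{c}")
    return added


# Every value the Int hyperparameter can take (min_value=0, max_value=3, step=1).
for sampled in range(0, 4):
    print(sampled, lstm_layers_added(sampled))
# 0 []
# 1 []
# 2 ['lstm1']
# 3 ['lstm1', 'lstm2']
```

If this sketch mirrors the real loop, the sampled values 0 and 1 both produce a model without LSTM layers, and the `lstm{c}_units` and `lstm{c}_acti` hyperparameters are only requested on iterations that are actually reached.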