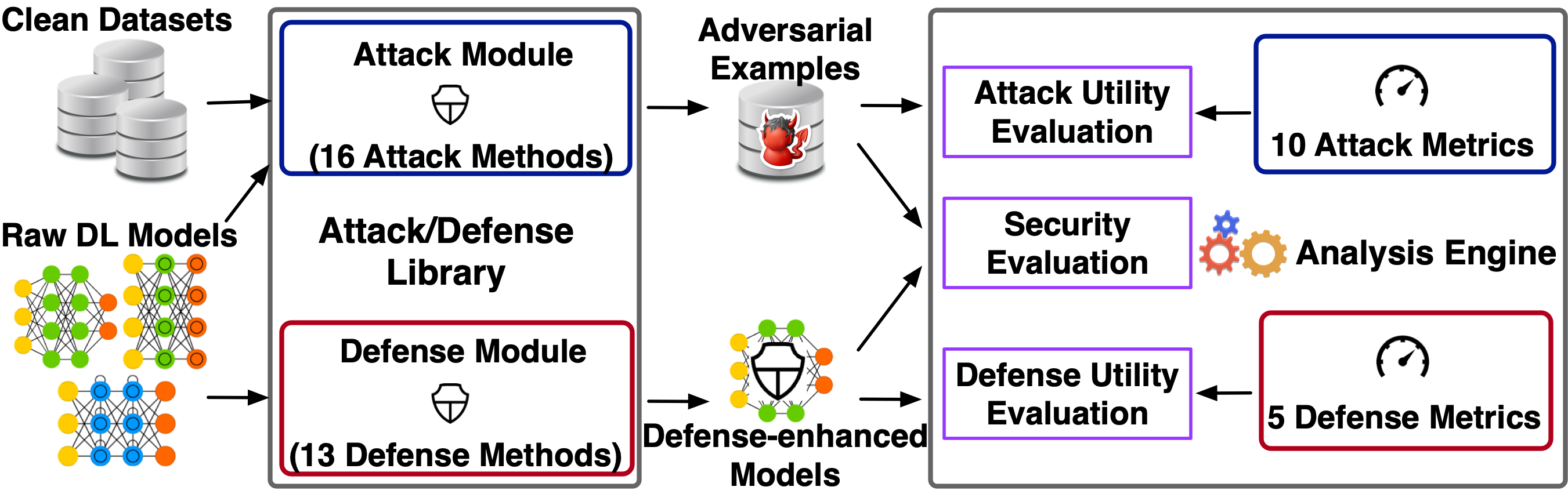

DEEPSEC is the first implemented uniform platform for evaluating and securing deep learning models. It comprehensively and systematically integrates state-of-the-art adversarial attacks, defenses, and the utility metrics related to them.

Xiang Ling, Shouling Ji, Jiaxu Zou, Jiannan Wang, Chunming Wu, Bo Li and Ting Wang, DEEPSEC: A Uniform Platform for Security Analysis of Deep Learning Models, IEEE S&P, 2019

- RawModels/ contains the code used to train the models and the trained models that will be attacked;
- CleanDatasets/ contains the code used to randomly select the clean samples to be attacked;
- Attacks/ contains the implementations of the attack algorithms;
- AdversarialExampleDatasets/ holds the collections of adversarial examples generated by each kind of attack;
- Defenses/ contains the implementations of the defense algorithms;
- DefenseEnhancedModels/ holds the collections of defense-enhanced (re-trained) models;
- Evaluations/ contains the code used to evaluate the utility of attacks and defenses, and the security performance of defenses against attacks.

Make sure you have installed all of the following packages or libraries (including their dependencies, if necessary) on your machine:

- PyTorch 0.4

- TorchVision 0.2

- numpy, scipy, PIL, skimage, tqdm ...

- Guetzli: A Perceptual JPEG Compression Tool
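A quick way to check the Python packages listed above is to ask the interpreter whether each one can be located. The snippet below is a minimal sketch, not part of DEEPSEC: the helper name `find_missing` is hypothetical, the module names are the usual import names for the listed packages, and it checks neither version numbers nor Guetzli, which is a separate command-line tool.

```python
import importlib.util

# Usual import names for the Python packages listed above.
# Guetzli is a standalone tool, so it is not covered by this check.
REQUIRED_MODULES = ["torch", "torchvision", "numpy", "scipy", "PIL", "skimage", "tqdm"]


def find_missing(modules=REQUIRED_MODULES):
    """Return the names of modules that cannot be located on this machine."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


missing = find_missing()
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All listed Python packages were found.")
```

An empty result only means the packages are importable; it does not confirm that the installed versions match the ones listed.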

We mainly employ two benchmark datasets: MNIST and CIFAR10.

We first train and save the deep learning models for MNIST and CIFAR10 in ./RawModels/, and then randomly select and save the clean samples that will be attacked in ./CleanDatasets/.
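The random selection step can be illustrated with a small, reproducible index sampler. This is a sketch under stated assumptions rather than the repository's code: the function name `select_clean_indices`, the seed, and the sample count of 1000 are all hypothetical, and any additional filtering the real scripts may apply is not modeled.

```python
import random


def select_clean_indices(dataset_size, num_samples, seed=0):
    """Pick `num_samples` distinct indices uniformly at random."""
    if num_samples > dataset_size:
        raise ValueError("cannot select more samples than the dataset holds")
    rng = random.Random(seed)  # local generator, so global random state is untouched
    return sorted(rng.sample(range(dataset_size), num_samples))


# Hypothetical example: choose 1000 of the 10000 MNIST test images.
indices = select_clean_indices(dataset_size=10000, num_samples=1000, seed=0)
print(len(indices), "indices selected")
```

Fixing the seed means the same clean samples are chosen on every run, which keeps later attack and defense comparisons on an identical set of inputs.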

Taking the trained models and the clean samples as input, we generate the corresponding adversarial examples for each kind of adversarial attack that we have implemented in ./Attacks/, and we save these adversarial examples in ./AdversarialExampleDatasets/.
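To make the "model plus clean sample in, adversarial example out" idea concrete, here is a toy illustration using the fast gradient sign method (FGSM) against a two-feature logistic-regression model. It is chosen only because it is short and well known; it is not taken from ./Attacks/, and the function names, weights, and epsilon value are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, weights, bias):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def fgsm_logistic(x, y, weights, bias, epsilon):
    """One FGSM step; x holds features in [0, 1], y is the true label (0 or 1)."""
    p = predict_proba(x, weights, bias)
    # Gradient of the cross-entropy loss with respect to each input feature.
    grad = [(p - y) * w for w in weights]
    sign = [(g > 0) - (g < 0) for g in grad]
    # Step in the direction that increases the loss, then clip to the valid range.
    return [min(1.0, max(0.0, xi + epsilon * s)) for xi, s in zip(x, sign)]


# Hypothetical toy model and clean sample with true label 1.
weights, bias = [2.0, -1.0], 0.0
x_clean, y_true = [0.6, 0.2], 1
x_adv = fgsm_logistic(x_clean, y_true, weights, bias, epsilon=0.4)

print("clean prediction:", int(predict_proba(x_clean, weights, bias) > 0.5))
print("adversarial prediction:", int(predict_proba(x_adv, weights, bias) > 0.5))
```

With these toy values the clean sample is classified as 1 and the perturbed sample as 0, while every feature moves by at most epsilon.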

With the defense parameter settings, we obtain the corresponding defense-enhanced model from the original model for each kind of defense method that we have implemented in ./Defenses/, and we save those re-trained defense-enhanced models in ./DefenseEnhancedModels/.

After generating the adversarial examples and obtaining the defense-enhanced models, we evaluate the utility performance of the attacks and the defenses, respectively.

In addition, we can test the security performance of defenses against attacks, i.e., whether or to what extent the state-of-the-art defenses can defend against the attacks. All code can be found in ./Evaluations/.
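One simple way to express "to what extent a defense holds up" is to compare how often adversarial examples are misclassified by the original model and by the defense-enhanced model. The sketch below assumes untargeted attacks and uses made-up labels and predictions; the function name `misclassification_rate` is hypothetical and this is not the code in ./Evaluations/.

```python
def misclassification_rate(predictions, true_labels):
    """Fraction of examples whose predicted label differs from the true label."""
    if len(predictions) != len(true_labels):
        raise ValueError("predictions and true_labels must have the same length")
    if not true_labels:
        raise ValueError("at least one example is required")
    wrong = sum(p != t for p, t in zip(predictions, true_labels))
    return wrong / len(true_labels)


# Made-up labels and predictions on the same five adversarial examples.
true_labels = [3, 1, 4, 1, 5]
raw_model_preds = [8, 1, 9, 7, 5]       # original model
defended_model_preds = [3, 1, 9, 1, 5]  # defense-enhanced model

print("raw model:", misclassification_rate(raw_model_preds, true_labels))
print("defended model:", misclassification_rate(defended_model_preds, true_labels))
```

A lower rate for the defense-enhanced model on the same adversarial examples indicates that the defense blocks part of the attack.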

If you want to contribute new algorithms or updated implementations of existing algorithms, please let us know.

UPDATE: All code has been restructured for better readability and adaptability.