[Paper, ICCV 2019] [Presentation Video]

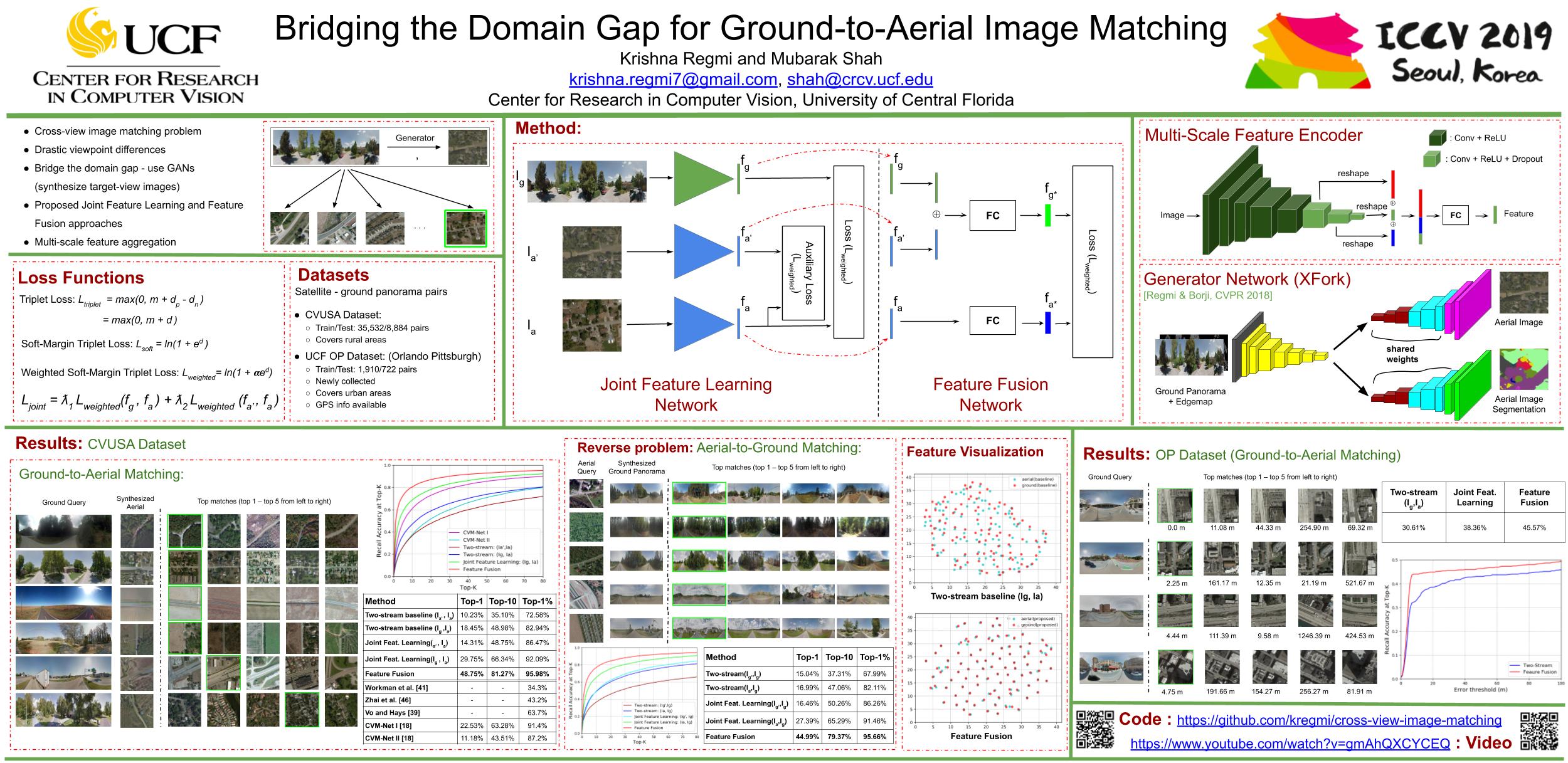

The visual entities in cross-view (e.g., ground and aerial) images exhibit drastic domain changes due to the differences in the viewpoints from which each set of images is captured. Existing state-of-the-art methods address the problem by learning view-invariant image descriptors. We propose a novel method for solving this task by exploiting the generative powers of conditional GANs to synthesize an aerial representation of a ground-level panorama query and use it to minimize the domain gap between the two views. Because the synthesized image is from the same view as the reference (target) image, it helps the network preserve important cues in aerial images following our Joint Feature Learning approach. We fuse the complementary features from a synthesized aerial image with the original ground-level panorama features to obtain a robust query representation. In addition, we employ multi-scale feature aggregation to preserve image representations at different scales, which is useful for solving this complex task. Experimental results show that our proposed approach performs significantly better than the state-of-the-art methods on the challenging CVUSA dataset in terms of top-1 and top-1% retrieval accuracies. Furthermore, we evaluate the generalization of the proposed method for urban landscapes on our newly collected cross-view localization dataset with geo-reference information.

Code to synthesize cross-view images, i.e., generate an aerial image for a given ground panorama and vice versa. The code is borrowed from cross-view image synthesis and is implemented in Torch (Lua). Refer to the repo for basic instructions on how to get started with the code.

Code to train the two-stream baseline network.

Code to jointly learn the features for the ground panorama (query), the aerial image synthesized from the query, and the real aerial images. (The synthesized aerial images need to be generated first.)

Code to learn fused representations of the ground panorama and the aerial image synthesized from it, yielding a robust query descriptor, together with the aerial image descriptor. The two descriptors are then used for image matching.

The image matching code is partly borrowed from cvmnet and is implemented in TensorFlow.
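As a rough illustration of how a fused query descriptor can be matched against aerial descriptors, the following minimal sketch concatenates a ground-panorama feature with a synthesized-aerial feature and retrieves the nearest aerial descriptor. It is not the repository's implementation: the trained network learns its fusion, whereas plain concatenation followed by L2 normalization is used here only as a stand-in, and all function names (`l2_normalize`, `fuse_query`, `best_match`) and the toy vectors are hypothetical. Plain Python lists keep the example dependency-free; real features would normally be arrays.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def fuse_query(ground_feat, synth_aerial_feat):
    """Hypothetical fusion: concatenate the two normalized features, then renormalize.

    Assumption: concatenation stands in for the learned fusion layers.
    """
    fused = l2_normalize(ground_feat) + l2_normalize(synth_aerial_feat)
    return l2_normalize(fused)


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def best_match(query, aerial_descriptors):
    """Return the index of the aerial descriptor closest to the query."""
    return min(
        range(len(aerial_descriptors)),
        key=lambda i: squared_distance(query, aerial_descriptors[i]),
    )


# Toy features (made up for illustration only).
ground = [3.0, 4.0]   # feature of the ground panorama
synth = [0.0, 2.0]    # feature of the aerial image synthesized from it
query = fuse_query(ground, synth)

aerial_descriptors = [
    l2_normalize([1.0, 0.0, 0.0, 0.0]),
    l2_normalize([0.6, 0.8, 0.0, 1.0]),  # constructed to coincide with the query
    l2_normalize([0.0, 0.0, 1.0, 0.0]),
]
match_index = best_match(query, aerial_descriptors)
print(match_index)
```

Normalizing each stream before concatenation keeps either feature from dominating the distance purely because of its magnitude; whether the released models do this is not stated here.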

The original datasets are available here:

Pretrained models can be downloaded individually here: [xview-synthesis] [two-stream] [joint_feature_learning] [feature_fusion]

All these models can be downloaded at once using this link (~ 4.2 GB). [CVUSA Pretrained Models]

Coming soon...

For ease of comparison of our method by future researchers on CVUSA and CVACT datasets, we provide the following:

Feature files for the test set of CVUSA: [CVUSA Test Features]

We also conducted experiments on [CVACT Dataset] and provide the feature files here: [CVACT Test Features]
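For comparisons based on such feature files, the top-1 and top-1% retrieval accuracies mentioned in the abstract can be computed along the following lines. This is a minimal sketch under stated assumptions, not the evaluation script used for the paper: it assumes that query `i` and reference `i` form the ground-truth pair, that descriptors are compared by Euclidean distance, and that the top-1% cutoff is the reference count divided by 100 and rounded up (implementations differ on this rounding). The function names and the toy descriptors are hypothetical, and loading the actual feature files is left out because their format is not described here.

```python
def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def rank_of_true_match(query, references, true_index):
    """Count the references that are strictly closer to the query than its true match."""
    true_dist = squared_distance(query, references[true_index])
    return sum(1 for ref in references if squared_distance(query, ref) < true_dist)


def top_k_accuracy(queries, references, k):
    """Fraction of queries whose true match is among the k nearest references.

    Assumption: the true match of queries[i] is references[i].
    """
    hits = sum(
        1
        for i, query in enumerate(queries)
        if rank_of_true_match(query, references, i) < k
    )
    return hits / len(queries)


def top_one_percent_accuracy(queries, references):
    """Top-k accuracy with k set to 1% of the reference set, rounded up (assumed convention)."""
    k = max(1, (len(references) + 99) // 100)
    return top_k_accuracy(queries, references, k)


# Toy descriptors (made up for illustration only).
references = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
queries = [[0.1, 0.0], [0.9, 0.1], [0.8, 0.9], [1.0, 0.9]]

top1 = top_k_accuracy(queries, references, 1)
top2 = top_k_accuracy(queries, references, 2)
top1_percent = top_one_percent_accuracy(queries, references)
print(top1, top2, top1_percent)
```

In the toy data the third query lies closer to the fourth reference than to its own, so it counts as a miss at top-1 and as a hit at top-2.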

If you find our work useful for your research, please cite the following papers:

- Bridging the Domain Gap for Ground-to-Aerial Image Matching, ICCV 2019 pdf, bibtex
- Cross-View Image Synthesis Using Conditional GANs, CVPR 2018 pdf, bibtex
- Cross-view image synthesis using geometry-guided conditional GANs, CVIU 2019 pdf, bibtex

Please contact: 'krishna.regmi7@gmail.com'