Use this repository to deploy Tanzu Kubernetes Grid (TKG) to vSphere 6.7u3 with Terraform.

This repository is also compatible with Tanzu Community Edition (TCE), the open source version of TKG.

Download the TKG components to your workstation. The following components are required:

- Tanzu CLI for Linux: includes the

tanzuCLI used to operate TKG and workload clusters from the jumpbox VM - OS node OVA: used for TKG nodes (based on Photon OS and Ubuntu)

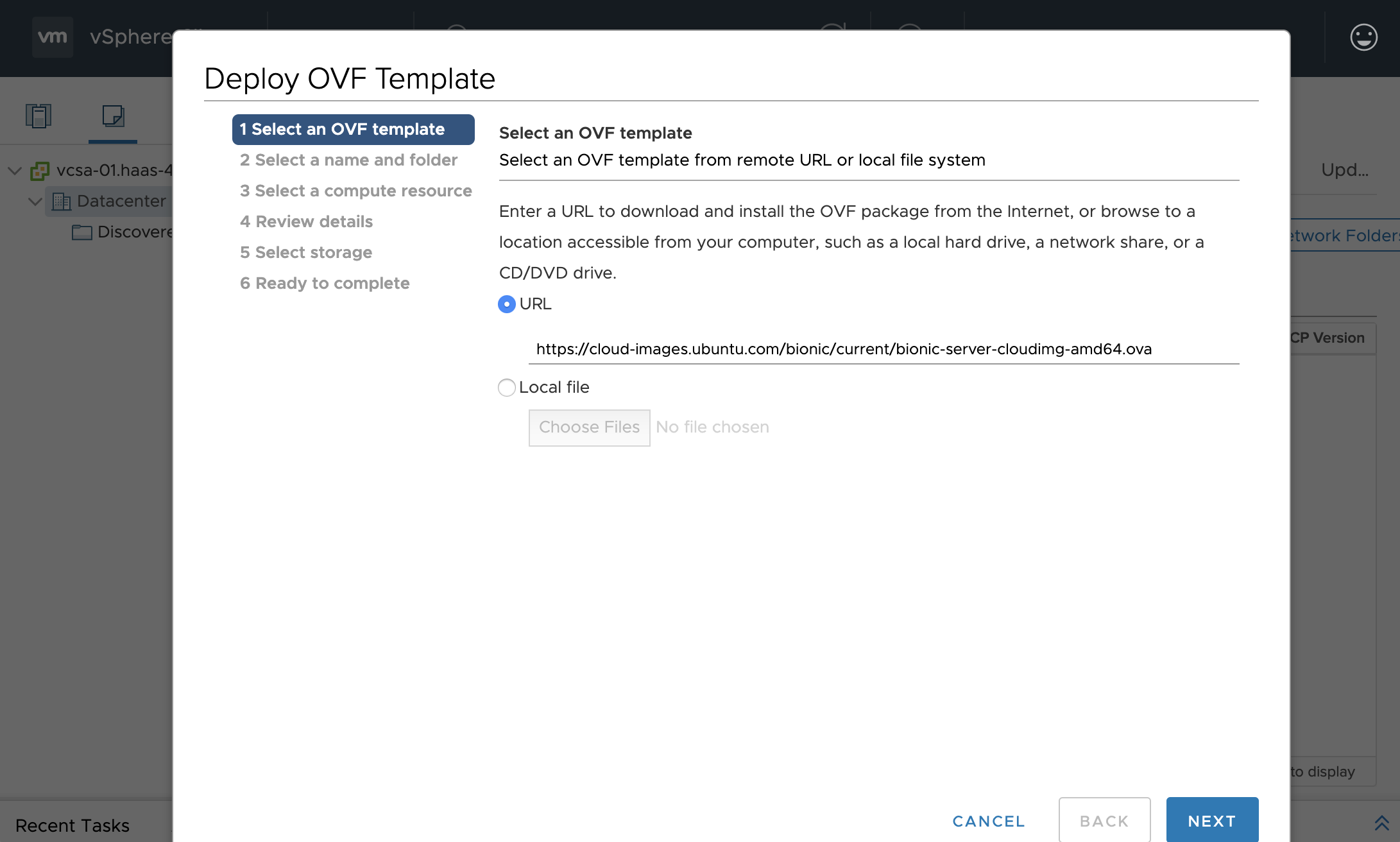

- Ubuntu server cloud image OVA: used for the jumpbox VM

Make sure to copy the Tanzu CLI archive (`tanzu-cli-bundle-*.tar`) to this repository directory.

First, make sure DHCP is enabled on the network used by TKG: this service is required for all TKG nodes.

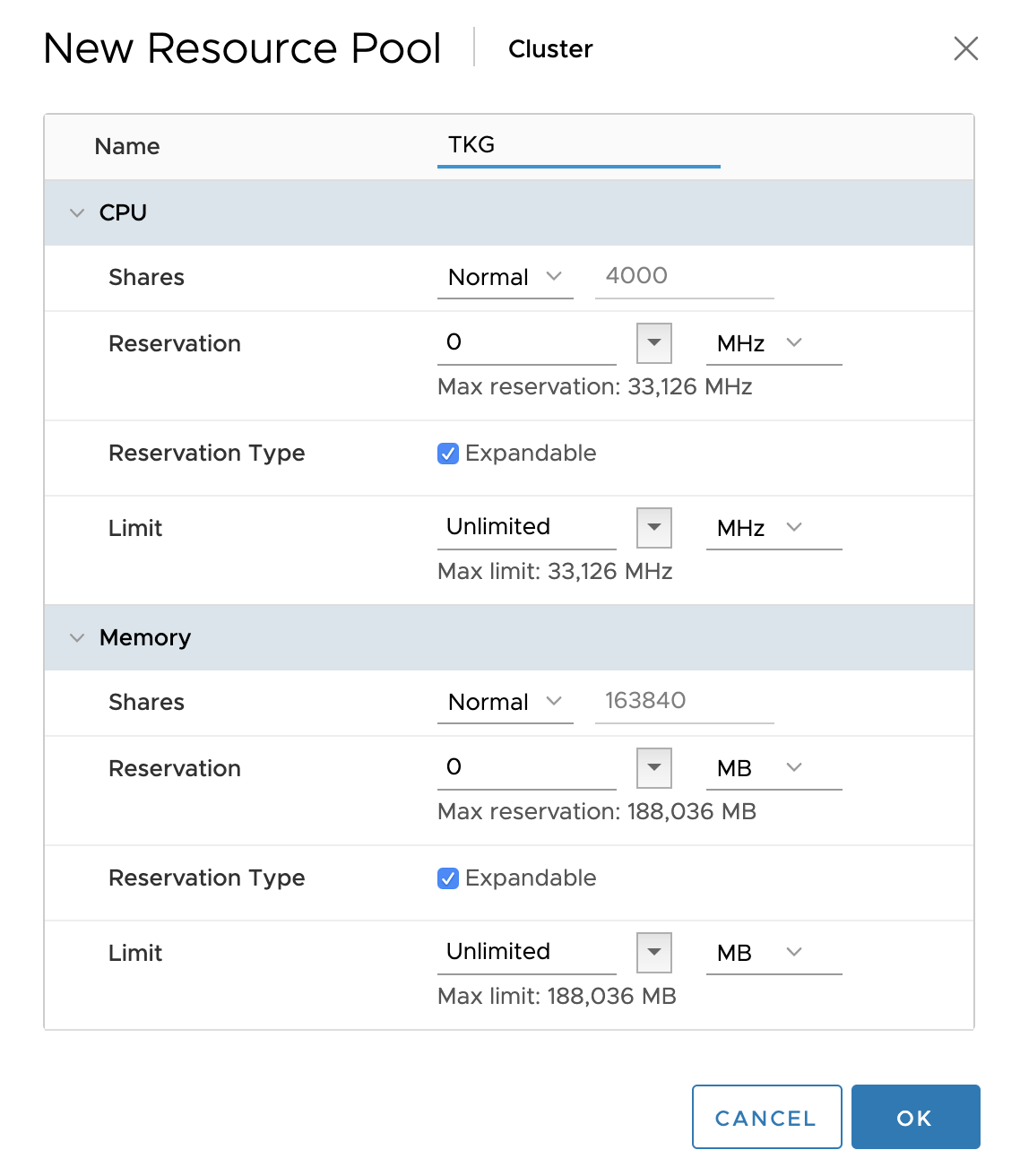

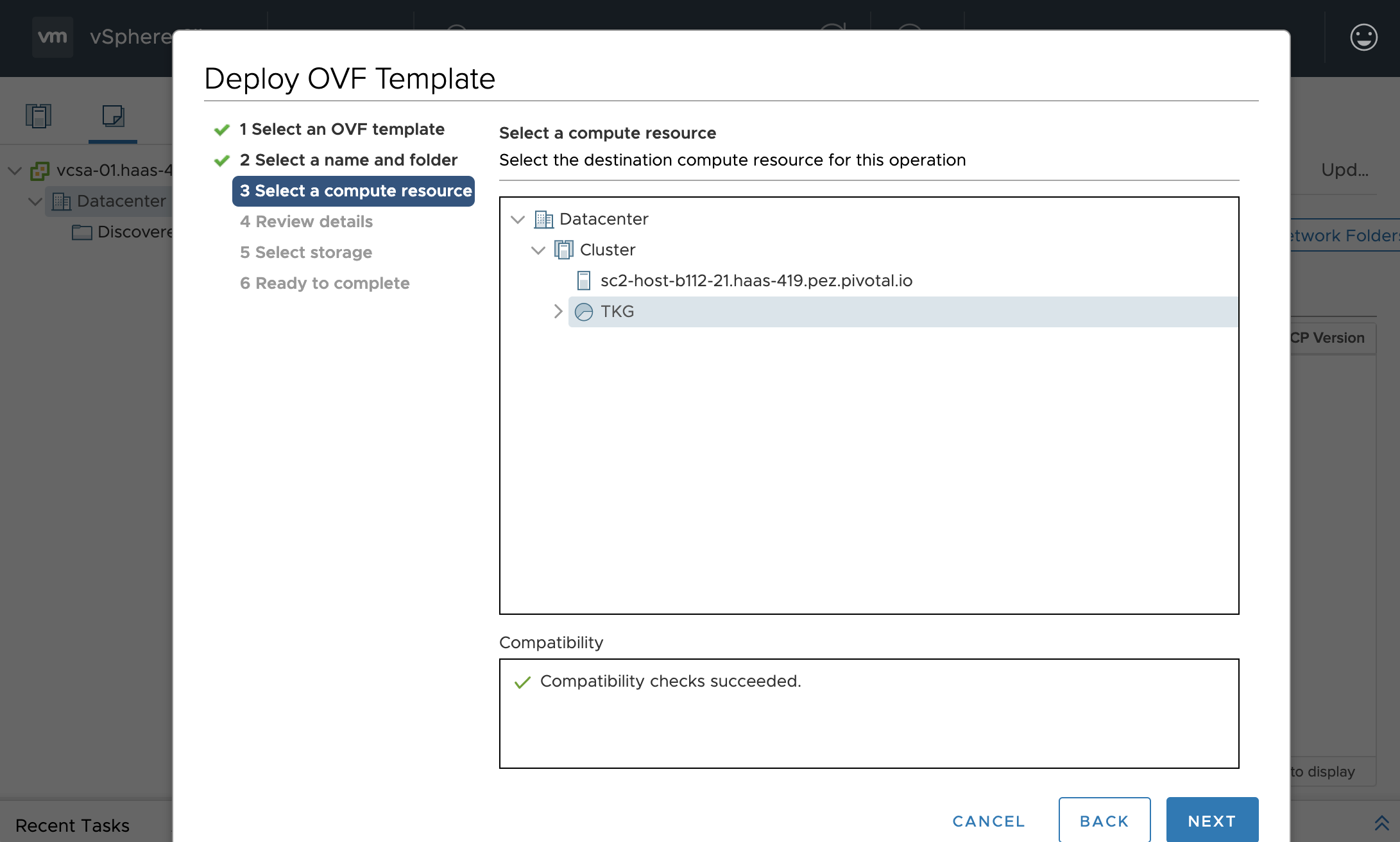

Create a resource pool named `TKG` under the vSphere cluster where TKG will be deployed.

All TKG VMs will be deployed to this resource pool.

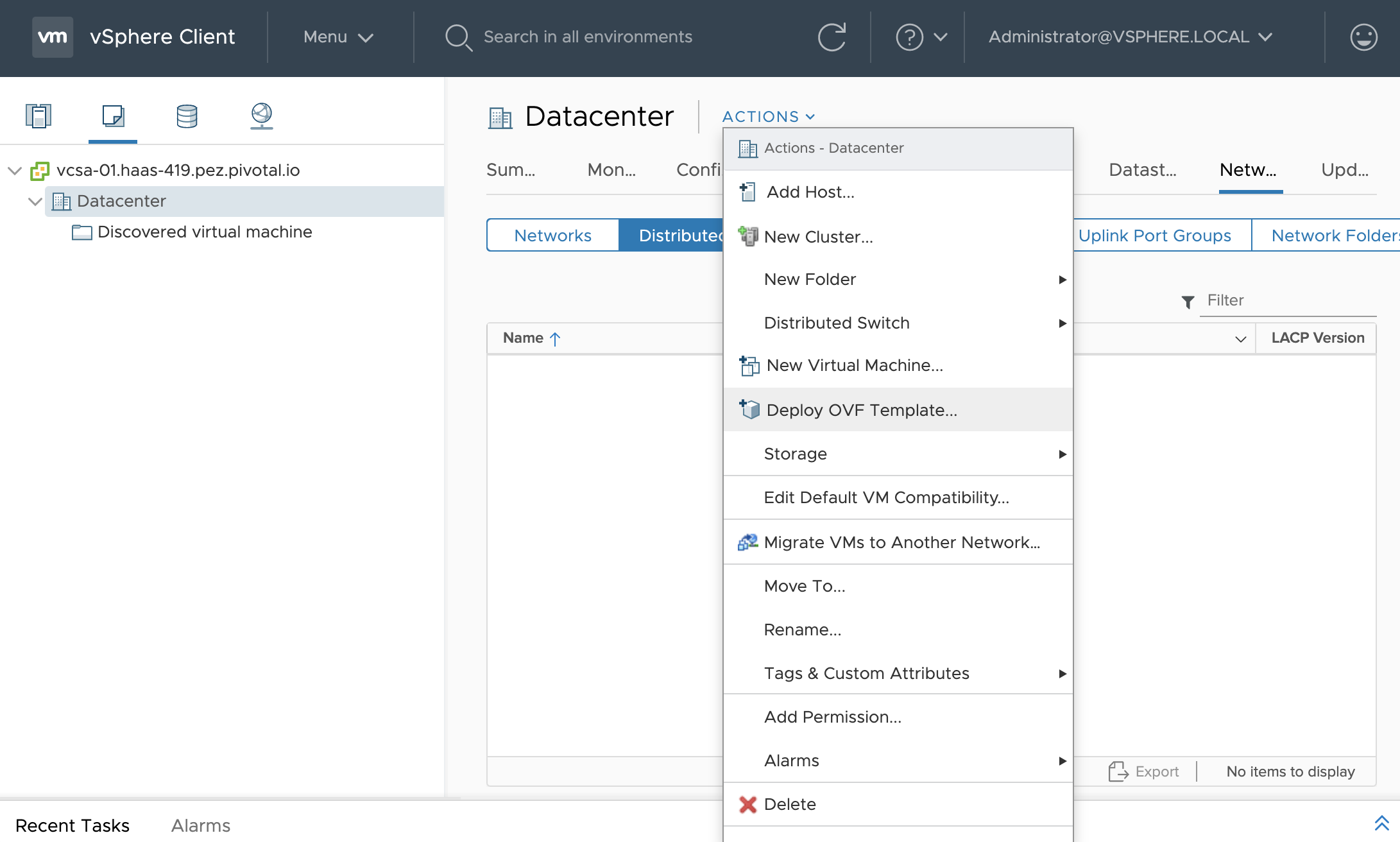

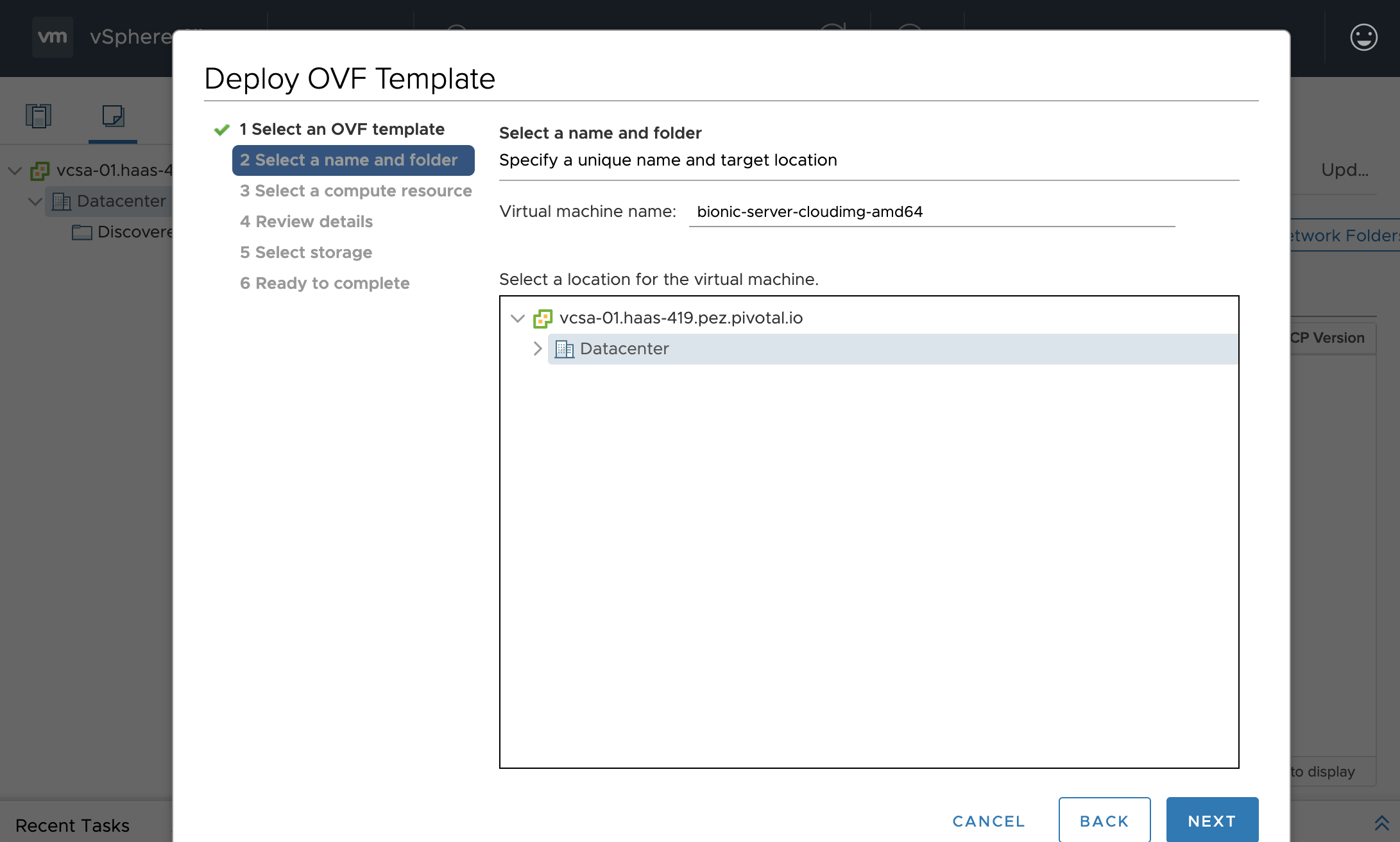

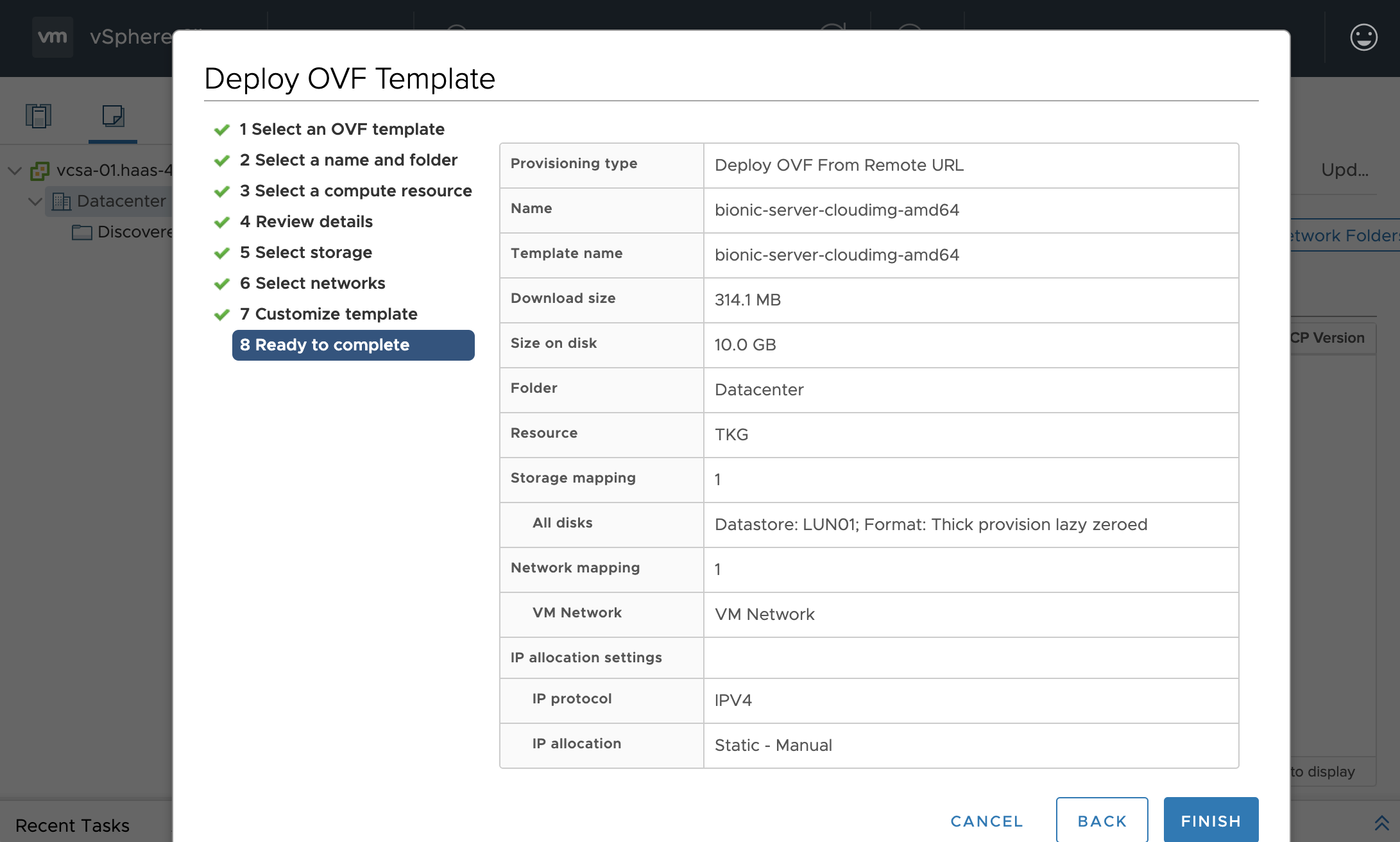

You need to deploy each OVA file to vSphere using the Deploy OVF Template wizard.

Repeat the next steps for each OVA file:

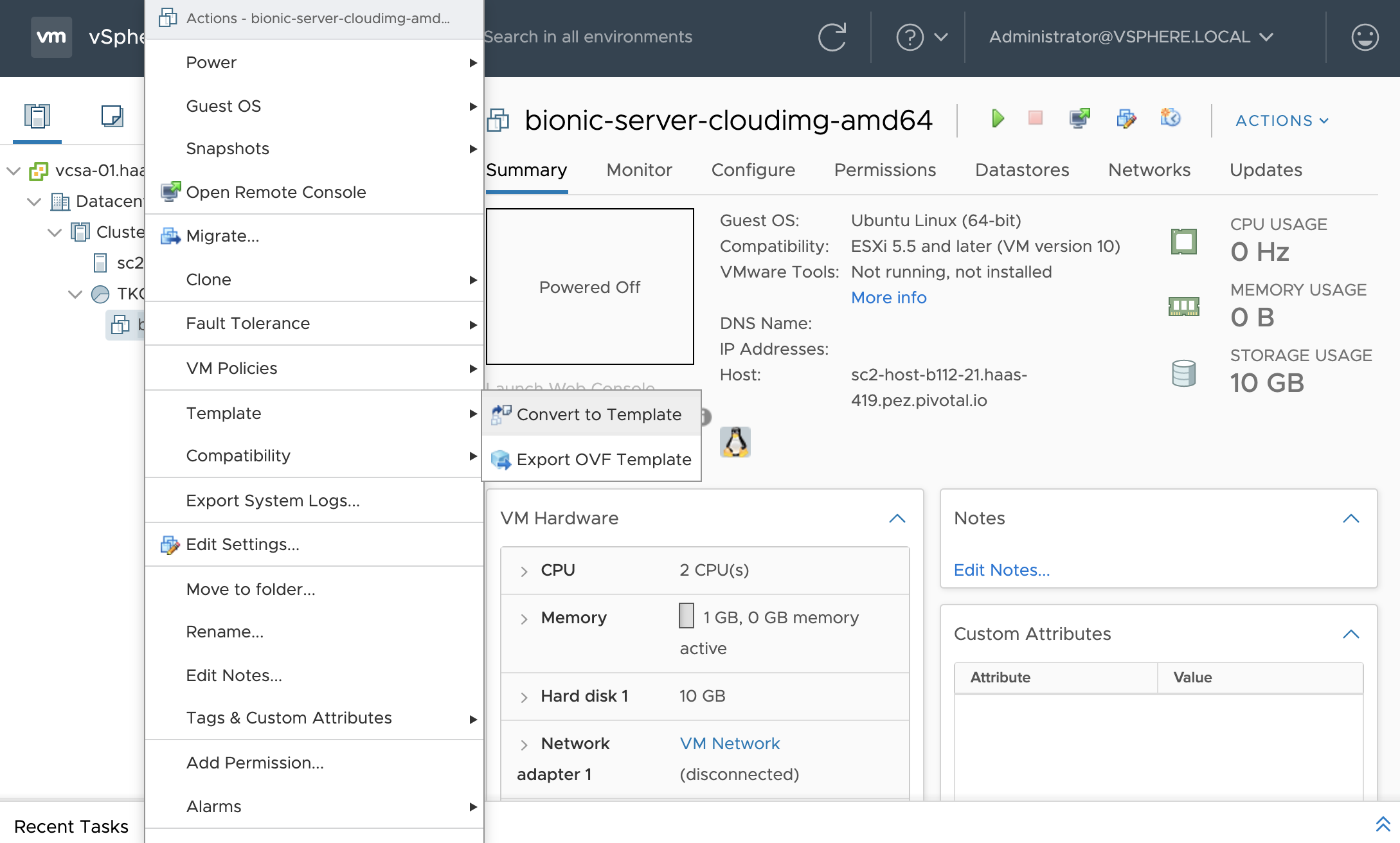

Each OVA file is uploaded to your vSphere instance as a new VM. Do not power on any of these VMs.

You can now convert each of these VMs to a template (in the vSphere Client, right-click the VM and select Template > Convert to Template).

Starting from `terraform.tfvars.tpl`, create a new file named `terraform.tfvars`:

```hcl
vsphere_password = "changeme"
vsphere_server = "vcsa.mydomain.com"
network = "net"
datastore_url = "ds:///vmfs/volumes/changeme/"

# Management control plane endpoint.
control_plane_endpoint = "192.168.100.1"
```

As specified in the TKG documentation, you need to use a static IP address for the control plane endpoint of the management cluster. Make sure this IP address is in the same subnet as the DHCP range, but not inside the DHCP range.
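The subnet rule above can be checked before running Terraform. The following shell sketch assumes you know the subnet in CIDR notation and the first and last addresses of your DHCP range; `check_endpoint` and `ip_to_int` are hypothetical helper names, and the example addresses are illustrative only.

```shell
# Sketch: check that a static control plane endpoint is inside the subnet
# of the DHCP range but outside the DHCP range itself.
# Helper names and example addresses are hypothetical.
# Assumes dotted-quad IPv4 addresses without leading zeros in any octet
# (shell arithmetic would read "010" as an octal number).

# Convert "a.b.c.d" to a 32-bit integer.
ip_to_int() {
  local old_ifs=$IFS
  IFS=.
  set -- $1          # split the address on dots into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Usage: check_endpoint ENDPOINT SUBNET_CIDR DHCP_START DHCP_END
# Prints "ok" and returns 0 if ENDPOINT is a valid static address.
check_endpoint() {
  local ip net prefix mask start end
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Network mask for the prefix length, kept within 32 bits.
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  start=$(ip_to_int "$3")
  end=$(ip_to_int "$4")

  if [ $(( ip & mask )) -ne $(( net & mask )) ]; then
    echo "error: $1 is not in subnet $2" >&2
    return 1
  fi
  if [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]; then
    echo "error: $1 is inside the DHCP range $3-$4" >&2
    return 1
  fi
  echo "ok"
}
```

For example, with a hypothetical DHCP range from 192.168.100.50 to 192.168.100.250, `check_endpoint 192.168.100.1 192.168.100.0/24 192.168.100.50 192.168.100.250` prints `ok`, while an address inside that range, or outside 192.168.100.0/24, is rejected.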

Please note that you can also use these Terraform scripts to deploy Tanzu Community Edition (TCE), the open source version of TKG.

All you need to do is copy the TCE bundle archive (`tce-linux-amd64-X.tar.gz`) to this directory and set this property in your `terraform.tfvars` file:

```hcl
tanzu_cli_file_name = "tce-linux-amd64-v0.9.1.tar.gz"
```

First, initialize Terraform with the required plugins:

```shell
$ terraform init
```

Run this command to create the jumpbox VM with Terraform:

```shell
$ terraform apply
```

Using the jumpbox VM, you'll be able to interact with TKG through the `tanzu` CLI.

You'll also use this jumpbox VM to connect to TKG nodes over SSH.

Deploying the jumpbox VM takes less than 5 minutes.

At the end of this process, you can retrieve the jumpbox IP address:

```shell
$ terraform output jumpbox_ip_address
10.160.28.120
```

You can connect to the jumpbox VM using the `ubuntu` account.
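Depending on your Terraform version, `terraform output` may print the address in double quotes: Terraform 0.14 and later render string outputs quoted, which breaks a command such as `ssh ubuntu@$(terraform output jumpbox_ip_address)`. On those versions, `terraform output -raw jumpbox_ip_address` prints the bare value. The following sketch works either way; `strip_quotes` and `jumpbox_ip` are hypothetical helper names, not part of this repository.

```shell
# Sketch: read the jumpbox IP address from Terraform without quotes.
# Terraform 0.14 and later print string outputs in double quotes; older
# versions print the bare value. Deleting quote characters handles both.
# strip_quotes and jumpbox_ip are hypothetical helper names.

# Remove every double quote character from standard input.
strip_quotes() {
  tr -d '"'
}

# Print the jumpbox IP address (run from this repository's directory,
# where the Terraform state lives).
jumpbox_ip() {
  terraform output jumpbox_ip_address | strip_quotes
}
```

With these functions defined in your shell, `ssh "ubuntu@$(jumpbox_ip)"` connects to the jumpbox VM.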

Connect to the jumpbox VM using SSH:

```shell
$ ssh ubuntu@$(terraform output jumpbox_ip_address)
```

A default configuration for the management cluster has been generated on the jumpbox VM in the file `$HOME/.config/tanzu/tkg/clusterconfigs/mgmt-cluster-config.yaml`.

You may want to edit this file before creating the management cluster.

Create the TKG management cluster:

```shell
$ tanzu management-cluster create --file $HOME/.config/tanzu/tkg/clusterconfigs/mgmt-cluster-config.yaml
```

This process takes less than 10 minutes.

You can now create workload clusters.

Create a cluster configuration file in `$HOME/.config/tanzu/tkg/clusterconfigs`, for example `dev01-cluster-config.yaml`.

You may reuse the content of the management cluster configuration file, adjusting the cluster name, the plan and the control plane endpoint (do not pick the IP address used for the management cluster!):

```yaml
CLUSTER_NAME: dev01
CLUSTER_PLAN: dev
VSPHERE_CONTROL_PLANE_ENDPOINT: 192.168.100.10
```

Create the workload cluster:

```shell
$ tanzu cluster create --file $HOME/.config/tanzu/tkg/clusterconfigs/dev01-cluster-config.yaml
```

This process takes less than 5 minutes.
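Instead of editing the workload cluster configuration file by hand, you could derive it from the management cluster file. The sketch below is a hypothetical helper (`derive_cluster_config`, not part of this repository or the `tanzu` CLI); it assumes one `KEY: value` entry per line, only rewrites keys that already exist in the source file, and refuses to reuse the management cluster control plane endpoint.

```shell
# Sketch: derive a workload cluster configuration file from the management
# cluster configuration file, overriding the cluster name, plan and control
# plane endpoint. derive_cluster_config is a hypothetical helper name.
# Assumes one "KEY: value" entry per line; keys missing from the source
# file are NOT added to the destination file.
# Usage: derive_cluster_config SRC DST NAME PLAN ENDPOINT
derive_cluster_config() {
  local src=$1 dst=$2 name=$3 plan=$4 endpoint=$5 mgmt_endpoint

  # Read the management cluster endpoint (quotes removed, if any).
  mgmt_endpoint=$(sed -n 's/^VSPHERE_CONTROL_PLANE_ENDPOINT:[[:space:]]*//p' "$src" | tr -d '"')

  # Refuse to reuse the management cluster control plane endpoint.
  if [ "$mgmt_endpoint" = "$endpoint" ]; then
    echo "error: $endpoint is already used by the management cluster" >&2
    return 1
  fi

  # Copy the source file, rewriting the three cluster-specific keys.
  sed -e "s|^CLUSTER_NAME:.*|CLUSTER_NAME: $name|" \
      -e "s|^CLUSTER_PLAN:.*|CLUSTER_PLAN: $plan|" \
      -e "s|^VSPHERE_CONTROL_PLANE_ENDPOINT:.*|VSPHERE_CONTROL_PLANE_ENDPOINT: $endpoint|" \
      "$src" > "$dst"
}
```

For example, `derive_cluster_config $HOME/.config/tanzu/tkg/clusterconfigs/mgmt-cluster-config.yaml $HOME/.config/tanzu/tkg/clusterconfigs/dev01-cluster-config.yaml dev01 dev 192.168.100.10` writes a file you can then pass to `tanzu cluster create --file`. Review the generated file before using it, since other settings are copied unchanged.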

Create a kubeconfig file to access your workload cluster:

```shell
$ tanzu cluster kubeconfig get dev01 --admin --export-file dev01.kubeconfig
```

You can now use this file to access your workload cluster:

```shell
$ KUBECONFIG=dev01.kubeconfig kubectl get nodes
NAME                          STATUS   ROLES    AGE     VERSION
dev01-control-plane-r5nwl     Ready    master   10m     v1.17.3+vmware.2
dev01-md-0-65bc768c89-xjn7h   Ready    <none>   9m44s   v1.17.3+vmware.2
```

Copy this file to your workstation to access the cluster without going through the jumpbox VM.

Tip: use this command to merge two or more kubeconfig files:

```shell
$ KUBECONFIG=dev01.kubeconfig:dev02.kubeconfig kubectl config view --flatten > merged.kubeconfig
```

You can connect to a TKG node using this command (from the jumpbox VM):

```shell
$ ssh capv@node_ip_address
```

Use this command to add more worker nodes to your workload cluster (from the jumpbox VM):

```shell
$ tanzu cluster scale dev01 --worker-machine-count 3
```

The workload cluster you created already includes the vSphere CSI driver.

The only thing you need to do is apply a storage class definition that designates the vSphere datastore to use when creating Kubernetes persistent volumes.

Use the generated file `vsphere-storageclass.yml`:

```shell
$ KUBECONFIG=dev01.kubeconfig kubectl apply -f vsphere-storageclass.yml
```

You're done with the TKG deployment. Enjoy!

Contributions are always welcome!

Feel free to open issues and send pull requests.

Copyright © 2022 VMware, Inc. or its affiliates.

This project is licensed under the Apache Software License version 2.0.