Aspect-Based Sentiment Analysis, PyTorch implementations.

- pytorch >= 0.4.0

- numpy >= 1.13.3

- sklearn

- python 3.6 / 3.7

- pytorch-transformers == 1.2.0

- See pytorch-transformers for more detail.

To install requirements, run `pip install -r requirements.txt`.

For non-BERT-based models, GloVe pre-trained word vectors are required (see data_utils.py for more detail).

- Download the pre-trained GloVe word vectors.

- Extract glove.twitter.27B.zip and glove.42B.300d.zip to the root directory.
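
To illustrate how the GloVe files are typically consumed, here is a minimal sketch that parses GloVe-style text lines (a token followed by its vector components) and builds an embedding matrix for a small vocabulary. The function names, the toy vocabulary, and the three-dimensional sample vectors are assumptions made for illustration; this is not necessarily how data_utils.py does it.

```python
import io


def load_glove_vectors(lines, vocab, dim):
    """Parse GloVe-style text lines, keeping only words present in `vocab`."""
    vectors = {}
    for line in lines:
        parts = line.rstrip().split(" ")
        # Take the last `dim` fields as the vector; whatever precedes is the token.
        word = " ".join(parts[:-dim])
        if word in vocab:
            vectors[word] = [float(x) for x in parts[-dim:]]
    return vectors


def build_embedding_matrix(word2idx, vectors, dim):
    """Row 0 is reserved for padding; words without a vector stay all-zero."""
    matrix = [[0.0] * dim for _ in range(len(word2idx) + 1)]
    for word, idx in word2idx.items():
        if word in vectors:
            matrix[idx] = vectors[word]
    return matrix


# Toy stand-in for an extracted GloVe file (hypothetical 3-dimensional vectors).
sample = io.StringIO("good 0.1 0.2 0.3\nbad -0.1 -0.2 -0.3\nfood 0.5 0.0 0.5\n")
word2idx = {"good": 1, "food": 2, "service": 3}
vectors = load_glove_vectors(sample, word2idx, dim=3)
embedding_matrix = build_embedding_matrix(word2idx, vectors, dim=3)
```

With a real file, `sample` would be replaced by an open file handle and `dim` by the dimensionality of the chosen vectors.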

`python train.py --model_name bert_spc --dataset restaurant`

See train.py for more training arguments.

Refer to train_k_fold_cross_val.py for k-fold cross validation support.
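
As a rough illustration of what k-fold cross validation involves, the sketch below splits sample indices into k folds and yields one train/validation pair per fold. The function name `k_fold_indices` and the sample count are hypothetical; train_k_fold_cross_val.py may organise its splits differently.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, val_idx) pairs; each sample is validated exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Deal the shuffled indices round-robin into k folds of near-equal size.
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        val_idx = folds[i]
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


splits = list(k_fold_indices(n_samples=10, k=5))
for fold, (train_idx, val_idx) in enumerate(splits):
    print(f"fold {fold}: {len(train_idx)} train / {len(val_idx)} val")
```

A model would be trained from scratch on each `train_idx` and evaluated on the matching `val_idx`, with the per-fold metrics averaged at the end.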

Please refer to infer_example.py for non-BERT models. Please refer to infer_example_bert_models.py for BERT models.

- For non-BERT-based models, the training procedure is not very stable.

- BERT-based models are more sensitive to hyperparameters (especially the learning rate) on small datasets; see this issue.

- Fine-tuning on the specific task is necessary to unlock the full power of BERT.

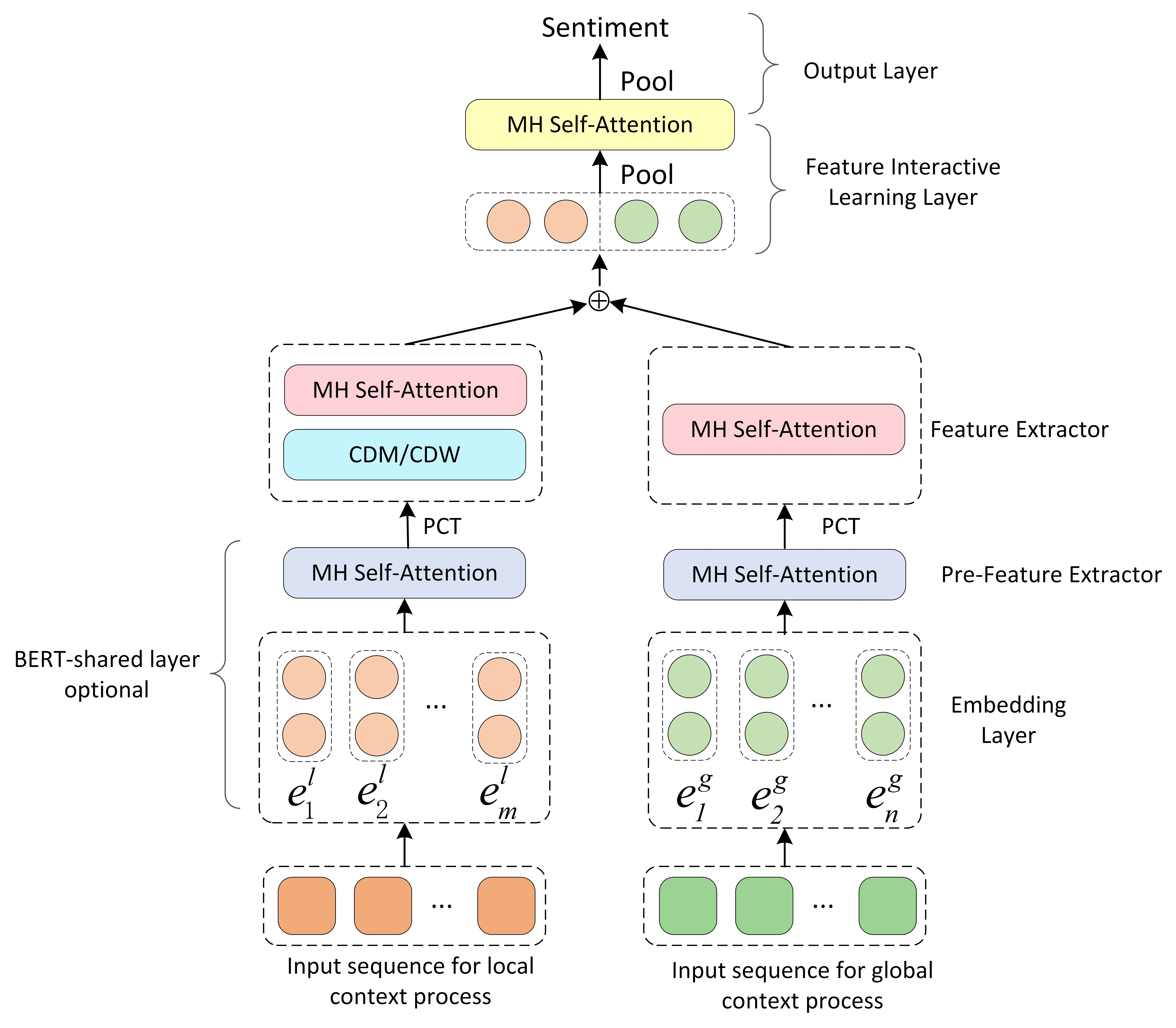

LCF-BERT (lcf_bert.py)

Zeng Biqing, Yang Heng, et al. "LCF: A Local Context Focus Mechanism for Aspect-Based Sentiment Classification." Applied Sciences. 2019, 9, 3389. [pdf]
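
The local context focus idea can be sketched as a per-token weighting driven by each token's distance from the aspect. The code below is a simplified illustration under stated assumptions, not the implementation in lcf_bert.py: distance is measured in tokens to the nearest aspect token, `alpha` is a hypothetical threshold, and the two weighting schemes follow one reading of the paper's context-features dynamic mask (CDM) and dynamic weighting (CDW).

```python
def semantic_relative_distance(n_tokens, aspect_start, aspect_len):
    """Token distance to the nearest aspect token (0 inside the aspect).

    Assumption: a simplified stand-in for the paper's distance measure.
    """
    aspect_end = aspect_start + aspect_len - 1
    return [max(aspect_start - i, i - aspect_end, 0) for i in range(n_tokens)]


def cdm_weights(srd, alpha):
    """Dynamic mask: keep tokens within `alpha` of the aspect, zero out the rest."""
    return [1.0 if d <= alpha else 0.0 for d in srd]


def cdw_weights(srd, alpha):
    """Dynamic weighting: tokens beyond `alpha` decay linearly with distance."""
    n = len(srd)
    return [1.0 if d <= alpha else 1.0 - (d - alpha) / n for d in srd]


tokens = "the food was great but the service was slow".split()
srd = semantic_relative_distance(len(tokens), aspect_start=6, aspect_len=1)
mask = cdm_weights(srd, alpha=2)
weights = cdw_weights(srd, alpha=2)
```

In a model, each weight would scale the corresponding token's hidden vector before the local features are combined with the global context features.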

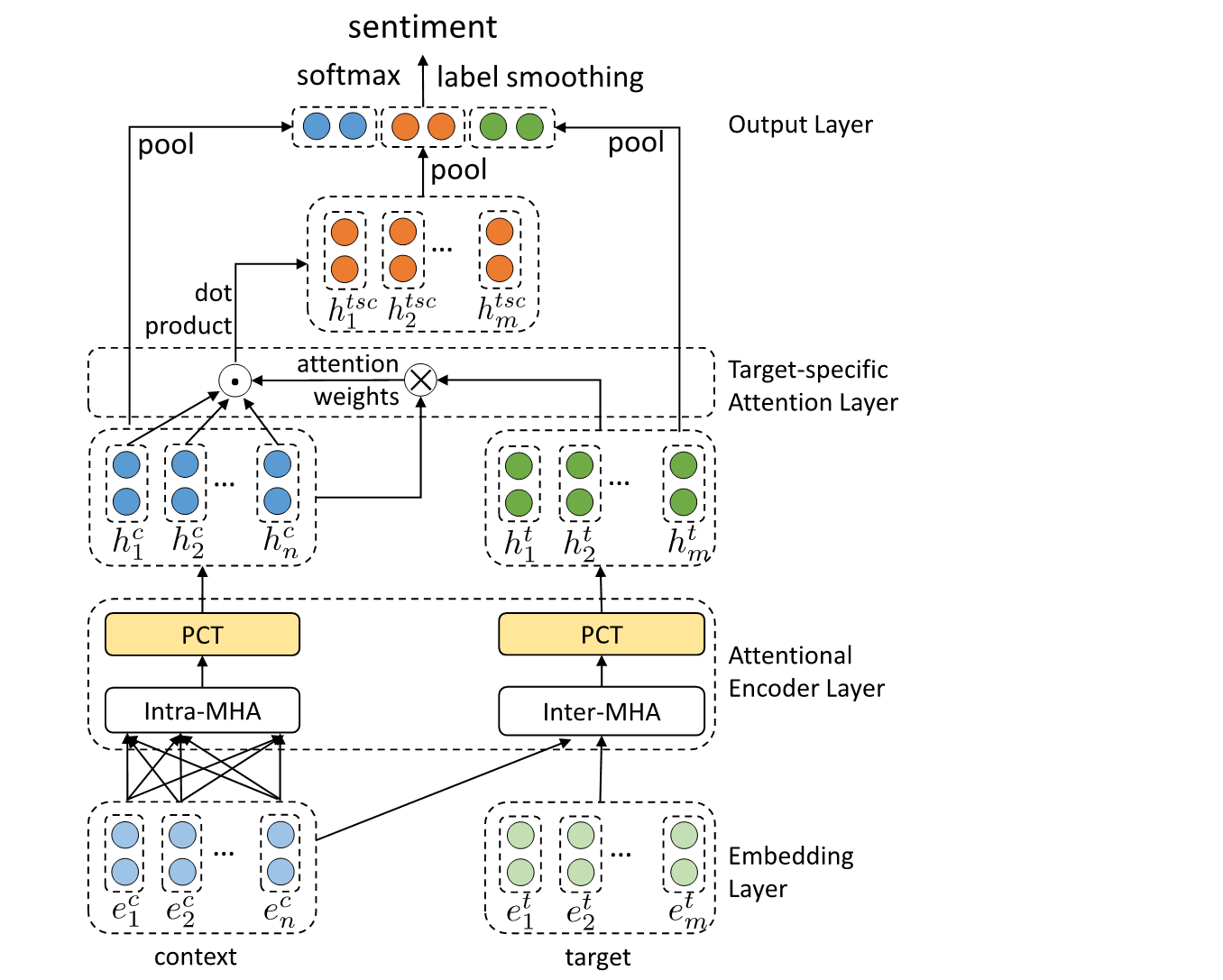

AEN-BERT (aen.py)

Song, Youwei, et al. "Attentional Encoder Network for Targeted Sentiment Classification." arXiv preprint arXiv:1902.09314 (2019). [pdf]

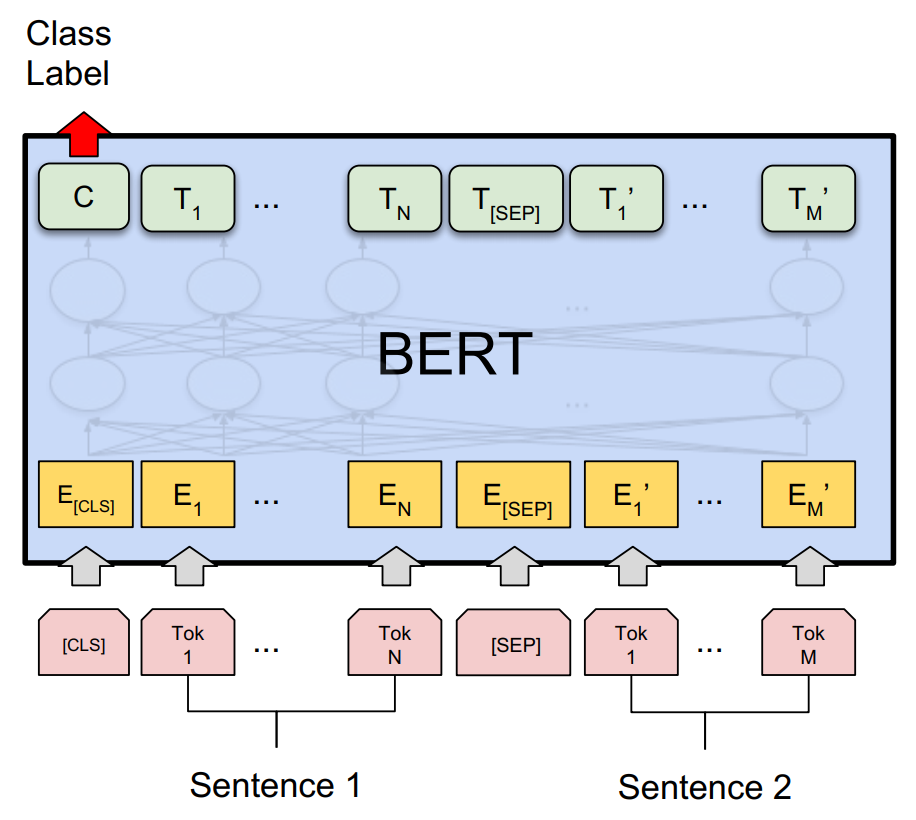

BERT for Sentence Pair Classification (bert_spc.py)

Devlin, Jacob, et al. "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding." arXiv preprint arXiv:1810.04805 (2018). [pdf]
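
For aspect-based sentiment, sentence pair classification typically means feeding the sentence and the aspect term to BERT as two segments. The sketch below shows that input layout only. Whitespace tokenization is an assumption made for brevity (a real pipeline would use BERT's WordPiece tokenizer), and `build_sentence_pair_input` is a hypothetical helper, not a function from bert_spc.py.

```python
def build_sentence_pair_input(sentence, aspect):
    """Lay out '[CLS] sentence [SEP] aspect [SEP]' with matching segment ids."""
    sent_tokens = sentence.lower().split()
    aspect_tokens = aspect.lower().split()
    tokens = ["[CLS]"] + sent_tokens + ["[SEP]"] + aspect_tokens + ["[SEP]"]
    # Segment 0 covers [CLS], the sentence, and the first [SEP];
    # segment 1 covers the aspect and the final [SEP].
    segment_ids = [0] * (len(sent_tokens) + 2) + [1] * (len(aspect_tokens) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_sentence_pair_input(
    "The battery life is great", "battery life"
)
```

The representation at the `[CLS]` position is then passed to a classification layer to predict the sentiment polarity.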

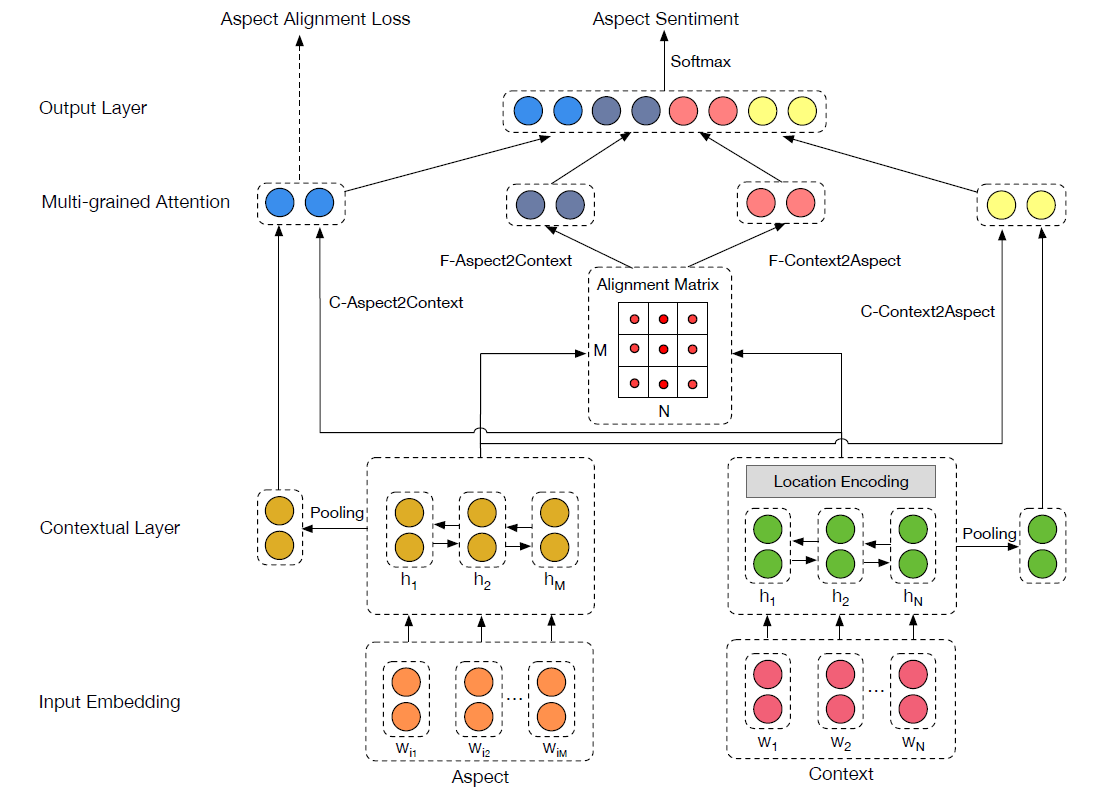

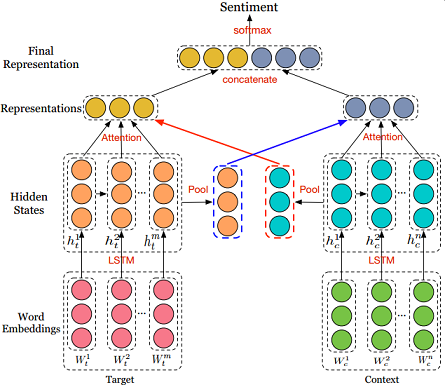

MGAN (mgan.py)

Fan, Feifan, et al. "Multi-grained Attention Network for Aspect-Level Sentiment Classification." Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. 2018. [pdf]

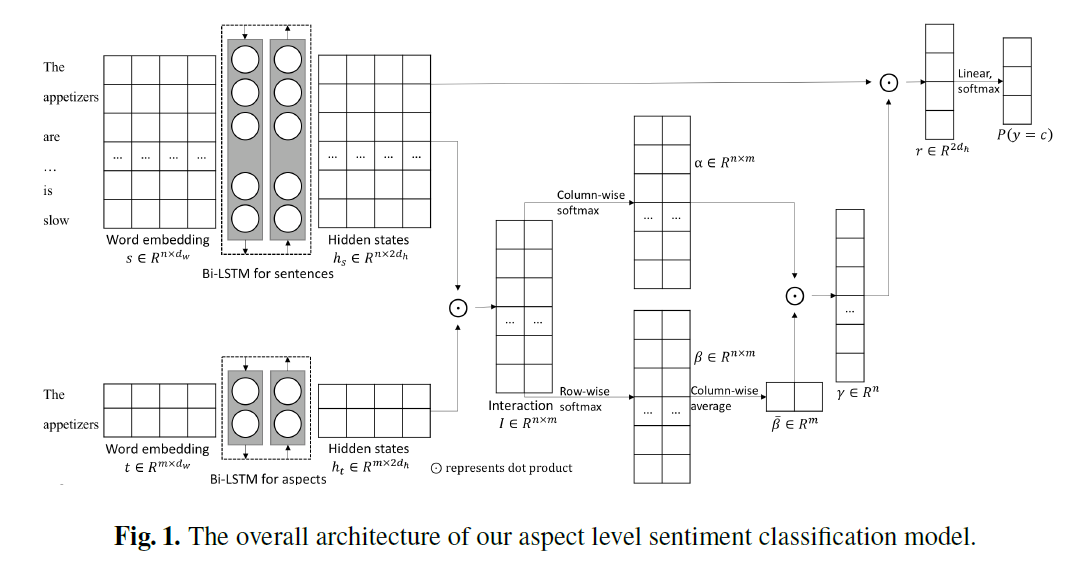

AOA (aoa.py)

Huang, Binxuan, et al. "Aspect Level Sentiment Classification with Attention-over-Attention Neural Networks." arXiv preprint arXiv:1804.06536 (2018). [pdf]

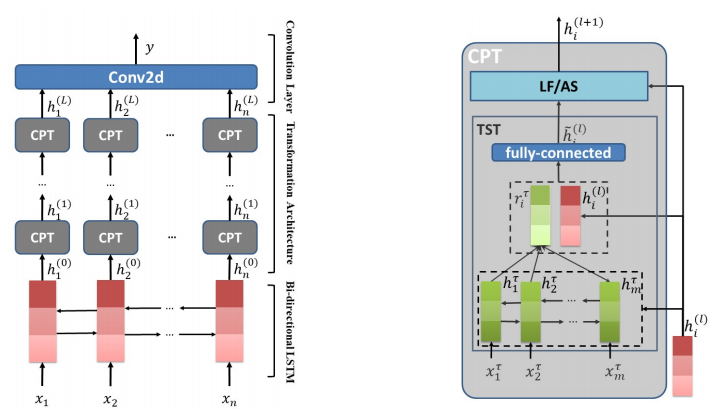

TNet (tnet_lf.py)

Li, Xin, et al. "Transformation Networks for Target-Oriented Sentiment Classification." arXiv preprint arXiv:1805.01086 (2018). [pdf]

Cabasc (cabasc.py)

Liu, Qiao, et al. "Content Attention Model for Aspect Based Sentiment Analysis." Proceedings of the 2018 World Wide Web Conference on World Wide Web. International World Wide Web Conferences Steering Committee, 2018.

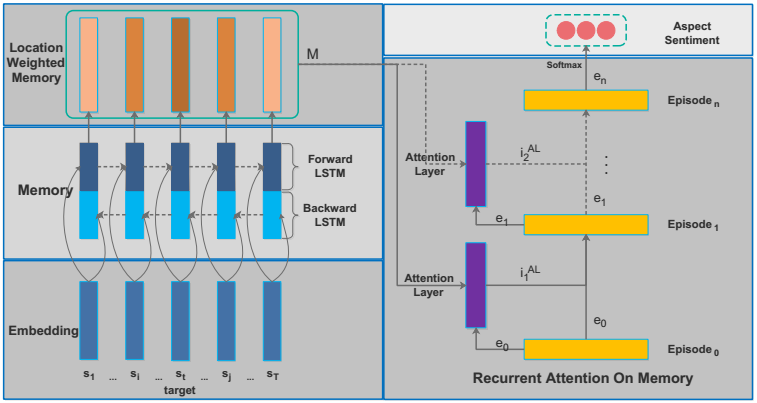

RAM (ram.py)

Chen, Peng, et al. "Recurrent Attention Network on Memory for Aspect Sentiment Analysis." Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing. 2017. [pdf]

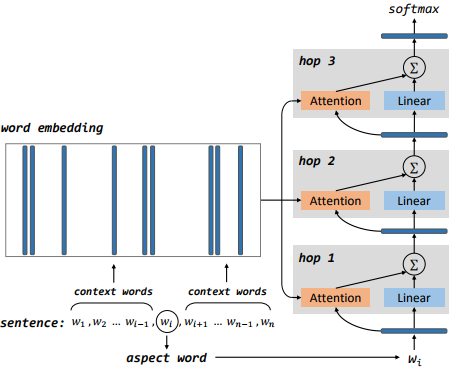

MemNet (memnet.py)

Tang, Duyu, B. Qin, and T. Liu. "Aspect Level Sentiment Classification with Deep Memory Network." Conference on Empirical Methods in Natural Language Processing 2016:214-224. [pdf]

IAN (ian.py)

Ma, Dehong, et al. "Interactive Attention Networks for Aspect-Level Sentiment Classification." arXiv preprint arXiv:1709.00893 (2017). [pdf]

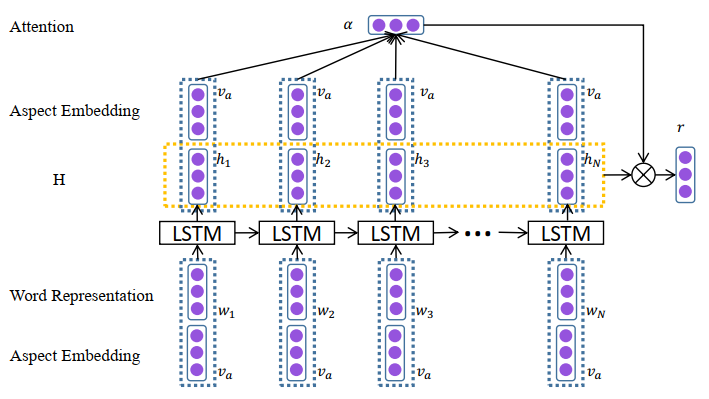

ATAE-LSTM (atae_lstm.py)

Wang, Yequan, Minlie Huang, and Li Zhao. "Attention-based LSTM for Aspect-level Sentiment Classification." Proceedings of the 2016 Conference on Empirical Methods in Natural Language Processing. 2016.

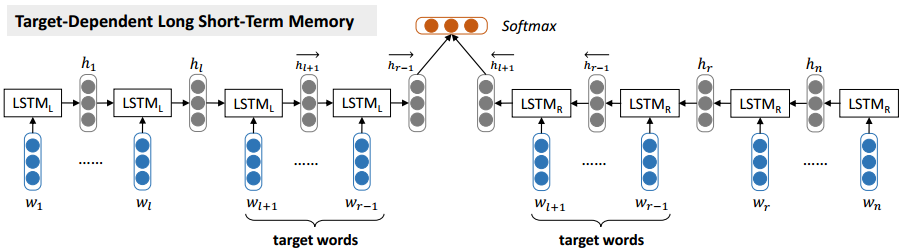

TD-LSTM (td_lstm.py)

Tang, Duyu, et al. "Effective LSTMs for Target-Dependent Sentiment Classification." Proceedings of COLING 2016, the 26th International Conference on Computational Linguistics: Technical Papers. 2016. [pdf]
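
TD-LSTM, as described in the paper, runs one LSTM over the left context up to and including the target and a second LSTM over the right context, read backwards so that it also ends at the target. The sketch below shows only how the two input sequences could be prepared. The helper name `td_lstm_inputs` and the example sentence are assumptions for illustration, and td_lstm.py may prepare its inputs differently.

```python
def td_lstm_inputs(tokens, target_start, target_len):
    """Return (left, right) sequences that both end at the target."""
    target_end = target_start + target_len
    # Left sequence: everything before the target plus the target itself.
    left = tokens[:target_end]
    # Right sequence: the target plus everything after it, reversed so the
    # second LSTM reads from the sentence end back towards the target.
    right = tokens[target_start:][::-1]
    return left, right


tokens = "the food was great but the service was slow".split()
left, right = td_lstm_inputs(tokens, target_start=6, target_len=1)
```

The final hidden states of the two LSTMs are concatenated and fed to a softmax layer for classification.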

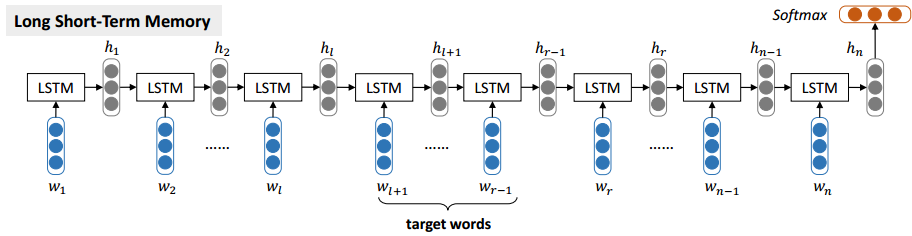

LSTM (lstm.py)

Zhang, Lei, Shuai Wang, and Bing Liu. "Deep Learning for Sentiment Analysis: A Survey." arXiv preprint arXiv:1801.07883 (2018). [pdf]

Young, Tom, et al. "Recent trends in deep learning based natural language processing." arXiv preprint arXiv:1708.02709 (2017). [pdf]

Feel free to contribute!

You can raise an issue or submit a pull request, whichever is more convenient for you.

MIT License