Neural Radiance Fields in the Industrial and Robotics Domain: Applications, Research Opportunities and Use Cases

The project IISNeRF is the implementation of two proof-of-concept experiments:

I. UAV video compression using Instant-NGP,

II. Obstacle avoidance using D-NeRF.

The proliferation of technologies, such as extended reality (XR), has increased the demand for high-quality three-dimensional (3D) graphical representations. Industrial 3D applications encompass computer-aided design (CAD), finite element analysis (FEA), scanning, and robotics. However, current methods employed for industrial 3D representations suffer from high implementation costs and reliance on manual human input for accurate 3D modeling. To address these challenges, neural radiance fields (NeRFs) have emerged as a promising approach for learning 3D scene representations from 2D training images. Despite a growing interest in NeRFs, their potential applications in various industrial subdomains are still unexplored. In this paper, we deliver a comprehensive examination of NeRF industrial applications while also providing direction for future research endeavors. We also present a series of proof-of-concept experiments that demonstrate the potential of NeRFs in the industrial domain. These experiments include NeRF-based video compression techniques and the use of NeRFs for 3D motion estimation in the context of collision avoidance. In the video compression experiment, our results show compression savings of up to 48% and 74% for resolutions of 1920x1080 and 300x168, respectively. The motion estimation experiment used a 3D animation of a robotic arm to train Dynamic-NeRF (D-NeRF) and achieved an average disparity-map peak signal-to-noise ratio (PSNR) of 23 dB and a structural similarity index measure (SSIM) of 0.97.

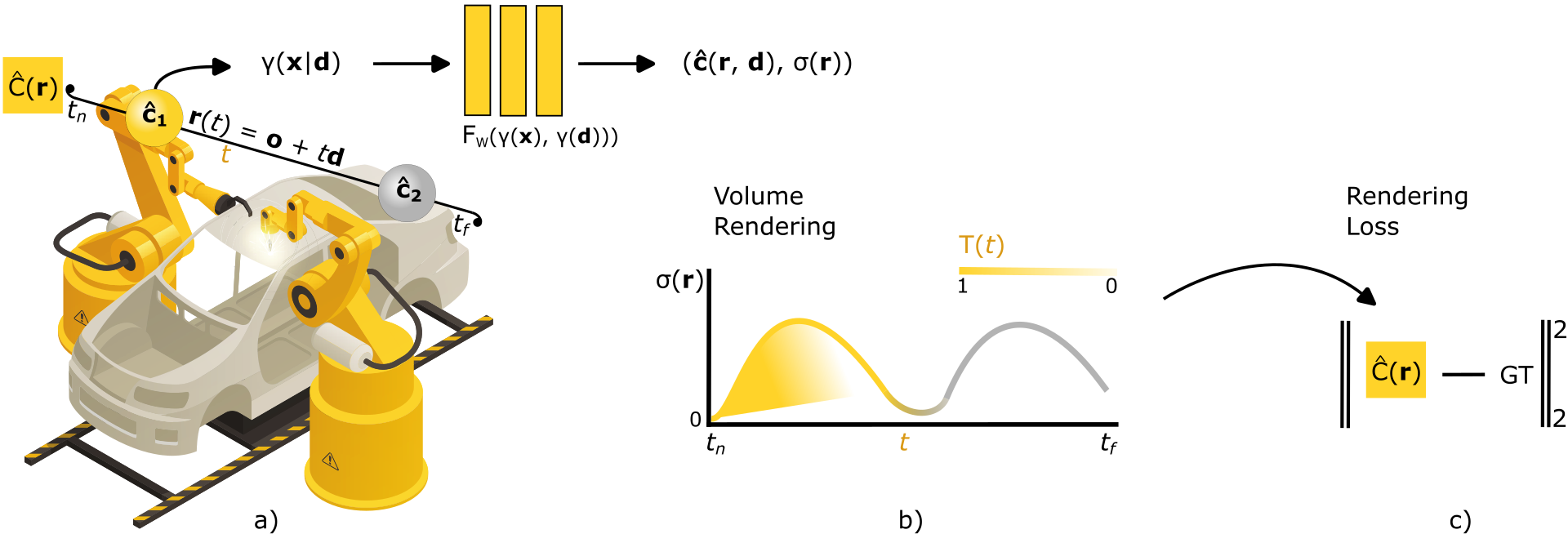

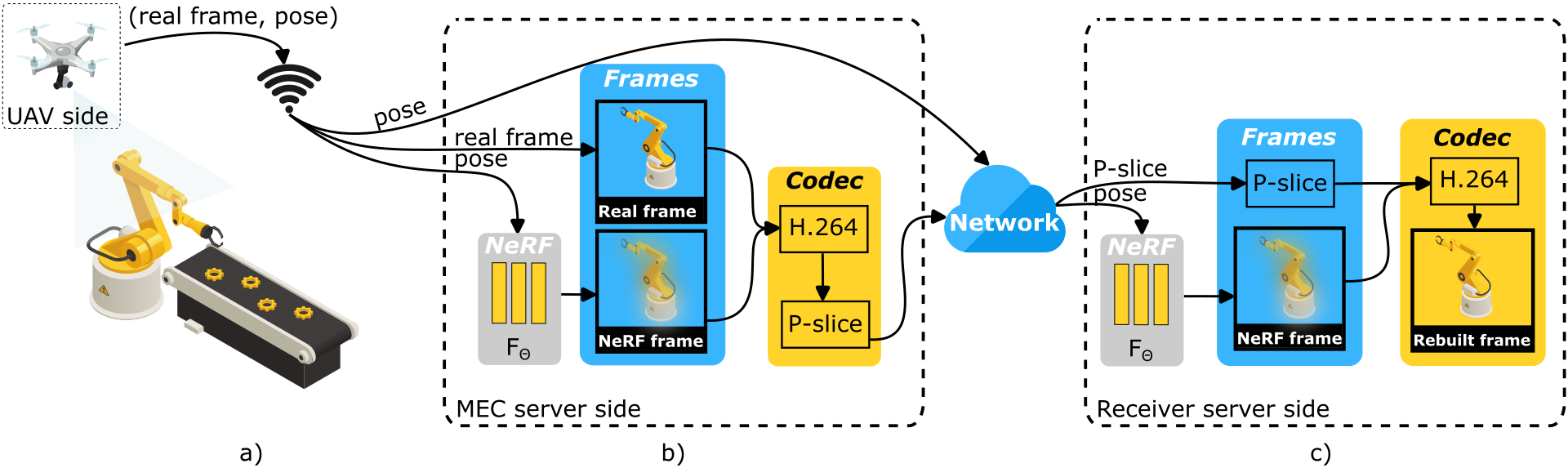

Fig. 1. (a) A 5D coordinate (spatial location in 3D coupled with directional polar angles) is transformed into a higher-dimensional space by positional encoding γ. It serves as an input for MLP FW. The output from FW consists of gradually learned color ĉ and volume density σ for the corresponding 5D input coordinate. r, d, x denote the ray vector, direction vector and spatial location vector, respectively. (b) Pixel values are obtained via volume rendering with numerically integrated rays bounded by respective near and far bounds tn and tf. (c) Ground truth pixels from training set images are used to calculate rendering loss and optimize the FW weights via backpropagation.

Fig. 2. (a) A UAV camera captures the environment. The real frame and pose of the camera are transmitted wirelessly to a nearby multiaccess edge computing (MEC) server. (b) The MEC server employs the NeRF model for novel view synthesis based on camera pose. The H.264 codec encodes real and NeRF frames to obtain P frame containing their differences, which is transferred through the network with the pose. (c) Receiver rebuilds the real frame using H.264 codec from P frame and locally generated NeRF frame from camera pose.

Fig. 7. (a) A UAV with a specific camera pose captures the object at time t. (b) The D-NeRF model outputs novel views from the camera pose at specified time t in the form of an RGB map, opacity map and disparity map depicted in Fig. 8. (c) The opacity map and disparity map are key components for UAVs to perform obstacle avoidance or path planning.

git clone https://github.com/Maftej/iisnerf.git

cd src

conda create -n nerf_robotics python=3.10

conda activate nerf_robotics

pip install -r requirements.txt

You can download all files used for both proof-of-concept experiments using the link. The detailed folder structure is described in section 5.

| NeRF variant | Instant-NGP |
|---|---|
| abbreviated Instant-NGP repository commit hash | 11496c211f |
| AABB | 128 |
| 3D modelling software | Blender 3.4 |
| 3D renderer | Eevee |
| Blender plugin for creation of a synthetic dataset | BlenderNeRF 3.0.0 |
| BlenderNeRF dataset method | Subset of Frames (SOF) |
| view sampling interval in a training dataset | 5 times each 4th frame out of 100 and 1 time each 3rd frame out of 100 |
| third-party 3D models and textures | factory building, animated robotic exhaust pipe welding, garage doors in the original resolution 5184x3456 |
| scene lighting | HDR map in a 1K resolution |
| training view count | 159 |
| image resolutions used for training | 1920x1080 |
| image resolutions used for evaluation of image quality and compression | 300x168, 500x280, 720x404, 1920x1080 |
| H.264 codec | x264 (as a part of the FFMPEG library N-109741-gbbe95f7353-20230201) |
| multimedia content inspector | FFPROBE library N-109741-gbbe95f7353-20230201 |
| number of images encoded into single .h264 file | 2 |
| Constant Rate Factor (CRF) | 18, 23, 28 |
| Preset | Veryslow, Medium, Veryfast |
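
The evaluation resolutions, CRF values and presets listed in the table combine into a grid of H.264 encoding configurations. The sketch below enumerates that grid and builds one folder name per configuration, following the `res_<resolution>_preset_<preset>_crf_<crf>` pattern that appears in the dataset folder structure. It assumes every combination was evaluated; the helper name `encoding_configs` is hypothetical and not part of the IISNeRF code.

```python
from itertools import product

# Values taken from the Instant-NGP experiment table.
RESOLUTIONS = ["300x168", "500x280", "720x404", "1920x1080"]
PRESETS = ["veryslow", "medium", "veryfast"]
CRFS = [18, 23, 28]


def encoding_configs():
    """Yield (resolution, preset, crf, folder_name) for every combination.

    Hypothetical helper: the folder naming mirrors the pattern seen in the
    dataset tree, but the project's own code may build names differently.
    """
    for res, preset, crf in product(RESOLUTIONS, PRESETS, CRFS):
        yield res, preset, crf, f"res_{res}_preset_{preset}_crf_{crf}"


if __name__ == "__main__":
    configs = list(encoding_configs())
    print(len(configs), "configurations")
    for _, _, _, folder in configs[:3]:
        print(folder)
```

Keeping the grid in one place makes it easy to iterate over the same configurations for both encoding and evaluation.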

| NeRF variant | D-NeRF |
|---|---|
| abbreviated D-NeRF repository commit hash | 89ed431fe1 |
| number of training iterations | 750 000 |
| number of coarse samples per ray | 128 |
| number of additional fine samples per ray | 160 |
| batch size (number of random rays per gradient step) | 512 |
| number of steps to train on central time | 500 |
| number of rays processed in parallel | 1024*40 |
| number of points sent through the network in parallel | 1024*70 |
| frequency of tensorboard image logging | HDR map in a 1K resolution |
| training view count | 100 000 |
| frequency of weight ckpt saving | 50 000 |
| frequency of testset saving | 50 000 |
| frequency of render_poses video saving | 100 000 |
| 3D modelling software | Blender 3.4 |
| 3D Renderer | Eevee |
| view sampling count in a training dataset | 3 times each frame out of 41 |
| view sampling count in a testing and a validation dataset | 2 times each 2nd frame out of 41 |
| view sampling count in a validation dataset | 1 time each 2nd frame out of 41 |
| plugin for creation of a disparity map dataset in Blender | Light Field Camera 0.0.1 |
| cols and rows | 1 |
| base x and base y | 0.3 |
| third-party 3D models | animated robotic arm |
| training / testing / validation view count | 123 / 21 / 21 |
| image resolutions used for training and all maps | 800x800 |
| focal | 1113 |
| near and far bounds | 2 and 6 |
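
The focal length in the table is expressed in pixels. In NeRF-style Blender datasets it is commonly derived from the horizontal field of view stored as `camera_angle_x`, using focal = 0.5 · W / tan(0.5 · camera_angle_x). The sketch below applies that pinhole relation in both directions for the 800-pixel image width. Whether the value 1113 was obtained exactly this way is an assumption, and the function names are illustrative.

```python
import math


def focal_from_fov(width_px: float, camera_angle_x: float) -> float:
    """Focal length in pixels from the horizontal field of view in radians."""
    return 0.5 * width_px / math.tan(0.5 * camera_angle_x)


def fov_from_focal(width_px: float, focal_px: float) -> float:
    """Horizontal field of view in radians from the focal length in pixels."""
    return 2.0 * math.atan(0.5 * width_px / focal_px)


if __name__ == "__main__":
    # Width and focal length taken from the D-NeRF experiment table.
    angle = fov_from_focal(800, 1113)
    print(f"horizontal FOV: {math.degrees(angle):.1f} degrees")
    print(f"focal recovered: {focal_from_fov(800, angle):.1f} px")
```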

- The camera must not pass through an object while moving; doing so causes artifacts in which objects appear cut off.

- TRAIN -> training of a NeRF variant on a single dataset and storage of the pretrained scene in a specific format.

- MERGE_DATASETS -> fusion of several datasets that share the same properties, apart from "frame", in the transforms.json file into a single dataset.

- EVAL_DATASET -> creation of an evaluation dataset based on a custom trajectory in the transforms.json file.

- EVAL_TRAJECTORY -> evaluation of a custom trajectory (ground truth image vs. NeRF-generated image).

- TEST_DATASET -> creation of a testing dataset from a pretrained Instant-NGP model (from .ngp file) based on poses from transforms.json.

- EVAL_MODELS -> evaluation of Instant-NGP models with different AABB values by comparing images from the same poses (ground truth image vs. NeRF-generated image) using PSNR and SSIM.

- IPFRAME_DATASET -> creation of a dataset composed of ground truth and NeRF images from the same trajectory (the same NeRF and ground truth images are encoded).

- ENCODE_IPFRAME_DATASET -> encoding of the IPFRAME dataset using the H.264 codec with different configuration settings, such as preset and Constant Rate Factor (CRF).

- EVAL_ENCODED_IPFRAME -> extraction of the P-slice size from the encoded IPFRAME dataset.

- PLOT_ALL_MAPS -> rendering, processing and plotting of RGB, disparity and accumulated opacity maps.

- PLOT_DATA -> plotting of all data used in the research paper.
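
The evaluation scenarios above compare ground truth images with NeRF-generated images using PSNR and SSIM. As a minimal sketch of the PSNR part, assuming 8-bit pixel values passed as flat sequences, the standard definition PSNR = 10 · log10(MAX² / MSE) can be written as follows. The function name is illustrative and not taken from the IISNeRF code, which may rely on a library implementation instead.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], rendered: Sequence[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized pixel sequences."""
    if len(reference) != len(rendered) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixel values.
    mse = sum((a - b) ** 2 for a, b in zip(reference, rendered)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ground_truth = [10, 20, 30, 40]
    nerf_render = [11, 21, 31, 41]  # made-up values for illustration
    print(f"PSNR: {psnr(ground_truth, nerf_render):.2f} dB")
```

For real images, the pixel arrays would be flattened first, or the same formula applied with an array library.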

- D_NERF

- INSTANT_NGP

- MERGE_DATASETS

- TRAIN

- TEST_DATASET

- EVAL_MODELS

- EVAL_DATASET

- IPFRAME_DATASET

- ENCODE_IPFRAME_DATASET

- EVAL_ENCODED_IPFRAME

- EVAL_TRAJECTORY

- PLOT_ALL_MAPS

- PLOT_DATA

- conda env list, conda activate nerf_robotics

- python iis_nerf.py --nerf_variant "INSTANT_NGP" --scenario "EVAL_ENCODED_H264" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_factory_robotics.json"

- python iis_nerf.py --nerf_variant "INSTANT_NGP" --scenario "EVAL_TRAJECTORY" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_test.json"

- python iis_nerf.py --nerf_variant "D_NERF" --scenario "MERGE_DATASETS" --dataset_type "TRAIN" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_d_nerf_arm_robot.json"

- python iis_nerf.py --nerf_variant "D_NERF" --scenario "PLOT_ALL_MAPS" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_d_nerf_arm_robot.json"

- python iis_nerf.py --nerf_variant "D_NERF" --scenario "PLOT_DATA" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_d_nerf_arm_robot.json"

- python iis_nerf.py --nerf_variant "D_NERF" --scenario "TRAIN" --scenario_path "C:\Users\mdopiriak\PycharmProjects\iis_nerf\scenarios\scenario_d_nerf_arm_robot.json"
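
The MERGE_DATASETS scenario invoked above fuses several datasets whose transforms.json files share every property except the per-frame entries. A minimal sketch of that idea follows. It assumes the per-frame entries live under a `frames` key, as in common NeRF transforms files; the function name and the strict equality check are illustrative assumptions, not the project's implementation.

```python
from typing import Any, Dict, List


def merge_transforms(datasets: List[Dict[str, Any]],
                     frames_key: str = "frames") -> Dict[str, Any]:
    """Concatenate per-frame entries of datasets that agree on all other keys."""
    if not datasets:
        raise ValueError("at least one dataset is required")

    def shared(data: Dict[str, Any]) -> Dict[str, Any]:
        # Everything except the per-frame list must match across datasets.
        return {k: v for k, v in data.items() if k != frames_key}

    base = shared(datasets[0])
    merged_frames: List[Any] = []
    for index, data in enumerate(datasets):
        if shared(data) != base:
            raise ValueError(f"dataset {index} differs in shared properties")
        merged_frames.extend(data.get(frames_key, []))
    return {**base, frames_key: merged_frames}


if __name__ == "__main__":
    # Made-up miniature datasets for illustration.
    a = {"camera_angle_x": 0.69, "frames": [{"file_path": "train/001"}]}
    b = {"camera_angle_x": 0.69, "frames": [{"file_path": "train/002"}]}
    print(merge_transforms([a, b]))
```

In practice each dictionary would come from `json.load` on a transforms file, and the merged result would be written back with `json.dump`.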

The list of ffmpeg commands used in the first proof-of-concept experiment:

- ffmpeg -framerate 1 -start_number {order} -i {file_path} -frames:v 2 -c:v libx264 -preset {preset} -crf {crf} -r 1 {encoded_video_path}

- ffprobe -show_frames {h264_encoded_video_full_path}
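
The EVAL_ENCODED_IPFRAME scenario extracts the size of the P-slice from each encoded two-frame video. With its default output format, `ffprobe -show_frames` prints each frame as a `[FRAME]` ... `[/FRAME]` section of `key=value` lines that include `pict_type` and `pkt_size`. The sketch below parses such text and returns the packet sizes of the P frames. The function name and the sample text are illustrative, and the project's own parsing may differ.

```python
from typing import Dict, List, Optional


def p_frame_sizes(ffprobe_text: str) -> List[int]:
    """Return pkt_size of every frame whose pict_type is P."""
    sizes: List[int] = []
    frame: Optional[Dict[str, str]] = None
    for raw in ffprobe_text.splitlines():
        line = raw.strip()
        if line == "[FRAME]":
            frame = {}  # start collecting a new frame section
        elif line == "[/FRAME]":
            if frame is not None and frame.get("pict_type") == "P" and "pkt_size" in frame:
                sizes.append(int(frame["pkt_size"]))
            frame = None
        elif frame is not None and "=" in line:
            key, _, value = line.partition("=")
            frame[key] = value
    return sizes


# Made-up sample in the default ffprobe section format (fields abbreviated).
SAMPLE = """[FRAME]
media_type=video
pkt_size=20480
pict_type=I
[/FRAME]
[FRAME]
media_type=video
pkt_size=1234
pict_type=P
[/FRAME]
"""

if __name__ == "__main__":
    print(p_frame_sizes(SAMPLE))
```

Because each .h264 file holds two images, one P-frame size per file is expected under this layout.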

.

└── iisnerf_data/

├── obstacle_avoidance_using_dnerf/

│ ├── blender_files/

│ │ └── blender_models/

│ │ ├── source/

│ │ │ └── .blend

│ │ ├── textures/

│ │ │ └── .jpg

│ │ └── .blend

│ ├── configs/

│ │ └── arm_robot.txt

│ ├── data/

│ │ └── arm_robot/

│ │ ├── test/

│ │ │ ├── 0000.png

│ │ │ ├── ...

│ │ │ └── 0040.png

│ │ ├── train/

│ │ │ ├── 001.png

│ │ │ ├── ...

│ │ │ └── 123.png

│ │ ├── val/

│ │ │ ├── 0000.png

│ │ │ ├── ...

│ │ │ └── 0040.png

│ │ ├── transforms_test.json

│ │ ├── transforms_train.json

│ │ └── transforms_val.json

│ ├── logs/

│ │ ├── 750000.tar

│ │ ├── args.txt

│ │ └── config.txt

│ └── robot_maps_data/

│ └── arm_robot_eval_dataset/

│ └── -> details in 5.3

└── uav_compression_using_instant_ngp/

├── blender_files/

│ ├── blender_models/

│ │ ├── demo-robotic-exhaust-pipe-welding/

│ │ │ ├── source/

│ │ │ │ └── Demo Exhaust Pipe.glb

│ │ │ ├── textures/

│ │ │ │ └── gltf_embedded_0.jpg

│ │ │ ├── weldings_robots.blend

│ │ │ ├── welding_robots.mtl

│ │ │ └── welding_robots.obj

│ │ └── warehouse/

│ │ └── Warehouse.fbx

│ ├── factory_dataset_normal5

│ ├── ...

│ ├── factory_dataset_normal10

│ ├── lightfield/

│ │ └── derelict_highway_midday_1k.hdr

│ ├── Textures/

│ │ └── .jpg

│ ├── factory_scene5.blend

│ ├── ...

│ └── factory_scene10.blend

├── datasets/

│ └── factory_robotics/

│ └── -> details in 5.2

└── instant_ngp_models/

├── factory_robotics_aabb_scale_1.ingp

├── ...

└── factory_robotics_aabb_scale_128.ingp

.

└── factory_robotics/

├── eval/

│ └── factory_robotics_eval/

│ ├── images/

│ │ ├── 0001.jpg

│ │ ├── ...

│ │ └── 0159.jpg

│ ├── images_res300x168/

│ │ ├── 0001.jpg

│ │ ├── ...

│ │ └── 0159.jpg

│ ├── images_res500x280/

│ │ ├── 0001.jpg

│ │ ├── ...

│ │ └── 0159.jpg

│ ├── images_res720x404/

│ │ ├── 0001.jpg

│ │ ├── ...

│ │ └── 0159.jpg

│ ├── eval_trajectory.json

│ ├── eval_trajectory_res300x168.json

│ ├── eval_trajectory_res500x280.json

│ ├── eval_trajectory_res720x404.json

│ └── transforms.json

├── test/

│ ├── images_aabb_scale_1/

│ │ ├── 001.jpg

│ │ ├── ...

│ │ └── 159.jpg

│ ├── ...

│ ├── images_aabb_scale_128/

│ │ ├── 001.jpg

│ │ ├── ...

│ │ └── 159.jpg

│ ├── evaluated_models.json

│ └── transforms.json

├── train/

│ ├── images/

│ │ ├── 001.jpg

│ │ ├── ...

│ │ └── 159.jpg

│ └── transforms.json

└── videos/

├── factory_robotics_videos/

│ ├── res_300x168_preset_medium_crf_18/

│ │ ├── encoded_video1.h264

│ │ ├── ...

│ │ ├── encoded_video159.h264

│ │ └── pkt_size_res_300x168_preset_medium_crf_18.json

│ ├── ...

│ └── res_1920x1080_preset_veryslow_crf_28/

│ ├── encoded_video1.h264

│ ├── ...

│ ├── encoded_video159.h264

│ └── pkt_size_res_1920x1080_preset_veryslow_crf_28.json

└── ipframe_dataset/

├── images/

│ ├── ipframe_dataset001.jpg

│ ├── ...

│ └── ipframe_dataset159.jpg

├── images_res300x168/

│ ├── ipframe_dataset001.jpg

│ ├── ...

│ └── ipframe_dataset159.jpg

├── images_res500x280/

│ ├── ipframe_dataset001.jpg

│ ├── ...

│ └── ipframe_dataset159.jpg

└── images_res720x404/

├── ipframe_dataset001.jpg

├── ...

└── ipframe_dataset159.jpg

.

└── arm_robot_eval_dataset/

├── d_nerf_blender_disparity_map_test/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── d_nerf_blender_disparity_map_train/

│ ├── 001.png

│ ├── ...

│ └── 123.png

├── d_nerf_blender_disparity_map_val/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── d_nerf_blender_test/

│ ├── 001.npy

│ ├── ...

│ └── 021.npy

├── d_nerf_blender_train_bottom/

│ ├── 001.npy

│ ├── ...

│ └── 041.npy

├── d_nerf_blender_train_middle/

│ ├── 001.npy

│ ├── ...

│ └── 041.npy

├── d_nerf_blender_train_top/

│ ├── 001.npy

│ ├── ...

│ └── 041.npy

├── d_nerf_blender_val/

│ ├── 001.npy

│ ├── ...

│ └── 021.npy

├── d_nerf_model_disparity_map_test/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── d_nerf_model_disparity_map_train/

│ ├── 001.png

│ ├── ...

│ └── 123.png

├── d_nerf_model_disparity_map_val/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── d_nerf_model_test/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── d_nerf_model_train/

│ ├── 001.png

│ ├── ...

│ └── 123.png

├── d_nerf_model_val/

│ ├── 001.png

│ ├── ...

│ └── 021.png

├── ground_truth/

│ ├── test/

│ │ └── .png

│ ├── train/

│ │ └── .png

│ ├── val/

│ │ └── .png

│ └── new/

│ ├── 001.png

│ ├── ...

│ └── 123.png

└── psnr_ssim_data_d_nerf.json

If you use this code or ideas from the paper for your research, please cite our paper:

@article{slapak2024102810,

title = {Neural radiance fields in the industrial and robotics domain: Applications, research opportunities and use cases},

journal = {Robotics and Computer-Integrated Manufacturing},

volume = {90},

pages = {102810},

year = {2024},

issn = {0736-5845},

doi = {https://doi.org/10.1016/j.rcim.2024.102810},

url = {https://www.sciencedirect.com/science/article/pii/S0736584524000978},

author = {Eugen Šlapak and Enric Pardo and Matúš Dopiriak and Taras Maksymyuk and Juraj Gazda}

}