A repo for sentiment analysis of Twitter data about the recent controversial Tanishq ad, using Natural Language Processing

In this project, Twitter data about the recent controversial Tanishq ad, which incited religious tension amongst Hindus, were scraped, and a machine learning model based on Natural Language Processing was built. The model can be used to gauge public sentiment about the company, which can help the company better navigate its ad campaigns.

Twitter data was collected using the Twitter API with the tweepy Python package.

The following libraries are used in this project:

| Task | Python Packages | Notebooks |
|---|---|---|
| Data Collection | tweepy | get_tweet.ipynb |
| Data Cleaning and Visualization | seaborn, matplotlib, plotly, wordcloud, pandas, nltk | cleaning_viz.ipynb |
| Manual Labeling | numpy, glob, json, pandas | labeling.ipynb |
| Pretrained model | vader, textblob, flair | pretrained_sentiment_analysis.ipynb |
| Unsupervised Learning | sklearn, xgboost, lightgbm, lazypredict | unsupervised_sentiment_analysis.ipynb |
| Supervised Learning | sklearn, xgboost, lightgbm, lazypredict | supervised_sentiment_analysis.ipynb |
| Pseudolabeling | snorkel, sklearn, xgboost, vader, textblob | pseudolabeling_2.ipynb |
| SMOTE | imblearn | smote_2.ipynb |

The data is collected with the tweepy Python package. To fetch data quickly, a small Python function, `fetch_tweets`, is provided. The access token and consumer token are stored in a `.env` file and read with the dotenv Python package so that the credentials stay out of version control (`.env` is not tracked by git). Sample usage is shown below:

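```python
import os

import pandas as pd
import tweepy
from dotenv import load_dotenv

from src.data.get_tweets import fetch_tweets  # helper defined in this repo

# Read credentials from .env; the variable names here are assumed placeholders
load_dotenv()
auth = tweepy.OAuthHandler(os.getenv("CONSUMER_KEY"), os.getenv("CONSUMER_SECRET"))
auth.set_access_token(os.getenv("ACCESS_TOKEN"), os.getenv("ACCESS_TOKEN_SECRET"))
api = tweepy.API(auth)

# Collect (text, source, source URL) for up to 10 tweets matching the query.
# api.search is the tweepy 3.x name; tweepy 4 renamed it to api.search_tweets.
msgs = []
for tweet in tweepy.Cursor(api.search, q='#MSFT', count=100).items(10):
    msgs.append((tweet.text, tweet.source, tweet.source_url))

df = pd.DataFrame(msgs, columns=['text', 'source', 'url'])
```

The following steps were taken to clean the data: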

- Hashtags (#), URLs, emoji, mentions, and numbers were removed using the tweet preprocessing Python package.
- All text was converted to lowercase and any special characters were removed.
- `WordNetLemmatizer` and `TweetTokenizer` from the `nltk` package were used to lemmatize and tokenize the sentences.
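The first two cleaning steps can be sketched with the standard library alone. This is only an approximation: the regular expressions below are assumptions standing in for the tweet preprocessing package, whole hashtag tokens are assumed to be dropped, and lemmatization with `nltk` is left out.

```python
import re

# Regex stand-ins for the removals described above (assumed patterns,
# not the ones used by the tweet preprocessing package).
URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
HASHTAG_RE = re.compile(r"#\w+")
NUMBER_RE = re.compile(r"\d+")
NON_LETTER_RE = re.compile(r"[^a-z\s]")


def clean_tweet(text: str) -> str:
    """Remove URLs, mentions, hashtags and numbers, then lowercase and strip other symbols."""
    for pattern in (URL_RE, MENTION_RE, HASHTAG_RE, NUMBER_RE):
        text = pattern.sub(" ", text)
    text = text.lower()
    text = NON_LETTER_RE.sub(" ", text)  # also drops emoji and punctuation
    return " ".join(text.split())  # collapse repeated whitespace


print(clean_tweet("Boycott @TanishqJewelry NOW!!! 100% done 😡 https://t.co/abc #BoycottTanishq"))
# boycott now done
```

URLs are removed before numbers so that digits inside a link do not leave fragments behind.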

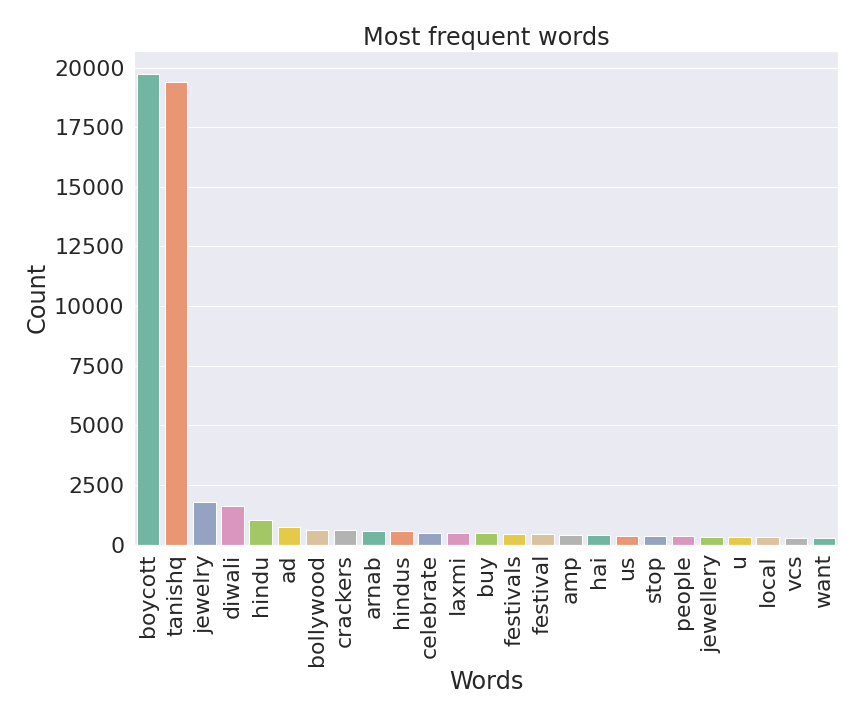

A bar chart is drawn to illustrate the relative frequency of each word:

Here, it's clear that boycott and tanishq are the two most frequent words in this particular Twitter corpus.

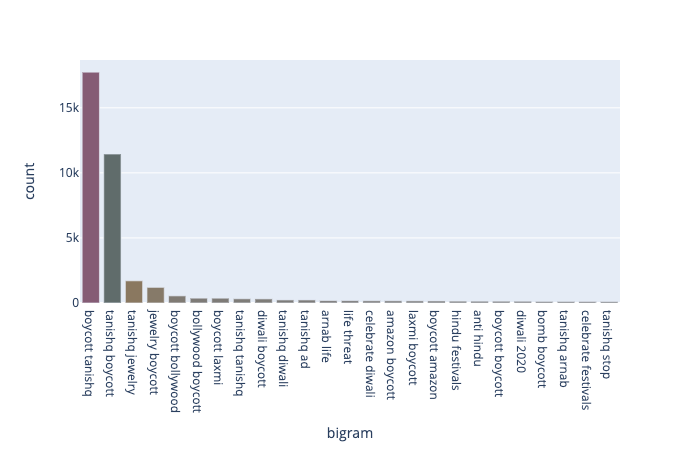

Next, I plot the bigram frequencies, since bigrams are often very important in natural language processing:

As expected, boycott tanishq and tanishq boycott are the two most frequent bigrams.
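Counting bigrams amounts to pairing each token with the one that follows it. A minimal sketch, using invented toy tweets rather than the real corpus:

```python
from collections import Counter


def bigram_counts(token_lists):
    """Count adjacent token pairs across a list of tokenized tweets."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(zip(tokens, tokens[1:]))
    return counts


# Toy tokenized tweets, invented for illustration only
tweets = [
    ["boycott", "tanishq", "now"],
    ["tanishq", "boycott", "tanishq"],
    ["boycott", "tanishq"],
    ["tanishq", "boycott"],
]
counts = bigram_counts(tweets)
print(counts.most_common(2))
# [(('boycott', 'tanishq'), 3), (('tanishq', 'boycott'), 2)]
```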

Here, I manually labelled a subset of the data to develop the machine learning model. I used Python's native functionality to label the data, although sophisticated GUI packages are now available for this task.
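A labeling loop built on plain Python could look like the sketch below. The function name, the `ask` parameter, and the 0/1 label scheme (0 = negative, 1 = positive) are assumptions made for illustration, not the code in `labeling.ipynb`.

```python
def label_texts(texts, ask=input, valid=("0", "1")):
    """Prompt for a label for each text; re-ask until the answer is valid."""
    labels = []
    for text in texts:
        answer = ask(f"{text}\nLabel {valid}: ").strip()
        while answer not in valid:
            answer = ask(f"Please enter one of {valid}: ").strip()
        labels.append(int(answer))
    return labels


# Non-interactive demo: scripted answers stand in for keyboard input
answers = iter(["1", "x", "0"])
print(label_texts(["great ad", "boycott tanishq"], ask=lambda prompt: next(answers)))
# [1, 0]
```

Passing the prompt function as a parameter keeps the loop testable; in an interactive session the default `input` is used.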

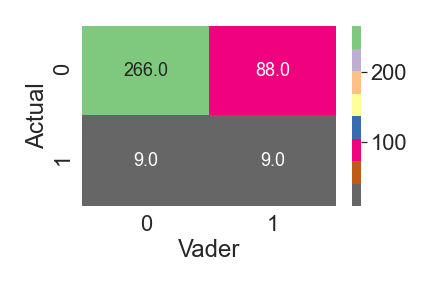

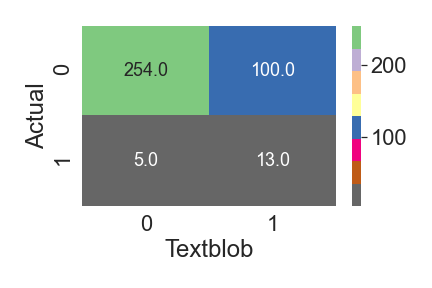

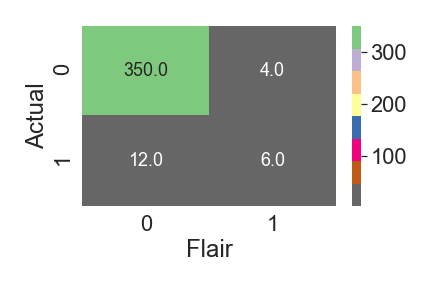

Next, I analyzed the performance of three pretrained sentiment analysis models (vader, textblob, and flair), using the labelled data as the benchmark. The confusion matrices of the three models are shown below:

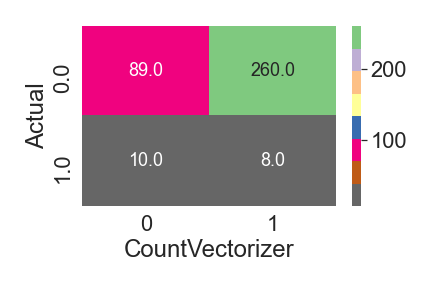

Confusion matrix for the K-means clustering algorithm with countvectorizer:

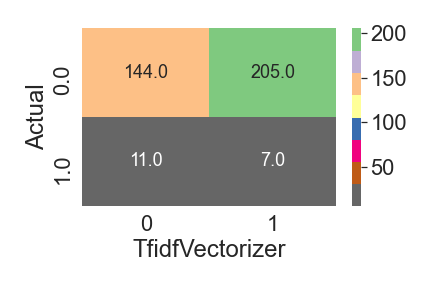

Confusion matrix for the K-means clustering algorithm with tfidf vectorizer:

So far, only textblob has achieved high recall for positive sentiment. Next, I'll try supervised learning to improve the positive-sentiment recall.
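Recall for a class is the share of tweets truly in that class that the model also assigns to it, which can be read directly off the confusion matrix. A small sketch with invented labels (1 = positive, 0 = negative is an assumed encoding):

```python
from collections import Counter


def confusion_counts(y_true, y_pred):
    """Return a Counter mapping (true_label, predicted_label) -> count."""
    return Counter(zip(y_true, y_pred))


def recall(y_true, y_pred, label):
    """Fraction of items whose true class is `label` that were predicted as `label`."""
    cm = confusion_counts(y_true, y_pred)
    actual = sum(n for (t, _), n in cm.items() if t == label)
    return cm[(label, label)] / actual if actual else 0.0


# Toy labels invented for illustration (1 = positive, 0 = negative)
y_true = [1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 1, 0, 0]
print(recall(y_true, y_pred, label=1))  # 0.5
print(recall(y_true, y_pred, label=0))
```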

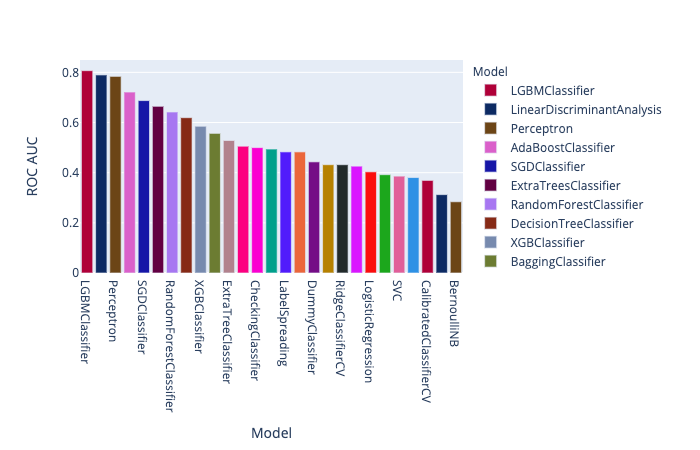

Next, I used the lazypredict package to quickly scan the performance of most of the sklearn classifiers, along with xgboost and lightgbm, without any hyperparameter optimization. The performance of the different classifiers with countvectorizer is shown below:

| Model | Accuracy | Balanced Accuracy | ROC AUC | F1 Score | Time Taken |
|---|---|---|---|---|---|
| LinearDiscriminantAnalysis | 0.88 | 0.75 | 0.75 | 0.90 | 0.13 |
| XGBClassifier | 0.95 | 0.69 | 0.69 | 0.94 | 0.26 |
| AdaBoostClassifier | 0.96 | 0.60 | 0.60 | 0.94 | 0.24 |
| DecisionTreeClassifier | 0.95 | 0.59 | 0.59 | 0.93 | 0.05 |
| ExtraTreeClassifier | 0.95 | 0.59 | 0.59 | 0.93 | 0.04 |
| DummyClassifier | 0.92 | 0.58 | 0.58 | 0.92 | 0.05 |
| QuadraticDiscriminantAnalysis | 0.12 | 0.53 | 0.53 | 0.13 | 0.09 |
| CalibratedClassifierCV | 0.95 | 0.50 | 0.50 | 0.92 | 2.65 |
| NearestCentroid | 0.95 | 0.50 | 0.50 | 0.92 | 0.04 |
| BernoulliNB | 0.95 | 0.50 | 0.50 | 0.92 | 0.05 |
| SVC | 0.95 | 0.50 | 0.50 | 0.92 | 0.20 |
| SGDClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.06 |
| RidgeClassifierCV | 0.95 | 0.50 | 0.50 | 0.92 | 0.08 |
| RidgeClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.05 |
| RandomForestClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.17 |
| PassiveAggressiveClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.07 |
| LogisticRegression | 0.95 | 0.50 | 0.50 | 0.92 | 0.06 |
| CheckingClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.04 |
| LinearSVC | 0.95 | 0.50 | 0.50 | 0.92 | 0.71 |
| BaggingClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.11 |
| LabelSpreading | 0.95 | 0.50 | 0.50 | 0.92 | 0.04 |
| LabelPropagation | 0.95 | 0.50 | 0.50 | 0.92 | 0.05 |
| KNeighborsClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.11 |
| ExtraTreesClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.17 |
| Perceptron | 0.93 | 0.49 | 0.49 | 0.91 | 0.04 |
| GaussianNB | 0.93 | 0.49 | 0.49 | 0.91 | 0.04 |
| LGBMClassifier | 0.92 | 0.49 | 0.49 | 0.91 | 0.09 |

With tfidf vectorizer:

| Model | Accuracy | Balanced Accuracy | ROC AUC | F1 Score | Time Taken |
|---|---|---|---|---|---|
| LGBMClassifier | 0.97 | 0.70 | 0.70 | 0.96 | 0.66 |
| XGBClassifier | 0.96 | 0.69 | 0.69 | 0.95 | 2.21 |
| DecisionTreeClassifier | 0.96 | 0.60 | 0.60 | 0.94 | 0.60 |
| AdaBoostClassifier | 0.96 | 0.60 | 0.60 | 0.94 | 2.93 |
| LinearDiscriminantAnalysis | 0.95 | 0.59 | 0.59 | 0.93 | 1.21 |
| DummyClassifier | 0.92 | 0.58 | 0.58 | 0.92 | 0.39 |
| QuadraticDiscriminantAnalysis | 0.10 | 0.52 | 0.52 | 0.09 | 0.96 |
| CalibratedClassifierCV | 0.95 | 0.50 | 0.50 | 0.92 | 29.55 |
| BernoulliNB | 0.95 | 0.50 | 0.50 | 0.92 | 0.43 |
| SVC | 0.95 | 0.50 | 0.50 | 0.92 | 2.08 |
| RidgeClassifierCV | 0.95 | 0.50 | 0.50 | 0.92 | 0.48 |
| RidgeClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.46 |
| RandomForestClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.67 |
| NearestCentroid | 0.95 | 0.50 | 0.50 | 0.92 | 0.40 |
| CheckingClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.39 |
| BaggingClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.95 |
| LabelSpreading | 0.95 | 0.50 | 0.50 | 0.92 | 0.40 |
| LabelPropagation | 0.95 | 0.50 | 0.50 | 0.92 | 0.40 |
| KNeighborsClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 1.09 |
| GaussianNB | 0.95 | 0.50 | 0.50 | 0.92 | 0.45 |
| ExtraTreesClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.92 |
| LogisticRegression | 0.95 | 0.50 | 0.50 | 0.92 | 0.74 |
| LinearSVC | 0.93 | 0.49 | 0.49 | 0.91 | 7.52 |
| PassiveAggressiveClassifier | 0.93 | 0.49 | 0.49 | 0.91 | 0.88 |
| SGDClassifier | 0.93 | 0.49 | 0.49 | 0.91 | 0.44 |
| ExtraTreeClassifier | 0.93 | 0.49 | 0.49 | 0.91 | 0.40 |
| Perceptron | 0.92 | 0.49 | 0.49 | 0.91 | 0.43 |
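Many rows in the two tables above pair 0.95 accuracy with 0.50 balanced accuracy. That pattern is consistent with a model that predicts the majority class for every tweet, because balanced accuracy averages the recall of each class. The sketch below uses a hypothetical 95/5 class split (an assumption, not the real class counts) to show the effect:

```python
def accuracy(y_true, y_pred):
    """Share of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall, so each class counts equally."""
    recalls = []
    for label in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == label]
        recalls.append(sum(y_pred[i] == label for i in idx) / len(idx))
    return sum(recalls) / len(recalls)


# Hypothetical split: 95 negative tweets, 5 positive (not the real class counts)
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100  # a model that always predicts the majority class

print(accuracy(y_true, y_pred))           # 0.95
print(balanced_accuracy(y_true, y_pred))  # 0.5
```

For this reason, balanced accuracy and ROC AUC are more informative than plain accuracy when comparing the rows.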

I used Snorkel's weak supervision approach to train an intermediate generative model to label the unlabelled data, and then trained a discriminative model to classify the tweets. The performance of the final discriminative model is summarized below:

| Model | Accuracy | Balanced Accuracy | ROC AUC | F1 Score | Time Taken |
|---|---|---|---|---|---|
| LGBMClassifier | 0.43 | 0.61 | 0.61 | 0.56 | 1.64 |
| XGBClassifier | 0.41 | 0.60 | 0.61 | 0.53 | 3.82 |
| ExtraTreesClassifier | 0.20 | 0.57 | 0.59 | 0.25 | 0.79 |
| RandomForestClassifier | 0.16 | 0.56 | 0.57 | 0.20 | 0.57 |
| DecisionTreeClassifier | 0.51 | 0.55 | 0.57 | 0.63 | 0.17 |
| DummyClassifier | 0.26 | 0.51 | 0.53 | 0.36 | 0.07 |
| ExtraTreeClassifier | 0.42 | 0.51 | 0.51 | 0.55 | 0.09 |
| CalibratedClassifierCV | 0.07 | 0.51 | 0.51 | 0.03 | 26.32 |
| CheckingClassifier | 0.95 | 0.50 | 0.50 | 0.92 | 0.07 |
| BaggingClassifier | 0.40 | 0.50 | 0.54 | 0.53 | 0.76 |
| SGDClassifier | 0.38 | 0.48 | 0.49 | 0.51 | 0.39 |
| AdaBoostClassifier | 0.29 | 0.44 | 0.50 | 0.41 | 0.74 |
| Perceptron | 0.42 | 0.41 | 0.42 | 0.56 | 0.16 |
| KNeighborsClassifier | 0.07 | 0.41 | 0.42 | 0.05 | 0.32 |
| PassiveAggressiveClassifier | 0.32 | 0.36 | 0.37 | 0.44 | 0.36 |
| GaussianNB | 0.32 | 0.36 | 0.37 | 0.44 | 0.10 |
| LinearSVC | 0.29 | 0.34 | 0.42 | 0.42 | 6.62 |
| NearestCentroid | 0.27 | 0.33 | 0.38 | 0.39 | 0.10 |
| RidgeClassifier | 0.27 | 0.33 | 0.46 | 0.40 | 0.15 |
| SVC | 0.26 | 0.33 | 0.33 | 0.37 | 1.76 |
| BernoulliNB | 0.25 | 0.32 | 0.32 | 0.36 | 0.09 |
| RidgeClassifierCV | 0.21 | 0.30 | 0.39 | 0.31 | 0.29 |
| LinearDiscriminantAnalysis | 0.33 | 0.27 | 0.43 | 0.47 | 0.70 |
| QuadraticDiscriminantAnalysis | 0.09 | 0.14 | 0.57 | 0.15 | 0.36 |
| LabelSpreading | 0.01 | 0.10 | 0.53 | 0.01 | 0.12 |
| LabelPropagation | 0.00 | 0.00 | 0.50 | 0.00 | 0.56 |

The performance is worse than that of the supervised models, which could be due to the inadequacy of my labeling functions. I need to invest more time in designing better labeling functions to see a real improvement in performance.
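To make the idea of a labeling function concrete, here is a minimal sketch in plain Python. The keyword rules are hypothetical and are not the ones used in the notebook. Snorkel's label model learns how much to trust each function; a simple majority vote is used here as a stand-in for that step.

```python
from collections import Counter

ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1


# Hypothetical keyword-based labeling functions (not the ones used in the notebook)
def lf_boycott(text):
    return NEGATIVE if "boycott" in text else ABSTAIN


def lf_support(text):
    return POSITIVE if "support" in text or "love" in text else ABSTAIN


def lf_shame(text):
    return NEGATIVE if "shame" in text else ABSTAIN


LFS = [lf_boycott, lf_support, lf_shame]


def majority_vote(text, lfs=LFS):
    """Combine labeling-function votes; abstain when there are no votes or a tie."""
    votes = Counter(v for v in (lf(text) for lf in lfs) if v != ABSTAIN)
    if not votes:
        return ABSTAIN
    ranked = votes.most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


for tweet in ["boycott tanishq shame", "i love and support tanishq",
              "support the boycott", "new ad"]:
    print(tweet, "->", majority_vote(tweet))
```

The third toy tweet shows a typical weakness of keyword rules: "support the boycott" triggers conflicting votes, so the sketch abstains rather than guess.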

Since the data is highly imbalanced, I tried SMOTE to synthetically oversample the minority class, together with undersampling of the majority class.
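SMOTE creates each synthetic minority sample by interpolating between a minority point and one of its nearest minority-class neighbours. The notebook relies on imblearn for this; the pure Python sketch below only illustrates the interpolation step on invented 2-D points, and the function name and parameters are assumptions. Majority-class undersampling is not shown.

```python
import random


def smote_like(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points by interpolating between a minority
    sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared distance to `base`
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbour)))
    return synthetic


# Toy 2-D minority-class points, invented for illustration
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_like(minority, n_new=4)
print(len(minority) + len(new_points))  # 8
```

Because every synthetic point lies on a segment between two real minority points, the new samples stay inside the region the minority class already occupies.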

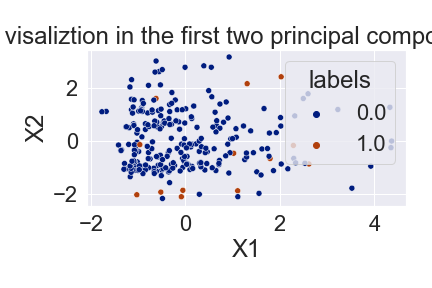

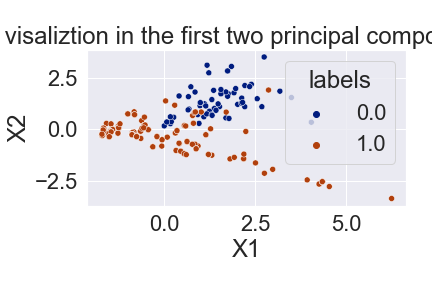

Here is the PCA projection of the data before and after applying SMOTE:

Before->

After->

Classifier performance after training on the SMOTE-processed data:

In terms of AUC, SMOTE appears to be the most effective way to tackle this particular dataset.