PRIscope is a Python-based tool that analyzes the history of code changes in open-source repositories, primarily to address software supply chain risks. It helps identify potential security risks or malicious code modifications by examining merged pull requests with AI-powered code analysis.

IMPORTANT DISCLAIMER: PRIscope's effectiveness may be limited when using models with small context window sizes. For optimal performance, please refer to the "Ollama Configuration for Larger Context Windows" section below.

- Fetches and analyzes the most recent merged pull requests from a specified GitHub repository.

- Utilizes Ollama, a local AI model server, for intelligent code analysis.

- Provides a concise summary of potential security risks for each analyzed pull request.

- Generates an optional JSON report with detailed findings.

- Can be run as a standalone Python script or within a Docker container.
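As a rough illustration of the first feature, the sketch below picks the most recent merged pull requests out of a list shaped like the GitHub REST API's pull request listing, where a PR that was closed without merging has a null `merged_at`. The function name `recent_merged` and the sample data are hypothetical; this is not necessarily how PRIscope does it.

```python
def recent_merged(pulls, limit=10):
    """Return up to `limit` merged PRs, most recently merged first.

    `pulls` is a list of dicts as returned by GitHub's
    GET /repos/{owner}/{repo}/pulls?state=closed endpoint.
    """
    # Closed-but-unmerged PRs carry merged_at == None, so drop them.
    merged = [pr for pr in pulls if pr.get("merged_at")]
    # ISO 8601 UTC timestamps sort correctly as plain strings.
    merged.sort(key=lambda pr: pr["merged_at"], reverse=True)
    return merged[:limit]


# Hypothetical sample data for illustration only.
sample = [
    {"number": 1, "merged_at": "2024-01-02T00:00:00Z"},
    {"number": 2, "merged_at": None},
    {"number": 3, "merged_at": "2024-02-01T00:00:00Z"},
]
print([pr["number"] for pr in recent_merged(sample, limit=2)])
```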

- Python 3.9 or higher

- Ollama installed and running locally via `ollama serve`

- The `mistral-small` model loaded in Ollama (recommended for its code analysis capabilities)

- Docker (optional, for containerized usage)

- Clone the repository: `git clone https://github.com/yourusername/priscope.git`, then `cd priscope`

- Install the required Python packages: `pip install -r requirements.txt`

- Ensure Ollama is installed and running with the `mistral-small` or similar model: `ollama run mistral-small`

Edit the config.json file to set your preferences:

To analyze a GitHub repository:

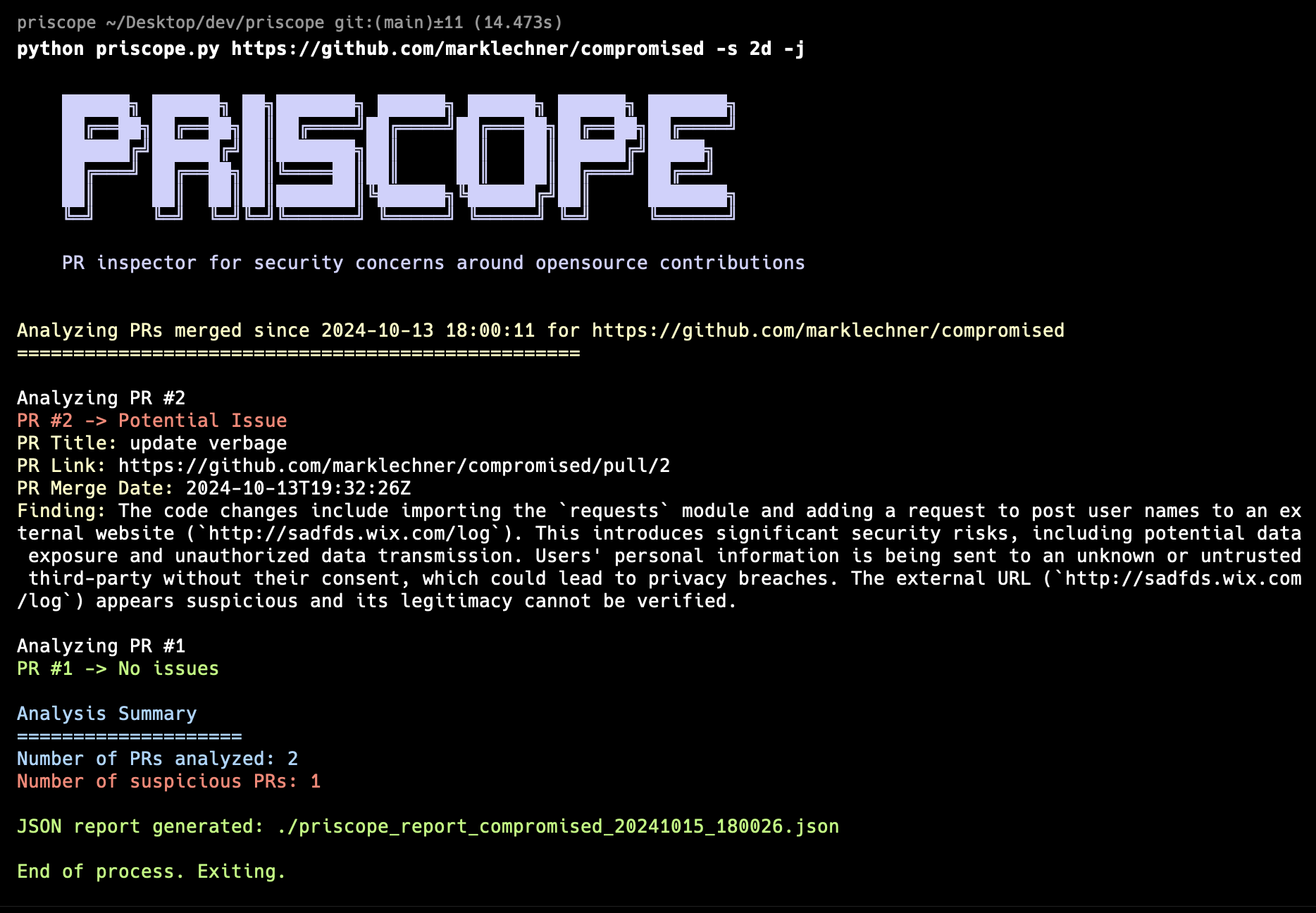

`python priscope.py https://github.com/owner/repo [-n NUMBER | -s SINCE] [-j]`

- Replace `https://github.com/owner/repo` with the target repository URL.

- `-n NUMBER` specifies the number of recent PRs to analyze (default is 10).

- `-s SINCE` analyzes PRs merged since this time (format: 2d, 3w, 1m for days, weeks, months).

- `-j` generates a JSON report (optional).
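To make the option semantics concrete, here is a minimal sketch of how a command line like the one above could be parsed with `argparse`. It is not taken from `priscope.py`: the helper names `parse_since` and `build_parser` are hypothetical, and treating a month as 30 days is an assumption.

```python
import argparse
import re
from datetime import timedelta

# Assumption: "m" (month) is approximated as 30 days.
_UNIT_DAYS = {"d": 1, "w": 7, "m": 30}


def parse_since(value):
    """Convert a string such as '2d', '3w' or '1m' into a timedelta."""
    match = re.fullmatch(r"(\d+)([dwm])", value)
    if not match:
        raise argparse.ArgumentTypeError(
            f"invalid SINCE value {value!r}; expected e.g. 2d, 3w, 1m"
        )
    count, unit = match.groups()
    return timedelta(days=int(count) * _UNIT_DAYS[unit])


def build_parser():
    parser = argparse.ArgumentParser(prog="priscope.py")
    parser.add_argument("repo_url", help="GitHub repository URL")
    # -n and -s are alternatives, mirroring [-n NUMBER | -s SINCE].
    group = parser.add_mutually_exclusive_group()
    group.add_argument("-n", type=int, default=10, metavar="NUMBER")
    group.add_argument("-s", type=parse_since, metavar="SINCE")
    parser.add_argument("-j", action="store_true", help="write a JSON report")
    return parser


args = build_parser().parse_args(["https://github.com/owner/repo", "-s", "3w", "-j"])
print(args.repo_url, args.n, args.s, args.j)
```

Using a mutually exclusive group lets `argparse` reject a command that passes both `-n` and `-s`.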

PRIscope can also be run in a Docker container for enhanced security and isolation. This method ensures that the script runs in a controlled environment with read-only access to the filesystem.

- Build the Docker image: `docker build -t priscope .`

- Run the container: `docker run --rm -v $(pwd):/app/output:rw --read-only -u $(id -u):$(id -g) priscope https://github.com/owner/repo -n 5 -j`

This command does the following:

- Mounts the current directory to `/app/output` in the container for report output.

- Sets the container's filesystem as read-only for security.

- Runs the container as the current user to ensure proper file permissions.

PRIscope provides a color-coded console output for each analyzed PR:

- Green: No issues identified

- Red: Potential security risk detected

If the JSON report option is used, a detailed report will be generated in the current directory (or /app/output when using Docker).
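The snippet below sketches one way such output could be produced: ANSI escape codes for the green/red console lines and `json.dumps` for the report. The field names (`pr`, `risky`, `summary`) and function names are hypothetical, since the actual report schema is not described here.

```python
import json

# Standard ANSI escape codes for bright green, bright red, and reset.
GREEN, RED, RESET = "\033[92m", "\033[91m", "\033[0m"


def format_result(pr_number, risky, summary):
    """Return one console line, red for a potential risk, green otherwise."""
    color = RED if risky else GREEN
    return f"{color}PR #{pr_number}: {summary}{RESET}"


def render_report(results):
    """Serialize the list of per-PR findings as pretty-printed JSON."""
    return json.dumps(results, indent=2)


# Hypothetical findings for illustration only.
results = [
    {"pr": 101, "risky": False, "summary": "No issues identified"},
    {"pr": 102, "risky": True, "summary": "Potential security risk detected"},
]
for item in results:
    print(format_result(item["pr"], item["risky"], item["summary"]))
print(render_report(results))
```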

I recommend using the mistral-small model with Ollama for several reasons:

- It demonstrates strong capabilities in code analysis and understanding.

- It offers a good balance between performance and resource requirements.

However, you can experiment with other models by changing the model_name in the config.json file.
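Switching models can also be scripted. The sketch below rewrites only the `model_name` key and leaves any other settings untouched; the helper name, the `other_setting` key, and the model name `some-other-model` are placeholders.

```python
import json


def set_model_name(config_text, model_name):
    """Return the config JSON text with `model_name` replaced."""
    config = json.loads(config_text)
    config["model_name"] = model_name
    return json.dumps(config, indent=2)


# Placeholder config content; only "model_name" is a documented key.
original = '{"model_name": "mistral-small", "other_setting": 1}'
print(set_model_name(original, "some-other-model"))
```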

By default, Ollama templates are configured with a context window of 2048 tokens. However, this can be quite small when analyzing larger PRs. It is highly recommended to extend this context window for better performance.
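To get a feel for how quickly a PR diff outgrows 2048 tokens, the sketch below applies a rough rule of thumb of about four characters per token. Both that ratio and the `reserved` budget for the prompt and the model's answer are assumptions, not values measured for mistral-small.

```python
import math


def estimate_tokens(text, chars_per_token=4):
    """Very rough token estimate; real tokenizers will differ."""
    return math.ceil(len(text) / chars_per_token)


def fits_context(diff_text, num_ctx=2048, reserved=512):
    """Check whether a diff likely fits, leaving `reserved` tokens spare."""
    return estimate_tokens(diff_text) <= num_ctx - reserved


small_diff = "x" * 4_000    # about 1,000 estimated tokens
large_diff = "x" * 40_000   # about 10,000 estimated tokens
print(fits_context(small_diff), fits_context(large_diff))
print(fits_context(large_diff, num_ctx=32768))
```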

To increase the context window size:

- Generate the model config: `ollama show mistral-small --modelfile > ollama_conf.txt`

- Edit the `ollama_conf.txt` file by adding the following line right below the `FROM ...` line: `PARAMETER num_ctx 32768`. This sets the context window to 32,768 (32k) tokens. For a 128k-token window (the maximum for mistral-small), use `131072` instead.

- Build a new model template: `ollama create mistral-small-128K -f ollama_conf.txt`

- Update your `config.json` file with the new model name: `{ "model_name": "mistral-small-128K" }`

A sample ollama_conf.txt file is included in the repository for reference.
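If you prefer to script the Modelfile edit rather than do it by hand, a sketch along these lines inserts the `PARAMETER num_ctx` line directly below the first `FROM` line. The function name `add_num_ctx` is hypothetical, and the sketch does not check whether a `num_ctx` parameter is already present.

```python
def add_num_ctx(modelfile_text, num_ctx):
    """Insert 'PARAMETER num_ctx N' right after the first FROM line."""
    out = []
    inserted = False
    for line in modelfile_text.splitlines():
        out.append(line)
        if not inserted and line.strip().upper().startswith("FROM "):
            out.append(f"PARAMETER num_ctx {num_ctx}")
            inserted = True
    if not inserted:
        raise ValueError("no FROM line found in Modelfile")
    return "\n".join(out) + "\n"


# Shortened stand-in for the output of `ollama show ... --modelfile`.
example = "# Modelfile\nFROM mistral-small\nTEMPLATE placeholder\n"
print(add_num_ctx(example, 32768))
```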

Contributions to PRIscope are welcome! Please feel free to submit pull requests, report bugs, or suggest features.

PRIscope is a tool designed to assist in identifying potential security risks, but it should not be considered a comprehensive security solution. Always perform thorough code reviews and use additional security measures in your development process.