- Survey Papers

- Task-specific Methods

- Pretraining Approaches

- Multimodal Applications (Understanding, Classification, Generation, Retrieval, Translation)

- Multimodal Datasets

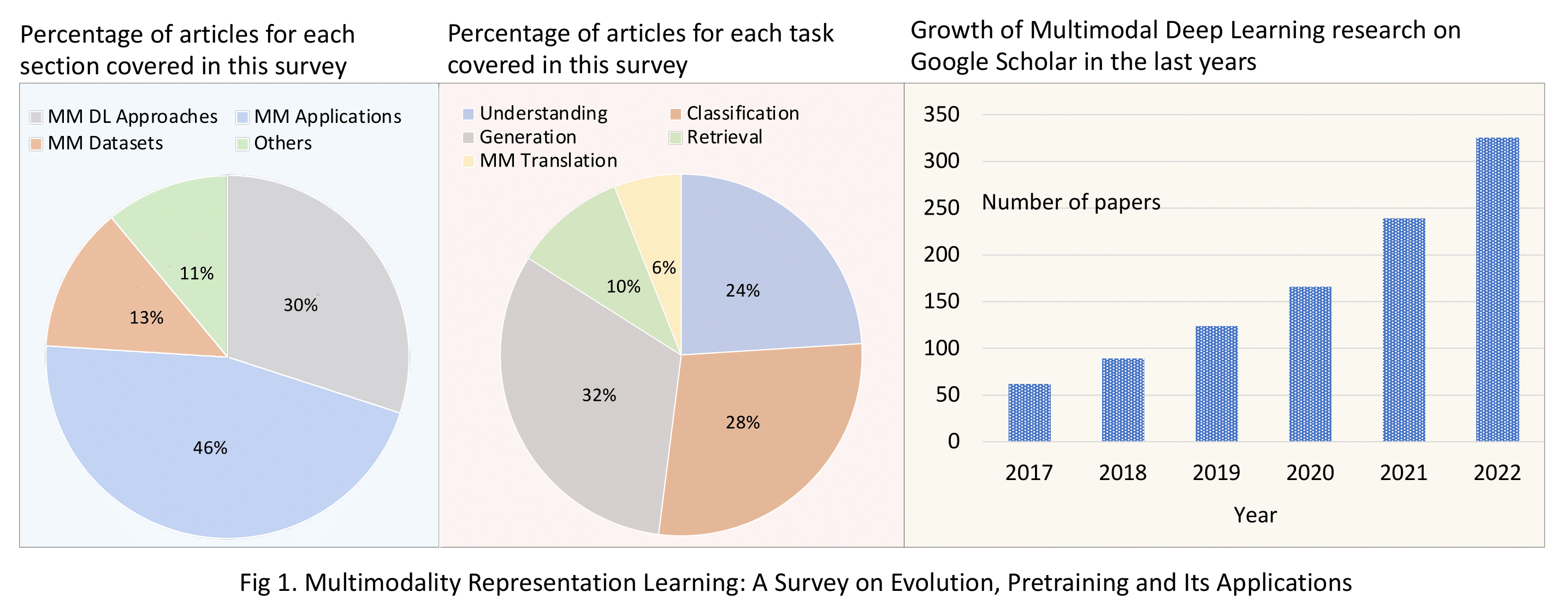

Multimodality Representation Learning: A Survey on Evolution, Pretraining and Its Applications.

Muhammad Arslan Manzoor, Sarah Albarri, Ziting Xian, Zaiqiao Meng, Preslav Nakov, and Shangsong Liang.

[PDF]

Vision-Language Pre-training: Basics, Recent Advances, and Future Trends.[17th Oct, 2022]

Zhe Gan, Linjie Li, Chunyuan Li, Lijuan Wang, Zicheng Liu, Jianfeng Gao.

[PDF]

VLP: A survey on vision-language pre-training.[18th Feb, 2022]

Feilong Chen, Duzhen Zhang, Minglun Han, Xiuyi Chen, Jing Shi, Shuang Xu, and Bo Xu.

[PDF]

A Survey of Vision-Language Pre-Trained Models.[18th Feb, 2022]

Yifan Du, Zikang Liu, Junyi Li, Wayne Xin Zhao.

[PDF]

Vision-and-Language Pretrained Models: A Survey.[15th Apr, 2022]

Siqu Long, Feiqi Cao, Soyeon Caren Han, Haiqin Yang.

[PDF]

Comprehensive reading list for Multimodal Literature

[Github]

Pre-train, Prompt, and Predict: A Systematic Survey of Prompting Methods in Natural Language Processing.[28th Jul, 2021]

Pengfei Liu, Weizhe Yuan, Jinlan Fu, Zhengbao Jiang, Hiroaki Hayashi, Graham Neubig

[PDF]

Recent Advances and Trends in Multimodal Deep Learning: A Review.[24th May, 2021]

Jabeen Summaira, Xi Li, Amin Muhammad Shoib, Songyuan Li, Jabbar Abdul.

[PDF]

Improving Image Captioning by Leveraging Intra- and Inter-layer Global Representation in Transformer Network.[9th Feb, 2021]

Jiayi Ji, Yunpeng Luo, Xiaoshuai Sun, Fuhai Chen, Gen Luo, Yongjian Wu, Yue Gao, Rongrong Ji

[PDF]

Cascaded Recurrent Neural Networks for Hyperspectral Image Classification.[Aug, 2019]

Renlong Hang, Qingshan Liu, Danfeng Hong, Pedram Ghamisi

[PDF]

Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks.[2015 NIPS]

Shaoqing Ren, Kaiming He, Ross Girshick, Jian Sun

[PDF]

Microsoft COCO: Common Objects in Context.[2014 ECCV]

Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollar, C. Lawrence Zitnick

[PDF]

Multimodal Deep Learning.[2011 ICML]

Jiquan Ngiam, Aditya Khosla, Mingyu Kim, Juhan Nam, Honglak Lee, Andrew Y. Ng

[PDF]

Extracting and composing robust features with denoising autoencoders.[5th July, 2008]

Pascal Vincent, Hugo Larochelle, Yoshua Bengio, Pierre-Antoine Manzagol

[PDF]

Multi-Gate Attention Network for Image Captioning.[13th Mar, 2021]

Weitao Jiang, Xiying Li, Haifeng Hu, Qiang Lu, and Bohong Liu

[PDF]

AMC: Attention guided Multi-modal Correlation Learning for Image Search.[2017 CVPR]

Kan Chen, Trung Bui, Chen Fang, Zhaowen Wang, Ram Nevatia

[PDF]

Video Captioning via Hierarchical Reinforcement Learning.[2018 CVPR]

Xin Wang, Wenhu Chen, Jiawei Wu, Yuan-Fang Wang, William Yang Wang

[PDF]

Gaussian Process with Graph Convolutional Kernel for Relational Learning.[14th Aug, 2021]

Jinyuan Fang, Shangsong Liang, Zaiqiao Meng, Qiang Zhang

[PDF]

Multi-Relational Graph Representation Learning with Bayesian Gaussian Process Network.[28th June, 2022]

Guanzheng Chen, Jinyuan Fang, Zaiqiao Meng, Qiang Zhang, Shangsong Liang

[PDF]

Learning Audio-Visual Speech Representation by Masked Multimodal Cluster Prediction.[5th Jan, 2022]

Bowen Shi, Wei-Ning Hsu, Kushal Lakhotia, Abdelrahman Mohamed

[PDF]

A Survey of Vision-Language Pre-Trained Models.[18th Feb, 2022]

Yifan Du, Zikang Liu, Junyi Li, Wayne Xin Zhao

[PDF]

Attention is All you Need.[2017 NIPS]

Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, Illia Polosukhin

[PDF]

VinVL: Revisiting Visual Representations in Vision-Language Models.[2021 CVPR]

Pengchuan Zhang, Xiujun Li, Xiaowei Hu, Jianwei Yang, Lei Zhang, Lijuan Wang, Yejin Choi, Jianfeng Gao

[PDF]

M6: Multi-Modality-to-Multi-Modality Multitask Mega-transformer for Unified Pretraining.[Aug, 2021]

Junyang Lin, Rui Men, An Yang, Chang Zhou, Yichang Zhang, Peng Wang, Jingren Zhou, Jie Tang, Hongxia Yang

[PDF]

AMMU: A survey of transformer-based biomedical pretrained language models.[23rd Mar, 2020]

Katikapalli Subramanyam Kalyan, Ajit Rajasekharan, Sivanesan Sangeetha

[PDF]

ELECTRA: Pre-training Text Encoders as Discriminators Rather Than Generators

Kevin Clark, Minh-Thang Luong, Quoc V. Le, Christopher D. Manning

[PDF]

RoBERTa: A Robustly Optimized BERT Pretraining Approach.[26th Jul, 2019]

Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, Veselin Stoyanov

[PDF]

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.[11th Oct, 2018]

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

[PDF]

BioBERT: a pre-trained biomedical language representation model for biomedical text mining.[10th Sep, 2019]

Jinhyuk Lee, Wonjin Yoon, Sungdong Kim, Donghyeon Kim, Sunkyu Kim, Chan Ho So, Jaewoo Kang

[PDF]

HateBERT: Retraining BERT for Abusive Language Detection in English.[23rd Oct, 2020]

Tommaso Caselli, Valerio Basile, Jelena Mitrovic, Michael Granitzer

[PDF]

InfoXLM: An Information-Theoretic Framework for Cross-Lingual Language Model Pre-Training.[15th Jul, 2020]

Zewen Chi, Li Dong, Furu Wei, Nan Yang, Saksham Singhal, Wenhui Wang, Xia Song, Xian-Ling Mao, Heyan Huang, Ming Zhou

[PDF]

Pre-training technique to localize medical BERT and enhance biomedical BERT.[14th May, 2020]

Shoya Wada, Toshihiro Takeda, Shiro Manabe, Shozo Konishi, Jun Kamohara, Yasushi Matsumura

[PDF]

Don't Stop Pretraining: Adapt Language Models to Domains and Tasks.[23rd Apr, 2020]

Suchin Gururangan, Ana Marasovic, Swabha Swayamdipta, Kyle Lo, Iz Beltagy, Doug Downey, Noah A. Smith

[PDF]

Knowledge Inheritance for Pre-trained Language Models.[28th May, 2021]

Yujia Qin, Yankai Lin, Jing Yi, Jiajie Zhang, Xu Han, Zhengyan Zhang, Yusheng Su, Zhiyuan Liu, Peng Li, Maosong Sun, Jie Zhou

[PDF]

Improving Language Understanding by Generative Pre-Training.[2018]

Alec Radford, Karthik Narasimhan, Tim Salimans, Ilya Sutskever

[PDF]

Shuffled-token Detection for Refining Pre-trained RoBERTa

Subhadarshi Panda, Anjali Agrawal, Jeewon Ha, Benjamin Bloch

[PDF]

ALBERT: A Lite BERT for Self-supervised Learning of Language Representations.[26th Sep, 2019]

Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, Radu Soricut

[PDF]

Exploring the limits of transfer learning with a unified text-to-text transformer.[1st Jan, 2020]

Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, Peter J. Liu

[PDF]

End-to-End Object Detection with Transformers.[3rd Nov, 2020]

Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, Sergey Zagoruyko

[PDF]

Deformable DETR: Deformable Transformers for End-to-End Object Detection.[8th Oct, 2020]

Xizhou Zhu, Weijie Su, Lewei Lu, Bin Li, Xiaogang Wang, Jifeng Dai

[PDF]

Unified Vision-Language Pre-Training for Image Captioning and VQA.[2020 AAAI]

Luowei Zhou, Hamid Palangi, Lei Zhang, Houdong Hu, Jason Corso, Jianfeng Gao

[PDF]

VirTex: Learning Visual Representations From Textual Annotations.[2021 CVPR]

Karan Desai, Justin Johnson

[PDF]

ERNIE-ViL: Knowledge enhanced vision-language representations through scene graphs.[2021 AAAI]

Fei Yu, Jiji Tang, Weichong Yin, Yu Sun, Hao Tian, Hua Wu, Haifeng Wang

[PDF]

OSCAR: Object-Semantics Aligned Pre-training for Vision-Language Tasks.[24th Sep, 2020]

Xiujun Li, Xi Yin, Chunyuan Li, Pengchuan Zhang, Xiaowei Hu, Lei Zhang, Lijuan Wang, Houdong Hu, Li Dong, Furu Wei, Yejin Choi, Jianfeng Gao

[PDF]

Vokenization: Improving Language Understanding with Contextualized, Visual-Grounded Supervision.[14th Oct, 2020]

Hao Tan, Mohit Bansal

[PDF]

Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models.[2015 ICCV]

Bryan A. Plummer, Liwei Wang, Chris M. Cervantes, Juan C. Caicedo, Julia Hockenmaier, Svetlana Lazebnik

[PDF]

Distributed representations of words and phrases and their compositionality.[2013 NIPS]

Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S. Corrado, Jeff Dean

[PDF]

AllenNLP: A Deep Semantic Natural Language Processing Platform.[20 Mar, 2018]

Matt Gardner, Joel Grus, Mark Neumann, Oyvind Tafjord, Pradeep Dasigi, Nelson Liu, Matthew Peters, Michael Schmitz, Luke Zettlemoyer

[PDF]

Climbing towards NLU: On Meaning, Form, and Understanding in the Age of Data.[Jul, 2020]

Emily M. Bender, Alexander Koller

[PDF]

Experience Grounds Language.[21st Apr, 2020]

Yonatan Bisk, Ari Holtzman, Jesse Thomason, Jacob Andreas, Yoshua Bengio, Joyce Chai, Mirella Lapata, Angeliki Lazaridou, Jonathan May, Aleksandr Nisnevich, Nicolas Pinto, Joseph Turian

[PDF]

HuBERT: How Much Can a Bad Teacher Benefit ASR Pre-Training?

Wei-Ning Hsu, Yao-Hung Hubert Tsai, Benjamin Bolte, Ruslan Salakhutdinov, Abdelrahman Mohamed

[PDF]

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.[11th Oct, 2018]

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

[PDF]

Improving Language Understanding by Generative Pre-Training.[2018]

Alec Radford, Karthik Narasimhan, Tim Salimans, Ilya Sutskever

[PDF]

End-to-End Object Detection with Transformers.[3rd Nov, 2020]

Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, Sergey Zagoruyko

[PDF]

UNITER: UNiversal Image-TExt Representation Learning.[24th Sep, 2020]

Yen-Chun Chen, Linjie Li, Licheng Yu, Ahmed El Kholy, Faisal Ahmed, Zhe Gan, Yu Cheng, Jingjing Liu

[PDF]

UniT: Multimodal Multitask Learning with a Unified Transformer.[2021 ICCV]

Ronghang Hu, Amanpreet Singh

[PDF]

VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text.[2021 NIPS]

Hassan Akbari, Liangzhe Yuan, Rui Qian, Wei-Hong Chuang, Shih-Fu Chang, Yin Cui, Boqing Gong

[PDF]

OFA: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework.[2022 ICML]

Peng Wang, An Yang, Rui Men, Junyang Lin, Shuai Bai, Zhikang Li, Jianxin Ma, Chang Zhou, Jingren Zhou, Hongxia Yang

[PDF]

BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension.[29th Oct, 2019]

Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Ves Stoyanov, Luke Zettlemoyer

[PDF]

Learning Audio-Visual Speech Representation by Masked Multimodal Cluster Prediction.[5th Jan, 2022]

Bowen Shi, Wei-Ning Hsu, Kushal Lakhotia, Abdelrahman Mohamed

[PDF]

Self-Supervised Multimodal Opinion Summarization.[27th May, 2021]

Jinbae Im, Moonki Kim, Hoyeop Lee, Hyunsouk Cho, Sehee Chung

[PDF]

HuBERT: How Much Can a Bad Teacher Benefit ASR Pre-Training?

Wei-Ning Hsu, Yao-Hung Hubert Tsai, Benjamin Bolte, Ruslan Salakhutdinov, Abdelrahman Mohamed

[PDF]

LayoutLMv2: Multi-modal Pre-training for Visually-Rich Document Understanding.[29th Dec, 2020]

Yang Xu, Yiheng Xu, Tengchao Lv, Lei Cui, Furu Wei, Guoxin Wang, Yijuan Lu, Dinei Florencio, Cha Zhang, Wanxiang Che, Min Zhang, Lidong Zhou

[PDF]

StrucTexT: Structured text understanding with multi-modal transformers.[17th Oct, 2021]

Yulin Li, Yuxi Qian, Yuechen Yu, Xiameng Qin, Chengquan Zhang, Yan Liu, Kun Yao, Junyu Han, Jingtuo Liu, Errui Ding

[PDF]

ICDAR2019 Competition on Scanned Receipt OCR and Information Extraction.[20th Sep, 2019]

Zheng Huang, Kai Chen, Jianhua He, Xiang Bai, Dimosthenis Karatzas, Shijian Lu, C. V. Jawahar

[PDF]

FUNSD: A Dataset for Form Understanding in Noisy Scanned Documents.[20th Sep, 2019]

Guillaume Jaume, Hazim Kemal Ekenel, Jean-Philippe Thiran

[PDF]

XYLayoutLM: Towards Layout-Aware Multimodal Networks for Visually-Rich Document Understanding.[2022 CVPR]

Zhangxuan Gu, Changhua Meng, Ke Wang, Jun Lan, Weiqiang Wang, Ming Gu, Liqing Zhang

[PDF]

Multistage Fusion with Forget Gate for Multimodal Summarization in Open-Domain Videos.[2022 EMNLP]

Nayu Liu, Xian Sun, Hongfeng Yu, Wenkai Zhang, Guangluan Xu

[PDF]

Multimodal Abstractive Summarization for How2 Videos.[19th Jun, 2019]

Shruti Palaskar, Jindrich Libovicky, Spandana Gella, Florian Metze

[PDF]

Vision guided generative pre-trained language models for multimodal abstractive summarization.[6th Sep, 2021]

Tiezheng Yu, Wenliang Dai, Zihan Liu, Pascale Fung

[PDF]

How2: A Large-scale Dataset for Multimodal Language Understanding.[1st Nov, 2018]

Ramon Sanabria, Ozan Caglayan, Shruti Palaskar, Desmond Elliott, Loic Barrault, Lucia Specia, Florian Metze

[PDF]

wav2vec 2.0: A framework for self-supervised learning of speech representations.[2020 NIPS]

Alexei Baevski, Yuhao Zhou, Abdelrahman Mohamed, Michael Auli

[PDF]

DeCoAR 2.0: Deep Contextualized Acoustic Representations with Vector Quantization.[11th Dec, 2020]

Shaoshi Ling, Yuzong Liu

[PDF]

LRS3-TED: a large-scale dataset for visual speech recognition.[3rd Sep, 2018]

Triantafyllos Afouras, Joon Son Chung, Andrew Zisserman

[PDF]

Recurrent Neural Network Transducer for Audio-Visual Speech Recognition.[Dec 2019]

Takaki Makino, Hank Liao, Yannis Assael, Brendan Shillingford, Basilio Garcia, Otavio Braga, Olivier Siohan

[PDF]

Learning Individual Speaking Styles for Accurate Lip to Speech Synthesis.[2020 CVPR]

K R Prajwal, Rudrabha Mukhopadhyay, Vinay P. Namboodiri, C.V. Jawahar

[PDF]

On the importance of super-Gaussian speech priors for machine-learning based speech enhancement.[28th Nov, 2017]

Robert Rehr, Timo Gerkmann

[PDF]

Active appearance models.[1998 ECCV]

T. F. Cootes, G. J. Edwards, C. J. Taylor

[PDF]

Leveraging category information for single-frame visual sound source separation.[20th Jul, 2021]

Lingyu Zhu, Esa Rahtu

[PDF]

The Sound of Pixels.[2018 ECCV]

Hang Zhao, Chuang Gan, Andrew Rouditchenko, Carl Vondrick, Josh McDermott, Antonio Torralba

[PDF]

VQA: Visual Question Answering.[2015 ICCV]

Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh

[PDF]

Topic-based content and sentiment analysis of Ebola virus on Twitter and in the news.[1st Jul, 2016]

Erin Hea-Jin Kim, Yoo Kyung Jeong, Yuyong Kim, Keun Young Kang, Min Song

[PDF]

On the Role of Text Preprocessing in Neural Network Architectures: An Evaluation Study on Text Categorization and Sentiment Analysis.[6th Jul, 2017]

Jose Camacho-Collados, Mohammad Taher Pilehvar

[PDF]

Market strategies used by processed food manufacturers to increase and consolidate their power: a systematic review and document analysis.[26th Jan, 2021]

Benjamin Wood, Owain Williams, Vijaya Nagarajan, Gary Sacks

[PDF]

SWAFN: Sentimental words aware fusion network for multimodal sentiment analysis.[2020 COLING]

Minping Chen, Xia Li

[PDF]

Adaptive online event detection in news streams.[15th Dec, 2017]

Linmei Hu, Bin Zhang, Lei Hou, Juanzi Li

[PDF]

Multi-source multimodal data and deep learning for disaster response: A systematic review.[27th Nov, 2021]

Nilani Algiriyage, Raj Prasanna, Kristin Stock, Emma E. H. Doyle, David Johnston

[PDF]

A Survey of Data Representation for Multi-Modality Event Detection and Evolution.[2nd Nov, 2021]

Kejing Xiao, Zhaopeng Qian, Biao Qin.

[PDF]

CrisisMMD: Multimodal Twitter datasets from natural disasters.[15th Jun, 2018]

Firoj Alam, Ferda Ofli, Muhammad Imran

[PDF]

Multi-modal generative adversarial networks for traffic event detection in smart cities.[1st Sep, 2021]

Qi Chen, Wei Wang, Kaizhu Huang, Suparna De, Frans Coenen

[PDF]

Proppy: Organizing the news based on their propagandistic content.[5th Sep, 2019]

Alberto Barron-Cedeno, Israa Jaradat, Giovanni Da San Martino, Preslav Nakov

[PDF]

Fine-grained analysis of propaganda in news article.[Nov 2019]

Giovanni Da San Martino, Seunghak Yu, Alberto Barron-Cedeno, Rostislav Petrov, Preslav Nakov

[PDF]

Multimodal Fusion with Recurrent Neural Networks for Rumor Detection on Microblogs.[Oct, 2017]

Zhiwei Jin, Juan Cao, Han Guo, Yongdong Zhang, Jiebo Luo

[PDF]

SAFE: Similarity-Aware Multi-modal Fake News Detection.[6th May, 2020]

Xinyi Zhou, Jindi Wu, Reza Zafarani

[PDF]

From Recognition to Cognition: Visual Commonsense Reasoning.[2019 CVPR]

Rowan Zellers, Yonatan Bisk, Ali Farhadi, Yejin Choi

[PDF]

KVL-BERT: Knowledge Enhanced Visual-and-Linguistic BERT for visual commonsense reasoning.[27th Oct, 2021]

Dandan Song, Siyi Ma, Zhanchen Sun, Sicheng Yang, Lejian Liao

[PDF]

LXMERT: Learning Cross-Modality Encoder Representations from Transformers.[20th Aug, 2019]

Hao Tan, Mohit Bansal

[PDF]

Pixel-BERT: Aligning Image Pixels with Text by Deep Multi-Modal Transformers.[2 Apr, 2020]

Zhicheng Huang, Zhaoyang Zeng, Bei Liu, Dongmei Fu, Jianlong Fu

[PDF]

Vision-Language Navigation With Self-Supervised Auxiliary Reasoning Tasks.[2020 CVPR]

Fengda Zhu, Yi Zhu, Xiaojun Chang, Xiaodan Liang

[PDF]

Recent advances and trends in multimodal deep learning: A review.[24th May, 2021]

Jabeen Summaira, Xi Li, Amin Muhammad Shoib, Songyuan Li, Jabbar Abdul

[PDF]

VQA: Visual Question Answering.[2015 ICCV]

Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh

[PDF]

Microsoft COCO: Common Objects in Context.[2014 ECCV]

Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollar, C. Lawrence Zitnick

[PDF]

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.[11th Oct, 2018]

Jacob Devlin, Ming-Wei Chang, Kenton Lee, Kristina Toutanova

[PDF]

Distributed Representations of Words and Phrases and their Compositionality.[2013 NIPS]

Tomas Mikolov, Ilya Sutskever, Kai Chen, Greg S. Corrado, Jeff Dean

[PDF]

LRS3-TED: a large-scale dataset for visual speech recognition.[3rd Sep, 2018]

Triantafyllos Afouras, Joon Son Chung, Andrew Zisserman

[PDF]

A lip sync expert is all you need for speech to lip generation in the wild.[12th Oct, 2019]

K R Prajwal, Rudrabha Mukhopadhyay, Vinay P. Namboodiri, C.V. Jawahar

[PDF]

Unified Vision-Language Pre-Training for Image Captioning and VQA.[2020 AAAI]

Luowei Zhou, Hamid Palangi, Lei Zhang, Houdong Hu, Jason Corso, Jianfeng Gao

[PDF]

Show and Tell: A Neural Image Caption Generator.[2015 CVPR]

Oriol Vinyals, Alexander Toshev, Samy Bengio, Dumitru Erhan

[PDF]

SCA-CNN: Spatial and Channel-Wise Attention in Convolutional Networks for Image Captioning.[2017 CVPR]

Long Chen, Hanwang Zhang, Jun Xiao, Liqiang Nie, Jian Shao, Wei Liu, Tat-Seng Chua

[PDF]

Self-Critical Sequence Training for Image Captioning.[2017 CVPR]

Steven J. Rennie, Etienne Marcheret, Youssef Mroueh, Jerret Ross, Vaibhava Goel

[PDF]

Visual question answering: A survey of methods and datasets.[Oct, 2017]

Qi Wu, Damien Teney, Peng Wang, Chunhua Shen, Anthony Dick, Anton van den Hengel

[PDF]

How to find a good image-text embedding for remote sensing visual question answering?[24th Sep, 2021]

Christel Chappuis, Sylvain Lobry, Benjamin Kellenberger, Bertrand Le Saux, Devis Tuia

[PDF]

An Improved Attention for Visual Question Answering.[2021 CVPR]

Tanzila Rahman, Shih-Han Chou, Leonid Sigal, Giuseppe Carenini

[PDF]

Analyzing Compositionality of Visual Question Answering.[2019 NIPS]

Sanjay Subramanian, Sameer Singh, Matt Gardner

[PDF]

OK-VQA: A Visual Question Answering Benchmark Requiring External Knowledge.[2019 CVPR]

Kenneth Marino, Mohammad Rastegari, Ali Farhadi, Roozbeh Mottaghi

[PDF]

MultiBench: Multiscale Benchmarks for Multimodal Representation Learning.[15th Jul, 2021]

Paul Pu Liang, Yiwei Lyu, Xiang Fan, Zetian Wu, Yun Cheng, Jason Wu, Leslie Chen, Peter Wu, Michelle A. Lee, Yuke Zhu, Ruslan Salakhutdinov, Louis-Philippe Morency

[PDF]

Benchmarking Multimodal AutoML for Tabular Data with Text Fields.[4th Nov, 2021]

Xingjian Shi, Jonas Mueller, Nick Erickson, Mu Li, Alexander J. Smola

[PDF]

Multimodal Explanations: Justifying Decisions and Pointing to the Evidence.[2018 CVPR]

Dong Huk Park, Lisa Anne Hendricks, Zeynep Akata, Anna Rohrbach, Bernt Schiele, Trevor Darrell, Marcus Rohrbach

[PDF]

Don't Just Assume; Look and Answer: Overcoming Priors for Visual Question Answering.[2018 CVPR]

Aishwarya Agrawal, Dhruv Batra, Devi Parikh, Aniruddha Kembhavi

[PDF]

Generative Adversarial Text to Image Synthesis.[2016 ICML]

Scott Reed, Zeynep Akata, Xinchen Yan, Lajanugen Logeswaran, Bernt Schiele, Honglak Lee

[PDF]

The Caltech-UCSD Birds-200-2011 Dataset.

[PDF]

AttnGAN: Fine-Grained Text to Image Generation With Attentional Generative Adversarial Networks.[2018 CVPR]

Tao Xu, Pengchuan Zhang, Qiuyuan Huang, Han Zhang, Zhe Gan, Xiaolei Huang, Xiaodong He

[PDF]

LipSound: Neural Mel-spectrogram Reconstruction for Lip Reading.[15 Sep, 2019]

Leyuan Qu, Cornelius Weber, Stefan Wermter

[PDF]

The Conversation: Deep Audio-Visual Speech Enhancement.[11th Apr, 2018]

Triantafyllos Afouras, Joon Son Chung, Andrew Zisserman

[PDF]

TCD-TIMIT: An Audio-Visual Corpus of Continuous Speech.[5th May, 2015]

Naomi Harte, Eoin Gillen

[PDF]

Deep Voice 3: Scaling Text-to-Speech with Convolutional Sequence Learning.[20 Oct, 2017]

Wei Ping, Kainan Peng, Andrew Gibiansky, Sercan O. Arık, Ajay Kannan, Sharan Narang

[PDF]

Natural TTS Synthesis by Conditioning Wavenet on MEL Spectrogram Predictions.[15th Apr, 2018]

Jonathan Shen, Ruoming Pang, Ron J. Weiss, Mike Schuster, Navdeep Jaitly, Zongheng Yang, Zhifeng Chen, Yu Zhang, Yuxuan Wang, RJ Skerry-Ryan, Rif A. Saurous, Yannis Agiomyrgiannakis, Yonghui Wu

[PDF]

Vid2speech: Speech reconstruction from silent video.[5th Mar, 2017]

Ariel Ephrat, Shmuel Peleg

[PDF]

Lip2Audspec: Speech Reconstruction from Silent Lip Movements Video.[15th Apr, 2018]

Hassan Akbari, Himani Arora, Liangliang Cao, Nima Mesgarani

[PDF]

Video-Driven Speech Reconstruction using Generative Adversarial Networks.[14th Jun, 2019]

Konstantinos Vougioukas, Pingchuan Ma, Stavros Petridis, Maja Pantic

[PDF]

ViLBERT: Pretraining Task-Agnostic Visiolinguistic Representations for Vision-and-Language Tasks.[2019 NIPS]

Jiasen Lu, Dhruv Batra, Devi Parikh, Stefan Lee

[PDF]

Learning Robust Patient Representations from Multi-modal Electronic Health Records: A Supervised Deep Learning Approach.[2021]

Leman Akoglu, Evimaria Terzi, Xianli Zhang, Buyue Qian, Yang Liu, Xi Chen, Chong Guan, Chen Li

[PDF]

Referring Expression Comprehension: A Survey of Methods and Datasets.[7th Dec, 2020]

Yanyuan Qiao, Chaorui Deng, Qi Wu

[PDF]

VL-BERT: Pre-training of Generic Visual-Linguistic Representations.[22nd Aug, 2019]

Weijie Su, Xizhou Zhu, Yue Cao, Bin Li, Lewei Lu, Furu Wei, Jifeng Dai

[PDF]

Clinically Accurate Chest X-Ray Report Generation.[2019 MLHC]

Guanxiong Liu, Tzu-Ming Harry Hsu, Matthew McDermott, Willie Boag, Wei-Hung Weng, Peter Szolovits, Marzyeh Ghassemi

[PDF]

Deep Residual Learning for Image Recognition.[2016 CVPR]

Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun

[PDF]

Probing the Need for Visual Context in Multimodal Machine Translation.[20th Mar, 2019]

Ozan Caglayan, Pranava Madhyastha, Lucia Specia, Loic Barrault

[PDF]

Neural Machine Translation by Jointly Learning to Align and Translate.[1st Sep, 2014]

Dzmitry Bahdanau, Kyunghyun Cho, Yoshua Bengio

[PDF]

Multi-modal neural machine translation with deep semantic interactions.[Apr, 2021]

Jinsong Su, Jinchang Chen, Hui Jiang, Chulun Zhou, Huan Lin, Yubin Ge, Qingqiang Wu, Yongxuan Lai

[PDF]

VQA: Visual Question Answering.[2015 ICCV]

Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh

[PDF]

Microsoft COCO: Common Objects in Context.[2014 ECCV]

Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollar, C. Lawrence Zitnick

[PDF]

Pre-training technique to localize medical BERT and enhance biomedical BERT.[14th May, 2020]

Shoya Wada, Toshihiro Takeda, Shiro Manabe, Shozo Konishi, Jun Kamohara, Yasushi Matsumura

[PDF]

Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models.[2015 ICCV]

Bryan A. Plummer, Liwei Wang, Chris M. Cervantes, Juan C. Caicedo, Julia Hockenmaier, Svetlana Lazebnik

[PDF]

ICDAR2019 Competition on Scanned Receipt OCR and Information Extraction.[20th Sep, 2019]

Zheng Huang, Kai Chen, Jianhua He, Xiang Bai, Dimosthenis Karatzas, Shijian Lu, C. V. Jawahar

[PDF]

FUNSD: A Dataset for Form Understanding in Noisy Scanned Documents.[20th Sep, 2019]

Guillaume Jaume, Hazim Kemal Ekenel, Jean-Philippe Thiran

[PDF]

How2: A Large-scale Dataset for Multimodal Language Understanding.[1st Nov, 2018]

Ramon Sanabria, Ozan Caglayan, Shruti Palaskar, Desmond Elliott, Loic Barrault, Lucia Specia, Florian Metze

[PDF]

Learning Individual Speaking Styles for Accurate Lip to Speech Synthesis.[2020 CVPR]

K R Prajwal, Rudrabha Mukhopadhyay, Vinay P. Namboodiri, C.V. Jawahar

[PDF]

The Sound of Pixels.[2018 ECCV]

Hang Zhao, Chuang Gan, Andrew Rouditchenko, Carl Vondrick, Josh McDermott, Antonio Torralba

[PDF]

CrisisMMD: Multimodal Twitter datasets from natural disasters.[15th Jun, 2018]

Firoj Alam, Ferda Ofli, Muhammad Imran

[PDF]

From Recognition to Cognition: Visual Commonsense Reasoning.[2019 CVPR]

Rowan Zellers, Yonatan Bisk, Ali Farhadi, Yejin Choi

[PDF]

The Caltech-UCSD Birds-200-2011 Dataset.

[PDF]

Framing Image Description as a Ranking Task: Data, Models and Evaluation Metrics.[30th Aug, 2013]

M. Hodosh, P. Young, J. Hockenmaier

[PDF]

Multimodal Language Analysis in the Wild: CMU-MOSEI Dataset and Interpretable Dynamic Fusion Graph.[Jul, 2018]

AmirAli Bagher Zadeh, Paul Pu Liang, Soujanya Poria, Erik Cambria, Louis-Philippe Morency

[PDF]

MIMIC-III, a freely accessible critical care database.[24th May, 2016]

Alistair E.W. Johnson, Tom J. Pollard, Li-wei H. Lehman, Mengling Feng, Mohammad Ghassemi, Benjamin Moody, Leo Anthony Celi & Roger G. Mark

[PDF]

Fashion 200K Benchmark

[Github]

Indoor scene segmentation using a structured light sensor.[Nov 2011]

Nathan Silberman, Rob Fergus

[PDF]

Indoor Segmentation and Support Inference from RGBD Images.[2012 ECCV]

Nathan Silberman, Derek Hoiem, Pushmeet Kohli, Rob Fergus

[PDF]

Good News, Everyone! Context Driven Entity-Aware Captioning for News Images.[2019 CVPR]

Ali Furkan Biten, Lluis Gomez, Marcal Rusinol, Dimosthenis Karatzas

[PDF]

MSR-VTT: A Large Video Description Dataset for Bridging Video and Language.[2016 CVPR]

Jun Xu, Tao Mei, Ting Yao, Yong Rui

[PDF]

Video Question Answering via Gradually Refined Attention over Appearance and Motion.[Oct, 2017]

Dejing Xu, Zhou Zhao, Jun Xiao, Fei Wu, Hanwang Zhang, Xiangnan He, Yueting Zhuang

[PDF]

TGIF-QA: Toward Spatio-Temporal Reasoning in Visual Question Answering.[2017 CVPR]

Yunseok Jang, Yale Song, Youngjae Yu, Youngjin Kim, Gunhee Kim

[PDF]

Multi-Target Embodied Question Answering.[2019 CVPR]

Licheng Yu, Xinlei Chen, Georgia Gkioxari, Mohit Bansal, Tamara L. Berg, Dhruv Batra

[PDF]

VideoNavQA: Bridging the Gap between Visual and Embodied Question Answering.[14th Aug, 2019]

Catalina Cangea, Eugene Belilovsky, Pietro Lio, Aaron Courville

[PDF]

An Analysis of Visual Question Answering Algorithms.[2017 ICCV]

Kushal Kafle, Christopher Kanan

[PDF]

nuScenes: A Multimodal Dataset for Autonomous Driving.[2020 CVPR]

Holger Caesar, Varun Bankiti, Alex H. Lang, Sourabh Vora, Venice Erin Liong, Qiang Xu, Anush Krishnan, Yu Pan, Giancarlo Baldan, Oscar Beijbom

[PDF]

Automated Flower Classification over a Large Number of Classes.[20th Jan, 2009]

Maria-Elena Nilsback, Andrew Zisserman

[PDF]

MOSI: Multimodal Corpus of Sentiment Intensity and Subjectivity Analysis in Online Opinion Videos.[20th Jun, 2016]

Amir Zadeh, Rowan Zellers, Eli Pincus, Louis-Philippe Morency

[PDF]

Can We Read Speech Beyond the Lips? Rethinking RoI Selection for Deep Visual Speech Recognition.[18th Jan, 2020]

Yuanhang Zhang, Shuang Yang, Jingyun Xiao, Shiguang Shan, Xilin Chen

[PDF]

The MIT Stata Center dataset.[2013]

Maurice Fallon, Hordur Johannsson, Michael Kaess and John J Leonard

[PDF]

data2vec: A General Framework for Self-supervised Learning in Speech, Vision and Language.[2022 ICML]

Alexei Baevski, Wei-Ning Hsu, Qiantong Xu, Arun Babu, Jiatao Gu, Michael Auli

[PDF]

FLAVA: A Foundational Language and Vision Alignment Model.[2022 CVPR]

Amanpreet Singh, Ronghang Hu, Vedanuj Goswami, Guillaume Couairon, Wojciech Galuba, Marcus Rohrbach, Douwe Kiela

[PDF]

UC2: Universal Cross-Lingual Cross-Modal Vision-and-Language Pre-Training.[2021 CVPR]

Mingyang Zhou, Luowei Zhou, Shuohang Wang, Yu Cheng, Linjie Li, Zhou Yu, Jingjing Liu

[PDF]

If you find the listing and survey useful for your work, please cite the paper:

@article{manzoor2023multimodality,

title={Multimodality Representation Learning: A Survey on Evolution, Pretraining and Its Applications},

author={Manzoor, Muhammad Arslan and Albarri, Sarah and Xian, Ziting and Meng, Zaiqiao and Nakov, Preslav and Liang, Shangsong},

journal={arXiv preprint arXiv:2302.00389},

year={2023}

}