| services | author |
|---|---|
| aks, container-registry, azure-monitor, storage, virtual-network, virtual-machines, private-link, templates, terraform, devops | paolosalvatori |

This sample shows how to create a private AKS cluster using:

- Terraform as infrastructure as code (IaC) tool to build, change, and version the infrastructure on Azure in a safe, repeatable, and efficient way.

- Azure DevOps Pipelines to automate the deployment and undeployment of the entire infrastructure on multiple environments on the Azure platform.

In a private AKS cluster, the API server endpoint is not exposed via a public IP address. Hence, to manage the API server, you will need to use a virtual machine that has access to the AKS cluster's Azure Virtual Network (VNet). This sample deploys a jumpbox virtual machine in the hub virtual network peered with the virtual network that hosts the private AKS cluster. There are several options for establishing network connectivity to the private cluster:

- Create a virtual machine in the same Azure Virtual Network (VNet) as the AKS cluster.

- Use a virtual machine in a separate network and set up virtual network peering. See the section below for more information on this option.

- Use an ExpressRoute or VPN connection.

Creating a virtual machine in the same virtual network as the AKS cluster or in a peered virtual network is the easiest option. ExpressRoute and VPNs add costs and require additional networking complexity. Virtual network peering requires you to plan your network CIDR ranges to ensure there are no overlapping ranges. For more information, see Create a private Azure Kubernetes Service cluster. For more information on Azure Private Link, see What is Azure Private Link?.
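As a rough illustration of the peering option, the following Terraform sketch declares two virtual networks with non-overlapping address spaces and peers them in both directions. The resource names, address ranges, and variables are assumptions made for this example and are not taken from the modules in this sample.

```hcl
# Credentials and subscription are assumed to come from the environment.
provider "azurerm" {
  features {}
}

# Hypothetical inputs; the sample's modules may use different names.
variable "location" {
  type    = string
  default = "westeurope"
}

variable "resource_group_name" {
  type = string
}

# The two address spaces must not overlap, otherwise the peering is rejected.
resource "azurerm_virtual_network" "hub" {
  name                = "HubVNet"
  location            = var.location
  resource_group_name = var.resource_group_name
  address_space       = ["10.1.0.0/16"]
}

resource "azurerm_virtual_network" "aks" {
  name                = "AksVNet"
  location            = var.location
  resource_group_name = var.resource_group_name
  address_space       = ["10.0.0.0/16"]
}

# A peering is one-directional, so one resource is declared per direction.
resource "azurerm_virtual_network_peering" "hub_to_aks" {
  name                         = "HubToAks"
  resource_group_name          = var.resource_group_name
  virtual_network_name         = azurerm_virtual_network.hub.name
  remote_virtual_network_id    = azurerm_virtual_network.aks.id
  allow_virtual_network_access = true
}

resource "azurerm_virtual_network_peering" "aks_to_hub" {
  name                         = "AksToHub"
  resource_group_name          = var.resource_group_name
  virtual_network_name         = azurerm_virtual_network.aks.name
  remote_virtual_network_id    = azurerm_virtual_network.hub.id
  allow_virtual_network_access = true
}
```

The sketch places both networks in one resource group for brevity. When they live in different resource groups, each peering uses the resource group of its local virtual network.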

In addition, the sample creates a private endpoint for each of the following managed services deployed by the Terraform modules, so that each one can be accessed via a private IP address:

- Azure Container Registry

- Azure Storage Account

- Azure Key Vault
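The pattern for one of these services can be sketched in Terraform as follows, here for Azure Container Registry. This is a minimal example under stated assumptions: the resource names and all variables are hypothetical, and the modules in this sample may be organized differently.

```hcl
# Credentials and subscription are assumed to come from the environment.
provider "azurerm" {
  features {}
}

# Hypothetical inputs for this sketch.
variable "location" { type = string }
variable "resource_group_name" { type = string }
variable "subnet_id" { type = string }             # subnet that hosts the private endpoint
variable "virtual_network_id" { type = string }    # network that must resolve the private name
variable "container_registry_id" { type = string } # resource ID of the target registry

# Private DNS zone used to resolve the registry name to the private IP address.
resource "azurerm_private_dns_zone" "acr" {
  name                = "privatelink.azurecr.io"
  resource_group_name = var.resource_group_name
}

# Link the zone to a virtual network so that its resources can use it.
resource "azurerm_private_dns_zone_virtual_network_link" "acr" {
  name                  = "AcrDnsLink"
  resource_group_name   = var.resource_group_name
  private_dns_zone_name = azurerm_private_dns_zone.acr.name
  virtual_network_id    = var.virtual_network_id
}

resource "azurerm_private_endpoint" "acr" {
  name                = "AcrPrivateEndpoint"
  location            = var.location
  resource_group_name = var.resource_group_name
  subnet_id           = var.subnet_id

  private_service_connection {
    name                           = "AcrPrivateEndpointConnection"
    private_connection_resource_id = var.container_registry_id
    is_manual_connection           = false
    subresource_names              = ["registry"]
  }

  # Registers the endpoint's private IP address in the private DNS zone.
  private_dns_zone_group {
    name                 = "AcrPrivateDnsZoneGroup"
    private_dns_zone_ids = [azurerm_private_dns_zone.acr.id]
  }
}
```

The same shape applies to the other services with a different subresource and zone, for example `blob` with `privatelink.blob.core.windows.net` for a storage account, or `vault` with `privatelink.vaultcore.azure.net` for Key Vault.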

NOTE

If you want to deploy a private AKS cluster using a public DNS zone to simplify the DNS resolution of the API Server to the private IP address of the private endpoint, you can use this project under my GitHub account or on Azure Quickstart Templates.

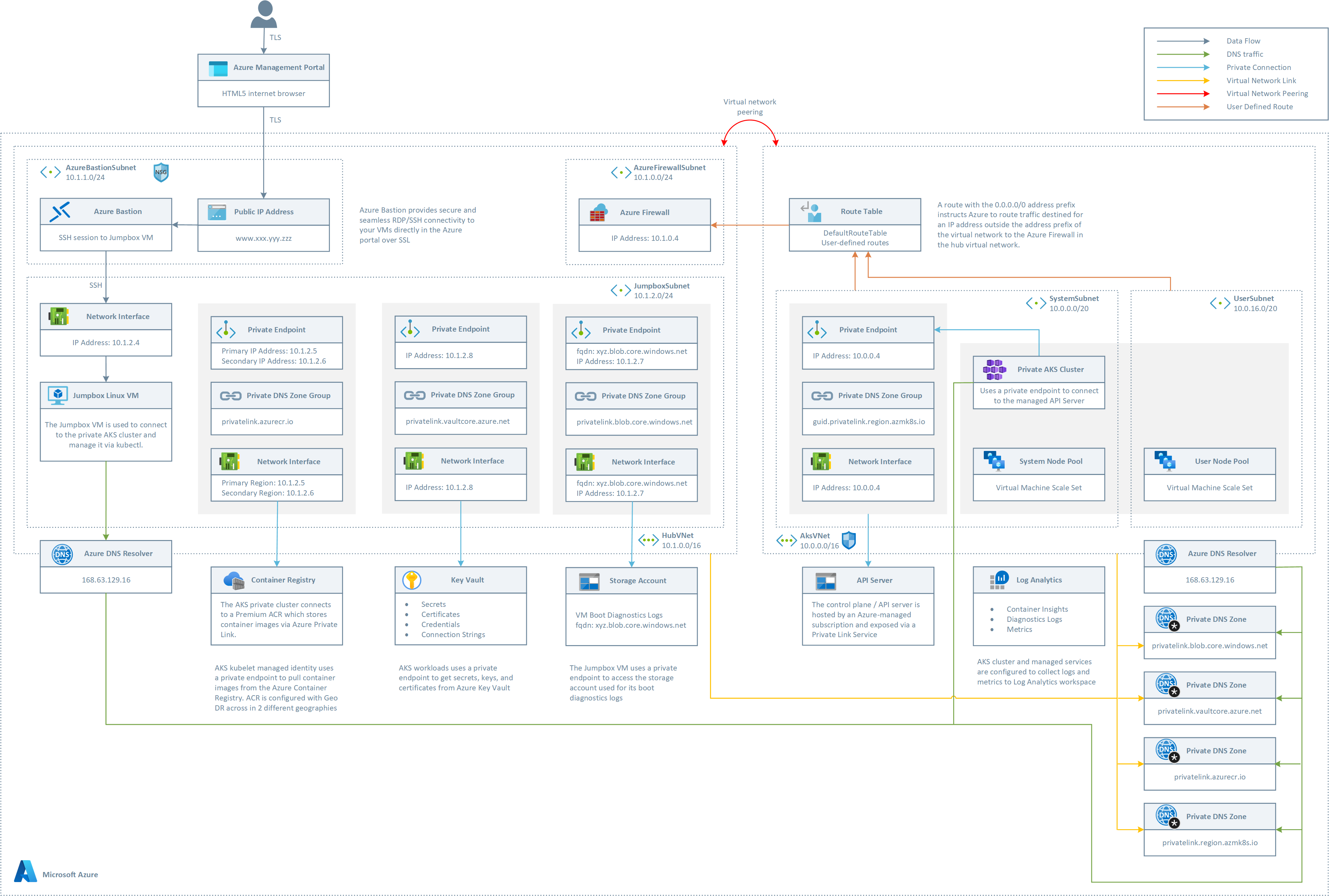

The following picture shows the architecture created by the Terraform modules included in this sample:

The architecture is composed of the following elements:

- A hub virtual network with three subnets:

  - AzureBastionSubnet used by Azure Bastion

  - AzureFirewallSubnet used by Azure Firewall

  - JumpboxSubnet used by the jumpbox virtual machine and private endpoints

- A new virtual network with three subnets:

  - SystemSubnet used by the AKS system node pool

  - UserSubnet used by the AKS user node pool

- The private AKS cluster uses a user-defined managed identity to create additional resources like load balancers and managed disks in Azure.

- The private AKS cluster is composed of a:

  - System node pool hosting only critical system pods and services. The worker nodes have a node taint which prevents application pods from being scheduled on this node pool.

  - User node pool hosting user workloads and artifacts.

- An Azure Firewall used to control the egress traffic from the private AKS cluster, which lets you lock down the cluster and filter its outbound traffic.

- An AKS cluster with a private endpoint to the control plane / API server hosted by an AKS-managed Azure subscription. The cluster can communicate with the API server exposed via a Private Link Service using a private endpoint.

- An Azure Bastion resource that provides secure and seamless SSH connectivity to the Jumpbox virtual machine directly in the Azure portal over SSL

- An Azure Container Registry (ACR) to build, store, and manage container images and artifacts in a private registry for all types of container deployments.

- When the ACR SKU is equal to Premium, a Private Endpoint is created to allow the private AKS cluster to access ACR via a private IP address. For more information, see Connect privately to an Azure container registry using Azure Private Link.

- A Private DNS Zone for the name resolution of each private endpoint.

- A Virtual Network Link between each Private DNS Zone and both the hub and AKS virtual networks

- A jumpbox virtual machine to manage the private AKS cluster in the hub virtual network

- A Log Analytics workspace to collect the diagnostics logs and metrics of both the AKS cluster and Jumpbox virtual machine.
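The cluster-related elements of this list can be sketched in Terraform roughly as follows. This is an illustrative example, not the sample's actual module: the names, VM size, node counts, and variables are assumptions, the `identity` block uses the syntax of version 3.x or later of the azurerm provider, and Azure CNI is assumed because the two node pools use different subnets.

```hcl
# Credentials and subscription are assumed to come from the environment.
provider "azurerm" {
  features {}
}

# Hypothetical inputs for this sketch.
variable "location" { type = string }
variable "resource_group_name" { type = string }
variable "system_subnet_id" { type = string }
variable "user_subnet_id" { type = string }

# User-defined managed identity used by the cluster to create Azure resources.
resource "azurerm_user_assigned_identity" "aks" {
  name                = "AksIdentity"
  location            = var.location
  resource_group_name = var.resource_group_name
}

resource "azurerm_kubernetes_cluster" "aks" {
  name                = "PrivateAks"
  location            = var.location
  resource_group_name = var.resource_group_name
  dns_prefix          = "privateaks"

  # The API server is reachable only through a private endpoint.
  private_cluster_enabled = true

  # System node pool.
  default_node_pool {
    name           = "system"
    vm_size        = "Standard_D4s_v3"
    node_count     = 3
    vnet_subnet_id = var.system_subnet_id

    # Taints the nodes with CriticalAddonsOnly=true:NoSchedule so that
    # application pods are not scheduled on this pool.
    only_critical_addons_enabled = true
  }

  identity {
    type         = "UserAssigned"
    identity_ids = [azurerm_user_assigned_identity.aks.id]
  }

  network_profile {
    network_plugin = "azure"
  }
}

# User node pool for application workloads.
resource "azurerm_kubernetes_cluster_node_pool" "user" {
  name                  = "user"
  kubernetes_cluster_id = azurerm_kubernetes_cluster.aks.id
  mode                  = "User"
  vm_size               = "Standard_D4s_v3"
  node_count            = 3
  vnet_subnet_id        = var.user_subnet_id
}
```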

There are some requirements you need to complete before you can deploy Terraform modules using Azure DevOps.

- Store the Terraform state file in an Azure storage account. For more information on how to use a storage account to store remote Terraform state, state locking, and encryption at rest, see Store Terraform state in Azure Storage.

- Create an Azure DevOps Project. For more information, see Create a project in Azure DevOps

- Create an Azure DevOps Service Connection to your Azure subscription. Whether you use Service Principal Authentication (SPA) or an Azure managed service identity when creating the service connection, make sure that the service principal or managed identity used by Azure DevOps to connect to your Azure subscription is assigned the Owner role on the entire subscription.

To deploy Terraform modules to Azure, you can use Azure DevOps CI/CD pipelines. Azure DevOps provides developer services that allow teams to plan work, collaborate on code development, and build and deploy applications and infrastructure components using IaC technologies such as ARM Templates, Bicep, and Terraform.

Terraform stores state about your managed infrastructure and configuration in a special file called the state file. Terraform uses this state to map real-world resources to your configuration, keep track of metadata, and improve performance for large infrastructures. When you use Terraform to deploy Azure resources, the state is how Terraform reconciles deployed resources with your configuration and determines which Azure resources to add, update, or delete. By default, Terraform state is stored in a local file named "terraform.tfstate", but it can also be stored remotely. Storing the state in a local file isn't ideal for the following reasons:

- Storing the Terraform state in a local file doesn't work well in a team or collaborative environment.

- Terraform state can include sensitive information.

- Storing state locally increases the chance of inadvertent deletion.

Each Terraform configuration can specify a backend, which defines where and how operations are performed and where state snapshots are stored. The Azure Provider, or azurerm, can be used to configure infrastructure in Microsoft Azure using the Azure Resource Manager APIs. Terraform provides a backend for the Azure Provider that allows you to store the state as a blob with a given key within a given blob container inside a blob storage account. This backend also supports state locking and consistency checking via native capabilities of Azure Blob Storage. When using Azure DevOps to deploy services to a cloud environment, you should use this backend to store the state in a remote storage account. For more information on how to use a storage account to store remote Terraform state, state locking, and encryption at rest, see Store Terraform state in Azure Storage. Under the storage-account folder in this sample, you can find a Terraform module and bash script to deploy an Azure storage account where you can persist the Terraform state as a blob.
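A backend configuration along these lines could look like the following sketch. The resource group, storage account, container, and key values are placeholders chosen for illustration and are not the values used by this sample.

```hcl
terraform {
  # Store the state as a blob in an Azure storage account.
  backend "azurerm" {
    resource_group_name  = "StorageAccountsRG" # placeholder
    storage_account_name = "tfstatestorage"    # placeholder
    container_name       = "tfstate"           # placeholder
    key                  = "terraform.tfstate" # name of the state blob

    # The storage account key is deliberately left out of the file.
    # It can be supplied through the ARM_ACCESS_KEY environment variable
    # or passed to `terraform init` with a -backend-config option.
  }
}
```

Keeping the access key out of the configuration means the file can be committed to the repository, while a pipeline supplies the secret at `terraform init` time.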

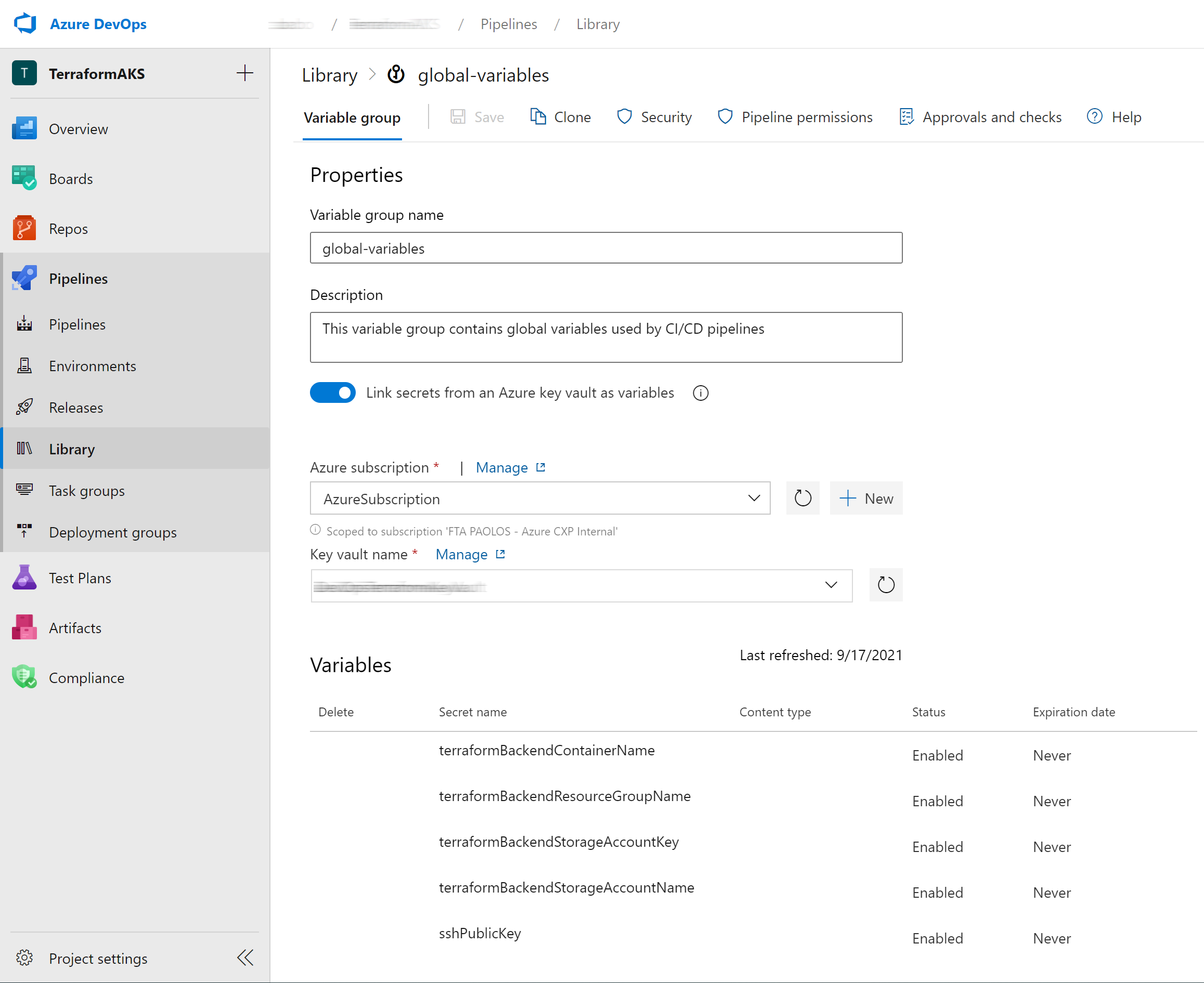

The key-vault folder contains a bash script that uses Azure CLI to store the following data to an Azure Key Vault. This sensitive data will be used by Azure DevOps CD pipelines via variable groups. Variable groups store values and secrets that you want to pass into a YAML pipeline or make available across multiple pipelines. You can share use variables groups in multiple pipelines in the same project. You can Link an existing Azure key vault to a variable group and map selective vault secrets to the variable group. You can link an existing Azure Key Vault to a variable group and select which secrets you want to expose as variables in the variable group. For more information, see Link secrets from an Azure Key Vault.

The YAML pipelines in this sample use a variable group shown in the following picture:

The variable group is configured to use the following secrets from an existing Key Vault:

- terraformBackendContainerName: name of the blob container holding the Terraform remote state

- terraformBackendResourceGroupName: resource group name of the storage account that contains the Terraform remote state

- terraformBackendStorageAccountKey: key of the storage account that contains the Terraform remote state

- terraformBackendStorageAccountName: name of the storage account that contains the Terraform remote state

- sshPublicKey: key used by Terraform to configure the SSH public key for the administrator user of the virtual machine and AKS worker nodes

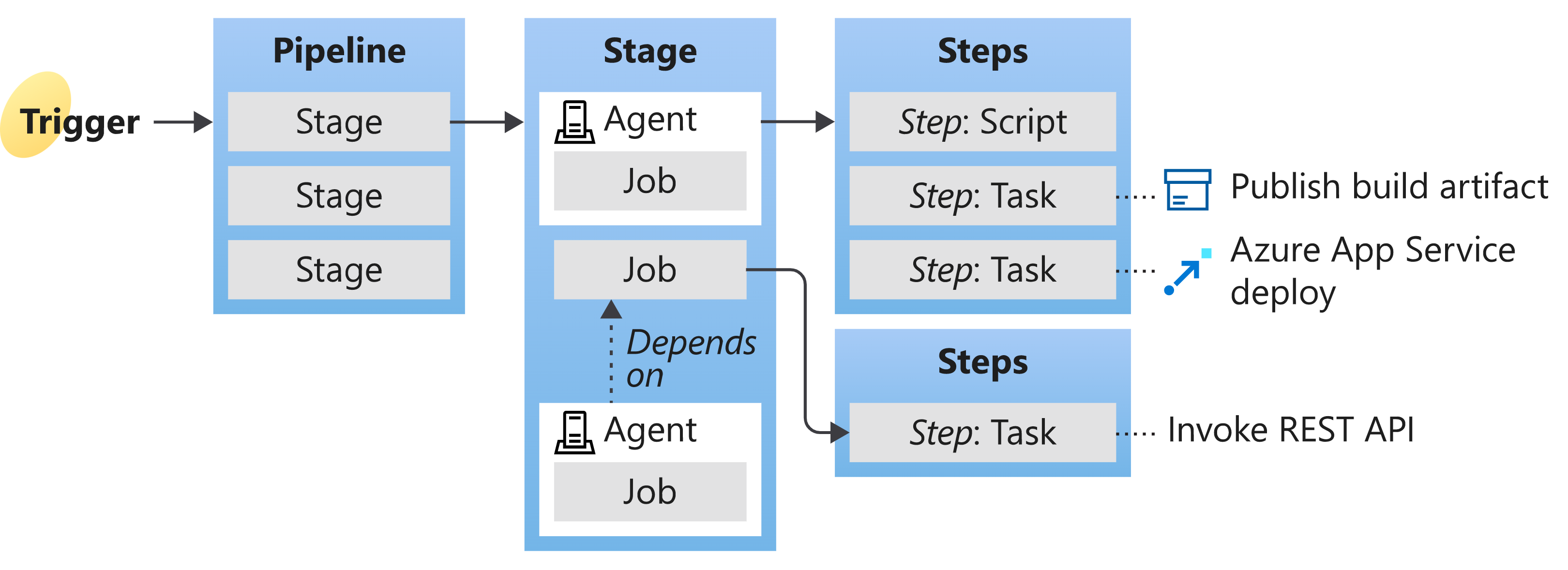

You can use Azure DevOps YAML pipelines to deploy resources to the target environment. Pipelines are part of the same Git repo that contains the artifacts such as Terraform modules and scripts and as such pipelines can be versioned as any other file in the Git reppsitory. You can follow a pull-request process to ensure changes are verified and approved before being merged. The following picture shows the key concepts of an Azure DevOps pipeline.

- A trigger tells a Pipeline to run.

- A pipeline is made up of one or more stages. A pipeline can deploy to one or more environments.

- A stage is a way of organizing jobs in a pipeline and each stage can have one or more jobs.

- Each job runs on one agent. A job can also be agentless.

- Each agent runs a job that contains one or more steps.

- A step can be a task or script and is the smallest building block of a pipeline.

- A task is a pre-packaged script that performs an action, such as invoking a REST API or publishing a build artifact.

- An artifact is a collection of files or packages published by a run.


This sample provides three pipelines to deploy the infrastructure using Terraform modules, and one to undeploy the infrastructure.

- cd-validate-plan-apply-one-stage-tfvars: in Terraform, to set a large number of variables, you can specify their values in a variable definitions file (with a filename ending in either `.tfvars` or `.tfvars.json`) and then specify that file on the command line with a `-var-file` parameter. For more information, see Input Variables. The sample contains three different `.tfvars` files under the tfvars folder. Each file contains a different value for each variable and can be used to deploy the same infrastructure to three distinct environments: production, staging, and test.

- cd-validate-plan-apply-one-stage-vars: this pipeline specifies variable values for Terraform plan and apply commands with the `-var` command line option. For more information, see Input Variables.

- cd-validate-plan-apply-separate-stages.yml: this pipeline is composed of three distinct stages for validate, plan, and apply. Each stage can be run separately.

- destroy-deployment: this pipeline uses the destroy command to fully remove the resource group and all the Azure resources.
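To make the variable definitions file approach concrete, a `.tfvars` file for one environment might look like the sketch below. The file name, variable names, and values are all hypothetical and are not taken from the files in the tfvars folder.

```hcl
# production.tfvars (hypothetical file name)
# Each name below is an assumption and must match a `variable` block
# declared in the Terraform configuration.
location            = "westeurope"
resource_group_name = "ProdAksRG"
aks_cluster_name    = "ProdAks"
```

Such a file would be selected with `terraform plan -var-file="production.tfvars"`, whereas a single value can be set inline with an option such as `-var="location=northeurope"`.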

All the pipelines make use of the tasks of the Terraform extension. This extension provides the following components:

- A service connection for connecting to an Amazon Web Services (AWS) account

- A service connection for connecting to a Google Cloud Platform (GCP) account

- A task for installing a specific version of Terraform, if not already installed, on the agent

- A task for executing the core Terraform commands

The Terraform tool installer task acquires a specified version of Terraform from the Internet or the tools cache and prepends it to the PATH of the Azure Pipelines Agent (hosted or private). This task can be used to change the version of Terraform used in subsequent tasks. Adding this task before the Terraform task in a build definition ensures you are using that task with the right Terraform version.

The Terraform task enables running Terraform commands as part of Azure Build and Release Pipelines, including the validate, plan, apply, and destroy commands used by the pipelines in this sample.

This extension is intended to run on Windows, Linux, and macOS agents. As an alternative, you can use the Bash Task or PowerShell Task to install Terraform on the agent and run Terraform commands.

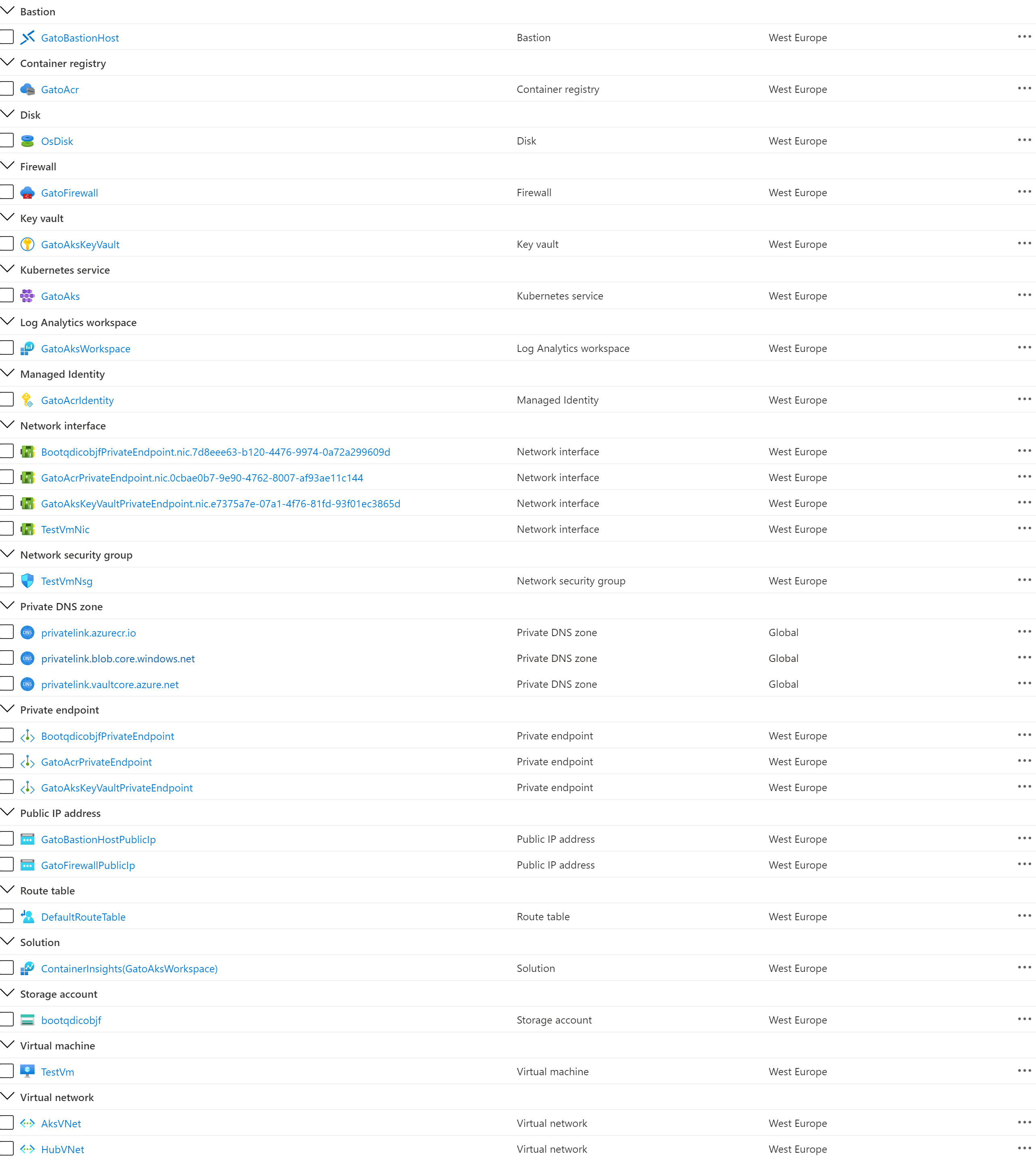

The following picture shows the resources deployed by the ARM template in the target resource group.

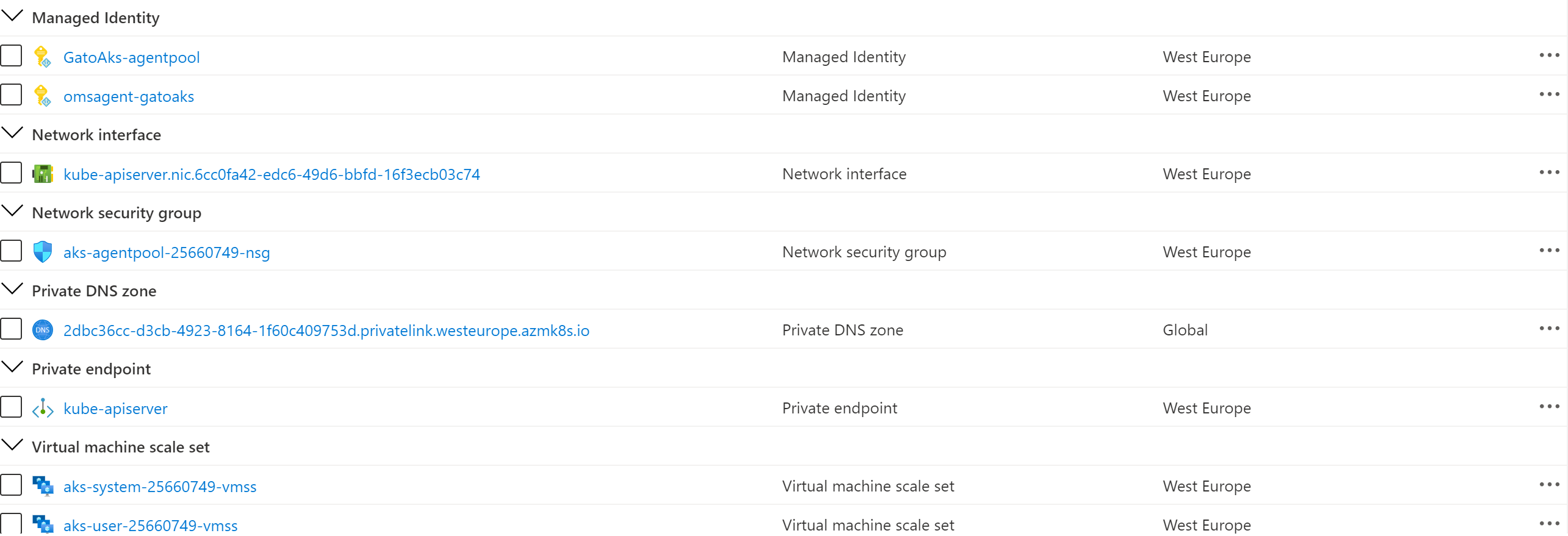

The following picture shows the resources deployed by the ARM template in the MC resource group associated to the AKS cluster:

In the visio folder you can find the Visio document which contains the above diagrams.

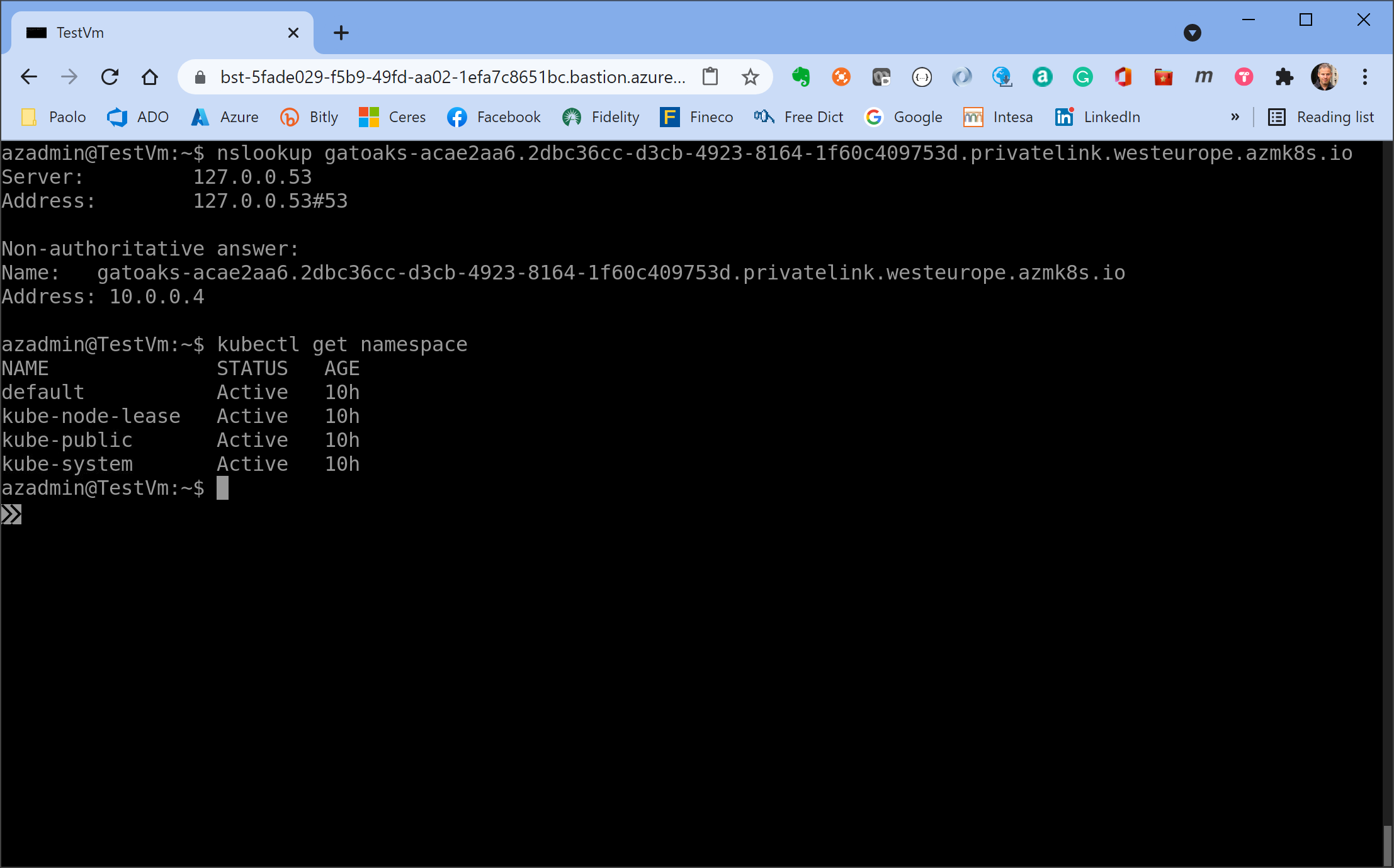

If you open an ssh session to the Linux virtual machine via Azure Bastion and manually run the nslookup command using the FQND of the API server as a parameter, you should see an output like the the following:

NOTE: the Terraform module runs an Azure Custom Script Extension that installed the kubectl and Azure CLI on the jumpbox virtual machine.