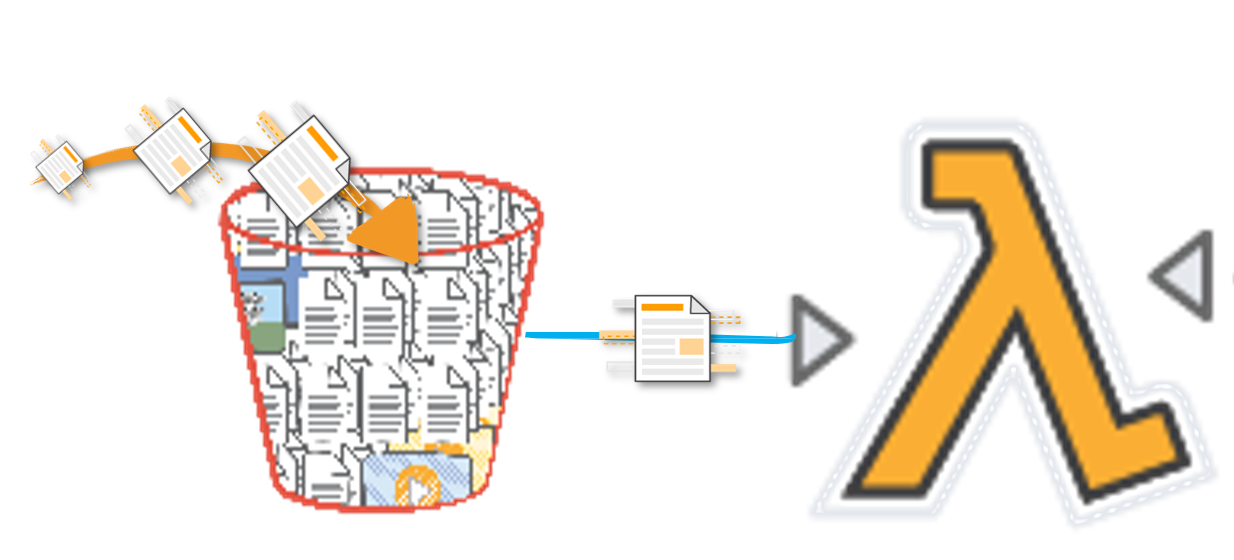

Let's say we have to perform some action for every object uploaded to an S3 bucket. We can use S3 event notifications along with Lambda to achieve this: S3 invokes the Lambda function whenever a new object is created in the bucket.

Follow this article on YouTube.

- AWS CLI pre-configured

- Clone the repository and change into it:

  ```shell
  git clone https://github.com/miztiik/serverless-s3-event-processor.git
  cd serverless-s3-event-processor
  ```

- Edit `./helper_scripts/deploy.sh` to update your environment variables:

  ```shell
  AWS_PROFILE="default"
  BUCKET_NAME="sam-templates-011" # the bucket must exist in the SAME region as the deployment
  SERVICE_NAME="serverless-s3-event-processor"
  TEMPLATE_NAME="${SERVICE_NAME}.yaml"
  STACK_NAME="${SERVICE_NAME}"
  OUTPUT_DIR="./outputs/"
  PACKAGED_OUTPUT_TEMPLATE="${OUTPUT_DIR}${STACK_NAME}-packaged-template.yaml"
  ```

- We will use `deploy.sh` in the `helper_scripts` directory to deploy our AWS SAM template:

  ```shell
  chmod +x ./helper_scripts/deploy.sh
  ./helper_scripts/deploy.sh
  ```

- Upload an object to the S3 bucket, or use `event.json` in the `src` directory to test the Lambda function. In the Lambda function's CloudWatch logs you will see output like this:

  ```json
  {
    "status": "True",
    "TotalItems": {
      "Received": 1,
      "Processed": 1
    },
    "Items": [
      {
        "time": "2019-05-08T18:51:00.097Z",
        "object_owner": "AWS:AIDdR7KsQLWs56LRA",
        "bucket_name": "serverless-s3-event-processor-eventbucket-novet8m933s4",
        "key": "c19cf74b-ca14-4458-b2f1-c97b51789f67.xls"
      }
    ]
  }
  ```
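The configure and deploy steps above can be sketched as a small script. This is a hypothetical outline, not the repo's `deploy.sh`: it assumes packaging and deployment are done with `aws cloudformation package` and `aws cloudformation deploy` (the real script may use the `sam` CLI or different flags). The `run`, `normalize_region`, `check_bucket_region` and `deploy_stack` functions and the `DRY_RUN` switch are illustrative names. It also turns the "bucket must exist in the SAME region" comment into a pre-flight check.

```shell
#!/usr/bin/env bash
# Hypothetical outline of the configure-and-deploy steps.
# NOT the repo's deploy.sh; the real script may use other commands or flags.

AWS_PROFILE="${AWS_PROFILE:-default}"
BUCKET_NAME="${BUCKET_NAME:-sam-templates-011}"
SERVICE_NAME="serverless-s3-event-processor"
TEMPLATE_NAME="${SERVICE_NAME}.yaml"
STACK_NAME="${SERVICE_NAME}"
OUTPUT_DIR="./outputs/"
PACKAGED_OUTPUT_TEMPLATE="${OUTPUT_DIR}${STACK_NAME}-packaged-template.yaml"

# Illustrative switch: with DRY_RUN=1, print each command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# GetBucketLocation reports us-east-1 as a null LocationConstraint ("None" in
# the CLI's text output) and some older eu-west-1 buckets as "EU".
normalize_region() {
  case "$1" in
    ""|None|null) echo "us-east-1" ;;
    EU) echo "eu-west-1" ;;
    *) echo "$1" ;;
  esac
}

# Pre-flight: the packaging bucket must be in the region we deploy to.
check_bucket_region() {
  local raw bucket_region deploy_region
  raw=$(aws s3api get-bucket-location --bucket "$BUCKET_NAME" \
    --query LocationConstraint --output text --profile "$AWS_PROFILE") || return 1
  bucket_region=$(normalize_region "$raw")
  deploy_region=$(aws configure get region --profile "$AWS_PROFILE")
  if [ "$bucket_region" != "$deploy_region" ]; then
    echo "Bucket ${BUCKET_NAME} is in ${bucket_region}, deploy region is ${deploy_region}" >&2
    return 1
  fi
}

deploy_stack() {
  run mkdir -p "$OUTPUT_DIR" || return 1
  # 1. Upload local code to S3 and write a template that references it.
  run aws cloudformation package \
    --template-file "$TEMPLATE_NAME" \
    --s3-bucket "$BUCKET_NAME" \
    --output-template-file "$PACKAGED_OUTPUT_TEMPLATE" \
    --profile "$AWS_PROFILE" || return 1
  # 2. Create or update the stack. CAPABILITY_IAM is required when the
  #    template creates IAM roles (SAM functions get one by default).
  run aws cloudformation deploy \
    --template-file "$PACKAGED_OUTPUT_TEMPLATE" \
    --stack-name "$STACK_NAME" \
    --capabilities CAPABILITY_IAM \
    --profile "$AWS_PROFILE"
}
```

For example, `source` the file, run `check_bucket_region`, preview with `DRY_RUN=1 deploy_stack`, and then run `deploy_stack` for real. The region check reads the profile's configured region through `aws configure get region`, so it will report a mismatch if your region is only set through an environment variable.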
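For the test step, a hypothetical set of helpers covers both options (a real upload, or invoking the function with `src/event.json`) plus a way to follow the logs. `EVENT_BUCKET` and `FUNCTION_NAME` are placeholders for the physical names CloudFormation generated for your stack, the helper names and the `DRY_RUN` switch are illustrative, and `aws logs tail` requires AWS CLI v2.

```shell
#!/usr/bin/env bash
# Hypothetical test helpers. EVENT_BUCKET and FUNCTION_NAME are placeholders:
# set them to the physical names CloudFormation generated for your stack.

# Illustrative switch: with DRY_RUN=1, print each command instead of running it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Option 1: upload a file so S3 emits a real ObjectCreated event.
upload_test_object() {
  run aws s3 cp "$1" "s3://${EVENT_BUCKET}/$(basename "$1")" \
    --profile "${AWS_PROFILE:-default}"
}

# Option 2: invoke the function directly with the sample event in src/event.json.
# fileb:// sends the raw bytes, so AWS CLI v2 needs no extra payload-format flag.
invoke_with_sample_event() {
  run aws lambda invoke \
    --function-name "$FUNCTION_NAME" \
    --payload fileb://src/event.json \
    --profile "${AWS_PROFILE:-default}" \
    response.json
}

# Stream the function's log group, assuming Lambda's default
# /aws/lambda/<function-name> naming (AWS CLI v2 only).
follow_logs() {
  run aws logs tail "/aws/lambda/${FUNCTION_NAME}" --follow \
    --profile "${AWS_PROFILE:-default}"
}
```

You can find the generated bucket and function names with `aws cloudformation describe-stack-resources --stack-name serverless-s3-event-processor`, then run `follow_logs` in one terminal and `upload_test_object` or `invoke_with_sample_event` in another.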

You can reach out to us to get more details through here.