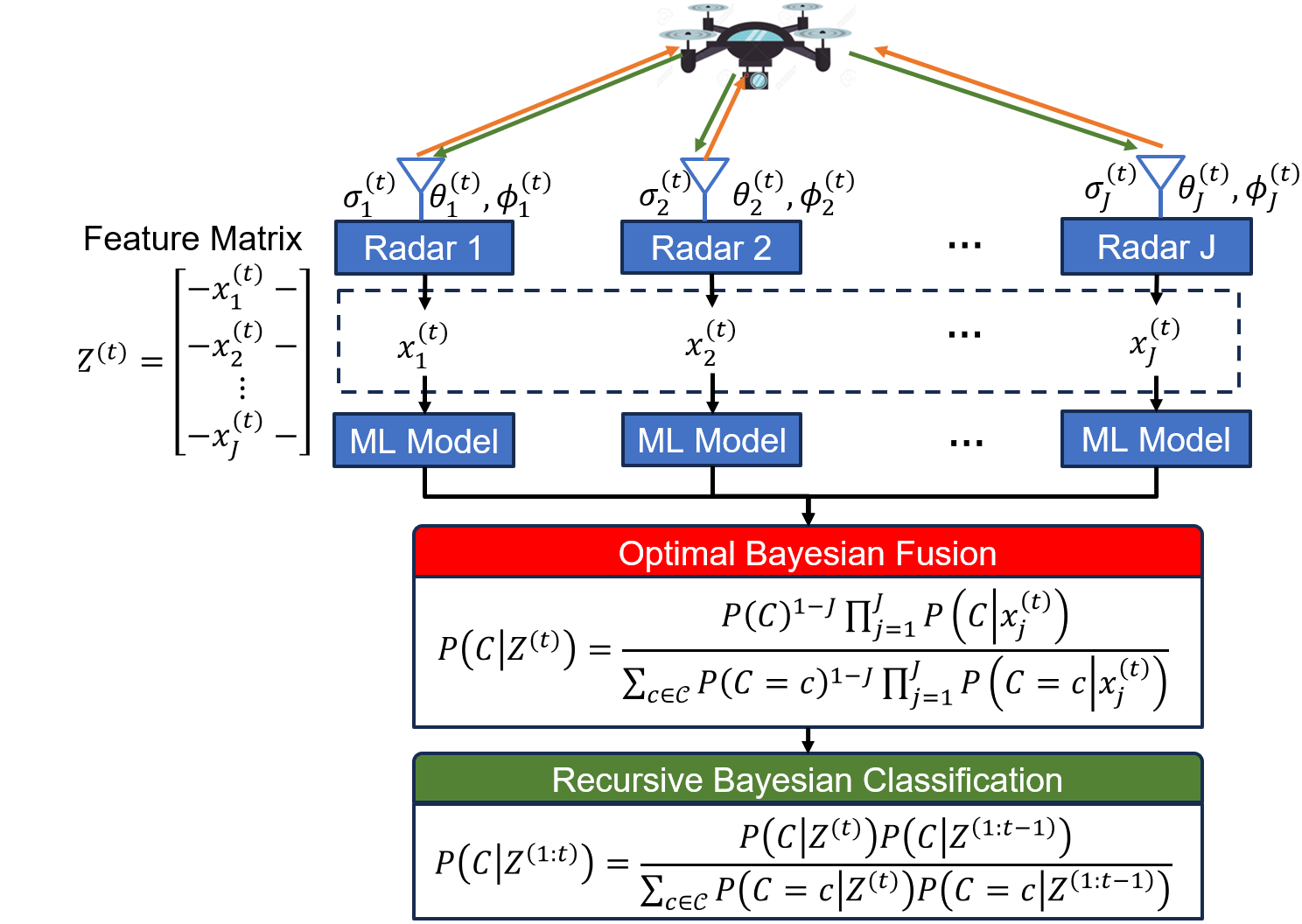

The first thrust of the FREEDOM project task 2.2.2 focused on Bayesian inference for classifying the type of a target UAV from radar cross section (RCS) measurements in a multistatic radar configuration.
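To make the idea concrete, here is a minimal sketch of recursive Bayesian classification with several radars. It is not the repository's implementation: it assumes the radars' observations are conditionally independent given the UAV class (so their likelihoods multiply), and the per-radar likelihoods are synthetic placeholders rather than outputs of a trained model. The names `bayes_update`, `N_RADARS`, and `TRUE_CLASS` are hypothetical.

```python
import math
import random

def bayes_update(prior, radar_likelihoods):
    """One recursive step: posterior is proportional to prior times the
    product over radars of p(observation | class), computed in log space."""
    log_post = [math.log(max(p, 1e-12)) for p in prior]
    for lik in radar_likelihoods:              # one likelihood vector per radar
        for c, value in enumerate(lik):
            log_post[c] += math.log(max(value, 1e-12))
    peak = max(log_post)                       # subtract the max for stability
    unnorm = [math.exp(v - peak) for v in log_post]
    total = sum(unnorm)
    return [u / total for u in unnorm]

random.seed(0)
N_RADARS, N_CLASSES, N_STEPS, TRUE_CLASS = 4, 3, 100, 1

belief = [1.0 / N_CLASSES] * N_CLASSES         # uniform prior over UAV types
for step in range(N_STEPS):
    liks = []
    for radar in range(N_RADARS):
        # Synthetic stand-in for a classifier's p(RCS | class); the true
        # class is made slightly more likely on average.
        lik = [random.uniform(0.2, 0.4) for _ in range(N_CLASSES)]
        lik[TRUE_CLASS] += 0.1
        liks.append(lik)
    belief = bayes_update(belief, liks)        # posterior becomes next prior

predicted = max(range(N_CLASSES), key=belief.__getitem__)
print(predicted, belief)
```

Each time step reuses the previous posterior as the new prior, which is what makes the classification recursive; weak per-radar evidence accumulates across radars and time.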

- Download and install Anaconda for Python package management.

- Create a virtual environment with Python version 3.9

conda create -n FREEDOM python=3.9

- Activate the conda FREEDOM virtual environment

conda activate FREEDOM

- Clone the GitHub repo

git clone https://github.com/mlpotter/RCS_ATR

- Download the RCS data from IEEE DataPort and place it in

RCS_ATR\Drone_RCS_Measurement_Dataset

- Install the Python packages

pip install -r requirements.txt
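Before running experiments, it can help to confirm that the dataset landed in the expected folder. The check below is a small sketch: the folder name comes from the step above, while the function name `dataset_ready` is hypothetical and nothing is assumed about the files inside the folder.

```python
from pathlib import Path

def dataset_ready(repo_root="RCS_ATR", folder="Drone_RCS_Measurement_Dataset"):
    """Return True if the dataset folder exists and holds at least one entry."""
    data_dir = Path(repo_root) / folder
    return data_dir.is_dir() and any(data_dir.iterdir())

# Run from the directory that contains the cloned RCS_ATR folder.
print("dataset found" if dataset_ready() else "dataset missing or empty")
```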

To run a basic example of 4 radars recursively classifying a target over 100 time steps:

# change to the folders related to mobile radars

cd RCS_ATR

python main_mc_trajectory_SNR.py --mlflow_track \
  --experiment_name=radar_target_recognition_joke \
  --SNR_constraint=-20 \
  --model_choice=logistic \
  --no-geometry \
  --num_points=10000 \
  --MC_Trials=10 \
  --n_radars=4 \
  --TN=100 \
  --vx=50 \
  --time_step_size=0.1 \
  --yaw_range=np.pi/15 \
  --pitch_range=np.pi/20 \
  --roll_range=0 \
  --fusion_method=fusion \
  --noise_method=random \
  --color=color \
  --elevation_center=0 \
  --elevation_spread=190 \
  --azimuth_center=90 \
  --azimuth_spread=180 \
  --single_method=random \
  --azimuth_jitter_bounds=0_180 \
  --elevation_jitter_bounds=-90_90 \
  --azimuth_jitter_width=20 \
  --elevation_jitter_width=20

More details on experiment parameters may be found by running the script with the -h flag:

python main_mc_trajectory_SNR.py -h

  -h, --help            show this help message and exit
  --num_points NUM_POINTS
                        Number of random points per class label (default: 1000)
  --MC_Trials MC_TRIALS
                        Number of MC Trials (default: 10)
  --n_radars N_RADARS   Number of radars in the xy grid (default: 16)
  --mlflow_track, --no-mlflow_track
                        Do you wish to track experiments with mlflow? --mlflow_track for yes --no-mlflow_track for no (default: False)
  --geometry, --no-geometry
                        Do you want az-el measurements in experiments? --geometry for yes --no-geometry for no (default: False)
  --fusion_method FUSION_METHOD
                        how to aggregate predictions of distributed classifier (default: average)
  --model_choice MODEL_CHOICE
                        Model to train (default: xgboost)
  --TN TN               Number of time steps for the experiment (default: 20)
  --vx VX               The Velocity of the Drone in the forward direction (default: 50)
  --yaw_range YAW_RANGE
                        The yaw std of the random drone walk (default: np.pi/8)
  --pitch_range PITCH_RANGE
                        The pitch std of the random drone walk (default: np.pi/15)
  --roll_range ROLL_RANGE
                        The roll std of the random drone walk (default: 0)
  --time_step_size TIME_STEP_SIZE
                        The Velocity of the Drone in the forward direction (default: 0.1)
  --noise_method NOISE_METHOD
                        The noise method to choose from (see code for examples) (default: random)
  --color COLOR         The type of noise (color,white) (default: white)
  --SNR_constraint SNR_CONSTRAINT
                        The signal to noise ratio (default: 0)
  --azimuth_center AZIMUTH_CENTER
                        azimuth center to sample for target single point RCS, bound box for random (default: 90)
  --azimuth_spread AZIMUTH_SPREAD
                        std of sample for target single point RCS, bound box for random (default: 5)
  --elevation_center ELEVATION_CENTER
                        elevation center to sample for target single point RCS, bound box for random (default: 0)
  --elevation_spread ELEVATION_SPREAD
                        std of sample for target single point RCS, bound box for random (default: 5)
  --single_method SINGLE_METHOD
                        target is for sampling around some designated point, random is to sample uniformly over grid (default: target)
  --azimuth_jitter_width AZIMUTH_JITTER_WIDTH
                        the width of the jitter for azimuth (default: 10)
  --azimuth_jitter_bounds AZIMUTH_JITTER_BOUNDS
                        lower and upper bound of the azimuth when adding noise to clip (default: 0_180)
  --elevation_jitter_width ELEVATION_JITTER_WIDTH
                        the width of the jitter for elevation (default: 10)
  --elevation_jitter_bounds ELEVATION_JITTER_BOUNDS
                        lower and upper bound of the elevation when adding noise to clip (default: -90_90)
  --experiment_name EXPERIMENT_NAME
                        experimennt name for MLFLOW (default: radar_target_recognition)
  --random_seed RANDOM_SEED
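
The jitter bounds are passed as underscore-delimited strings such as 0_180 or -90_90. The sketch below shows one way such a string could be parsed and used to clip a perturbed angle. It is an illustration only: the helper names `parse_bounds` and `jitter_angle` are hypothetical, and treating the jitter as uniform over plus or minus half the width is an assumption, not something the help text confirms.

```python
import random

def parse_bounds(text):
    """Split an underscore-delimited string like '-90_90' into (low, high)."""
    low, high = text.split("_")
    return float(low), float(high)

def jitter_angle(angle_deg, width_deg, bounds, rng=random):
    """Perturb an angle, then clip it to the bounds.

    Assumption: the jitter is uniform in [-width/2, +width/2] degrees.
    """
    low, high = parse_bounds(bounds)
    noisy = angle_deg + rng.uniform(-width_deg / 2, width_deg / 2)
    return min(max(noisy, low), high)

random.seed(0)
print(parse_bounds("0_180"))
print(jitter_angle(175.0, 20, "0_180"))    # never exceeds the upper bound
print(jitter_angle(-85.0, 20, "-90_90"))   # never drops below the lower bound
```

Clipping after adding the jitter keeps the noisy aspect angles inside the range covered by the measured RCS grid, which is presumably why the bounds are configurable.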

Michael Potter - linkedin - potter.mi@northeastern.edu

Research was sponsored by the Army Research Laboratory and was accomplished under Cooperative Agreement Number W911NF-23-2-0014. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the Army Research Laboratory or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein.

Please cite the following paper if you intend to use this code or dataset for your research.

@misc{potter2024multistaticradar,
  title={Multistatic-Radar RCS-Signature Recognition of Aerial Vehicles: A Bayesian Fusion Approach},
  author={Michael Potter and Murat Akcakaya and Marius Necsoiu and Gunar Schirner and Deniz Erdogmus and Tales Imbiriba},
  year={2024},
  eprint={2402.17987},
  archivePrefix={arXiv},
  primaryClass={eess.SP}
}